Example tutorial of running ZKEACMS on CentOS

This article walks through the process of running ZKEACMS on CentOS in detail. It should be a useful reference for anyone interested.

ZKEACMS Core is built on .NET Core, so it runs cross-platform on Windows, Linux, and macOS. Let's look at how to run ZKEACMS on CentOS.

Install .NET Core Runtime

Run the following commands to install the .NET Core Runtime:

sudo yum install libunwind libicu
curl -sSL -o dotnet.tar.gz "https://go.microsoft.com/fwlink/?linkid=843420"
sudo mkdir -p /opt/dotnet && sudo tar zxf dotnet.tar.gz -C /opt/dotnet
sudo ln -s /opt/dotnet/dotnet /usr/local/bin
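To confirm the symlink works before moving on, here is a minimal bash sketch. check_dotnet is a hypothetical helper name, not part of .NET or ZKEACMS; it only checks that a dotnet executable resolves on PATH and does not run it.

```shell
#!/usr/bin/env bash
# Sketch: verify that "dotnet" resolves on PATH after the ln -s step.
# check_dotnet is a hypothetical helper, not a .NET or ZKEACMS tool.
check_dotnet() {
  local path
  # command -v prints the path of the executable found on PATH, or fails.
  if ! path=$(command -v dotnet); then
    echo "dotnet not found on PATH; check the /usr/local/bin/dotnet symlink" >&2
    return 1
  fi
  echo "dotnet found at: $path"
}
```

After sourcing the function, calling check_dotnet should report /usr/local/bin/dotnet if the symlink was created as above.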

Install Nginx

sudo yum install epel-release
sudo yum install nginx
sudo systemctl enable nginx

Modify the configuration of Nginx

Configure Nginx as a reverse proxy to localhost:5000, the address Kestrel (the ASP.NET Core web server) listens on by default. In the global configuration file /etc/nginx/nginx.conf, change the location / block to the following:

location / {
    proxy_pass http://localhost:5000;
    proxy_http_version 1.1;
    proxy_set_header Upgrade $http_upgrade;
    proxy_set_header Connection keep-alive;
    proxy_set_header Host $host;
    proxy_cache_bypass $http_upgrade;
}

Start Nginx

sudo systemctl start nginx
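Only the upstream port in the location block depends on the application; if ZKEACMS is later run on a different port, the block must change to match. The bash sketch below prints the same block with the port as a parameter (default 5000). print_proxy_location is a hypothetical helper, not an Nginx command.

```shell
#!/usr/bin/env bash
# Sketch: print the "location /" block shown above for a given upstream port.
# print_proxy_location is a hypothetical helper name.
print_proxy_location() {
  local port="${1:-5000}"
  # Unquoted heredoc so ${port} expands; Nginx variables are escaped with a
  # backslash so they are printed literally.
  cat <<EOF
location / {
    proxy_pass http://localhost:${port};
    proxy_http_version 1.1;
    proxy_set_header Upgrade \$http_upgrade;
    proxy_set_header Connection keep-alive;
    proxy_set_header Host \$host;
    proxy_cache_bypass \$http_upgrade;
}
EOF
}
```

Paste the output into the server block of /etc/nginx/nginx.conf, then run sudo nginx -t to check the syntax and sudo systemctl reload nginx to apply the change. On CentOS with SELinux in enforcing mode, Nginx may be denied connections to the upstream, which shows up as 502 Bad Gateway; sudo setsebool -P httpd_can_network_connect 1 is the usual fix.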

At this point, the environment is ready. Next, let's publish ZKEACMS.

Publish ZKEACMS.Core

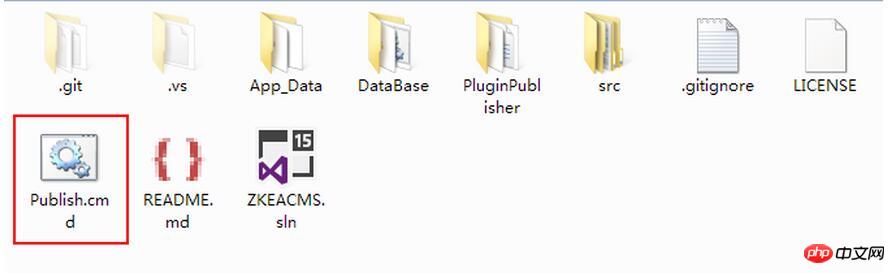

Publishing ZKEACMS.Core is relatively simple: just double-click Publish.cmd.

Database: SQLite

For simplicity, SQLite is used as the database here, with the data stored in a SQLite database file named Database.sqlite. In the published program folder, create an App_Data folder and put Database.sqlite into it. For how to generate the SQLite database, you can ask in the group or search Baidu/Google.
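A quick way to catch a missing or wrong database file is to check its header: every SQLite 3 database file begins with the 16-byte string "SQLite format 3" followed by a NUL byte. The bash sketch below compares the first 15 bytes. check_sqlite_db is a hypothetical helper name; it only checks the file format, not whether the ZKEACMS tables are present.

```shell
#!/usr/bin/env bash
# Sketch: check <publish_dir>/App_Data/Database.sqlite exists and starts with
# the SQLite 3 header. check_sqlite_db is a hypothetical helper name.
check_sqlite_db() {
  local publish_dir="$1"
  local db="$publish_dir/App_Data/Database.sqlite"
  if [ ! -f "$db" ]; then
    echo "missing: $db" >&2
    return 1
  fi
  # The first 15 bytes of a SQLite 3 file are "SQLite format 3".
  if [ "$(head -c 15 "$db")" != "SQLite format 3" ]; then
    echo "no SQLite 3 header (empty or not a SQLite file): $db" >&2
    return 1
  fi
  echo "ok: $db"
}
```

For example, check_sqlite_db /root/cms after uploading. If the sqlite3 command-line tool is installed, sqlite3 App_Data/Database.sqlite .tables additionally lists the tables in the file.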

Modify the connection string

Open appsettings.json and add the SQLite database connection string. The result is as follows:

{

"ConnectionStrings": {

"DefaultConnection": "",

"Sqlite": "Data Source=App_Data/Database.sqlite",

"MySql": ""

},

"ApplicationInsights": {

"InstrumentationKey": ""

},

"Logging": {

"IncludeScopes": false,

"LogLevel": {

"Default": "Debug",

"System": "Information",

"Microsoft": "Information"

}

},

"Culture": "zh-CN"

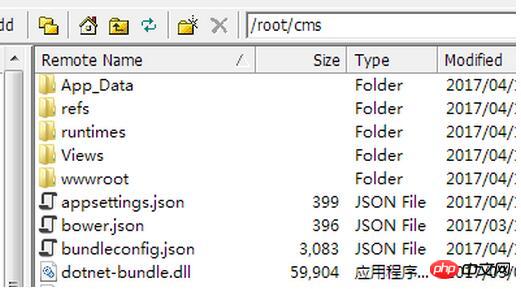

}Packaging and uploading server

Package the published program as cms.zip, upload it to the /root directory on the server, and unzip it into /root/cms with the following commands:

cd /root
unzip cms.zip -d cms
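Two common mistakes at this step are a zip that contains an extra top-level folder and a connection string that points to a file that was not uploaded. The bash sketch below checks both. check_publish_dir is a hypothetical helper; its sed pattern assumes the "Sqlite" connection string sits on one line with a relative Data Source path, as in the appsettings.json above, and that the path is resolved against the program folder (the working directory used in this article).

```shell
#!/usr/bin/env bash
# Sketch: sanity-check an unzipped ZKEACMS folder before running it.
# check_publish_dir is a hypothetical helper name.
check_publish_dir() {
  local dir="$1" db
  # The dll must sit at the top level, where "dotnet ZKEACMS.WebHost.dll" runs.
  if [ ! -f "$dir/ZKEACMS.WebHost.dll" ]; then
    echo "ZKEACMS.WebHost.dll not in $dir (zip may contain a subfolder)" >&2
    return 1
  fi
  # Extract the relative path after "Data Source=" from the "Sqlite" entry.
  db=$(sed -n 's/.*"Sqlite"[[:space:]]*:[[:space:]]*"Data Source=\([^";]*\).*/\1/p' "$dir/appsettings.json")
  if [ -z "$db" ]; then
    echo "no Sqlite connection string in $dir/appsettings.json" >&2
    return 1
  fi
  if [ ! -f "$dir/$db" ]; then
    echo "database file not found: $dir/$db" >&2
    return 1
  fi
  echo "ok: $dir/ZKEACMS.WebHost.dll, database $db"
}
```

For the layout in this article, run check_publish_dir /root/cms after unzipping.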

Run

Change to the program directory, then start the program with the dotnet command:

cd /root/cms
dotnet ZKEACMS.WebHost.dll

Once it is running, you can access the site through your server's IP address or domain name :)
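If the site does not load from a browser, it helps to test from the server itself which hop fails: the application directly on port 5000, or Nginx on port 80. The bash sketch below uses curl (already installed in the .NET step) to print the HTTP status code. probe_url is a hypothetical helper name; treating only 2xx and 3xx as success is an assumption.

```shell
#!/usr/bin/env bash
# Sketch: report the HTTP status of a URL; fail if nothing answers or the
# status is not 2xx/3xx. probe_url is a hypothetical helper name.
probe_url() {
  local url="$1" code
  # -s silent, -o discard the body, -w print only the status code,
  # --max-time bound the wait if nothing is listening.
  code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url") || {
    echo "no response from $url" >&2
    return 1
  }
  case "$code" in
    2??|3??) echo "$url -> HTTP $code" ;;
    *) echo "$url -> HTTP $code" >&2; return 1 ;;
  esac
}
```

For example, probe_url http://localhost:5000/ checks the application directly, and probe_url http://localhost/ checks it through Nginx. If the first succeeds and the second fails, the problem is in the Nginx configuration.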

However, after I closed the SSH client, the site could no longer be reached. This is because the dotnet process exited together with the SSH session.

Run as a service

Create a service so that dotnet runs in the background. First, install the nano editor:

sudo yum install nano

Create service

sudo nano /etc/systemd/system/zkeacms.service

Enter the following content and save the file:

[Unit]
Description=ZKEACMS

[Service]
WorkingDirectory=/root/cms
ExecStart=/usr/local/bin/dotnet /root/cms/ZKEACMS.WebHost.dll
Restart=always
RestartSec=10
SyslogIdentifier=zkeacms
User=root
Environment=ASPNETCORE_ENVIRONMENT=Production

[Install]
WantedBy=multi-user.target

Start service

sudo systemctl start zkeacms.service
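Note that systemctl start only starts the service for the current boot. Because the unit has an [Install] section with WantedBy=multi-user.target, also running sudo systemctl enable zkeacms.service makes it start automatically after a reboot. If you prefer not to use nano, the bash sketch below writes the same unit file non-interactively. write_zkeacms_unit is a hypothetical helper; its default paths mirror this article (/root/cms and the /usr/local/bin/dotnet symlink).

```shell
#!/usr/bin/env bash
# Sketch: write the zkeacms.service unit shown above to a given path.
# write_zkeacms_unit is a hypothetical helper name.
# Usage: write_zkeacms_unit <unit_path> [app_dir, default /root/cms]
write_zkeacms_unit() {
  local unit_path="$1" app_dir="${2:-/root/cms}"
  cat > "$unit_path" <<EOF
[Unit]
Description=ZKEACMS

[Service]
WorkingDirectory=${app_dir}
ExecStart=/usr/local/bin/dotnet ${app_dir}/ZKEACMS.WebHost.dll
Restart=always
RestartSec=10
SyslogIdentifier=zkeacms
User=root
Environment=ASPNETCORE_ENVIRONMENT=Production

[Install]
WantedBy=multi-user.target
EOF
}
```

Run it as root with write_zkeacms_unit /etc/systemd/system/zkeacms.service, then run systemctl daemon-reload so systemd picks up the new or changed file. Afterwards, systemctl status zkeacms.service shows whether the service is running, and journalctl -u zkeacms.service shows its output.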

Now you can close the SSH connection with peace of mind.


The above is the detailed content of Example tutorial of running ZKEACMS on CentOS. For more information, please follow other related articles on the PHP Chinese website!
