In-depth analysis of Java memory model: volatile

Characteristics of volatile

When we declare a shared variable as volatile, reads and writes of that variable become special. A good way to understand the nature of volatile is to think of each individual read or write of a volatile variable as if it were synchronized with the same monitor lock. The following example illustrates this:

```java
class VolatileFeaturesExample {
    volatile long vl = 0L; // 64-bit long variable declared volatile

    public void set(long l) {
        vl = l; // write of a single volatile variable
    }

    public void getAndIncrement() {
        vl++; // compound (multiple) volatile read/write
    }

    public long get() {
        return vl; // read of a single volatile variable
    }
}
```

Assume that multiple threads call these three methods. The program above is semantically equivalent to the following program:

```java
class VolatileFeaturesExample {
    long vl = 0L; // ordinary 64-bit long variable

    public synchronized void set(long l) { // write of a single ordinary variable, synchronized on the same monitor
        vl = l;
    }

    public void getAndIncrement() { // ordinary (unsynchronized) method
        long temp = get(); // call the synchronized read method
        temp += 1L;        // ordinary write
        set(temp);         // call the synchronized write method
    }

    public synchronized long get() {
        // read of a single ordinary variable, synchronized on the same monitor
        return vl;
    }
}
```

As the two programs show, a single read or write of a volatile variable has the same execution effect as a read or write of an ordinary variable synchronized with the same monitor lock.

The happens-before rule for monitor locks guarantees memory visibility between a thread that releases a monitor and a thread that subsequently acquires the same monitor. This means that a read of a volatile variable always sees the last write (by any thread) to that volatile variable.

The semantics of monitor locks make the execution of a critical section atomic. This means that even for 64-bit long and double variables, reads and writes are atomic as long as the variable is volatile. However, multiple volatile operations, or compound operations such as volatile++, are not atomic as a whole: in the equivalent program, getAndIncrement() calls the synchronized get() and set() separately, so another thread can run between the two calls.
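To make the lost update concrete, the sketch below replays, on a single thread, one interleaving of two threads that both execute vl++ as a separate read and write. The class `LostUpdateReplay` is hypothetical; it starts no real threads and only shows the order of steps that loses an increment.

```java
// Hypothetical demo class: deterministically replays one possible
// interleaving of two threads that both perform vl++ on a volatile field.
class LostUpdateReplay {
    volatile long vl = 0L;

    long get() { return vl; }     // single volatile read (atomic on its own)
    void set(long l) { vl = l; }  // single volatile write (atomic on its own)

    // Interleaving: A reads, B reads, A writes, B writes.
    static long replay() {
        LostUpdateReplay x = new LostUpdateReplay();
        long tempA = x.get();     // "thread A" reads 0
        long tempB = x.get();     // "thread B" also reads 0
        x.set(tempA + 1L);        // "thread A" writes 1
        x.set(tempB + 1L);        // "thread B" writes 1, overwriting A's update
        return x.vl;              // two increments were performed, value is 1
    }

    public static void main(String[] args) {
        System.out.println(replay()); // prints 1, not 2
    }
}
```

Each individual step is atomic, yet the pair "read, then write" is not, which is exactly why volatile++ can lose updates.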

In short, volatile variables themselves have the following characteristics:

Visibility: a read of a volatile variable always sees the last write (by any thread) to that volatile variable.

Atomicity: a read or write of any single volatile variable is atomic, but compound operations such as volatile++ are not.
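The visibility property can be sketched with a stop flag: a worker spins on a volatile field until another thread writes it. Once that write happens, the worker's subsequent volatile reads see it and the loop ends. Without volatile, the JIT compiler may hoist the read out of the loop, and the worker might spin forever. The class `VolatileStopFlag` and its method names are hypothetical.

```java
// Hypothetical demo: a worker thread spins until a volatile flag is set.
class VolatileStopFlag {
    private volatile boolean stop = false;

    // Returns true if the worker observed the volatile write and exited.
    static boolean runDemo() {
        VolatileStopFlag demo = new VolatileStopFlag();
        Thread worker = new Thread(() -> {
            while (!demo.stop) {      // volatile read on every iteration
                Thread.onSpinWait();  // spin hint (Java 9+)
            }
        });
        worker.setDaemon(true);       // never keep the JVM alive if something goes wrong
        worker.start();
        try {
            Thread.sleep(50);         // give the worker time to start spinning
            demo.stop = true;         // volatile write, visible to the worker's reads
            worker.join(5_000);       // wait at most 5 seconds
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println(runDemo() ? "worker stopped" : "worker still running");
    }
}
```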

Happens-before relationships established by volatile write-read

The above describes the characteristics of volatile variables themselves. For programmers, the effect volatile has on the memory visibility of threads is more important than these characteristics of volatile itself, and deserves more of our attention.

Starting with JSR-133 (Java 5), a write followed by a read of a volatile variable can be used for communication between threads.

From the perspective of memory semantics, volatile and monitor locks have the same effect: a volatile write has the same memory semantics as a monitor release, and a volatile read has the same memory semantics as a monitor acquire.

Please see the following example code using volatile variables:

```java
class VolatileExample {
    int a = 0;
    volatile boolean flag = false;

    public void writer() {
        a = 1;       // 1
        flag = true; // 2
    }

    public void reader() {
        if (flag) {    // 3
            int i = a; // 4
            // ……
        }
    }
}
```

Assume that thread A executes writer() and afterwards thread B executes reader(). According to the happens-before rules, the happens-before relationships established in this process fall into three groups:

According to the program order rule, 1 happens-before 2, and 3 happens-before 4.

According to the volatile rule, 2 happens-before 3.

According to the transitivity of happens-before, 1 happens-before 4.

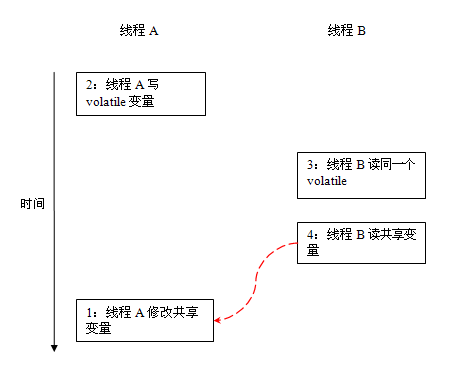

The graphical representation of the above happens before relationship is as follows:

In the figure above, each arrow links two nodes that have a happens-before relationship. Black arrows represent the program order rule, orange arrows represent the volatile rule, and blue arrows represent the happens-before guarantees obtained by combining these rules.

Here thread A writes a volatile variable, and thread B then reads the same volatile variable. All shared variables that were visible to thread A before it wrote the volatile variable become visible to thread B immediately after B reads that volatile variable.
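The guarantee can be exercised by running VolatileExample's writer and reader on two real threads. This is a sketch with a hypothetical class name `VolatileExampleDemo`; unlike the original reader(), which skips its body when flag is still false, this version spins until flag becomes true so that step 4 is always reached.

```java
// Hypothetical runnable version of VolatileExample.
class VolatileExampleDemo {
    int a = 0;                     // ordinary shared variable
    volatile boolean flag = false; // volatile guard

    void writer() {
        a = 1;        // 1: ordinary write
        flag = true;  // 2: volatile write
    }

    int reader() {
        while (!flag) {           // 3: volatile read, spin until the write is seen
            Thread.onSpinWait();  // spin hint (Java 9+)
        }
        return a;                 // 4: 1 happens-before 4, so this reads 1
    }

    // Starts thread B (reader) and thread A (writer); returns what step 4 read.
    static int runOnce() {
        VolatileExampleDemo ex = new VolatileExampleDemo();
        int[] seen = new int[1];
        Thread threadB = new Thread(() -> seen[0] = ex.reader());
        Thread threadA = new Thread(ex::writer);
        threadB.start();
        threadA.start();
        try {
            threadA.join();
            threadB.join(); // join() also makes seen[0] visible to this thread
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return -1;
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println(runOnce()); // prints 1
    }
}
```

If flag were an ordinary field, the JMM would no longer guarantee that step 4 reads 1.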

volatile write-read memory semantics

The memory semantics of volatile write are as follows:

When a thread writes a volatile variable, the JMM flushes the shared variables in that thread's local memory to main memory.

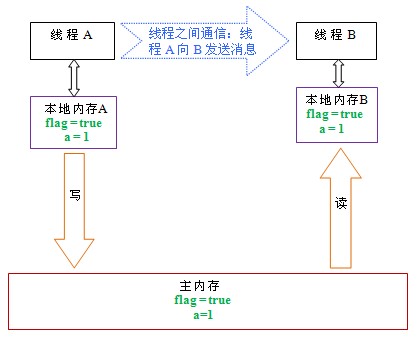

Take the above example program VolatileExample as an example. Assume that thread A first executes the writer() method, and then thread B executes the reader() method. Initially, the flag and a in the local memory of the two threads are in the initial state. . The following figure is a schematic diagram of the status of shared variables after thread A performs volatile writing:

As shown in the figure above, after thread A writes flag, the values of the two shared variables that thread A updated in local memory A are flushed to main memory. At this point, the values of the shared variables in local memory A and in main memory are consistent.

The memory semantics of a volatile read are as follows:

When a thread reads a volatile variable, the JMM invalidates that thread's local memory. The thread then reads shared variables from main memory.

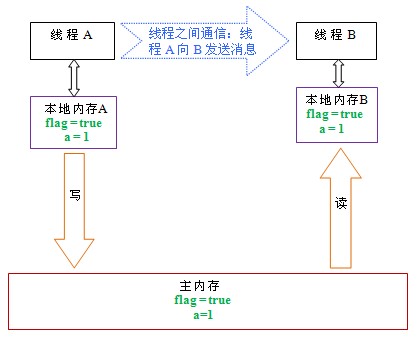

The following is a schematic diagram of the status of the shared variable after thread B reads the same volatile variable:

As shown in the figure above, after thread B reads flag, local memory B has been invalidated, so thread B must read shared variables from main memory. This read makes the values of the shared variables in local memory B consistent with main memory.

Combining the two steps, volatile write and volatile read: after reader thread B reads a volatile variable, the values of all shared variables that were visible to writer thread A before it wrote that volatile variable immediately become visible to thread B.

The following is a summary of the memory semantics of volatile writing and volatile reading:

When thread A writes a volatile variable, it is in essence sending a message (its modifications to shared variables) to whichever thread will next read that volatile variable.

When thread B reads a volatile variable, it is in essence receiving the message (the modifications to shared variables made before the volatile write) sent by some earlier thread.

When thread A writes a volatile variable and thread B then reads it, thread A is in essence sending a message to thread B through main memory.
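The message-passing view can be sketched as a one-slot "mailbox" in which the message is an ordinary object published through a single volatile reference. The class `VolatileMailbox`, its nested `Message` type and the method names are hypothetical; the point is that a reader that sees the non-null reference also sees every field written before the volatile write.

```java
// Hypothetical mailbox: ordinary writes, published by one volatile write.
class VolatileMailbox {
    static final class Message { // mutable, non-final fields on purpose
        int id;
        String text;
    }

    private volatile Message slot; // null until the sender publishes

    void send(int id, String text) {
        Message m = new Message();
        m.id = id;       // ordinary writes ...
        m.text = text;
        slot = m;        // ... made visible by this volatile write
    }

    Message receive() {
        Message m;
        while ((m = slot) == null) { // volatile read
            Thread.onSpinWait();
        }
        return m; // id and text written before publication are visible here
    }

    static String roundTrip() {
        VolatileMailbox box = new VolatileMailbox();
        String[] result = new String[1];
        Thread receiver = new Thread(() -> {
            Message m = box.receive();
            result[0] = m.id + ":" + m.text;
        });
        receiver.start();
        new Thread(() -> box.send(42, "hello")).start();
        try {
            receiver.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
        return result[0];
    }

    public static void main(String[] args) {
        System.out.println(roundTrip()); // prints 42:hello
    }
}
```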

Implementation of volatile memory semantics

Next, let’s take a look at how JMM implements volatile write/read memory semantics.

We mentioned earlier that reordering is divided into compiler reordering and processor reordering. To implement volatile memory semantics, the JMM restricts each of these two kinds of reordering. The following table shows the volatile reordering rules the JMM specifies for compilers:

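Whether the two operations can be reordered (rows: first operation; columns: second operation):

| First operation \ Second operation | Normal read/write | volatile read | volatile write |
| --- | --- | --- | --- |
| Normal read/write | | | NO |
| volatile read | NO | NO | NO |
| volatile write | | NO | NO |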

For example, the last cell in the Normal read/write row means: when, in program order, the first operation is a read or write of an ordinary variable and the second operation is a volatile write, the compiler cannot reorder these two operations.

We can see from the above table:

When the second operation is a volatile write, no matter what the first operation is, it cannot be reordered. This rule ensures that operations before a volatile write are not reordered by the compiler to after a volatile write.

When the first operation is a volatile read, no matter what the second operation is, it cannot be reordered. This rule ensures that operations after a volatile read will not be reordered by the compiler to precede a volatile read.

When the first operation is volatile writing and the second operation is volatile reading, reordering cannot be performed.
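The table and its three rules can be encoded as a small lookup function. This is an illustrative sketch with hypothetical names (`VolatileReorderRules`, `Op`, `canReorder`); a blank cell is returned as "may reorder", meaning only that the volatile rules do not forbid it (ordinary accesses are still subject to data dependences and as-if-serial semantics).

```java
// Hypothetical encoding of the compiler reordering table above.
class VolatileReorderRules {
    enum Op { NORMAL_READ_WRITE, VOLATILE_READ, VOLATILE_WRITE }

    // true if `first` (earlier in program order) may be reordered with `second`
    static boolean canReorder(Op first, Op second) {
        if (second == Op.VOLATILE_WRITE) return false;  // nothing moves below a volatile write
        if (first == Op.VOLATILE_READ) return false;    // nothing moves above a volatile read
        if (first == Op.VOLATILE_WRITE && second == Op.VOLATILE_READ) return false;
        return true; // blank cell: not restricted by the volatile rules
    }

    public static void main(String[] args) {
        for (Op first : Op.values()) {
            StringBuilder row = new StringBuilder(String.format("%-18s", first));
            for (Op second : Op.values()) {
                row.append(canReorder(first, second) ? "   -" : "  NO");
            }
            System.out.println(row);
        }
    }
}
```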

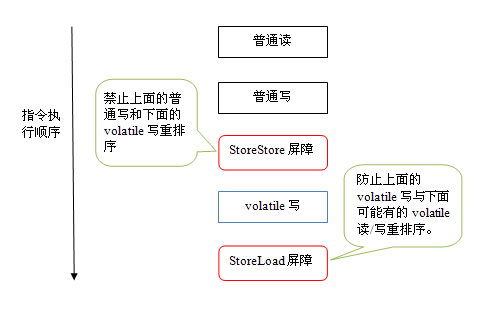

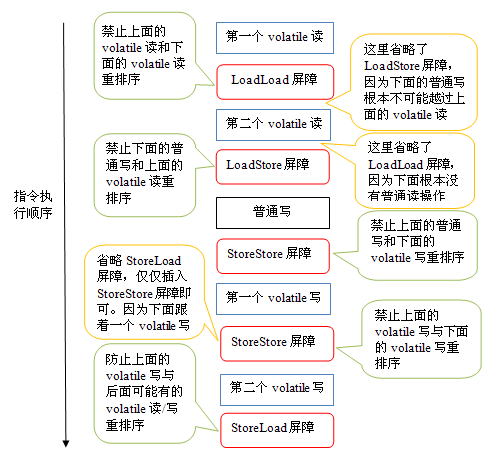

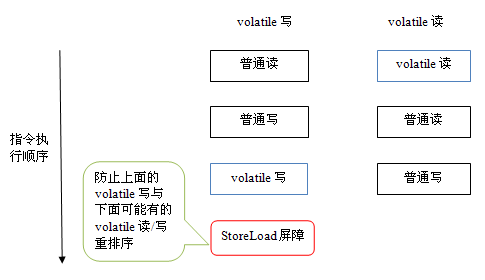

To implement volatile memory semantics, the compiler inserts memory barriers into the instruction sequence when generating bytecode, to prohibit specific types of processor reordering. Because it is nearly impossible for the compiler to find an optimal placement that minimizes the total number of inserted barriers, the JMM adopts a conservative strategy. Under this conservative strategy, the JMM inserts memory barriers as follows:

Insert a StoreStore barrier in front of each volatile write operation.

Insert a StoreLoad barrier after each volatile write operation.

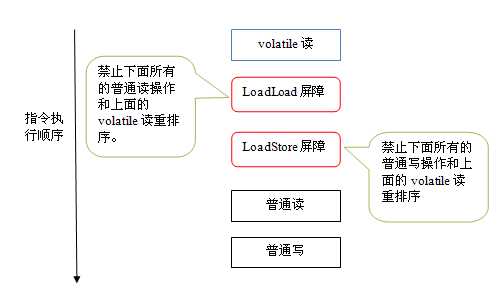

Insert a LoadLoad barrier after each volatile read operation.

Insert a LoadStore barrier after each volatile read operation.
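The four insertion rules can be sketched as a pass over a simplified instruction sequence. Names such as `ConservativeBarriers`, `Kind` and `insertBarriers` are hypothetical, and real compilers work on machine instructions rather than strings; the sketch only mirrors the conservative strategy listed above.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of the conservative barrier-insertion strategy.
class ConservativeBarriers {
    enum Kind { NORMAL_READ, NORMAL_WRITE, VOLATILE_READ, VOLATILE_WRITE }

    static List<String> insertBarriers(List<Kind> program) {
        List<String> out = new ArrayList<>();
        for (Kind op : program) {
            if (op == Kind.VOLATILE_WRITE) {
                out.add("StoreStore");   // before each volatile write
                out.add(op.name());
                out.add("StoreLoad");    // after each volatile write
            } else if (op == Kind.VOLATILE_READ) {
                out.add(op.name());
                out.add("LoadLoad");     // after each volatile read
                out.add("LoadStore");    // after each volatile read
            } else {
                out.add(op.name());      // ordinary accesses get no barrier of their own
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // VolatileExample.writer(): a = 1 (normal write), flag = true (volatile write)
        System.out.println(insertBarriers(List.of(Kind.NORMAL_WRITE, Kind.VOLATILE_WRITE)));
        // prints [NORMAL_WRITE, StoreStore, VOLATILE_WRITE, StoreLoad]
    }
}
```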

The above memory barrier insertion strategy is very conservative, but it can ensure that correct volatile memory semantics can be obtained in any program on any processor platform.

The following diagram shows the instruction sequence generated when memory barriers are inserted around a volatile write under the conservative strategy:

The StoreStore barrier in the figure above ensures that, before the volatile write, all ordinary writes preceding it are already visible to any processor. This is because the StoreStore barrier guarantees that all of the ordinary writes above it are flushed to main memory before the volatile write.

More interesting here is the StoreLoad barrier after the volatile write. Its purpose is to prevent the volatile write from being reordered with volatile reads or writes that may follow it. The compiler often cannot determine accurately whether a StoreLoad barrier is needed after a volatile write (for example, when a method returns immediately after a volatile write). To ensure that volatile memory semantics are implemented correctly, the JMM takes a conservative approach here: it inserts a StoreLoad barrier either after each volatile write or before each volatile read. For overall execution efficiency, the JMM chose to insert it after each volatile write, because the common usage pattern of volatile write-read memory semantics is one writer thread writing a volatile variable and multiple reader threads reading the same variable. When reader threads greatly outnumber writer threads, placing the StoreLoad barrier after volatile writes yields a considerable gain in execution efficiency. This shows a characteristic of the JMM implementation: ensure correctness first, then pursue execution efficiency.

The following diagram shows the instruction sequence generated when memory barriers are inserted around a volatile read under the conservative strategy:

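The LoadLoad barrier in the figure above prevents the processor from reordering the volatile read above it with ordinary reads below it. The LoadStore barrier prevents the processor from reordering the volatile read above it with ordinary writes below it.

The barrier insertion strategy above for volatile writes and volatile reads is very conservative. In actual execution, the compiler may omit unnecessary barriers case by case, as long as the volatile write-read memory semantics are not changed. The following example code illustrates this: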

```java
class VolatileBarrierExample {
    int a;
    volatile int v1 = 1;
    volatile int v2 = 2;

    void readAndWrite() {
        int i = v1;   // first volatile read
        int j = v2;   // second volatile read
        a = i + j;    // ordinary write
        v1 = i + 1;   // first volatile write
        v2 = j * 2;   // second volatile write
    }

    // … other methods
}
```

For the readAndWrite() method, the compiler can make the following optimizations when generating bytecode:

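Note that the final StoreLoad barrier cannot be omitted. Because the method returns immediately after the second volatile write, the compiler may be unable to determine accurately whether a volatile read or write will follow, so to be safe it usually inserts a StoreLoad barrier here.

The optimization above applies to any processor platform. Because different processors have memory models of differing strictness, barrier insertion can be optimized further for the specific processor memory model. Taking x86 as an example, every barrier in the figure above except the final StoreLoad barrier is omitted.

On the x86 platform, the volatile reads and writes under the conservative strategy described earlier can be optimized to:

As mentioned earlier, x86 processors only reorder write-read operations. x86 does not reorder read-read, read-write or write-write operations, so the memory barriers corresponding to these three operation types are omitted on x86. On x86, the JMM only needs to insert a StoreLoad barrier after a volatile write to correctly implement volatile write-read memory semantics. This means that on x86, volatile writes are considerably more expensive than volatile reads, because executing a StoreLoad barrier is relatively costly.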
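The x86 reduction can be sketched by taking the conservative sequence and dropping the three barrier types x86 does not need. This is a self-contained illustration with hypothetical names (`X86Barriers`, `conservative`, `forX86`); it models only the per-access barriers for VolatileExample's writer and reader, not the cross-access elimination a real JIT compiler performs.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: conservative barriers, then the x86 reduction.
class X86Barriers {
    enum Kind { NORMAL_READ, NORMAL_WRITE, VOLATILE_READ, VOLATILE_WRITE }

    // Same conservative strategy as described above.
    static List<String> conservative(List<Kind> program) {
        List<String> out = new ArrayList<>();
        for (Kind op : program) {
            if (op == Kind.VOLATILE_WRITE) {
                out.add("StoreStore");
                out.add(op.name());
                out.add("StoreLoad");
            } else if (op == Kind.VOLATILE_READ) {
                out.add(op.name());
                out.add("LoadLoad");
                out.add("LoadStore");
            } else {
                out.add(op.name());
            }
        }
        return out;
    }

    // x86 never reorders read-read, read-write or write-write,
    // so only the StoreLoad barriers remain.
    static List<String> forX86(List<Kind> program) {
        List<String> out = conservative(program);
        out.removeIf(s -> s.equals("StoreStore") || s.equals("LoadLoad") || s.equals("LoadStore"));
        return out;
    }

    public static void main(String[] args) {
        System.out.println(forX86(List.of(Kind.NORMAL_WRITE, Kind.VOLATILE_WRITE)));
        // prints [NORMAL_WRITE, VOLATILE_WRITE, StoreLoad]
        System.out.println(forX86(List.of(Kind.VOLATILE_READ, Kind.NORMAL_READ)));
        // prints [VOLATILE_READ, NORMAL_READ]
    }
}
```

The volatile read ends up with no barrier at all, which is why volatile reads are cheap on x86 while volatile writes pay for the StoreLoad barrier.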

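Why JSR-133 enhanced the memory semantics of volatile

In the old Java memory model before JSR-133, reordering between volatile variables was not allowed, but reordering between volatile variables and ordinary variables was. Under the old memory model, the VolatileExample program might be reordered and executed in the following order:

In the old memory model, when there is no data dependency between 1 and 2, they may be reordered (and likewise 3 and 4). As a result, when reader thread B executes 4, it may not see the modification writer thread A made to the shared variable at 1.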
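The effect of that reordering can be replayed step by step. The sketch below, with a hypothetical class `OldModelReplay`, executes on a single thread the order 2, 3, 4, 1 that the old model permitted; it does not exercise the JVM's memory model, it only shows why thread B can read a stale value of a.

```java
// Hypothetical replay of one execution order allowed by the old memory model.
class OldModelReplay {
    int a = 0;
    boolean flag = false; // single-threaded replay, so volatile would make no difference here

    static int replayReordered() {
        OldModelReplay s = new OldModelReplay();
        int i = -1;
        s.flag = true;   // 2: writer's second statement, moved ahead of 1
        if (s.flag) {    // 3: reader sees flag == true
            i = s.a;     // 4: reader reads a before 1 has executed
        }
        s.a = 1;         // 1: writer's first statement, executed last
        return i;        // the reader saw 0, not 1
    }

    public static void main(String[] args) {
        System.out.println(replayReordered()); // prints 0
    }
}
```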

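Therefore, in the old memory model, a volatile write-read did not have the memory semantics of a monitor release-acquire. To provide a mechanism for inter-thread communication that is lighter-weight than monitor locks, the JSR-133 expert group decided to strengthen the memory semantics of volatile: compilers and processors are strictly restricted in reordering volatile variables with ordinary variables, so that a volatile write-read has the same memory semantics as a monitor release-acquire. In terms of the compiler reordering rules and the processor memory barrier insertion strategy, any reordering between volatile variables and ordinary variables that could break volatile memory semantics is prohibited.

Volatile only guarantees atomicity for reads and writes of a single volatile variable, whereas the mutual exclusion of a monitor lock makes execution of the entire critical section atomic. Functionally, monitor locks are more powerful than volatile; in scalability and execution performance, volatile has the advantage. If you want to replace a monitor lock with volatile in your program, be very careful.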
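The difference in power can be sketched with a counter whose compound read-modify-write runs inside a monitor lock, so concurrent increments are never lost, unlike the volatile++ case discussed earlier. The class `SynchronizedCounter` and its methods are hypothetical.

```java
// Hypothetical demo: the lock makes the whole increment atomic.
class SynchronizedCounter {
    private long value = 0L;

    synchronized void increment() { value++; } // entire critical section is atomic
    synchronized long get() { return value; }

    static long run(int threads, int perThread) {
        SynchronizedCounter c = new SynchronizedCounter();
        Thread[] ts = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            ts[t] = new Thread(() -> {
                for (int k = 0; k < perThread; k++) {
                    c.increment();
                }
            });
            ts[t].start();
        }
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return -1;
            }
        }
        return c.get();
    }

    public static void main(String[] args) {
        System.out.println(run(4, 100_000)); // prints 400000
    }
}
```

Replacing the lock with a volatile field and an unsynchronized value++ would keep every individual read and write atomic and visible, but would allow the lost updates shown earlier.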

That concludes this in-depth analysis of the Java memory model: volatile. For more related content, please follow the PHP Chinese website (www.php.cn)!
