We have started learning tracking.js, a lightweight JavaScript library developed by Eduardo Lundgren. It lets you do real-time face detection, color tracking and tagging of friends' faces. In this tutorial we will see how to detect faces, eyes and mouths in static images.

I have always been interested in face tagging, face detection and face recognition in videos and pictures, although I know that writing the logic and algorithms behind facial recognition software or plug-ins myself is well beyond me. When I learned that a JavaScript library could recognize smiles, eyes and facial structures, I was inspired to write this tutorial. Many such libraries exist; some are written purely in JavaScript, while others are based on Java.


At the end of the tutorial you will find a working example, along with tips, tricks and more technical details.

First, create a project folder. Download the tracking.js project from GitHub, extract its build folder, and place the files to match your own file and directory structure. In this tutorial, I used the following structure:

Folder structure

```
Project Folder
│   index.html
│
├───assets
│       face.jpg
│
└───js
    │   tracking-min.js
    │   tracking.js
    │
    └───data
            eye-min.js
            eye.js
            face-min.js
            face.js
            mouth-min.js
            mouth.js
```

Below is the HTML code of index.html.

HTML code

```html
<!doctype html>
<html>
<head>
<meta charset="utf-8">
<title>@tuts Face Detection Tutorial</title>
<script src="js/tracking-min.js"></script>
<script src="js/data/face-min.js"></script>
<script src="js/data/eye-min.js"></script>
<script src="js/data/mouth-min.js"></script>
<style>
/* Detection boxes start off-screen until the script positions them. */
.rect {
  border: 2px solid #a64ceb;
  left: -1000px;
  position: absolute;
  top: -1000px;
}
/* Negative margins of half the image size center the image on the page. */
#img {
  position: absolute;
  top: 50%;
  left: 50%;
  margin: -173px 0 0 -300px;
}
</style>
</head>
<body>
<div class="imgContainer">
<img id="img" src="assets/face.jpg" alt="How to implement face detection in JavaScript">
</div>
</body>
</html>
```

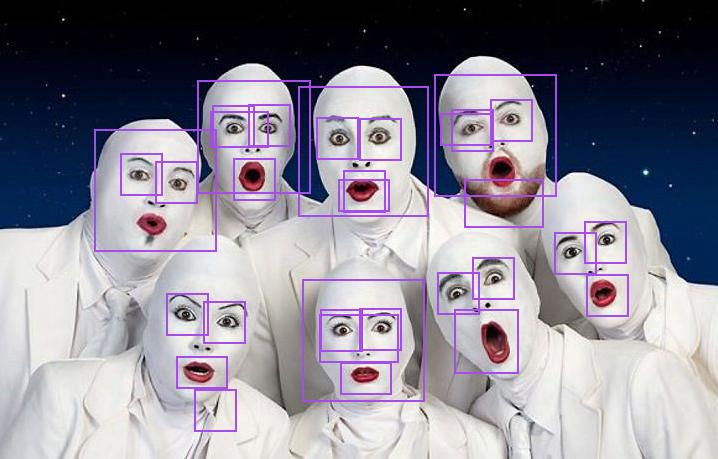

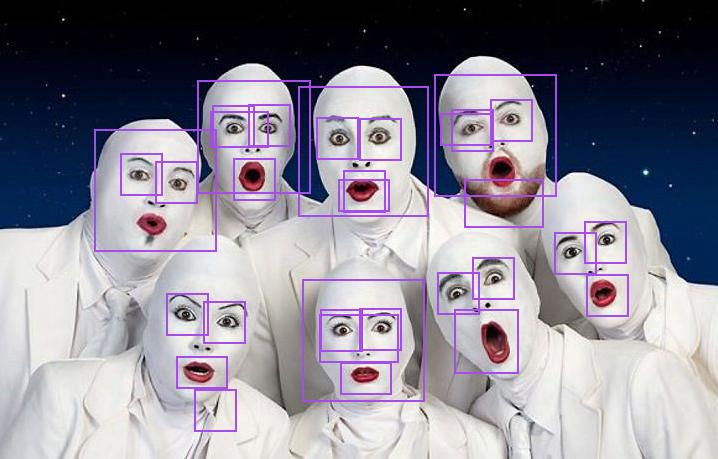

In the HTML code above, we include four JavaScript files from tracking.js: the library itself plus the face, eye and mouth classifier data, which together let us detect faces, eyes and mouths in images. Next, we write the code that performs the detection on a static image. I chose this image intentionally because it contains several faces with different expressions and poses.

To do this, we add a tracking script to the head of the HTML file:

HTML code

```html
<!doctype html>
<html>
<head>
<meta charset="utf-8">
<title>@tuts Face Detection Tutorial</title>
<script src="js/tracking-min.js"></script>
<script src="js/data/face-min.js"></script>
<script src="js/data/eye-min.js"></script>
<script src="js/data/mouth-min.js"></script>
<style>
.rect {
  border: 2px solid #a64ceb;
  left: -1000px;
  position: absolute;
  top: -1000px;
}
#img {
  position: absolute;
  top: 50%;
  left: 50%;
  margin: -173px 0 0 -300px;
}
</style>
<!-- tracking code -->
<script>
window.onload = function() {
  var img = document.getElementById('img');

  // Create a tracker that uses the face, eye and mouth classifiers
  // loaded by the js/data/*-min.js scripts above.
  var tracker = new tracking.ObjectTracker(['face', 'eye', 'mouth']);
  tracker.setStepSize(1.7);

  // Register the result handler first, then start tracking the image.
  tracker.on('track', function(event) {
    // event.data is an array of rectangles with x, y, width and height.
    event.data.forEach(function(rect) {
      draw(rect.x, rect.y, rect.width, rect.height);
    });
  });

  tracking.track('#img', tracker);

  // Draw one box over the image for a detected rectangle.
  function draw(x, y, w, h) {
    var rect = document.createElement('div');
    document.querySelector('.imgContainer').appendChild(rect);
    rect.classList.add('rect');
    rect.style.width = w + 'px';
    rect.style.height = h + 'px';
    rect.style.left = (img.offsetLeft + x) + 'px';
    rect.style.top = (img.offsetTop + y) + 'px';
  }
};
</script>
</head>
<body>
<div class="imgContainer">
<img id="img" src="assets/face.jpg" alt="How to implement face detection in JavaScript">
</div>
</body>
</html>
```

Result


Code description.

tracking.ObjectTracker() creates a tracker for the kinds of objects you want to detect. It accepts a single classifier name or an array of names, such as ['face', 'eye', 'mouth'].
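The four script tags in index.html matter here. As we understand it, each js/data/*-min.js file registers a classifier under a name ('face', 'eye', 'mouth'), and ObjectTracker looks up the names you pass to it. The sketch below models that lookup under this assumption; classifierRegistry, registerClassifier and resolveClassifiers are hypothetical names, not part of tracking.js, and plain strings stand in for the real classifier data.

```javascript
// Hypothetical stand-in for the shared object the data files fill in.
// Object.create(null) avoids inherited keys such as "constructor".
const classifierRegistry = Object.create(null);

// Models what we assume a data file such as face-min.js does when loaded.
function registerClassifier(name, data) {
  classifierRegistry[name] = data;
}

// Accept a single name or an array of names, look each one up,
// and fail loudly if its data file was never loaded.
function resolveClassifiers(namesOrName) {
  const names = Array.isArray(namesOrName) ? namesOrName : [namesOrName];
  return names.map(function (name) {
    const classifier = classifierRegistry[name];
    if (!classifier) {
      throw new Error('Classifier "' + name + '" is not loaded; include its data file first.');
    }
    return classifier;
  });
}

// Simulate loading face-min.js and eye-min.js, but not mouth-min.js.
registerClassifier('face', 'face-data');
registerClassifier('eye', 'eye-data');

console.log(resolveClassifiers('face'));
try {
  resolveClassifiers(['face', 'eye', 'mouth']);
} catch (err) {
  console.log(err.message);
}
```

In this model, passing 'mouth' without loading its data file produces an error up front instead of silently returning no mouth detections.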

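setStepSize() sets the block step size: how far the detection block moves between positions as it scans the image. Larger values scan faster but may miss objects.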
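To make the step-size trade-off concrete, here is a simplified, hypothetical model of a sliding-window scan that counts how many positions one fixed-size block visits. It is not tracking.js's internal loop (which, as far as we know, also repeats the scan at several block scales). The 40 px block, the 600 x 346 px image size (inferred from the CSS margins) and the rounding of fractional steps to whole pixels are all assumptions.

```javascript
// Count the positions a square block visits while sliding across an image.
// Hypothetical model: one block size only, step rounded to whole pixels.
function countBlockPositions(imageWidth, imageHeight, blockSize, stepSize) {
  const step = Math.max(1, Math.round(stepSize)); // at least 1 px per move
  let count = 0;
  for (let y = 0; y + blockSize <= imageHeight; y += step) {
    for (let x = 0; x + blockSize <= imageWidth; x += step) {
      count++; // the block fits entirely inside the image at (x, y)
    }
  }
  return count;
}

// Compare a few step sizes on a 600 x 346 image with a 40 px block.
[1, 1.7, 4].forEach(function (s) {
  console.log('step', s, '->', countBlockPositions(600, 346, 40, s), 'positions');
});
```

Fewer positions means less work per image, which is why a larger step is faster, at the risk of stepping over a face that only matches at one of the skipped positions.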

We listen for the tracker's “track” event with tracker.on('track', ...). When the tracker finishes processing the image, it fires this event with the objects it detected.
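The “track” event follows the familiar publish/subscribe pattern. The class below is a minimal, hypothetical emitter written only to illustrate that pattern; it is not tracking.js's own event implementation, and the fake tracker and its rectangle data are made up for the example.

```javascript
// Minimal illustrative emitter: handlers are stored per event name
// and called in registration order when that event is emitted.
class MiniEmitter {
  constructor() {
    this.listeners = new Map(); // event name -> array of handlers
  }

  on(eventName, handler) {
    if (!this.listeners.has(eventName)) {
      this.listeners.set(eventName, []);
    }
    this.listeners.get(eventName).push(handler);
    return this; // allow chaining
  }

  emit(eventName, payload) {
    const handlers = this.listeners.get(eventName) || [];
    handlers.forEach(function (handler) {
      handler(payload);
    });
  }
}

// Simulate a tracker that finishes and reports one detected rectangle.
const fakeTracker = new MiniEmitter();
const received = [];
fakeTracker.on('track', function (event) {
  event.data.forEach(function (rect) {
    received.push(rect);
  });
});
fakeTracker.emit('track', { data: [{ x: 10, y: 20, width: 50, height: 50 }] });
console.log(received.length + ' rectangle(s) received');
```

A handler only receives events emitted after it is registered, so it is safest to attach it with on() before starting tracking.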

From event.data we obtain the results as an array of objects; each object contains the x and y coordinates, width and height of one detected object (a face, mouth or eye).
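The draw() function positions each overlay box by adding the image's page offset to the rectangle's coordinates. The same arithmetic written as a pure function, which can be checked without a browser, might look like this; toOverlayBoxes and the sample rectangles are hypothetical.

```javascript
// Convert detection rectangles (relative to the image) into absolute CSS
// box values by adding the image's offset on the page, as draw() does.
function toOverlayBoxes(rects, imgOffsetLeft, imgOffsetTop) {
  return rects.map(function (rect) {
    return {
      left: (imgOffsetLeft + rect.x) + 'px',
      top: (imgOffsetTop + rect.y) + 'px',
      width: rect.width + 'px',
      height: rect.height + 'px'
    };
  });
}

// Example: two made-up detections on an image whose top-left corner is at (100, 50).
const boxes = toOverlayBoxes(
  [{ x: 120, y: 80, width: 90, height: 90 }, { x: 140, y: 100, width: 20, height: 12 }],
  100,
  50
);
console.log(boxes);
```

In the page, each field would then be assigned to the matching property of the overlay element's style.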

Summary of results.

You may find that the results vary with conditions such as face shape and pose. There is still room for improvement, and we acknowledge that this type of API is still developing.

Running example:

Running example with pictures.

More resources: JavaScript-based facial recognition

https://github.com/auduno/headtrackr

https://github.com/auduno/clmtrackr

We plan to write a tutorial on facial tracking with HTML5 Canvas and camera video, as well as with image tags. In the meantime, the client-side camera access blog post I mentioned earlier can help you access the user's camera.

Note: For browser security reasons, this example must be served from the same domain as the image (for example, from a local web server), or run in a browser with web security disabled.
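As we understand it, the restriction comes from the canvas: tracking.js reads the image's pixels through a canvas, and browsers block reading pixels back from a canvas that has drawn a cross-origin image. The hypothetical helper below approximates that origin comparison with the standard URL class. It treats opaque origins such as file:// pages as never same-origin, which matches how most current browsers behave, and it ignores CORS headers, which can also grant access.

```javascript
// Hypothetical helper: is the image URL same-origin with the page?
function isSameOrigin(imageSrc, pageHref) {
  const page = new URL(pageHref);
  const image = new URL(imageSrc, pageHref); // resolve relative paths against the page
  // Opaque origins (e.g. file:// pages) serialize as "null" and are
  // never considered same-origin, even with each other.
  if (page.origin === 'null' || image.origin === 'null') {
    return false;
  }
  return image.origin === page.origin;
}

console.log(isSameOrigin('assets/face.jpg', 'http://localhost:8000/index.html'));
console.log(isSameOrigin('https://example.com/face.jpg', 'http://localhost:8000/index.html'));
console.log(isSameOrigin('assets/face.jpg', 'file:///home/me/project/index.html'));
```

In practice this means opening index.html straight from disk may fail, while serving the project folder from a local web server works.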

