Using Puppeteer as a crawler in Node

This article walks through a practical case of using Puppeteer as a crawler in Node, along with a few points to watch out for.

Architecture diagram

[Figure: Puppeteer architecture diagram]

Puppeteer communicates with the browser through the DevTools Protocol.

Browser: a browser (Chromium) instance that can hold multiple pages.

Page: a page that contains at least one Frame.

Frame: has at least one execution context for running JavaScript, and can be extended with additional execution contexts.
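To make the Browser / Page / Frame hierarchy concrete, here is a minimal sketch that walks the frame tree of a page. The FrameLike and PageLike types are assumptions introduced for illustration; they mirror only the Puppeteer calls page.mainFrame(), frame.url() and frame.childFrames(). The fake page is hand-built so the sketch runs without a browser.

```typescript
// Minimal structural types covering only the calls used below.
// They mirror Puppeteer's page.mainFrame(), frame.url() and frame.childFrames().
interface FrameLike {
  url(): string
  childFrames(): FrameLike[]
}

interface PageLike {
  mainFrame(): FrameLike
}

// Walk a page's frame tree depth-first and return one indented line per frame.
function describeFrames(page: PageLike): string[] {
  const lines: string[] = []
  const visit = (frame: FrameLike, depth: number): void => {
    lines.push(`${'  '.repeat(depth)}${frame.url()}`)
    for (const child of frame.childFrames()) {
      visit(child, depth + 1)
    }
  }
  visit(page.mainFrame(), 0)
  return lines
}

// A hand-built stand-in for a real page (hypothetical URLs).
const fakeFrame = (url: string, children: FrameLike[] = []): FrameLike => ({
  url: () => url,
  childFrames: () => children,
})
const fakePage: PageLike = {
  mainFrame: () => fakeFrame('https://example.com/', [fakeFrame('https://example.com/ad')]),
}

console.log(describeFrames(fakePage).join('\n'))
```

A real Puppeteer page should be usable in place of fakePage, since it offers the same three methods.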

Preface

I have been wanting to buy a desktop computer recently. My notebook's i5 lags noticeably when opening web pages and VS Code, so I plan to get a machine with an i7 and a GTX 1070 Ti or GTX 1080 Ti. Searching directly on Taobao means turning too many pages, and there are so many pictures that my brain cannot take it all in. So I decided to crawl some data and use charts to analyze recent price trends, and I wrote a crawler with Puppeteer to collect the relevant data.

What is Puppeteer?

Puppeteer is a Node library which provides a high-level API to control headless Chrome or Chromium over the DevTools Protocol. It can also be configured to use full (non-headless) Chrome or Chromium.

In short, it is a Node library that provides a high-level API for controlling Chrome or Chromium through the DevTools Protocol, headless by default. It can simulate almost any operation a person can perform in the browser.

The difference from cheerio

cheerio is essentially just a library that uses jQuery-like syntax to manipulate HTML documents. Crawling with cheerio only fetches the static HTML document, so if the data in the page is loaded dynamically through ajax, that data cannot be crawled. Puppeteer drives a real browser environment: it requests the site and runs the site's own logic, and your Node code talks to the browser over a WebSocket connection to read the data inside the page. It can perform any simulated operation (click, slide, hover, and so on), and it supports page navigation and managing multiple pages. It can even send a function written on the Node side into the browser's environment to run. In short, it can do everything you can do on a web page, and some things you cannot.
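One consequence of sending a function from Node into the browser is that the function travels as source text, so variables captured from the surrounding Node scope do not travel with it; values have to be passed as arguments instead (Puppeteer's page.evaluate accepts extra arguments after the function). The sketch below is only a local simulation of that behavior, not Puppeteer's implementation; runDetached and demo are hypothetical helpers.

```typescript
// Local simulation only: turn a function into source text, then rebuild and run it
// in a place that cannot see the original closure (a fresh global-scope function).
function runDetached(fn: (...args: any[]) => unknown, ...args: unknown[]): unknown {
  const argList = args.map(a => JSON.stringify(a)).join(', ')
  const rebuilt = new Function(`return (${fn.toString()})(${argList})`)
  return rebuilt()
}

function demo(): { viaClosure: unknown; viaArgument: unknown } {
  const selector = '.item'

  // Relies on the closure: the variable does not travel with the source text.
  const viaClosure = runDetached(() => typeof selector)

  // Receives the value as a serialized argument instead.
  const viaArgument = runDetached((sel: string) => sel, selector)

  return { viaClosure, viaArgument }
}

console.log(demo())
```

Here viaClosure comes back as the string 'undefined', because the rebuilt function cannot see selector, while viaArgument comes back as '.item'.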

Start

This article is not a step-by-step tutorial, so you need basic knowledge of the Puppeteer API. If you are not familiar with it, please read the official introduction first:

Puppeteer official site

Puppeteer API

First, let's look at the site we want to crawl: GTX1080

This is the Taobao page we want to crawl. Only the product items in the middle are the content we need. Analyzing its structure carefully is something I believe every front-end developer is capable of.

I use TypeScript, which gives complete API hints for Puppeteer and related libraries. If you don't know TS, you only need to change the relevant code to ES syntax.

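// Import the libraries we need and declare some constants
import * as puppeteer from 'puppeteer' // Puppeteer itself
import mongo from '../lib/mongoDb' // MongoDB helper used to store the crawled data
import chalk from 'chalk' // Colors for console output

const log = console.log // Shorthand for console.log
const TOTAL_PAGE = 50 // Number of result pages to crawl, matching the pagination links at the bottom of the page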

// Shape of the data to crawl
interface IWriteData {
  link: string // Link to the product detail page
  picture: string // Link to the product image
  price: number // Price as a number; converted from the scraped text
  title: string // Product title
}

// Formatted progress output showing how far the crawl has got
function formatProgress (current: number): string {
  // Round so that floating-point noise (e.g. 14.000000000000002) does not show up
  let percent = Math.round((current / TOTAL_PAGE) * 100)
  let done = ~~(current / TOTAL_PAGE * 40)
  let left = 40 - done
  let str = `Progress: [${''.padStart(done, '=')}${''.padStart(left, '-')}] ${percent}%`
  return str
}

Next, we move on to the main logic of the crawler.

// We need a lot of await statements, so wrap everything in an async function
async function main() {
  // Do something
}

main()

// Now the main logic of the code

async function main() {
  // First, launch a browser environment with Puppeteer
  const browser = await puppeteer.launch()
  log(chalk.green('Service started'))

  // Use try/catch to handle errors from the async calls in one place
  try {
    // Open a new page
    const page = await browser.newPage()

    // Listen for console messages from inside the page.
    // msg is always a ConsoleMessage object, so print its text rather than the object itself
    page.on('console', msg => {
      log(chalk.blue(msg.text()))
    })

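    // Open the Taobao page we just looked at
    await page.goto('https://s.taobao.com/search?q=gtx1080&imgfile=&js=1&stats_click=search_radio_all%3A1&initiative_id=staobaoz_20180416&ie=utf8')
    log(chalk.yellow('Initial page load finished'))

    // Use a for loop with await so pages are handled one at a time;
    // firing many requests at once can use too much memory and crash
    for (let i = 1; i <= TOTAL_PAGE; i++) {
      // Find the pagination input box and the jump button
      const pageInput = await page.$(`.J_Input[type='number']`)
      const submit = await page.$('.J_Submit')

      // Simulate typing the page number to jump to
      await pageInput.type('' + i)

      // Simulate clicking the jump button
      await submit.click()

      // Wait for the page to load. A fixed delay is used here. I tried page.waitForNavigation() before,
      // but it waited too long and threw a timeout error (Puppeteer's default navigation timeout is 30 s
      // and can be changed): this page always loads some resources we do not need, and my network has
      // been terrible lately. Waiting 2.5 s turned out to be enough.
      // Note: page.waitFor belongs to the Puppeteer version used here; newer releases removed it,
      // where a setTimeout-based promise does the same job.
      await page.waitFor(2500)

      // Clear the current console output
      console.clear()

      // Print the current crawl progress
      log(chalk.yellow(formatProgress(i)))
      log(chalk.yellow('Page data loaded'))

      // Process the data; this function is implemented below
      await handleData()

      // Rest a little after each page, otherwise Taobao will take you for a robot
      // and pop up a CAPTCHA (although a robot is exactly what we are)
      await page.waitFor(2500)
    }

    // Close the browser once all data has been crawled
    await browser.close()
    log(chalk.green('Service finished normally'))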

    // This function is declared inside main rather than outside so that it can reach the
    // surrounding context; declared outside, it would need the page object passed in
    async function handleData() {
      // Now we step inside the browser with page.evaluate. Its argument is a function that runs
      // inside the page, and that function's return value comes back to the outside as a Promise
      const list = await page.evaluate(() => {
        // Array that collects the crawled data
        const writeDataList: IWriteData[] = []

        // Get all product elements
        let itemList = document.querySelectorAll('.item.J_MouserOnverReq')

        // Walk every element and pick out the data we need
        for (let item of itemList) {
          // Start with an empty record
          let writeData: IWriteData = {
            picture: undefined,
            link: undefined,
            title: undefined,
            price: undefined
          }

          // Product image address
          let img = item.querySelector('img')
          writeData.picture = img.src

          // Product link
          let link: HTMLAnchorElement = item.querySelector('.pic-link.J_ClickStat.J_ItemPicA')
          writeData.link = link.href

          // Product price: a string by default, converted to an integer number with ~~
          let price = item.querySelector('strong')
          writeData.price = ~~price.innerText

          // Product title: Taobao highlights titles with many span tags,
          // but innerText still returns the plain text
          let title: HTMLAnchorElement = item.querySelector('.title>a')
          writeData.title = title.innerText

          // Push this item's data into the result array declared above
          writeDataList.push(writeData)
        }

        // Return everything collected on the current page to the outside environment
        return writeDataList
      })

      // Write the data to MongoDB
      const result = await mongo.insertMany('GTX1080', list)
      log(chalk.yellow('Finished writing to the database'))
    }

  } catch (error) {
    // On any error, print the error message and close the browser
    console.log(error)
    log(chalk.red('Service terminated unexpectedly'))
    await browser.close()
  } finally {
    // Finally, exit the process
    process.exit(0)
  }
}

Thinking

1. Why use TypeScript?

Because TypeScript is pleasant to work with. I cannot memorize all of Puppeteer's APIs and I don't want to look each one up, so TS gives me intelligent completion and prevents low-level mistakes caused by misspellings. With TS, you can basically type the code straight through in one go.

[Screenshot: puppeteer.png]

2. What are the crawler's performance problems?

Because Puppeteer starts a browser and runs the page's internal logic, it uses a lot of memory. Judging from the system monitor, this node process takes up about 300 MB.

I crawl the pages one at a time. If you want to crawl faster, you can start multiple processes. Node runs JavaScript on a single thread, so I see little benefit in opening many pages inside one process; instead, start several node processes, each configured with different parameters. The same thing can be done with Node's cluster module; the principle is identical.
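As a rough sketch of the "different parameters per process" idea, the page numbers can be divided into contiguous ranges, one per process. splitPages and PageRange are hypothetical names, and how each range reaches its process (command-line arguments, environment variables, cluster messages) is left open here.

```typescript
// Inclusive, 1-based range of result pages assigned to one worker process.
interface PageRange {
  from: number
  to: number
}

// Split pages 1..totalPages into at most `workers` contiguous, near-equal ranges.
function splitPages(totalPages: number, workers: number): PageRange[] {
  const ranges: PageRange[] = []
  const count = Math.min(workers, totalPages)
  let from = 1
  for (let w = 0; w < count; w++) {
    // Spread the remainder over the first few workers.
    const size = Math.floor(totalPages / count) + (w < totalPages % count ? 1 : 0)
    ranges.push({ from, to: from + size - 1 })
    from += size
  }
  return ranges
}

// 50 pages over 4 processes: 1-13, 14-26, 27-38, 39-50
console.log(splitPages(50, 4))
```

Each process would then run the same loop as in the listing, but from its own from to its own to instead of from 1 to TOTAL_PAGE.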

I also added waits at several points during the crawl: partly to give the page time to load, and partly to keep Taobao from recognizing me as a crawler and popping up a CAPTCHA.
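The listing uses a fixed 2.5 s wait. A small variation, sketched below, is to pick the delay at random within a range so the requests look less mechanical; whether this actually helps against Taobao's detection is an assumption, not something tested here. randomDelayMs and sleep are hypothetical helpers, and sleep relies only on setTimeout, so it does not depend on the Puppeteer version.

```typescript
// Pick a delay in [minMs, maxMs]; `rand` is injectable so the choice can be tested.
function randomDelayMs(minMs: number, maxMs: number, rand: () => number = Math.random): number {
  return Math.round(minMs + rand() * (maxMs - minMs))
}

// Promise-based sleep built on setTimeout.
function sleep(ms: number): Promise<void> {
  return new Promise(resolve => setTimeout(resolve, ms))
}

// Usage inside the crawl loop (sketch):
//   await sleep(randomDelayMs(2000, 4000))
console.log(randomDelayMs(2000, 4000, () => 0.5)) // 3000
```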

3. Other features of Puppeteer

This article only uses some basic features of Puppeteer; it can do much more. For example, functions written on the Node side can be made available inside the browser, and the current page can be saved as a PDF or a PNG image. You can also launch a browser with a visible window through const browser = await puppeteer.launch({ headless: false }) and watch how your crawler operates. In addition, for sites that require login, if you don't want to hand the CAPTCHA to a third-party service, you can turn off headless mode, set a wait in the program, and complete the verification by hand in order to log in.
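A minimal sketch of two of these features follows: choosing launch options for a visible "debug" browser, and saving a page as PNG and PDF. CapturablePage, launchOptions and capture are hypothetical names; the interface mirrors only page.screenshot() and page.pdf() with the path, fullPage and format options, and the slowMo value of 100 ms is an arbitrary choice.

```typescript
// Only the two calls used here; they mirror Puppeteer's page.screenshot() and page.pdf().
interface CapturablePage {
  screenshot(options: { path: string; fullPage?: boolean }): Promise<unknown>
  pdf(options: { path: string; format?: string }): Promise<unknown>
}

// headless: false opens a visible browser window; slowMo delays each operation by N ms.
function launchOptions(debug: boolean): { headless: boolean; slowMo: number } {
  return debug ? { headless: false, slowMo: 100 } : { headless: true, slowMo: 0 }
}

// Save the current page as both a PNG and a PDF, returning the file names written.
async function capture(page: CapturablePage, baseName: string): Promise<string[]> {
  const png = `${baseName}.png`
  const pdf = `${baseName}.pdf`
  await page.screenshot({ path: png, fullPage: true })
  await page.pdf({ path: pdf, format: 'A4' })
  return [png, pdf]
}

// With the real library this would be wired up roughly as (not run here):
//   const browser = await puppeteer.launch(launchOptions(false))
//   const page = await browser.newPage()
//   await page.goto(url)
//   await capture(page, 'gtx1080')
console.log(launchOptions(true))
```

The usage comment launches in headless mode on purpose: older Puppeteer documentation states that PDF generation is only supported in headless Chrome.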

Of course, Google did not build such a powerful library only for crawling data. It is also used for automated performance analysis, UI testing, front-end site monitoring, and so on.

4. Some other thoughts

Generally speaking, writing a crawler is a fairly involved practice project that exercises several basic skills. This crawler uses async in many places, which requires a solid understanding of async, Promise and related concepts. When analyzing the DOM to collect data, I also used native methods to read DOM properties many times, which tests how well you know the DOM APIs. (If the site already includes jQuery, you can use it directly; if not, it has to be injected from outside. Under TypeScript some configuration is needed so that the $ variable is not reported as unrecognized. After that, the DOM can be manipulated with jQuery syntax.)
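One of those DOM reads deserves a closer look. The listing converts the price with ~~price.innerText, which drops any decimal part and turns text that is not purely numeric into 0. A slightly more defensive conversion might look like the sketch below; parsePrice is a hypothetical helper and the sample labels are made up for illustration.

```typescript
// Extract the first decimal number from a price label; returns null if there is none.
function parsePrice(text: string): number | null {
  const match = text.replace(/,/g, '').match(/\d+(?:\.\d+)?/)
  return match ? Number(match[0]) : null
}

console.log(parsePrice('2399.00'))   // 2399
console.log(parsePrice('¥1,299.50')) // 1299.5
console.log(parsePrice('n/a'))       // null
```

Returning null instead of 0 makes a failed read distinguishable from a real price, so such records can be skipped before they are written to the database.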

In addition, this is only procedural code. It could be fully encapsulated in a class, which would also exercise your understanding of OOP in ES.
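As one possible shape for such a class, here is a minimal sketch. ListCrawler, EvaluablePage and ItemStore are hypothetical names; the two interfaces are simplified stand-ins for the Puppeteer page and the MongoDB helper from the listing, reduced to the calls the class needs, and the fake objects at the end exist only so the sketch runs without a browser or a database.

```typescript
// Simplified stand-ins for the page and the database wrapper used in the listing.
interface EvaluablePage {
  evaluate<T>(pageFunction: () => T): Promise<T>
}
interface ItemStore<T> {
  insertMany(collection: string, docs: T[]): Promise<unknown>
}

class ListCrawler<T> {
  private readonly page: EvaluablePage
  private readonly store: ItemStore<T>
  private readonly collection: string
  private readonly totalPages: number
  private pagesDone = 0

  constructor(page: EvaluablePage, store: ItemStore<T>, collection: string, totalPages: number) {
    this.page = page
    this.store = store
    this.collection = collection
    this.totalPages = totalPages
  }

  // Fraction of pages finished so far, between 0 and 1.
  get progress(): number {
    return this.totalPages === 0 ? 1 : this.pagesDone / this.totalPages
  }

  // Run `extract` inside the page, persist the result, and advance the counter.
  async crawlCurrentPage(extract: () => T[]): Promise<number> {
    const items = await this.page.evaluate(extract)
    await this.store.insertMany(this.collection, items)
    this.pagesDone += 1
    return items.length
  }
}

// Fakes that run the extractor locally and discard the documents.
const localPage: EvaluablePage = { evaluate: <R>(fn: () => R) => Promise.resolve(fn()) }
const nullStore: ItemStore<string> = { insertMany: () => Promise.resolve(null) }

const crawler = new ListCrawler<string>(localPage, nullStore, 'GTX1080', 50)
crawler.crawlCurrentPage(() => ['a', 'b', 'c']).then(count => {
  console.log(count, crawler.progress)
})
```

Passing the page and the store in through the constructor keeps the class independent of how the browser is launched, which also makes it easy to exercise with fakes as above.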

I believe you have mastered it after reading the case in this article For more exciting methods, please pay attention to other related articles on the php Chinese website!

Recommended reading:

Summary of how to use the state object of vuex

How to use Angular to launch a component

The above is the detailed content of Node uses Puppeteer as a crawler. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

How to delete node in nvm

Dec 29, 2022 am 10:07 AM

How to delete node in nvm

Dec 29, 2022 am 10:07 AM

How to delete node with nvm: 1. Download "nvm-setup.zip" and install it on the C drive; 2. Configure environment variables and check the version number through the "nvm -v" command; 3. Use the "nvm install" command Install node; 4. Delete the installed node through the "nvm uninstall" command.

How to use express to handle file upload in node project

Mar 28, 2023 pm 07:28 PM

How to use express to handle file upload in node project

Mar 28, 2023 pm 07:28 PM

How to handle file upload? The following article will introduce to you how to use express to handle file uploads in the node project. I hope it will be helpful to you!

How to do Docker mirroring of Node service? Detailed explanation of extreme optimization

Oct 19, 2022 pm 07:38 PM

How to do Docker mirroring of Node service? Detailed explanation of extreme optimization

Oct 19, 2022 pm 07:38 PM

During this period, I was developing a HTML dynamic service that is common to all categories of Tencent documents. In order to facilitate the generation and deployment of access to various categories, and to follow the trend of cloud migration, I considered using Docker to fix service content and manage product versions in a unified manner. . This article will share the optimization experience I accumulated in the process of serving Docker for your reference.

An in-depth analysis of Node's process management tool 'pm2”

Apr 03, 2023 pm 06:02 PM

An in-depth analysis of Node's process management tool 'pm2”

Apr 03, 2023 pm 06:02 PM

This article will share with you Node's process management tool "pm2", and talk about why pm2 is needed, how to install and use pm2, I hope it will be helpful to everyone!

Pi Node Teaching: What is a Pi Node? How to install and set up Pi Node?

Mar 05, 2025 pm 05:57 PM

Pi Node Teaching: What is a Pi Node? How to install and set up Pi Node?

Mar 05, 2025 pm 05:57 PM

Detailed explanation and installation guide for PiNetwork nodes This article will introduce the PiNetwork ecosystem in detail - Pi nodes, a key role in the PiNetwork ecosystem, and provide complete steps for installation and configuration. After the launch of the PiNetwork blockchain test network, Pi nodes have become an important part of many pioneers actively participating in the testing, preparing for the upcoming main network release. If you don’t know PiNetwork yet, please refer to what is Picoin? What is the price for listing? Pi usage, mining and security analysis. What is PiNetwork? The PiNetwork project started in 2019 and owns its exclusive cryptocurrency Pi Coin. The project aims to create a one that everyone can participate

What to do if npm node gyp fails

Dec 29, 2022 pm 02:42 PM

What to do if npm node gyp fails

Dec 29, 2022 pm 02:42 PM

npm node gyp fails because "node-gyp.js" does not match the version of "Node.js". The solution is: 1. Clear the node cache through "npm cache clean -f"; 2. Through "npm install -g n" Install the n module; 3. Install the "node v12.21.0" version through the "n v12.21.0" command.

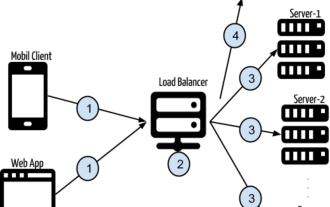

Token-based authentication with Angular and Node

Sep 01, 2023 pm 02:01 PM

Token-based authentication with Angular and Node

Sep 01, 2023 pm 02:01 PM

Authentication is one of the most important parts of any web application. This tutorial discusses token-based authentication systems and how they differ from traditional login systems. By the end of this tutorial, you will see a fully working demo written in Angular and Node.js. Traditional Authentication Systems Before moving on to token-based authentication systems, let’s take a look at traditional authentication systems. The user provides their username and password in the login form and clicks Login. After making the request, authenticate the user on the backend by querying the database. If the request is valid, a session is created using the user information obtained from the database, and the session information is returned in the response header so that the session ID is stored in the browser. Provides access to applications subject to

Let's talk about how to use pkg to package Node.js projects into executable files.

Dec 02, 2022 pm 09:06 PM

Let's talk about how to use pkg to package Node.js projects into executable files.

Dec 02, 2022 pm 09:06 PM

How to package nodejs executable file with pkg? The following article will introduce to you how to use pkg to package a Node project into an executable file. I hope it will be helpful to you!