Outline of this sharing:

What are the pitfalls of CBO optimizer

CBO optimizer The solution to the pitfall

Strengthen SQL audit and nip performance problems in their infancy

Share on-site FAQ

CBO (Cost Based Optimizer) optimizer is currently widely used in Oracle. It uses statistical information, query conversion, etc. to calculate the costs of various possible access paths, and generates a variety of alternative execution plans. Finally, Oracle will choose the lowest cost execution plan as the optimal execution plan. Compared with the RBO (Rule Based Optimizer) of the "ancient" era, it is obviously more in line with the actual situation of the database and can adapt to more application scenarios. However, due to its high complexity, there are many practical problems and bugs that CBO has not solved. In the daily optimization process, you may encounter situations where you cannot follow the correct execution plan no matter how you collect statistical information. At this time, you may have cheated CBO.

This sharing mainly uses common daily optimizer problems as an introduction to explore the solutions to the pitfalls of CBO.

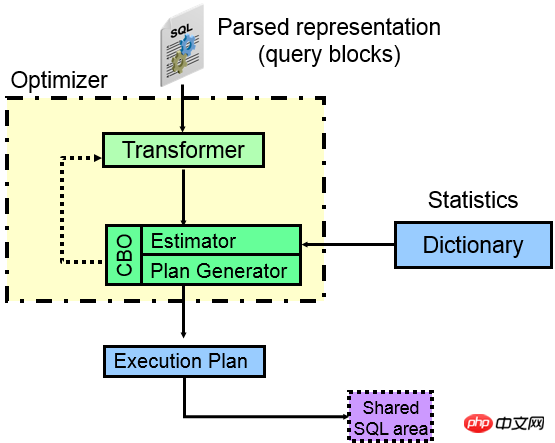

Let’s first take a look at the components of the CBO optimizer:

From the picture above, you can It can be seen that when a SQL statement enters ORACLE, the various parts will actually be separated after parsing, and each separated part becomes a query block (query blocks) independently. For example, a subquery will become a query block, and an external query will be a query. block, then what the ORACLE optimizer has to do is what kind of access path is better inside each query block (index, full table, partition?), and the second is what should be done between each query block. Which JOIN method and JOIN order are used to finally calculate which execution plan is better.

The core of the optimizer is the query converter, cost estimator and execution plan generator.

Transformer (query converter):

As can be seen from the figure, the first core device of the optimizer is the query converter. The main function of the query converter is to study various query blocks. The relationship between the SQL and SQL is syntactically and even semantically equivalent. The rewritten SQL is more easily processed by the core device cost estimator and execution plan generator, thereby using statistical information to generate the optimal execution plan.

Query converter has two ways in the optimizer: heuristic query conversion (rule-based) and COST-based query conversion. Heuristic query conversions are generally relatively simple statements, and cost-based ones are generally more complex. In other words, ORACLE that conforms to rule-based queries will perform query conversion under any circumstances, and ORACLE that does not conform may consider cost-based query conversion. Heuristic query conversion has a long history and has fewer problems. Generally, the efficiency of query conversion is higher than that without query conversion. However, cost-based query conversion, because it is closely related to the CBO optimizer, was introduced in 10G and is very complex internally. Therefore, there are many bugs. In the daily optimization process, various difficult SQL often appear in query conversion failures, because once the query conversion fails, Oracle cannot convert the original SQL into a better-structured SQL (which is easier to be optimized). (processor processing), obviously there are much fewer execution paths to choose from. For example, if the subquery cannot be UNNEST, then it is often the beginning of disaster. In fact, what Oracle does most in query conversion is to convert various queries into JOIN methods, so that various efficient JOIN methods can be used, such as HASH JOIN.

There are more than 30 methods of query transformation. Some common heuristics and COST-based query transformations are listed below.

Heuristic query transformation (a series of RULE):

Many heuristic query transformations already exist in the RBO situation. Common ones are:

Simple View merge (simple view merge), SU (Subquery unnest subquery expansion), OJPPD (old style Join predicate push-down old join predicate push method), FPD (Filter push) -down filter predicate push), OR Expansion (OR expansion), OBYE (Order by Elimination), JE (Join Elimination connection elimination or table elimination in the connection), Transitive Predicate (predicate transfer) and other technologies.

COST-based query conversion (calculated by COST):

COST-based query conversion for complex statements, common ones are:

CVM (Complex view Merging complex View merging), JPPD (Join predicate push-down), DP (Distinct placement), GBP (Group by placement) and other technologies.

Through a series of query conversion technologies, the original SQL is converted into SQL that is easier for the optimizer to understand and analyze, so that more predicates, connection conditions, etc. can be used to achieve the purpose of obtaining the best plan. To query the conversion process, you can obtain detailed information through 10053. Whether the query conversion can be successful is related to the version, optimizer restrictions, implicit parameters, patches, etc.

Just search for query conversion on MOS, and a bunch of bugs will appear:

It turned out to be a Wrong result (wrong result). Encountering this kind of BUG is not a performance problem, but a serious data correctness problem. Of course, you can find it in MOS. There are a lot of bugs like this, but in actual applications, I believe you may encounter less. If one day you see that the result of a SQL query may be wrong, then you have to boldly question it. For a behemoth like Oracle, Generally speaking, when encountering problems, questioning is a very correct way of thinking. This kind of wrong result problem may be seen during the database major version upgrade process. There are two main types of problems:

The original result was correct, but now the result is wrong. --Encountered a new version BUG

Now the result is correct, the original result was wrong. --The new version fixes the old version BUG

The first situation is normal, the second situation may also exist. I have seen a customer questioning that the result after the upgrade is incorrect. , and after verification, it turned out that the execution plan of the old version was wrong, and the execution plan of the new version was correct, that is, it had been wrong for many years without being discovered. After the upgrade, it turned out to be correct, but they thought it was wrong.

When encountering wrong results, if it is not a non-core function, it may really be buried deep for many years.

Estimator(Estimator):

Obviously, the estimator will use statistical information (tables, indexes, columns, partitions, etc.) to estimate the corresponding execution plan operations selectivity, thereby calculating the cardinality of the corresponding operation, generating the COST of the corresponding operation, and finally calculating the COST of the entire plan. For the estimator, it is very important to estimate the accuracy of the model and the accuracy of the statistical information storage. The more scientific the estimated model is, the statistical information can reflect the actual data distribution and can cover more special data, then the generated COST will be more accurate.

However, this is not possible. There are many problems in the estimator model and statistical information. For example, when calculating selectivity for strings, ORACLE will internally convert strings to RAW types. , after converting the RAW type into a number, and then ROUND 15 digits from the left, the strings may be very different. Since the number exceeds 15 digits after conversion, the results may be similar after internal conversion. This ultimately results in inaccurate calculated selectivity.

Plan Generator( Plan Generator):

The plan generator analyzes various access paths, JOIN methods, and JOIN sequences to produce different execution plans. So if there is a problem with this part, the corresponding part may have insufficient algorithms or limitations. For example, if there are many JOIN tables, then the choice of various access sequences increases in a geometric progression. ORACLE has internal limit values, which means it is impossible to calculate them all.

For example, the HASH JOIN algorithm is generally the preferred algorithm for big data processing. However, HASH JOIN inherently has a limitation: HASH collision. Once a HASH collision is encountered, the efficiency will inevitably be greatly reduced.

The CBO optimizer has many limitations. For details, please refer to MOS: Limitations of the Oracle Cost Based Optimizer (Document ID 212809.1).

This section mainly shares cases of common daily optimizer problems. Some problems are not limited to CBO optimizer, because CBO is currently widely used. The optimizer, therefore, always incorporates the CBO problem.

FILTER operation is a common operation in the execution plan. There are two situations for this operation:

There is only one child node, then it is a simple filtering operation.

If there are multiple child nodes, it is a similar operation to NESTED LOOPS, but the difference from NESTED LOOPS is that FILTER will build a HASH table internally, and will not perform repeated matching LoopSearch, but use existing results to improve efficiency. But once there are few repeated matches and the number of loops is high, the FILTER operation will seriously affect performance, and your SQL may not be able to be executed for several days.

Let’s take a look at the FILTER operation under various circumstances:

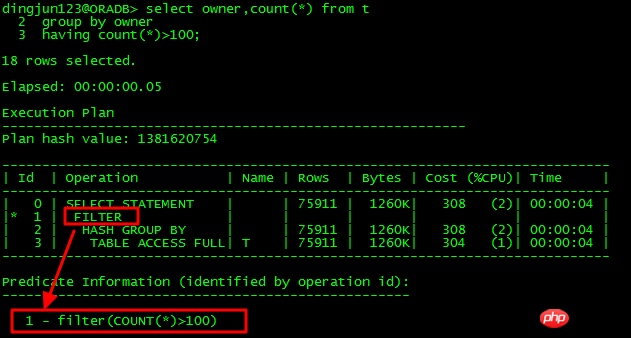

Single child node:

Obviously the ID The FILTER operation of =1 has only one child node ID=2. In this case, the FILTER operation is a simple filtering operation.

Multiple child nodes:

FILTER Multiple child nodes are often performance killers. They mainly occur when subqueries cannot be converted to UNNEST queries. Commonly encountered situations are NOT IN subqueries, subqueries and ORs. Continuous use, complex subqueries, etc.

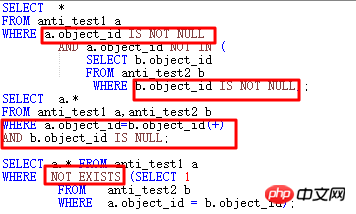

(1) FILTER in NOT IN subquery

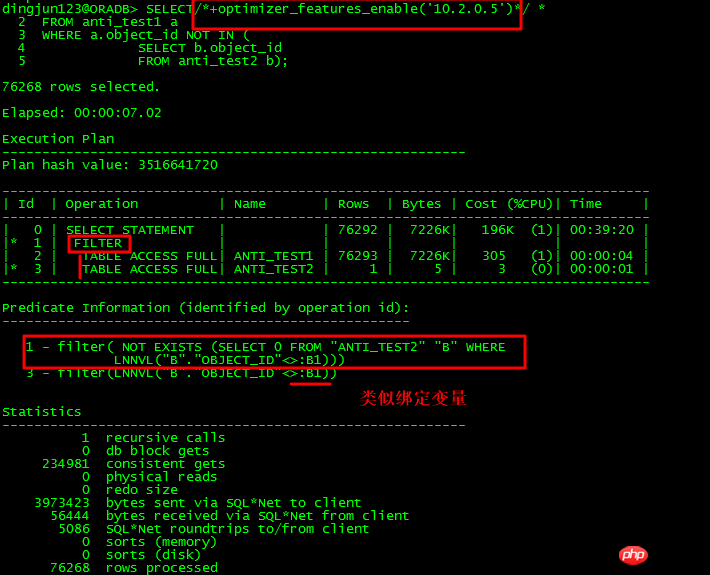

Let’s first look at the NOT IN situation:

For the above NOT IN subquery, if the subquery object_id has NULL, the entire query will have no results. Before 11g, if the main table and subtable If the object_id does not have NOT NULL constraints at the same time, or neither IS NOT NULL restrictions are added, ORACLE will use FILTER. 11g has a new ANTI NA (NULL AWARE) optimization that can UNNEST subqueries to improve efficiency.

For the unNEST subquery, use FILTER and have at least 2 child nodes. Another feature of the execution plan is that the Predicate part contains: B1, something similar to binding variables , the internal operation is similar to the NESTED LOOPS operation.

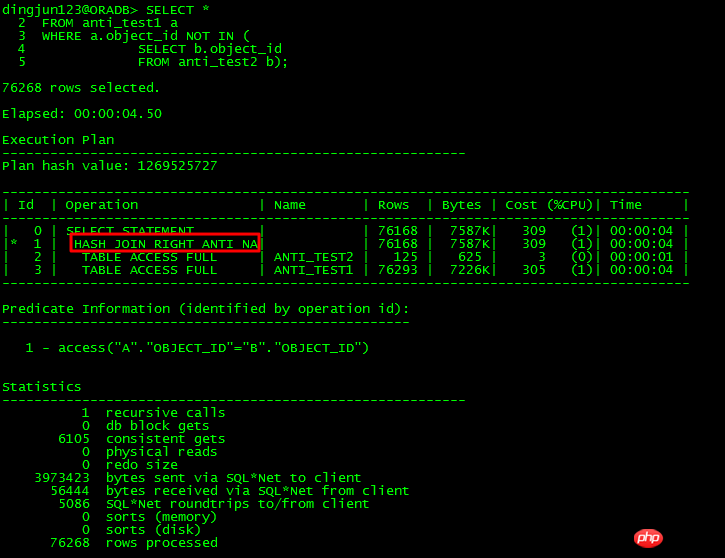

11g has NULL AWARE specifically optimized for the NOT IN problem, as shown below:

Through the NULL AWARE operation, NOT IN subqueries that cannot be UNNESTed It can be converted into JOIN form, so the efficiency is greatly improved. What should you do if you encounter NOT IN and cannot UNNEST before 11g?

Set the matching condition of the NOT IN part. For this example, both ANTI_TEST1.object_id and ANTI_TEST2.object_id are set to NOT NULL constraints.

If you do not change the NOT NULL constraint, you need to add IS NOT NULL conditions to both object_ids.

Change to NOT EXISTS.

Change to ANTI JOIN form.

The above four methods can achieve the purpose of making the optimizer use JOIN in most cases.

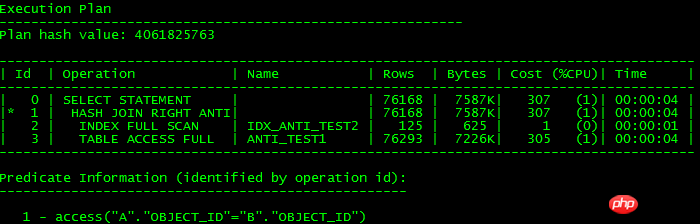

The above execution plans are the same, as shown below:

To put it bluntly, unnest subquery is conversion into JOIN form. If it can be converted into JOIN, you can use the efficient JOIN feature to improve operating efficiency. If it cannot be converted, use FILTER, which may affect efficiency. As can be seen from the execution plan of 11g's NULL AWARE, there is still a slight difference. There is no INDEX FULL SCAN scan, because there is no condition for ORACLE to know that object_id may exist NULL, so it cannot go to the index.

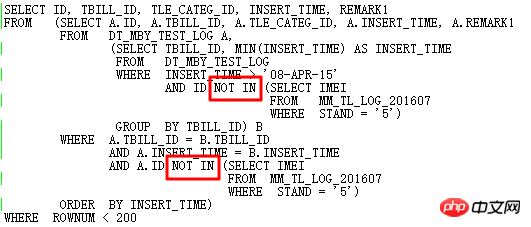

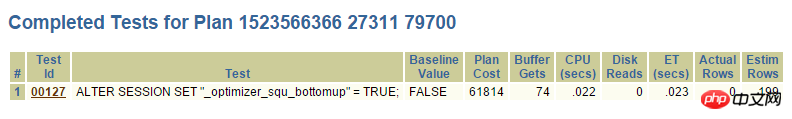

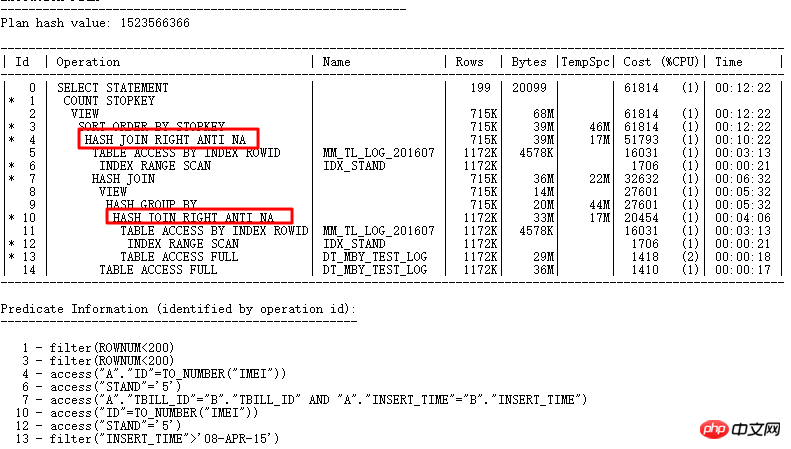

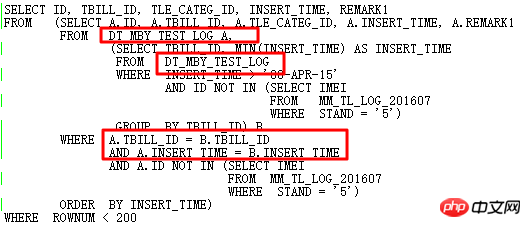

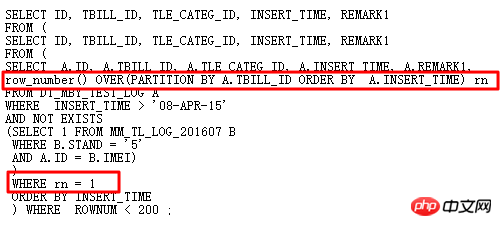

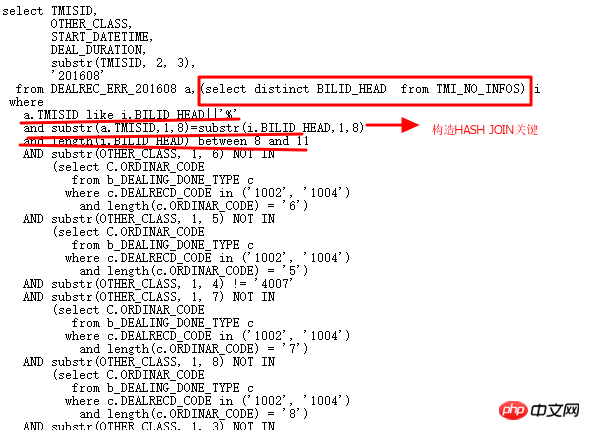

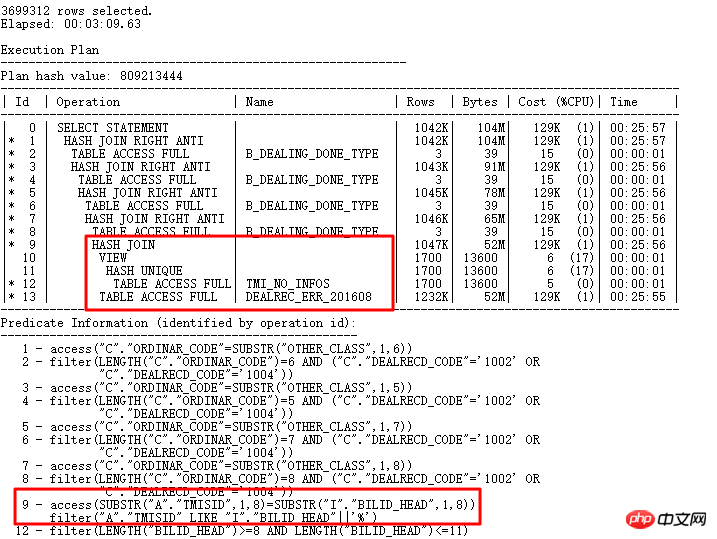

OK, now let’s talk about a case encountered during the database upgrade process. The background is that the following SQL has performance problems after upgrading from 11.2.0.2 to 11.2.0.4:

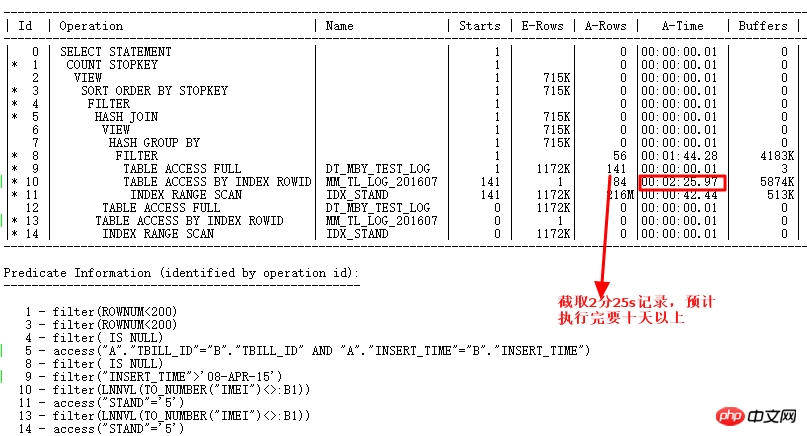

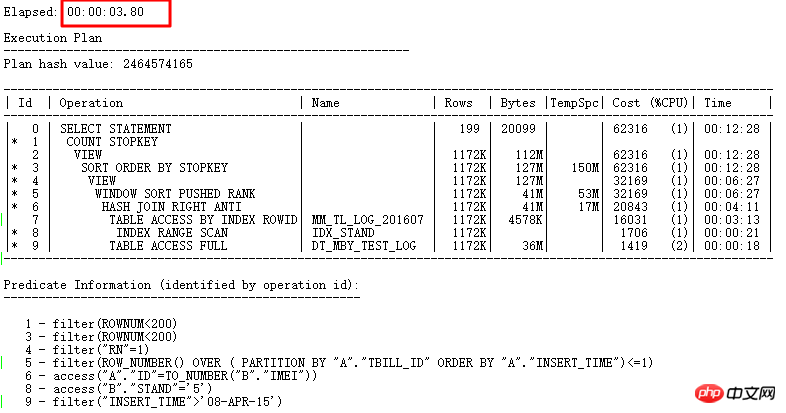

The execution plan is as follows:

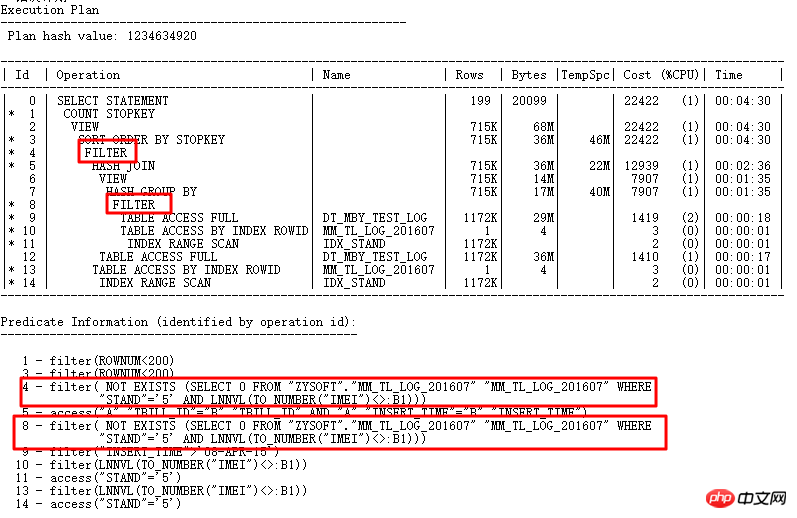

The two FILTERs ID=4 and ID=8 here both have 2 child nodes. Obviously, the NOT IN subquery cannot UNNEST. of. As mentioned above, NOT IN can be converted into NULL AWARE ANTI JOIN in 11g ORACLE CBO, and it can be converted on 11.2.0.2, but not on 11.2.0.4. How harmful are the two FILTER operations? You can see it by querying the actual execution plan:

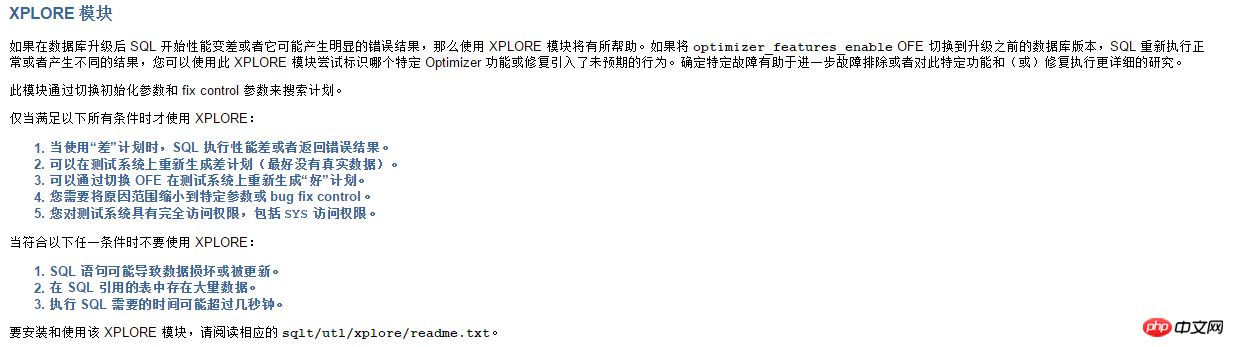

Use ALTER SESSION SET STATISTICS_LEVEL=ALL; intercept 2 minutes and 25 seconds of records to view In actual situation, the CARD=141 row with ID=9 step takes 2 minutes and 25s. The actual step is: 27w rows

, which means that this SQL will run for more than 10 days. Yes, it's simply too scary.

The analysis of this problem is as follows:

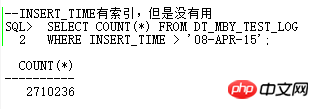

Query whether the implicit parameters related to NULL AWARE ANTI JOIN are valid

Is the collected statistical information valid?

Is it caused by a new version BUG or a parameter modification during the upgrade?

For the first situation:

The parameter is TRUE, obviously there is no problem.

For the second situation:

Collecting statistical information is found to be invalid.

At this time, we can only place our hope in the third situation: it may be a BUG or other parameters modified during the upgrade process that affect the inability to use NULL AWARE ANTI JOIN. There are so many ORACLE BUGs and parameters, so how do we quickly find out which BUG or parameter causes the root cause of the problem? Here I would like to share with you an artifact called SQLT, whose full name is (SQLTXPLAIN). This is a tool developed by ORACLE's internal performance department. It can be downloaded on MOS and has very powerful functions.

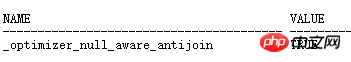

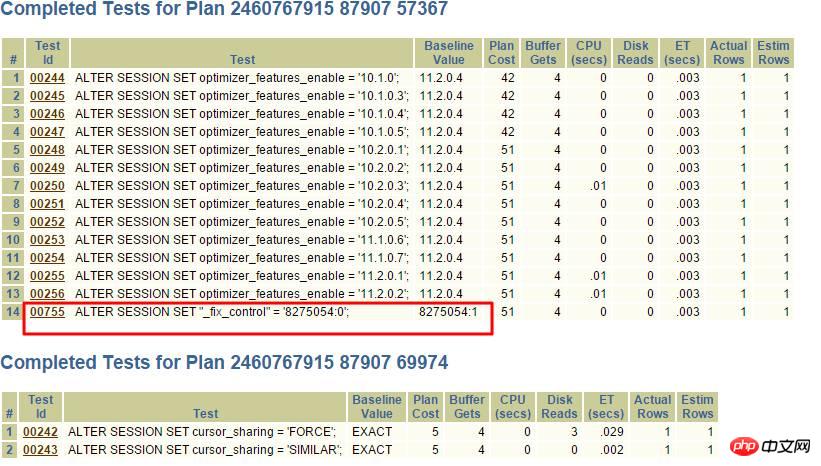

Back to the topic, now we need to find out whether the problem is caused by a new version BUG or a modified parameter. Then we need to use the advanced method of SQLT: XPLORE. XPLORE will continuously open and close various parameters in ORACLE to output the execution plan. Finally, we can find the matching execution plan through the generated report to determine whether it is a BUG problem or a parameter setting problem.

It is very simple to use. Refer to readme.txt and edit a separate file for the SQL that needs to be tested. Generally, we use the XPLAIN method for testing and call EXPLAIN PLAN FOR for testing, like this Ensure testing efficiency.

SQLT Find out the root cause of the problem:

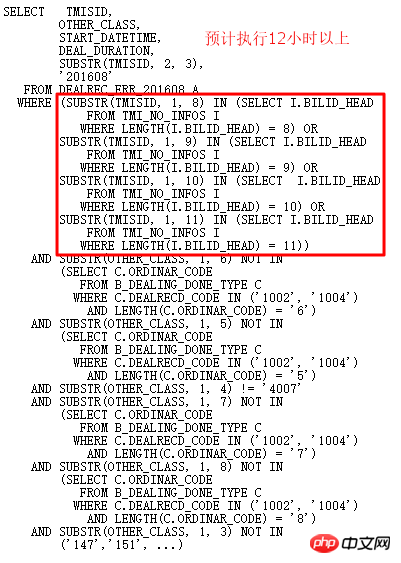

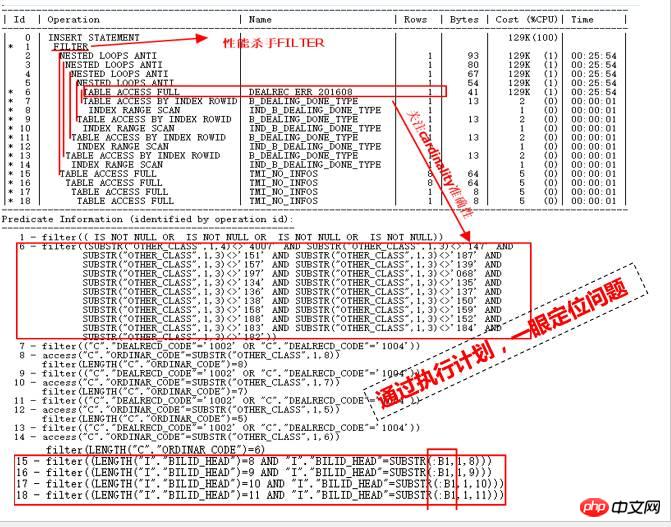

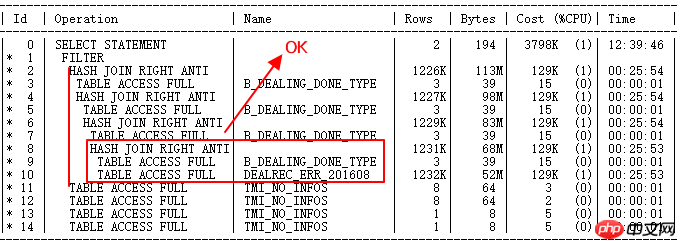

The main table DEALREC_ERR_201608 will return 2000w rows in the ID=6 query condition. It is estimated that there is only 1 row in the plan. Therefore, the NESTED LOOPS number will actually be executed tens of millions of times, resulting in low efficiency. HASH JOIN should be used. Need Update statistics.

In addition, ID=1 is FILTER, and its child nodes are ID=2 and ID=15, 16, 17, and 18. The same ID 15-18 has also been driven tens of millions of times.

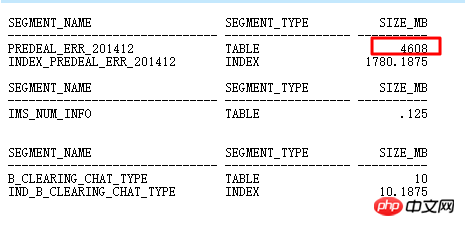

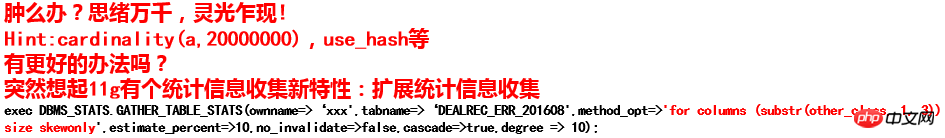

After finding out the root cause of the problem, solve it step by step. First, we need to solve the accuracy of the cardinality obtained by the query condition substr(other_class, 1, 3) NOT IN (‘147’, ‘151’, …) for the DEALREC_ERR_201608 table in the ID=6 part, that is, to collect statistical information.

However, it was found that using size auto and size repeat had no effect on collecting histograms for other_class. The query condition return estimate for other_class in the execution plan was still 1 (actual 20 million rows).

The execution plan after execution again is as follows:

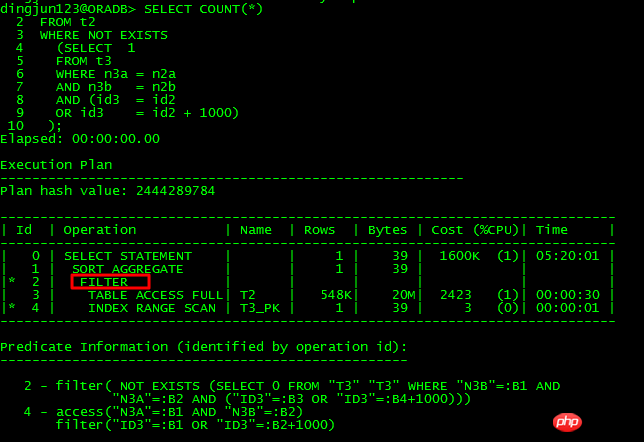

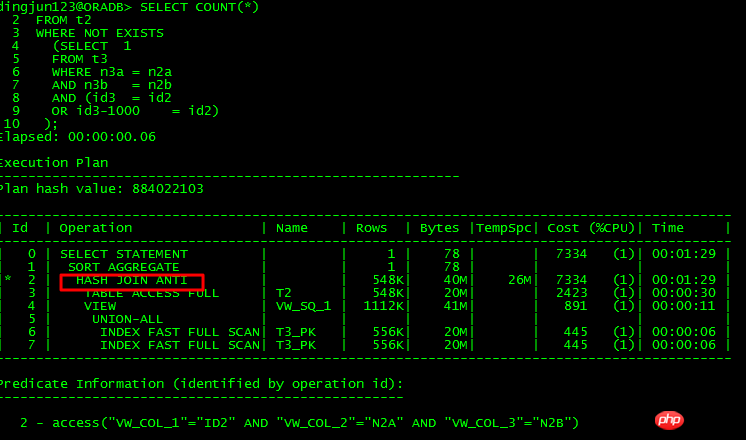

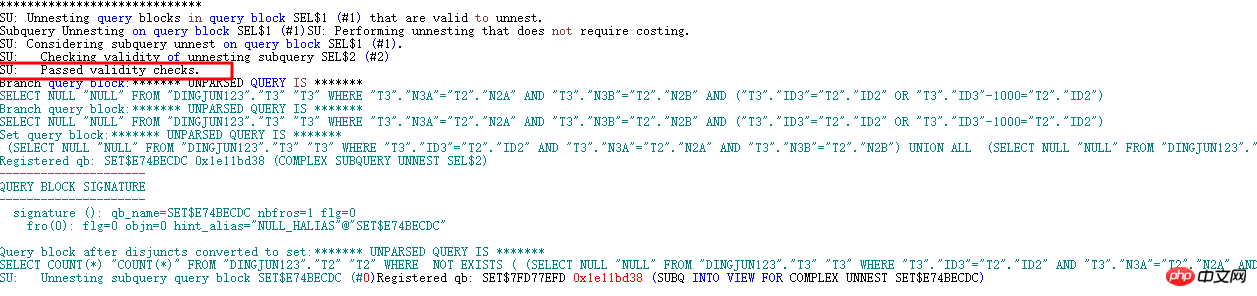

SU: SU bypassed: Invalid correlated predicates.

SU: Validity checks failed.

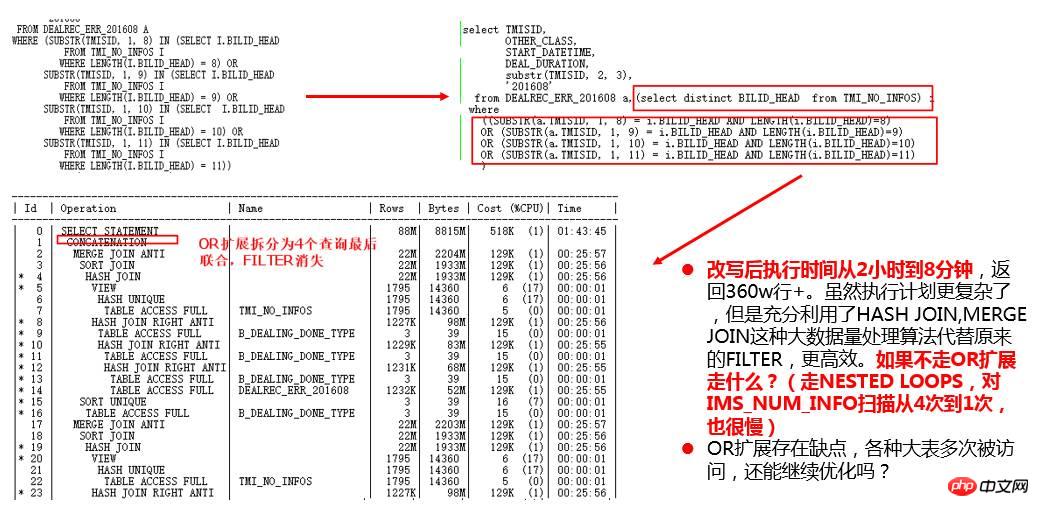

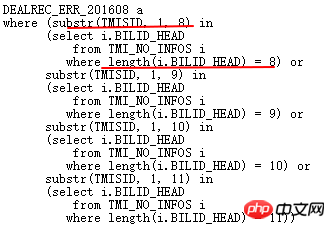

Can unnest appear:

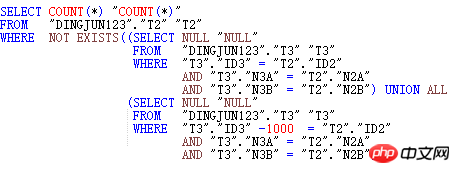

And rewrite the SQL as:

Finally, CBO first queries T3 conditions, makes a UNION ALL view, and then associates it with T2. From this point of view, the unnest requirements for the OR subquery are relatively strict. From the analysis of this statement, ORACLE can perform unnest without requiring operations on the main table columns. The optimizer itself did not shift the +1000 condition to the left. Because Strict, so in most cases, the OR subquery cannot be unnest, causing various performance problems.

(3) FILTER-like problems

FILTER-like problems are mainly reflected in UPDATE related updates and scalar subqueries. Although the FILTER keyword does not appear explicitly in such SQL statements, the internal The operation is exactly the same as the FILTER operation.

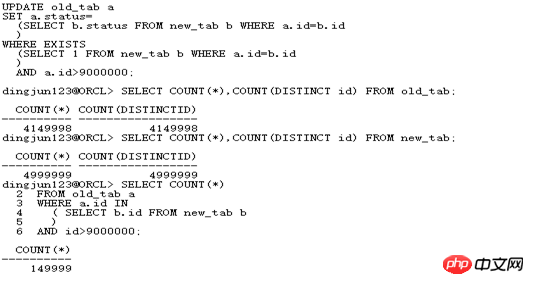

First look at the UPDATE association update:

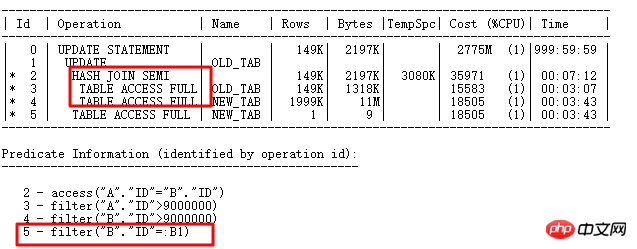

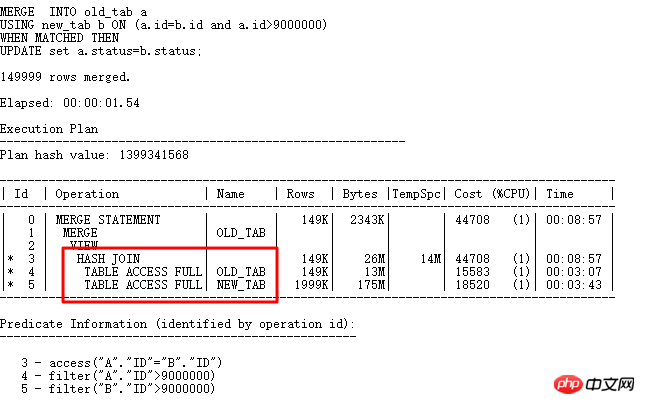

14999 rows need to be updated here, and the execution plan is as follows:

The ID=2 part is the where exists selection part. First query the conditions that need to be updated, and then execute the UPDATE associated subquery update. You can see that the binding variable appears in the ID=5 part: B1. Obviously the UPDATE operation is similar to In the original FILTER, for each selected row and the query associated with the subquery table NEW_TAB, if the ID column has fewer repeated values, the subquery will be executed a lot, which will affect the efficiency. That is, the operation with ID=5 will need to be executed a lot. Second-rate.

Of course, the field ID here is very unique. You can create UNIQUE INDEX and ordinary INDEX lights, so that the index can be used in step 5. Here is an example of this UPDATE optimization method. Without building an index, you can also handle such UPDATE: MERGR and UPDATE INLINE VIEW methods.

Use HASH JOIN directly in MERGE to avoid multiple access operations, thereby greatly increasing efficiency. Let’s take a look at how to write UPDATE LINE VIEW:

UPDATE

(SELECT a.status astatus,

b.status bstatus

FROM old_tab a,

new_tab b

WHERE a.id=b .id

AND a.id >9000000

)

SET astatus=bstatus;

Requires b.id to be a preserved key (unique index, Unique constraint, primary key), 11g bypass_ujvc will report an error, similar to the MERGE operation.

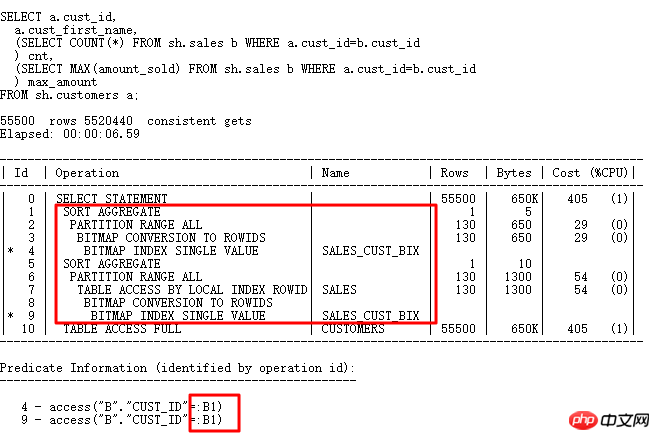

Let’s take a look at scalar subqueries. Scalar subqueries are often the killer that causes serious performance problems:

The plan of scalar subqueries and the execution of ordinary plans The order is different. Although the scalar subquery is on top, it is driven by the results of the CUSTOMERS table below. Each row drives a scalar subquery query (with the exception of CACHE), which is also similar to the FILTER operation.

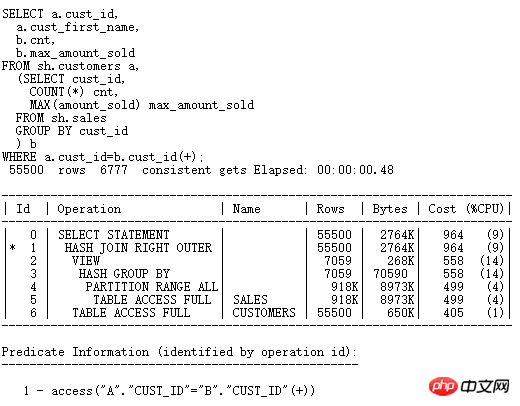

If you want to optimize the scalar subquery, you usually need to rewrite the SQL and change the scalar subquery into an outer join form (it can also be rewritten into a normal JOIN if the constraints and business are satisfied):

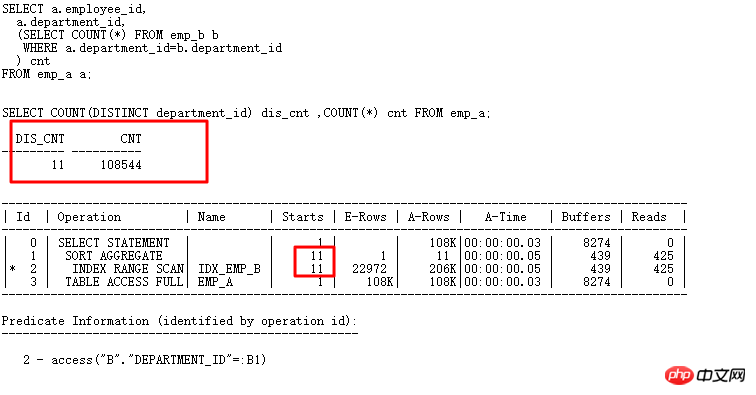

After rewriting, the efficiency is greatly increased, and the HASH JOIN algorithm is used. Let's take a look at CACHE in the scalar subquery (FILTER and UPDATE related updates are similar). If the associated column has a lot of repeated values, the subquery will be executed less often, and the efficiency will be better at this time:

The scalar subquery is the same as FILTER and has CACHE. For example, the above emp_a has 108K rows, but the repeated department_id is only 11. In this way, the query only scans 11 times, and the number of times the subquery table is scanned is small. , the efficiency will be improved.

Regarding the FILTER performance killer problem, I will mainly share these three points. Of course, there are many other points worth noting, which require us to pay more attention and accumulation on a daily basis, so as to become familiar with how to deal with some problems of the optimizer.

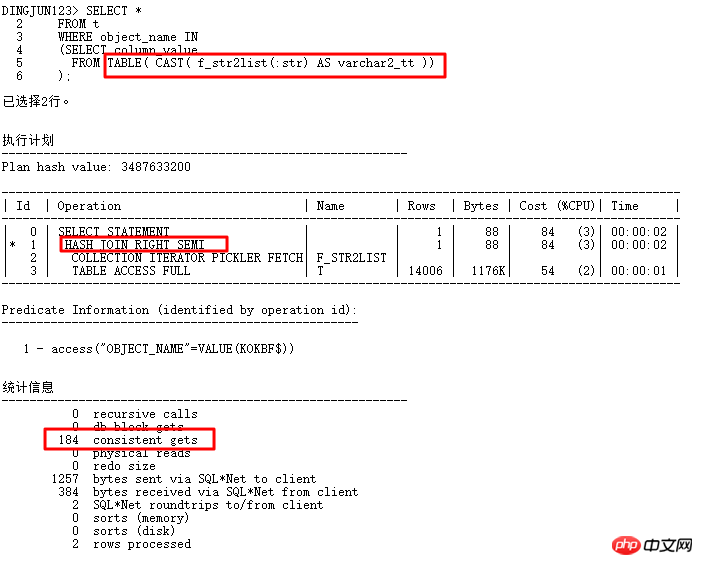

This problem comes from the binding in list problem. Use the TABLE function to construct the incoming comma-separated values as a subquery. Conditions, generally the front-end passes in less values, but in fact, after the HASH JOIN operation, the T table index cannot be used. Once the execution frequency is high, it will inevitably have a greater impact on the system. Why does ORACLE not know that the TABLE function passes in very few values? What's the value?

further analysis:

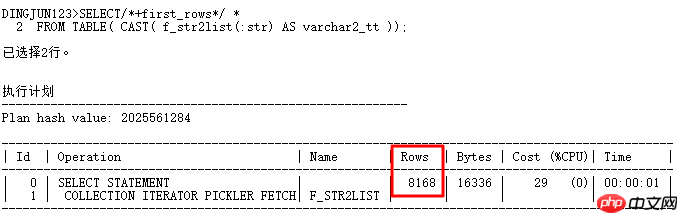

It can be seen from the above results that the default number of rows of the TABLE function is 8168 rows (the pseudo table created by the TABLE function has no statistical information). This value is not small, and is generally much more than the number of rows in actual applications. , often causing the execution plan to go through hash join instead of nested loop. How to change this situation? Of course, you can change the execution plan through hint prompts. For where in list, commonly used hints are:

first_rows, index, cardinality, use_nl, etc.

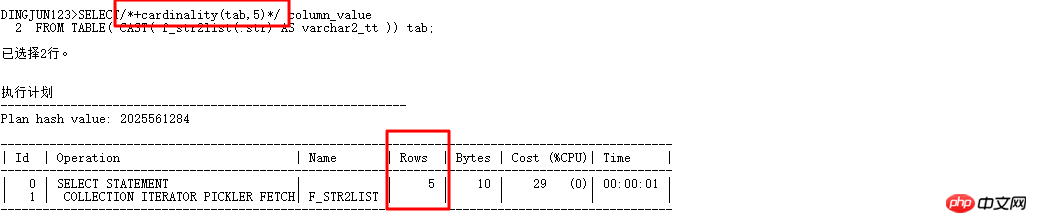

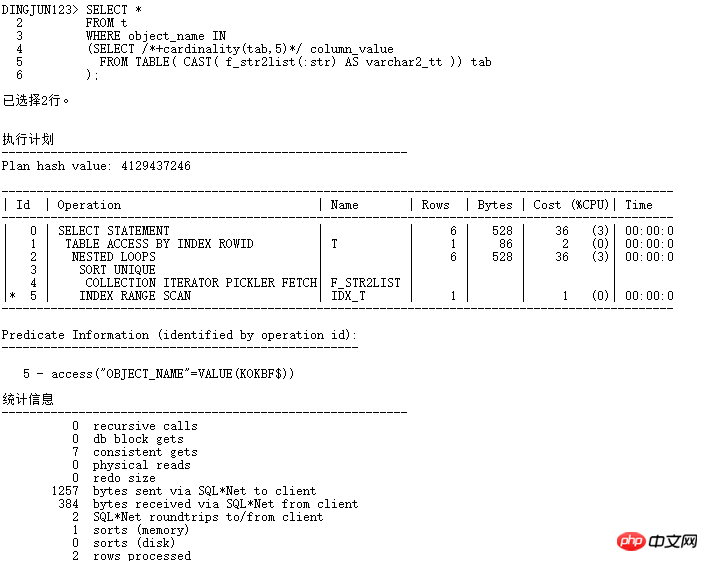

Here is a special introduction to cardinality(table|alias,n). This hint is very useful. It can make the CBO optimizer think that the number of rows in the table is n, so that the execution plan can be changed. Now rewrite the above query:

Added cardinality(tab,5) to automatically run the CBO optimizer. The optimizer regards the cardinality of the table as 5, and the previous where in When the list query base defaults to 8168, hash join is used. Now that cardinality is available, try it quickly:

Now use NESTED LOOPS operation, and child nodes can use INDEX RANGE SCAN, the logical read changes from 184 to 7, and the efficiency is improved dozens of times. Of course, in actual applications, it is best not to add hints, and you can use SQL PROFILER binding.

Oracle’s internal calculation of selectivity is calculated in numeric format. Therefore, when encountering a string type, the string will be converted into a RAW type, and then Convert the RAW type to a number, and ROUND to 15 digits from the left. If the converted number is very large, the original strings may be quite different, but the internally converted numbers are relatively close, which may cause inaccurate selective calculations. . For example:

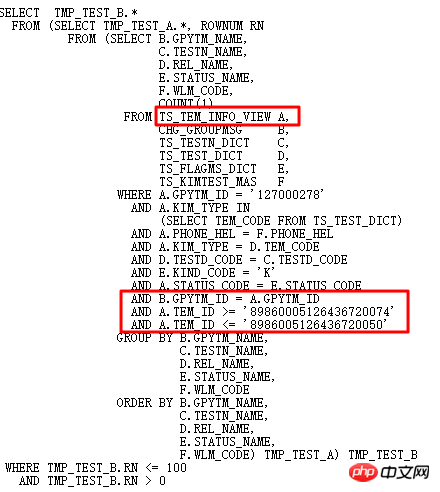

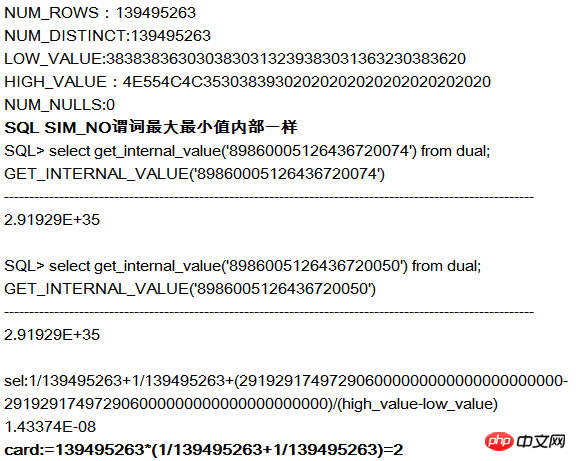

The execution plan is as follows:

The SQL execution plan uses the TEM_ID index and needs to run for more than 1 hour. The cardinality of the corresponding steps in the plan is very small (tens of levels), but the actual cardinality is very large (millions of levels), and the judgment statistical information is wrong.

Why go to the wrong index?

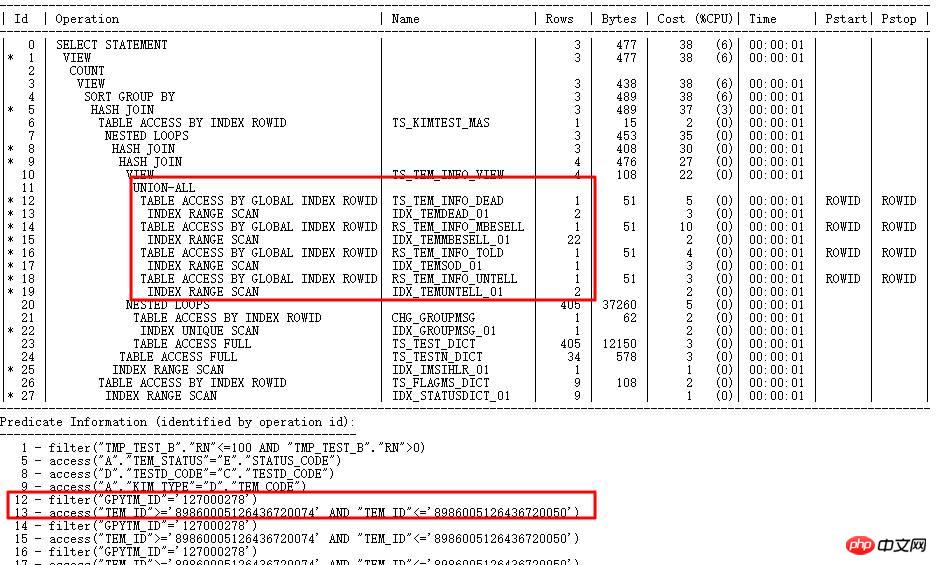

Since TEM_ID is a CHAR string type with a length of 20, CBO's internal calculation selectivity will first convert the string to RAW, and then convert RAW to a number, ROUND 15 digits from the left. Therefore, it is possible that the string values are very different, and the values are similar after conversion to numbers (because more than 15 digits are padded with 0), resulting in selective calculation errors. Take the TEM_ID column in TS_TEM_INFO_DEAD as an example:

The actual number of rows queried according to the conditions is 29737305. Therefore, the index goes wrong.

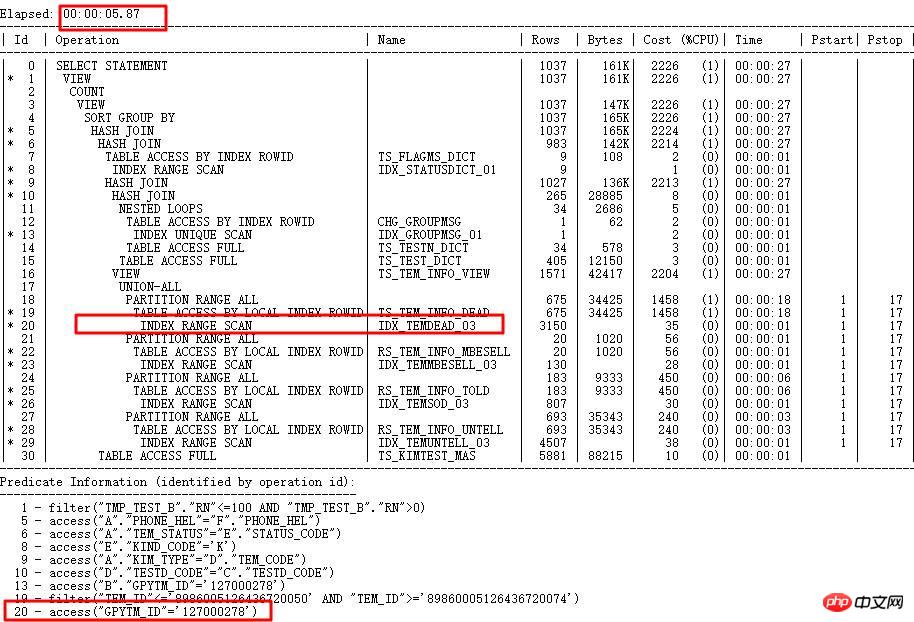

Solution:

Collect the TEM_ID column histogram. Due to certain limitations of the internal algorithm, strings with different values may have the same internally calculated values. Therefore, after collecting the histograms, for the string The values are different, but they are the same after being converted into numbers. ORACLE will store the actual value in ENDPOINT_ACTUAL_VALUE for verification and improve the accuracy of the execution plan. After correctly indexing GPYTM_ID, the running time ranges from more than 1 hour to less than 5 seconds.

Each version will introduce many new features. Improper use of new features may cause some serious problems. Common ones such as ACS and cardinality feedback lead to frequent changes in execution plans, affecting efficiency, and too many sub-cursors. Therefore, new features need to be used with caution, including the aforementioned 11g null aware anti join, which also has many bugs.

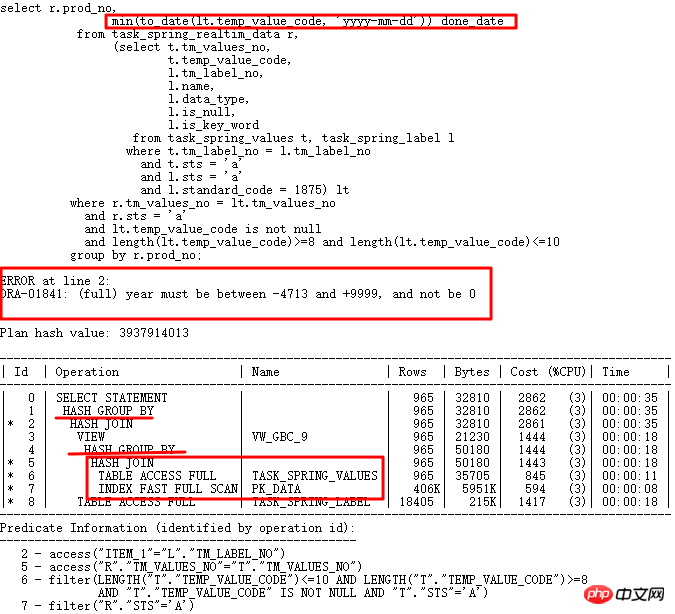

The case to be analyzed today is the SQL encountered during the major version upgrade from 10g to 11g. It ran normally in 10g, but executed incorrectly in 11g. The SQL is as follows:

10g is normal. After upgrading to 11g r2, the date conversion error occurs. temp_value_code stores multiple format strings. Correct execution plan LT related query is executed first and then related to the table. The wrong execution plan is that TASK_SPRING_VALUES is first associated with the table and then grouped, as a VIEW and then associated with TASK_SPRING_LABEL, and then grouped again. There are 2 GROUP BY operations here, which is different from the 10g execution plan with only 1 GROUP BY operation, which eventually leads to an error.

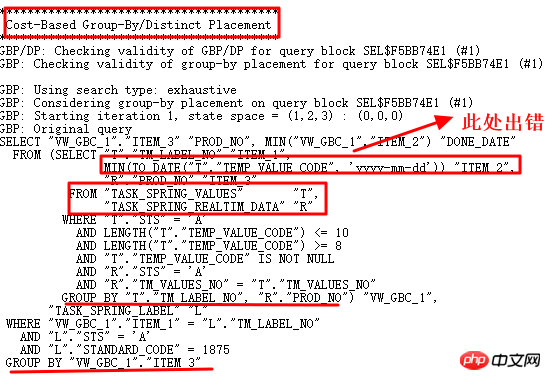

Obviously, we need to study why there are two GROUP BY operations. The first choice is 10053:

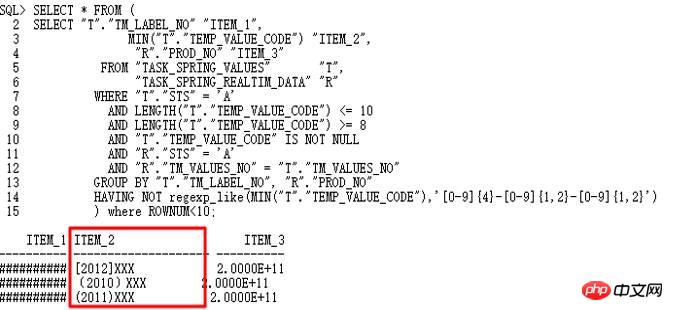

Analyze the operation according to 10053 and see if a non-date format is found. value:

A non-yyyy-mm-dd format string was indeed found, therefore, the to_date operation failed. As can be seen from 10053, the Group by/Distinct Placement operation is used here. Therefore, it is necessary to find the corresponding control parameters and turn off this query conversion.

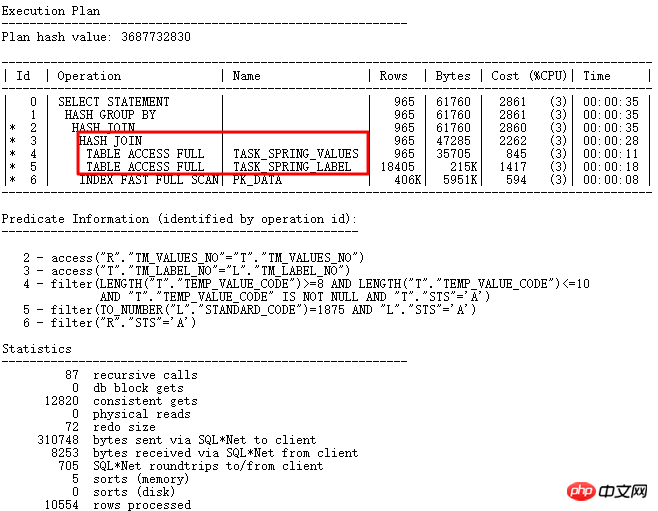

Correct after turning off GBP implicit parameters: _optimizer_group_by_placement. The correct execution plan is as follows:

Thinking: The essence of this problem lies in the unreasonable design of field usage, in which temp_value_code is used as varchar2 to store ordinary characters, numeric characters, and date format yyyy-mm -dd, there are to_number, to_date and other conversions in the program, which are very dependent on the order of table connections and conditions in the execution plan. Therefore, good design is very important, especially to ensure the consistency of the associated field types and the single role of the fields, and to meet the requirements of the paradigm.

Well-structured SQL can be easier to understand by CBO, so that it can better perform query conversion operations, thereby laying the foundation for subsequent generation of the best execution plan, and then practical application During the process, CBO was unable to do anything because it did not pay attention to SQL writing. The following is a case study of pagination writing.

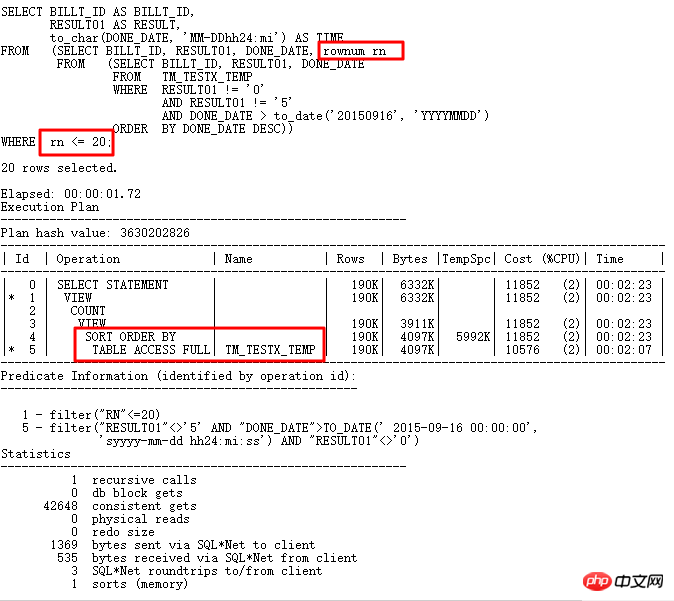

Inefficient paging writing method:

#Original writing method The innermost layer is queried based on use_date and other conditions, then sorted, rownum is obtained and aliased, and the outermost layer is used rnlaw. What's the problem?

If you write paging directly <,<=, you can get the rownum directly after sorting (two levels of nesting). If you need to get the interval value, get >,>= at the outermost level (three levels of nesting) nested).

This statement obtains <= and uses three levels of nesting, which makes it impossible to use the paging query STOPKEY algorithm because rownum will prevent predicate push, resulting in no STOPKEY operation in the execution plan.

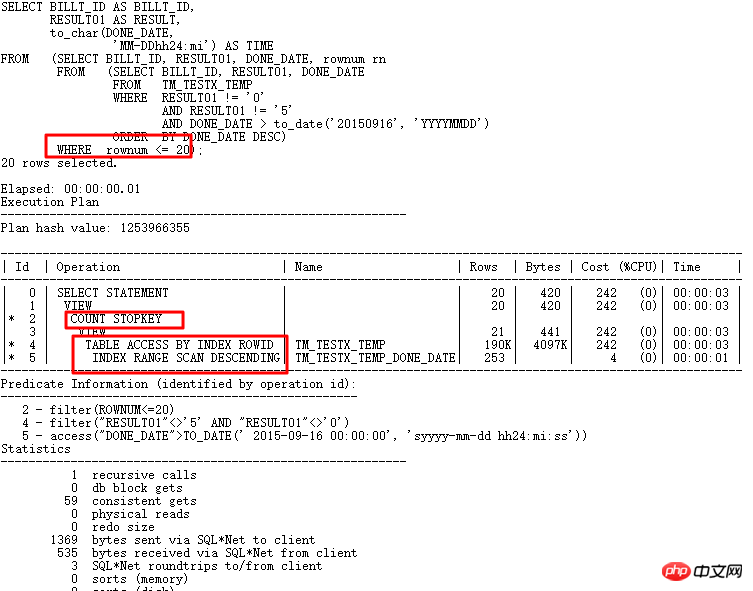

<=Paging only requires 2 levels of nesting, and the done_date column has an index. According to the condition done_date>to_date('20150916','YYYYMMDD') and only the first 20 rows are obtained, the index and STOPKEY algorithm can be efficiently utilized , after the rewriting is completed, index descending scan is used, the execution time is from 1.72s to 0.01s, and the logical IO is from 42648 to 59, as follows:

The efficient paging method should comply with the specification. And can make full use of indexes to eliminate sorting.

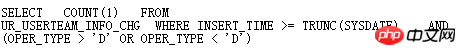

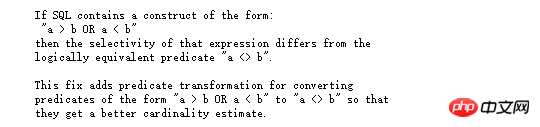

CBO BUG appears more often in query conversion. Once a BUG occurs, it may be difficult to find. At this time, you should quickly analyze 10053 or use SQLT XPLORE Find the source of the problem. For example:

The oper_type of this table has an index, and the condition oper_type>'D' or oper_type<'D' is better to use the index, but in fact Oracle has gone Full table scan, quick analysis through SQLT

#Obviously, _fix_control=8275054 is very suspicious. By querying MOS:

7 HASH collision problem

7 HASH collision problem

HASHJOIN is an efficient algorithm specially used for big data processing, and can only be used for equivalent join conditions, for table build table (hash table) and probe table Construct a HASH operation to find the result set that meets the conditions.

The general format is as follows:

Let’s use a small example to analyze hash collision:

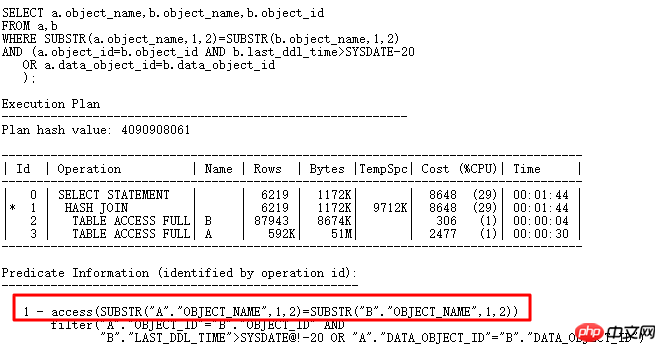

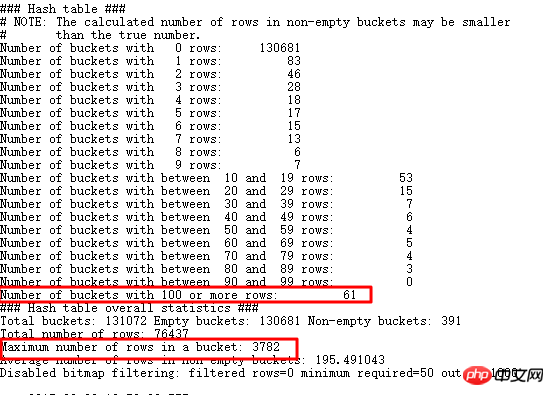

There are 61w multiple records in table a, and 7w multiple records in table b. This SQL result returns 80,000 multiple records. From the execution plan, there is no problem with HASH JOIN operation. However, the actual execution of this SQL was not completed for more than 10 minutes. The efficiency was very low, and the CPU usage increased suddenly, which was much longer than the time to access the two tables.

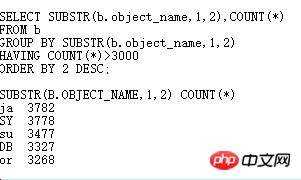

If you understand HASHJOIN, at this time, you should consider whether you have encountered hash collision. If a large amount of data is stored in many buckets, then searching for data in such hash buckets is similar to nested loops. , the efficiency will inevitably be greatly reduced. Further analysis is as follows:

Look for values that are greater than 3000 duplicate data. Sure enough, there are many. Of course, there are also many larger remaining data. Detection HASH JOIN, you can use EVENT 10104:

You can see that there are 61 buckets that store 100 rows+, and the largest bucket stores 3782 items, that is, and What we found out is consistent. Let’s go back to the original SQL:

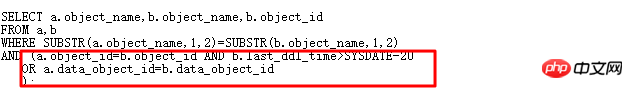

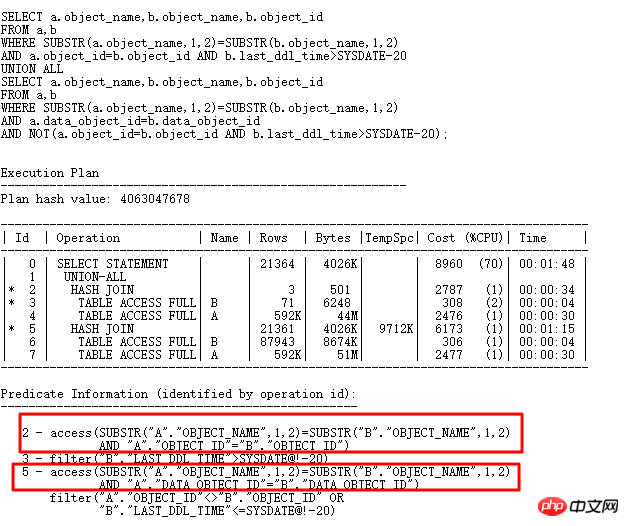

Why did Oralce choose substr(b.object_name,1,2) to build the HASH table? If OR can be expanded and the original SQL is changed to a UNION ALL form, then the HASH table It can be constructed using substr(b.object_name,1,2), b.object_id and data_object_id. Then the uniqueness must be very good, which should solve the hash collision problem. Rewrite it as follows:

The current SQL execution time has gone from the original 10 minutes with no results to 4 seconds after execution. Let’s look at the internally constructed HASHTABLE information:

The one with the most Only 6 pieces of data are stored in the bucket, so the performance must be much better than before. Hash collisions are very harmful. In practical applications, they may be more complicated. If you encounter hash collision problems, the best way is to rewrite the SQL. Try to analyze it from a business perspective and see if you can add other more selective columns for JOIN. .

Looking back, since I know that after rewriting it to UNION ALL, I will use 2 combined columns to build a better HASH table, so why doesn't Oracle do this? It's very simple. I'm just doing this deliberately as an example to illustrate the problem of HASH collision. For this kind of simple SQL, if you have more selective columns and collect statistical information, Oracle can OR expand the SQL. .

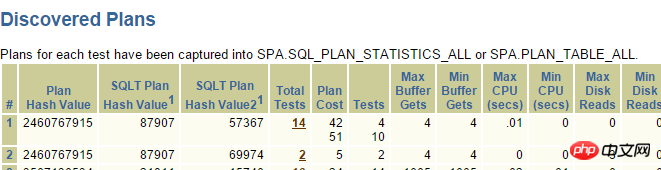

There are many SQLs in application systems. If you always play the role of a firefighter to solve online problems, it will obviously not be able to meet the rapid development of today's IT systems. According to the needs of database-based systems, the main performance problem lies in SQL statements. If the SQL statements can be reviewed during the development and testing phase, the SQL to be optimized can be found, and intelligent prompts can be given to quickly assist optimization, then numerous online question. In addition, online SQL statements can also be continuously monitored to promptly detect statements with performance problems, thereby achieving the purpose of full lifecycle management of SQL.

To this end, the company combined many years of operation and maintenance and optimization experience to independently develop SQL audit tools, which greatly improves the efficiency of SQL audit optimization and performance monitoring processing.

SQL audit tool adopts a four-step rule: SQL collection - SQL analysis - SQL optimization - online tracking. The four-step SQL audit method is different from the traditional SQL optimization method. It focuses on SQL analysis and optimization before the system goes online. Focus on solving SQL problems before the system goes online and nip performance problems in their infancy. As shown in the figure below:

The following problems can be solved through the SQL performance management platform:

Before: SQL performance audit before going online, nip performance problems in their infancy;

During: SQL performance monitoring processing, timely discover changes in SQL performance after going online, in SQL performance When changes occur and do not cause serious problems, resolve them in a timely manner;

Afterwards: TOPSQL monitoring and timely alarm processing.

SQL performance management platform realizes 360-degree full life cycle management and control of SQL performance, and through various intelligent prompts and processing, most of the performance problems originally caused by SQL are solved. Solve problems before they occur and improve system stability.

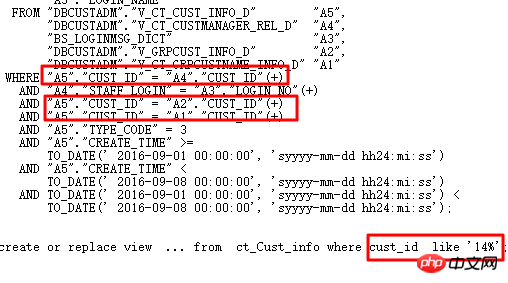

The following is a typical case of SQL audit:

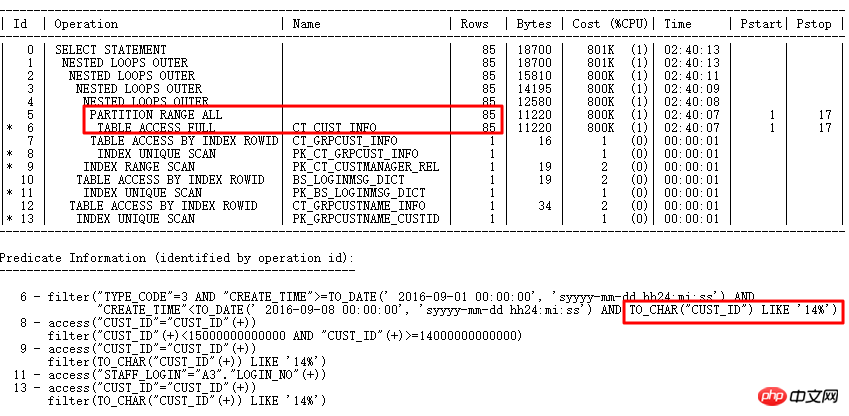

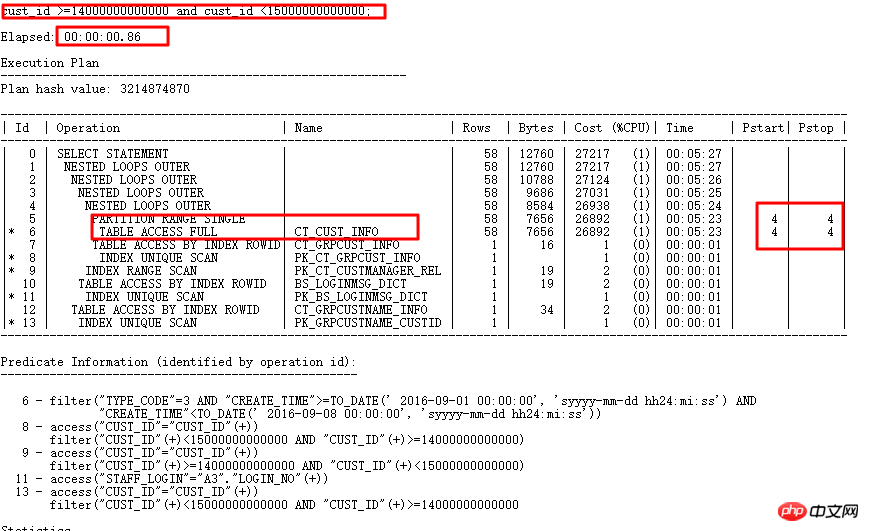

The execution plan is as follows:

The original SQL executes for 1688s. Accurately find optimization points through SQL audit intelligent optimization - partition columns have type conversion. 0.86s after optimization.

SQL audit is a module of the Xinju Database Performance Management Platform DPM. If you want to know more about DPM, you can join Master Zou Deyu (WeChat: carydy) for communication and discussion.

Today I mainly share with you some problems existing in the Oracle optimizer and solutions to common problems. Of course, the optimizer problems are not limited to those shared today. Although CBO is very powerful and has been greatly improved in 12c, However, there are many problems. Only by accumulating and observing more in daily life and mastering certain methods can we strategize after encountering problems and win the battle.

Q&A

Q1: Is hash join sorted? Can you briefly explain the principle of hash join?

A1: ORACLE HASH JOIN itself does not require sorting, which is one of the features that distinguishes SORTMERGE JOIN. The principle of ORACLE HASH JOIN is relatively complicated. You can refer to the HASH JOIN part of Jonathan Lewis's Cost-Based Oracle Fundamentals. The most important thing for HASH JOIN is to figure out when it will be slow based on the principle. For example, HASH_AREA_SIZE is too small and HASH TABLE cannot be completely placed. In the memory, disk HASH operation will occur, and the HASH collision mentioned above will occur.

Q2: When not to index?

A2: There are many cases where indexing is not used. The first reason is that the statistical information is inaccurate. The second reason is that the selectivity is too low. Indexing is less efficient than full scanning. Also A common problem is that operations are performed on index columns, resulting in the inability to index. There are many other reasons why the index cannot be used. For details, please refer to the MOS document: Diagnosing Why a Query is Not Using an Index (Document ID 67522.1).

The above is the detailed content of Solve the SQL optimization problem of CBO (detailed explanation with pictures and texts). For more information, please follow other related articles on the PHP Chinese website!