I woke up this morning with nothing to do, and out of nowhere a joke from Qiushibaike (the "Encyclopedia of Embarrassing Things") popped up. Since the site had sent it to me, I figured I would write a crawler for it: first, as a way to practice my skills, and second, for a bit of fun.

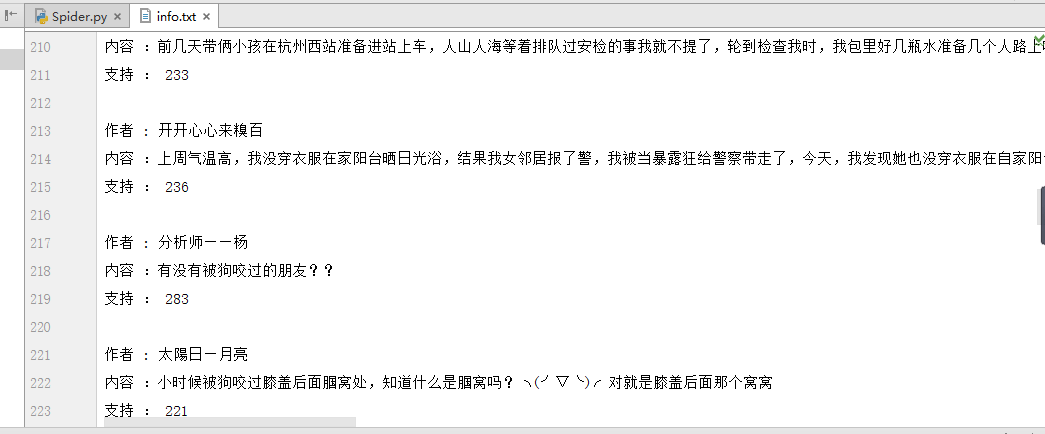

As it happens, I have been learning about databases over the past two days, and the crawled data can be saved to a database for later use. Enough chatter; let's take a look at the data the program crawled.
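As a rough sketch of what saving the crawled data to a database could look like: the post does not say which database was used, so this assumes SQLite through Python's built-in `sqlite3` module. The table name `jokes` and the helpers `save_jokes` and `count_jokes` are hypothetical names chosen for illustration.

```python
import sqlite3


def save_jokes(conn, records):
    """Insert (author, content, votes) tuples into a hypothetical `jokes` table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS jokes ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "author TEXT, content TEXT, votes INTEGER)"
    )
    # Placeholders (?) let sqlite3 handle quoting of the scraped text.
    conn.executemany(
        "INSERT INTO jokes (author, content, votes) VALUES (?, ?, ?)",
        records,
    )
    conn.commit()


def count_jokes(conn):
    """Return how many rows are stored in the `jokes` table."""
    return conn.execute("SELECT COUNT(*) FROM jokes").fetchone()[0]


if __name__ == "__main__":
    # An in-memory database keeps the example self-contained;
    # a file path such as "jokes.db" would persist the data instead.
    conn = sqlite3.connect(":memory:")
    save_jokes(conn, [("alice", "first joke", 12), ("bob", "second joke", 3)])
    print(count_jokes(conn))
    conn.close()
```

Keeping the insert logic in its own function means the crawler only has to hand over a list of tuples, whichever database ends up behind it.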

It is worth mentioning that I originally wanted the program to crawl 30 pages of Qiushibaike in one go, but that produced a connection error. When I reduced the count to 20 pages, the program ran normally. I don't know the reason; if anyone who does know can tell me, I would be very grateful.
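The cause of the connection error is not known. One guess, and only a guess, is that the server drops connections when many requests arrive in quick succession. Under that assumption, a small retry wrapper with a pause between attempts is one thing to try. The helper name `fetch_with_retry` and its parameters are hypothetical, and `fetch` stands in for a function such as `requests.get`.

```python
import time


def fetch_with_retry(fetch, url, retries=3, delay=1.0):
    """Call fetch(url); on failure, pause and try again up to `retries` times."""
    for attempt in range(1, retries + 1):
        try:
            return fetch(url)
        except Exception:
            # Broad on purpose for the sketch; with requests one would likely
            # narrow this to requests.exceptions.ConnectionError.
            if attempt == retries:
                raise  # out of attempts: let the caller see the error
            time.sleep(delay * attempt)  # wait a little longer after each failure


if __name__ == "__main__":
    attempts = []

    def flaky(url):
        # Stand-in for a network call that fails twice, then succeeds.
        attempts.append(url)
        if len(attempts) < 3:
            raise IOError("connection error")
        return "page body for " + url

    print(fetch_with_retry(flaky, "http://www.qiushibaike.com/text/page/1/", delay=0))
```

If the error persists even with retries and pauses, the throttling guess is probably wrong and the traceback is the next place to look.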

The program is very simple, so here is the source code:

```python
# coding=utf8
# Python 2 script (uses reload(sys) and sys.setdefaultencoding).
import re
import requests
from lxml import etree
from multiprocessing.dummy import Pool as ThreadPool
import sys

reload(sys)
sys.setdefaultencoding('utf-8')


def getnewpage(url, total):
    # Build the page URLs from the page number found in `url` up to `total`.
    nowpage = int(re.search(r'(\d+)', url, re.S).group(1))
    urls = []
    for i in range(nowpage, total + 1):
        # Swap the page number in the URL for i.
        link = re.sub(r'(\d+)', '%s' % i, url)
        urls.append(link)
    return urls


def spider(url):
    # Fetch one page and append each post's author, content and votes to the file.
    html = requests.get(url)
    selector = etree.HTML(html.text)
    author = selector.xpath('//*[@id="content-left"]/p/p[1]/a[2]/@title')
    content = selector.xpath('//*[@id="content-left"]/p/p[2]/text()')
    vote = selector.xpath('//*[@id="content-left"]/p/p[3]/span/i/text()')
    length = len(author)
    for i in range(0, length):
        f.writelines('作者 : ' + author[i] + '\n')  # author
        f.writelines('内容 :' + str(content[i]).replace('\n', '') + '\n')  # content
        f.writelines('支持 : ' + vote[i] + '\n\n')  # votes


if __name__ == '__main__':
    f = open('info.txt', 'a')
    url = 'http://www.qiushibaike.com/text/page/1/'
    urls = getnewpage(url, 20)  # pages 1 through 20
    pool = ThreadPool(4)  # four worker threads
    pool.map(spider, urls)
    f.close()
```
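In the listing above, all four worker threads write to the same file object, so the three lines of one record may end up interleaved with lines from another page. One possible alternative, sketched here in Python 3, is to have each worker return its records and write them from the main thread. `crawl_all`, `format_record` and `parse_page` are hypothetical names, not part of the original script. `parse_page` stands for a variant of `spider` that returns a list of `(author, content, vote)` tuples instead of writing them.

```python
from multiprocessing.dummy import Pool as ThreadPool


def format_record(author, content, vote):
    """Render one post in the same three-line layout the script writes."""
    return '作者 : {}\n内容 :{}\n支持 : {}\n\n'.format(
        author, content.replace('\n', ''), vote)


def crawl_all(urls, parse_page, workers=4):
    """Run parse_page over urls in a thread pool; return all records in page order."""
    pool = ThreadPool(workers)
    try:
        pages = pool.map(parse_page, urls)  # map keeps results in the order of urls
    finally:
        pool.close()
        pool.join()
    # Flatten the per-page lists into a single list of records.
    return [record for page in pages for record in page]


if __name__ == '__main__':
    def fake_parse_page(url):
        # Stand-in for real fetching and parsing, so the sketch runs offline.
        return [('author of ' + url, 'some\ncontent', '5')]

    # Only the main thread touches the output, so records stay intact.
    for author, content, vote in crawl_all(['page1', 'page2'], fake_parse_page):
        print(format_record(author, content, vote), end='')
```

Because `pool.map` returns results in input order, the output also follows the page order, which the shared-file approach does not guarantee.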

If there are any parts you don't understand, you can refer to my previous three articles, in order.
