This article walks through an example of analyzing nginx logs with Python and pandas.

Requirements

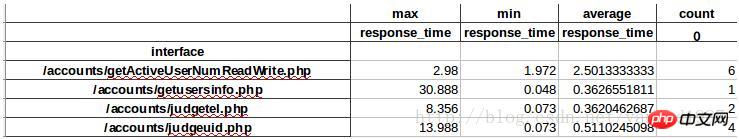

By analyzing the nginx access log, obtain the maximum, minimum, and average response time of each interface, along with its number of visits.

Implementation principle

Store the $uri and $upstream_response_time fields from the nginx log in a pandas DataFrame, then compute the results with the grouping and statistics functions.
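The grouping step can be illustrated with a minimal sketch. The sample URIs and times below are made up, and the column names `interface` and `response_time` are assumptions chosen for this illustration, not anything nginx defines.

```python
import pandas as pd

# Hypothetical sample rows: one ($uri, $upstream_response_time) pair per request
rows = [
    ("/api/login", 0.120),
    ("/api/login", 0.080),
    ("/api/order", 0.300),
    ("/api/order", 0.500),
    ("/api/order", 0.100),
]
df = pd.DataFrame(rows, columns=["interface", "response_time"])

# Group by interface and compute the four statistics in one call.
# Note: "count" counts non-null values in each group.
stats = df.groupby("interface")["response_time"].agg(
    ["max", "min", "mean", "count"]
)
print(stats)
```

The result has one row per interface and one column per statistic, which is the shape the requirement asks for.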

Implementation

1. Preparation

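# Create the log directory, used to store the nginx logs
mkdir -p /home/test/python/log/log
# Create the file that stores the $uri and $upstream_response_time fields extracted from the nginx logs
touch /home/test/python/log/log.txt
# Install the required modules
conda create -n science numpy scipy matplotlib pandas
# Install the module needed to generate Excel spreadsheets
pip install xlwt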

2. Code implementation

#!/usr/local/miniconda2/envs/science/bin/python
# -*- coding: utf-8 -*-
# Compute response-time statistics for each interface.
# Create log.txt and set logdir before running.
import os

import pandas as pd

# Directory that contains this script
mulu = os.path.dirname(__file__)
# Directory where the nginx log files are stored
logdir = "/home/test/python/log/log"
# File that holds the log fields needed for the statistics
logfile_format = os.path.join(mulu, "log.txt")

print("read from logfile \n")
# Open the output file once instead of reopening it for every line
with open(logfile_format, 'a') as fw:
    for eachfile in os.listdir(logdir):
        logfile = os.path.join(logdir, eachfile)
        with open(logfile, 'r') as fo:
            for line in fo:
                spline = line.split()
                # Skip lines that are too short to contain the $uri field
                if len(spline) < 7:
                    continue
                # Filter out abnormal values in the fields
                if spline[6] in ("-", "GET") or spline[-1] == "-":
                    continue
                fw.write(spline[6] + '\t' + spline[-1] + '\n')

print("output panda")
# Read the extracted fields into a DataFrame, chunk by chunk
reader = pd.read_table(logfile_format, sep='\t', engine='python',
                       names=["interface", "response_time"],
                       header=None, iterator=True)
loop = True
chunksize = 10000000
chunks = []
while loop:
    try:
        chunk = reader.get_chunk(chunksize)
        chunks.append(chunk)
    except StopIteration:
        loop = False
        print("Iteration is stopped.")
df = pd.concat(chunks)
# df = df.set_index("interface")
# df = df.drop(["GET", "-"])

# Group by interface and compute each statistic
df_groupd = df.groupby('interface')
df_groupd_max = df_groupd.max()
df_groupd_min = df_groupd.min()
df_groupd_mean = df_groupd.mean()
df_groupd_size = df_groupd.size()
# print(df_groupd_max)
# print(df_groupd_min)
# print(df_groupd_mean)

# Combine the statistics side by side, one row per interface
df_ana = pd.concat([df_groupd_max, df_groupd_min, df_groupd_mean, df_groupd_size],
                   axis=1, keys=["max", "min", "average", "count"])

print("output excel")
df_ana.to_excel("test.xls")

3. Output

The script writes the per-interface statistics to test.xls.

Key Points

1. When the log file is large, do not read it with readlines(), which loads the entire log into memory and can exhaust it. The script iterates with for line in fo instead, which holds only one line at a time and uses almost no memory.

2. nginx logs can be read directly with pd.read_table(log_file, sep=' ', iterator=True), but a single-space separator does not split the fields correctly here. The script therefore splits each line with split() first and then loads the extracted fields into pandas.

3. pandas provides IO tools for reading large files in chunks: read the file with a suitable chunk size, then call pandas.concat to join the chunks into one DataFrame.
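The key points can be sketched together as follows. This is an illustration under assumptions, not the script itself: the sample log lines are made up, `extract_fields` is a hypothetical helper, in-memory `io.StringIO` buffers stand in for the real files, and the field positions (index 6 for `$uri`, the last field for `$upstream_response_time`) follow the script above and depend on your own `log_format`.

```python
import io

import pandas as pd


def extract_fields(lines):
    """Yield (uri, response_time) pairs while reading one line at a time."""
    for line in lines:
        parts = line.split()
        # Skip short lines and the abnormal values the script filters out
        if len(parts) < 7 or parts[6] in ("-", "GET") or parts[-1] == "-":
            continue
        yield parts[6], parts[-1]


# Hypothetical access-log lines; an open file object can be iterated the same way
raw_log = io.StringIO(
    '1.1.1.1 - - [01/Jan/2018:00:00:01 +0800] "GET /api/a HTTP/1.1" 200 10 0.120\n'
    '1.1.1.1 - - [01/Jan/2018:00:00:02 +0800] "GET /api/b HTTP/1.1" 200 10 0.300\n'
    '1.1.1.1 - - [01/Jan/2018:00:00:03 +0800] "GET /api/a HTTP/1.1" 304 0 -\n'
    '1.1.1.1 - - [01/Jan/2018:00:00:04 +0800] "GET /api/a HTTP/1.1" 200 10 0.080\n'
    '1.1.1.1 - - [01/Jan/2018:00:00:05 +0800] "GET /api/b HTTP/1.1" 200 10 0.500\n'
    '1.1.1.1 - - [01/Jan/2018:00:00:06 +0800] "GET /api/a HTTP/1.1" 200 10 0.100\n'
)

# Write the extracted fields as tab-separated text (stand-in for log.txt)
extracted = io.StringIO()
for uri, response_time in extract_fields(raw_log):
    extracted.write(uri + "\t" + response_time + "\n")
extracted.seek(0)

# Read the extracted data in chunks, then join the chunks into one DataFrame
reader = pd.read_csv(
    extracted,
    sep="\t",
    header=None,
    names=["interface", "response_time"],
    chunksize=2,  # tiny on purpose; a real run would use a much larger value
)
chunks = [chunk for chunk in reader]
df = pd.concat(chunks, ignore_index=True)
print(len(chunks), len(df))
```

Passing `chunksize` to the reader makes it yield DataFrames of at most that many rows, so the file is never parsed in a single step. Since `pandas.concat` still builds the full DataFrame in memory, the end result must fit in RAM.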
