The database is usually the most critical part of an application, and as concurrency grows it often becomes the bottleneck. If tables and indexes are not designed well from the start, scaling out horizontally later, for example by splitting data across multiple databases and tables (sharding), becomes difficult.

Internet companies generally use MySQL.

1. The overall structure of the database

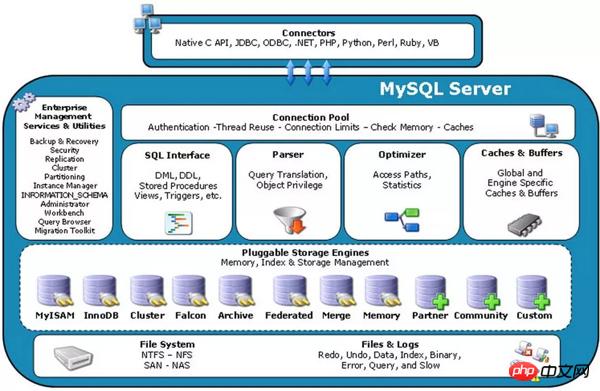

Let's first look at the overall architecture of MySQL, shown in the figure above.

This classic MySQL architecture diagram shows the function of each part of MySQL.

When a client connects to the database, it first reaches the connection pool, which manages user connections and performs authentication and authorization.

After connecting, the client sends SQL statements, which are received by the SQL interface module.

SQL statements must follow strict grammar rules, so a parser is needed to parse each statement. Parsing works as taught in compiler courses: the statement text is turned into a syntax tree.

A user's query can be optimized by choosing the fastest of the possible execution paths. This is the role of the optimizer.

To speed up queries, there is also a query cache module: if the cache already holds the result of a query, that result can be returned directly from the cache. (Note that the query cache was deprecated in MySQL 5.7.20 and removed in MySQL 8.0.)
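A minimal Python sketch of the idea, assuming (as MySQL's query cache did) that results are keyed by the exact SQL text and that any write to a table invalidates every cached result that read from it. The `QueryCache` class and its methods are hypothetical names for illustration.

```python
class QueryCache:
    """Toy query cache: exact SQL text -> result, invalidated per table."""

    def __init__(self):
        self._results = {}      # sql text -> cached result
        self._by_table = {}     # table name -> set of sql texts that read it

    def get(self, sql):
        # Only a byte-identical statement can hit the cache.
        return self._results.get(sql)

    def put(self, sql, tables, result):
        self._results[sql] = result
        for table in tables:
            self._by_table.setdefault(table, set()).add(sql)

    def invalidate(self, table):
        # Any write to `table` drops every cached result that read from it.
        for sql in self._by_table.pop(table, set()):
            self._results.pop(sql, None)
```

Because the key is the raw text, `select * from t where id = 1` misses even when `SELECT * FROM t WHERE id = 1` is cached, and a single write to `t` empties every entry for `t`.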

All of the components above make up the database service layer; beneath it is the database engine (storage engine) layer. The mainstream engine today is InnoDB.

Every modification to the database is recorded by the service layer in the binary log (binlog), which is the basis of primary-secondary replication.

For the database engine layer, a famous diagram is as follows:

The storage engine layer also has its own caches and logs, and data is ultimately flushed to disk.

The storage engine layer's cache also improves performance, but it differs from the service layer's cache. The service layer's cache is a query cache, while the engine layer's cache (in InnoDB, the buffer pool) serves both reads and writes. The service layer's cache works on query logic, whereas the engine layer's cache works on data pages, so it can be described as physical.

Once data has been written to the engine layer's cache, the service layer treats it as persisted, even if it so far exists only in memory. This naturally leaves cached pages inconsistent with the pages on disk, and the engine layer relies on its own logs to resolve that inconsistency and guarantee integrity.

So the engine layer's logs differ from the service layer's. The service layer's log (the binlog) records each modification logically, one by one, while the engine layer's log (InnoDB's redo log) records physical changes to pages, that is, the difference between a cached page and its copy on disk.
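To make the engine-layer log concrete, here is a minimal write-ahead-logging sketch in Python. It assumes a single page store and a log of physical (page, slot, value) records; real InnoDB redo records, log sequence numbers, and checkpoints are far more involved, and the `MiniEngine` name is hypothetical.

```python
class MiniEngine:
    """Toy storage engine: a page cache plus a redo log over a 'disk' of pages."""

    def __init__(self, disk):
        self.disk = disk        # page_id -> {slot: value}; survives crashes
        self.buffer = {}        # cached copies of pages; lost on crash
        self.redo_log = []      # durable log of physical changes

    def _page(self, page_id):
        if page_id not in self.buffer:
            self.buffer[page_id] = dict(self.disk.get(page_id, {}))
        return self.buffer[page_id]

    def write(self, page_id, slot, value):
        # Write-ahead rule: log the physical change first, then modify the cached page.
        self.redo_log.append((page_id, slot, value))
        self._page(page_id)[slot] = value
        # The commit can be acknowledged now, although the disk page is still stale.

    def crash(self):
        self.buffer.clear()     # dirty cached pages are lost

    def recover(self):
        # Replay the redo log onto the on-disk pages to restore consistency.
        for page_id, slot, value in self.redo_log:
            self.disk.setdefault(page_id, {})[slot] = value
```

After `write(1, "a", 42)` the disk page still holds the old value; after `crash()` and `recover()` it holds 42, which is why the service layer can safely treat the cached write as persisted.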

2. Database workflow

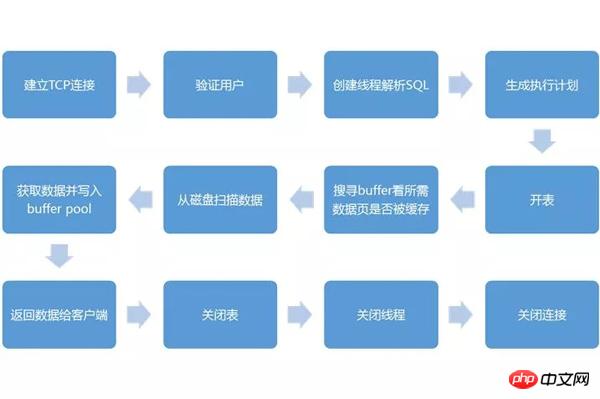

When receiving a query, the various components in the MySQL architecture work like this:

The client establishes a TCP connection with the database service layer. The connection management module accepts the connection and requests a connection thread. If the pool has an idle connection thread, it is assigned to this connection; otherwise a new connection thread is created for this client, as long as the maximum number of connections is not exceeded.

Before any real work, the user module performs an authorization check to verify the user's permissions. Once the check passes, the connection thread starts receiving and processing SQL statements from the client.

After the connection thread receives the SQL statement, it hands the statement to the SQL statement parsing module for syntax analysis and semantic analysis.

For a query, the query cache can be checked first; if it holds a result, that result is returned directly to the client.

If the query cache has no result, the query must actually go to the database engine layer, so it is first passed to the SQL optimizer. If the statement changes a table, it is handed to the corresponding INSERT, UPDATE, DELETE, CREATE, or ALTER processing module.

The next step is to request the database engine layer, open the table, and obtain the corresponding lock if necessary.

The next processing process goes to the database engine layer, such as InnoDB.

At the database engine layer, the engine first checks whether the required data is already in a cached page. If so, it is returned directly; if not, it is read from disk.

Data read from disk is loaded into the cache so that later queries are faster. Because memory is limited, cached pages are usually managed with an LRU (least recently used) list, which keeps the frequently accessed data in memory.
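A minimal Python sketch of an LRU-managed page cache using `collections.OrderedDict`. It is a plain LRU for illustration only; InnoDB's buffer pool actually uses a variant with a midpoint insertion strategy (separate "young" and "old" sublists) so that large scans do not flush hot pages. `BufferPool` and `read_from_disk` are hypothetical names.

```python
from collections import OrderedDict


class BufferPool:
    """Caches up to `capacity` pages, evicting the least recently used one."""

    def __init__(self, capacity, read_from_disk):
        self.capacity = capacity
        self.read_from_disk = read_from_disk   # callable: page_id -> page data
        self.pages = OrderedDict()             # least recently used first
        self.disk_reads = 0

    def get(self, page_id):
        if page_id in self.pages:
            self.pages.move_to_end(page_id)    # hit: mark as most recently used
            return self.pages[page_id]
        self.disk_reads += 1                   # miss: read the page from disk
        page = self.read_from_disk(page_id)
        self.pages[page_id] = page
        if len(self.pages) > self.capacity:
            self.pages.popitem(last=False)     # evict the least recently used page
        return page
```

With a capacity of 2, reading pages 1, 2, 1, 3, 1, 2 costs four disk reads: page 2 is evicted when 3 arrives because 1 was used more recently.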

After obtaining the data, return it to the client, close the connection, release the connection thread, and the process ends.

3. Principle of database index

In the whole process, the step most likely to become a bottleneck is reading and writing data, which usually means sequential or random disk I/O, and disk I/O is slow.

How can we speed this up? As you have probably guessed, the answer is to create an index.

Why can indexes speed up this process?

Most of us have been to a food court with many restaurants. If you are in no hurry, not hungry, and don't care how long the search takes, you can stroll through the mall from one restaurant to the next until you find the one you want. But when you are hungry, or have a reservation at a particular restaurant, you want to go straight there. So you look at the floor directory to find where the restaurant is, then walk directly to it. This saves a lot of time, and that is the role of an index.

So an index maps a value to its location, so that the data can be reached quickly.
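A small Python illustration of value-to-location lookup: a sorted list of (value, row position) pairs, searched with `bisect`, finds a key in about log2(n) comparisons, while a full scan may compare every row. The data and helper names are made up, and a sorted in-memory list stands in for a real on-disk index.

```python
from bisect import bisect_left

rows = [f"restaurant-{i}" for i in range(100_000)]            # the "table"
index = sorted((name, pos) for pos, name in enumerate(rows))   # value -> location


def full_scan(name):
    # Walk past every row until a match is found.
    for pos, row in enumerate(rows):
        if row == name:
            return pos
    return None


def index_lookup(name):
    # Binary search the sorted values, then jump straight to the row position.
    i = bisect_left(index, (name,))
    if i < len(index) and index[i][0] == name:
        return index[i][1]
    return None
```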

Another benefit of an index is that some questions can be answered without looking at the data at all. To check whether the mall has a certain restaurant, you only need to look at the directory rather than walk through the mall; to list all the Sichuan restaurants, you only need to read the directory instead of visiting each Sichuan restaurant in turn.

So how does the index work in MySQL?

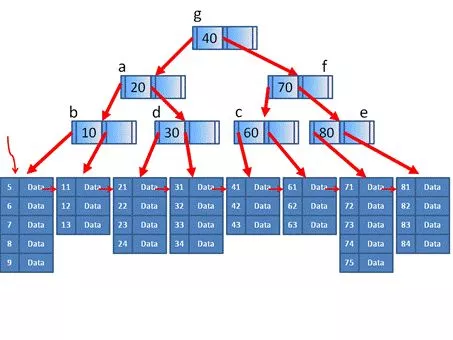

The index structure of MySQL is often a B+ tree.

A B+ tree of order M has the following properties:

1. The nodes are divided into index nodes and data nodes. Index nodes correspond to the internal nodes of a B-tree: together they form a B-tree with all of its properties, and they store keys and pointers but no actual elements. Data nodes correspond to the external nodes of a B-tree; in a B-tree those are empty, but in a B+ tree they store the real data elements, including each element's key and other information, but no child pointers.

2. The B-tree formed by the index nodes is used only to find the data node holding the element with a given key. Finding the key in the index nodes is not the end: you must continue to that data node and then locate the element in it, by binary search or sequential scan.

3. The order M is only used to control the degree of the index node part. As for how many elements each data node contains, it has nothing to do with M.

4. A linked list connects all the data nodes so that they can be traversed in order.

This definition is relatively abstract, let’s look at a specific example.

The figure shows a B+ tree of order 3 in which each data node holds at most 5 items. If inserting into a data node causes no split or merge, the B-tree formed by the index nodes does not change.

Inserting 76 into the data node holding 71 to 75 causes a split: 71, 72, and 73 form one data node, and 74, 75, and 76 form another. For the index nodes, this is equivalent to inserting the key 74.

Deleting 43 from the data node holding 41 to 43 causes a merge: 41, 42, 61, 62, and 63 are merged into one data node. For the index nodes, this is equivalent to deleting the key 60.
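The split and merge above can be sketched on data nodes alone, modeled as sorted Python lists. Assumptions not taken from the original: a data node splits in the middle when it exceeds 5 keys (the capacity in the figure), and it is merged with its right neighbor when it drops below 3 keys and the two fit in one node. The function names are hypothetical, and the index-node side is reduced to returning the separator key to add or remove.

```python
from bisect import insort

MAX_KEYS = 5   # capacity of a data node, as in the figure
MIN_KEYS = 3   # assumed minimum occupancy before a merge is attempted


def insert_into_leaf(leaf, key):
    """Insert key; on overflow return (left, right, separator to add to the index)."""
    leaf = sorted(leaf)
    insort(leaf, key)
    if len(leaf) <= MAX_KEYS:
        return leaf, None, None
    mid = len(leaf) // 2
    left, right = leaf[:mid], leaf[mid:]
    return left, right, right[0]     # the parent gains the right node's first key


def delete_from_leaf(leaf, right_sibling, key, separator):
    """Delete key; if the node underflows and fits with its sibling, merge them."""
    leaf = [k for k in leaf if k != key]
    if len(leaf) < MIN_KEYS and len(leaf) + len(right_sibling) <= MAX_KEYS:
        return leaf + right_sibling, separator   # the parent loses this separator
    return leaf, None
```

Inserting 76 into `[71, 72, 73, 74, 75]` yields `[71, 72, 73]`, `[74, 75, 76]` and separator 74; deleting 43 from `[41, 42, 43]` next to `[61, 62, 63]` under separator 60 yields one merged node and removes 60.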

Because a B+ tree is very shallow, a search reaches its target quickly. For example, to find 62: at the root, 62 is greater than 40, so we go right; at the next index node, 62 is less than 70, so we go left; at the next, 62 is greater than 60, so we go right and reach the data node holding 61 to 63, where 62 is the second item.
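A compact Python sketch of B+ tree search, plus a range scan that follows the linked list of data nodes. The tree is hand-built with keys chosen to echo the example (40 at the root, 60 and 70 below it); it is an assumption, not the exact tree in the figure, and insertion and rebalancing are omitted.

```python
from __future__ import annotations

from bisect import bisect_right
from dataclasses import dataclass
from typing import Optional


@dataclass
class Leaf:                      # data node: sorted keys + link to the next data node
    keys: list
    next: Optional[Leaf] = None


@dataclass
class Inner:                     # index node: separator keys + child pointers
    keys: list
    children: list


def descend(node, key):
    """Walk from the root to the data node that may contain `key`."""
    path = []
    while isinstance(node, Inner):
        i = bisect_right(node.keys, key)   # child i covers keys[i-1] <= key < keys[i]
        path.append(i)
        node = node.children[i]
    return node, path


def search(root, key):
    leaf, path = descend(root, key)
    return key in leaf.keys, path


def range_scan(root, lo, hi):
    leaf, _ = descend(root, lo)
    out = []
    while leaf is not None:                # follow the linked list of data nodes
        for k in leaf.keys:
            if k > hi:
                return out
            if k >= lo:
                out.append(k)
        leaf = leaf.next
    return out


# Hand-built example tree (assumed keys, loosely following the text).
leaves = [Leaf([1, 5, 10]), Leaf([21, 25, 30]), Leaf([41, 42, 43]),
          Leaf([61, 62, 63]), Leaf([71, 72, 73, 74, 75])]
for a, b in zip(leaves, leaves[1:]):
    a.next = b
root = Inner([40], [Inner([20], leaves[:2]), Inner([60, 70], leaves[2:])])
```

Searching 62 goes right at the root and then into the middle child of `[60, 70]`, landing on the data node `[61, 62, 63]`; a range scan only descends once and then walks the leaf links.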

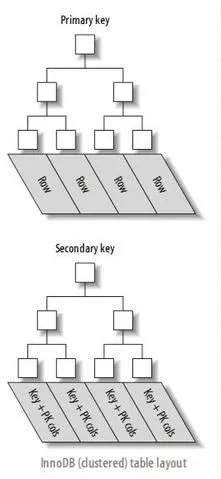

In MySQL's InnoDB, there are two types of B+ tree indexes, one is called a clustered index and the other is called a secondary index.

The leaf nodes of the clustered index are the data nodes themselves, holding full rows; the primary key usually serves as the clustered index. The leaf nodes of a secondary index store the indexed column values plus the primary key value. Therefore, reading a full row through a secondary index takes two index lookups: one in the secondary index to get the primary key, and one in the clustered index to fetch the row.
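A minimal Python sketch of those two lookups. A dict stands in for the clustered index (primary key to full row) and a sorted list of (name, id) pairs stands in for a secondary index on a hypothetical `name` column; the table, columns, and values are illustrative assumptions.

```python
from bisect import bisect_left

# Clustered index: primary key -> full row (the leaf nodes hold the data).
clustered = {
    1: {"id": 1, "name": "alice", "city": "Hangzhou"},
    2: {"id": 2, "name": "bob", "city": "Beijing"},
    3: {"id": 3, "name": "carol", "city": "Shanghai"},
}

# Secondary index on `name`: entries hold only (indexed value, primary key).
secondary_name = sorted((row["name"], pk) for pk, row in clustered.items())


def find_by_name(name):
    # Lookup 1: search the secondary index to get the primary key.
    i = bisect_left(secondary_name, (name,))
    if i == len(secondary_name) or secondary_name[i][0] != name:
        return None
    pk = secondary_name[i][1]
    # Lookup 2: search the clustered index with that key to fetch the full row.
    return clustered[pk]
```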

There is also a form of index called a composite index, or compound index, which can build indexes on multiple columns.

The sorting rule of this kind of index is to compare the first column first, and if the first column is equal, compare the second column, and so on.

4. Advantages and Disadvantages of Database Index

The most obvious advantage of database index is to reduce I/O. Several scenarios are analyzed below.

For columns with = conditions, the database searches the B+ tree directly: a very small number of disk reads (equal to the height of the tree) reaches the leaf node, which locates the data.

For range fields, since the B+ tree is sorted, the range can be quickly located through the tree.

Similarly, for ORDER BY, GROUP BY, DISTINCT, MAX, and MIN, results can be obtained quickly because the B+ tree is sorted.

Another common scenario is the covering index. Suppose A and B are filter columns, conditions like A=a AND B=b appear often, and the query also selects C and D. A joint index (A, B) is often built for this; it is a secondary index. Searching its B+ tree quickly finds the matching leaf entries, but those entries contain only A, B, and the clustered-index key (the primary key), so the clustered index's B+ tree must be searched again to find the full row in the table and read C and D from it. If the joint index is built as (A, B, C, D) instead, all the needed data is already in the secondary index's B+ tree and can be returned directly, saving the second tree search.
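The difference can be sketched in Python by counting how many index trees a query touches. Index entries are modeled as sorted tuples, and everything here (columns A to D, the `query` helper, the sample rows) is a hypothetical illustration rather than how InnoDB lays out records.

```python
from bisect import bisect_left

rows = {1: dict(A=1, B=2, C="c1", D="d1"), 2: dict(A=1, B=3, C="c2", D="d2")}

# Joint index (A, B): entries carry only A, B and the primary key.
idx_ab = sorted((r["A"], r["B"], pk) for pk, r in rows.items())
# Joint index (A, B, C, D): entries also carry C and D.
idx_abcd = sorted((r["A"], r["B"], r["C"], r["D"], pk) for pk, r in rows.items())


def query(a, b, covering):
    """SELECT C, D ... WHERE A = a AND B = b (first match only, for brevity).

    Returns (result, number of index trees searched).
    """
    idx = idx_abcd if covering else idx_ab
    i = bisect_left(idx, (a, b))
    if i == len(idx) or idx[i][:2] != (a, b):
        return None, 1
    entry = idx[i]
    if covering:
        return (entry[2], entry[3]), 1    # C and D are already in the index entry
    row = rows[entry[2]]                  # second search: back to the clustered index
    return (row["C"], row["D"]), 2
```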

Of course indexing must come at a price, there is no free lunch in the world.

The benefits brought by indexes are mostly the improvement of reading efficiency, while the price brought by indexes is the reduction of writing efficiency.

Inserting and modifying data may mean changes to the index.

Because the clustered index is usually built on the primary key, it is best to use an auto-increment primary key: new rows are then always appended at the end, sequentially, which is more efficient. Do not use UUIDs as primary keys; they cause random writes and poor efficiency. Do not use business-related columns as primary keys either: business values can change, and changing a primary key means deleting and re-inserting the row, which is inefficient.
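A quick Python experiment shows the difference, using a sorted list as a stand-in for the clustered index's leaf order: it counts how many inserts land at the very end (an append) rather than somewhere in the middle. Shuffled integers stand in for UUIDs here as an assumption, since only their random order matters.

```python
import random
from bisect import bisect_right, insort


def count_appends(keys):
    """Count inserts whose position is after every key already in the index."""
    index, appends = [], 0
    for k in keys:
        if bisect_right(index, k) == len(index):
            appends += 1
        insort(index, k)
    return appends


n = 1000
sequential = list(range(n))                         # auto-increment primary keys
shuffled = random.Random(42).sample(range(n), n)    # random, UUID-like key order
```

With auto-increment keys every insert is an append; with random keys almost every insert lands in the middle, which in a real B+ tree means touching arbitrary pages and splitting them.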

As the B+ tree discussion above shows, splitting a node is relatively expensive, and splits happen during insertion.

Modifying an indexed value is basically equivalent to deleting it and inserting it again, which is also relatively expensive.

Secondary indexes on some string columns often cause random writing and reading, and put greater pressure on I/O.

5. The principles behind the database "iron rules"

Once you understand how these indexes work, you can see why many of the so-called database iron rules look the way they do. Let's go through them one by one.

Under what circumstances should a combined index be used instead of a separate index?

Suppose the condition is A=a AND B=b. If A and B have separate indexes, usually only one of them is used for the AND condition, and each row it finds must then be checked against B one by one. A composite index (A, B) needs only a single tree traversal, which is far more efficient. For A=a OR B=b, however, the composite index does not help because of the OR; separate indexes are the better choice here, since both can then be used at the same time.

Why should indexed columns be highly selective, and why should the most selective column come first in a composite index?

If a column barely distinguishes rows, such as gender, an index on it just splits the table into two large halves. Finding data still means traversing about half of the table, so the index is nearly useless.
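Selectivity is commonly estimated as the number of distinct values divided by the number of rows; values close to 1 make good index candidates, values close to 0 do not. A Python sketch with made-up data:

```python
def selectivity(values):
    """Distinct values / total rows: near 1 is highly selective, near 0 is not."""
    return len(set(values)) / len(values) if values else 0.0


genders = ["M", "F"] * 5000        # 10,000 rows, only 2 distinct values
user_ids = list(range(10_000))     # 10,000 rows, every value distinct
```

Here `selectivity(user_ids)` is 1.0 while `selectivity(genders)` is 0.0002, which is why a user ID index narrows a search to one row and a gender index does not.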

If there is a composite index, do I still need a single column index?

If the composite index is (A, B), it can be used for the condition A=a, because the index is sorted by the first column first, so there is no need for a separate index on A. It does not help with B=b, however: the second column is compared only when the first columns are equal, so entries with the same B value can be spread across different nodes, and there is no way to locate them quickly.
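A Python sketch with tuples as composite index entries: Python sorts tuples by the first element and then the second, matching the ordering rule of a composite index. All A = a entries form one contiguous run that binary search can find, while B = b entries are scattered. The data and function names are illustrative, and the upper bound assumes integer A values.

```python
from bisect import bisect_left

# Composite index (A, B): entries sorted by A first, then by B.
entries = sorted([(2, "x"), (1, "y"), (1, "x"), (3, "y"), (2, "y"), (3, "x")])
# -> [(1, 'x'), (1, 'y'), (2, 'x'), (2, 'y'), (3, 'x'), (3, 'y')]


def positions_for_a(a):
    """A = a can use the index: one contiguous range found by binary search."""
    lo = bisect_left(entries, (a,))
    hi = bisect_left(entries, (a + 1,))    # assumes integer A values
    return list(range(lo, hi))


def positions_for_b(b):
    """B = b cannot: matches are spread across the index, so scan all of it."""
    return [i for i, (_, eb) in enumerate(entries) if eb == b]
```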

Is the more indexes, the better?

Of course not; add indexes only where necessary. Indexes not only slow down inserts and updates, they also affect the query optimizer: with too many indexes, the optimizer can get confused, fail to find the best query path, and choose a slower index.

Why use auto-increment primary key?

Because string and random primary keys cause rows to be inserted at random positions, which is less efficient. Primary keys should also rarely be updated, to avoid frequent B+ tree splits and merges.

Why try not to use NULL?

NULL values are awkward to handle in B+ trees and often require special-case logic, which reduces efficiency.

Why not create indexes on frequently updated fields?

Updating a field means its index must be updated too, and updating an index entry usually means deleting it and inserting it again. An index is a data structure built in advance, at write time, to make reads more efficient; if a field is written often and read rarely, an index on it is not recommended.

Why not use functions in query conditions?

Take the condition ID+1=10. The index was built from the stored ID values at write time, so at query time it cannot be searched for ID+1; the only option would be to compute ID+1 for every entry and compare, which is far too expensive. Write ID=10-1 instead.
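A Python sketch of the difference, with a sorted list of IDs standing in for the index: `ID = 9` is a single binary search, while `ID + 1 = 10` forces the expression to be computed for every entry. The function names are hypothetical.

```python
from bisect import bisect_left

ids = list(range(1, 1_000_001))    # sorted index on ID


def where_id_plus_one_equals(target):
    # The index stores ID, not ID + 1, so every entry must be computed and compared.
    return [i for i in ids if i + 1 == target]


def where_id_equals(value):
    # Rewritten as ID = target - 1: one binary search on the index.
    pos = bisect_left(ids, value)
    return [ids[pos]] if pos < len(ids) and ids[pos] == value else []
```

Both return `[9]` for the example condition, but the first touches a million entries and the second about twenty.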

Why not use negative query conditions such as NOT?

Imagine a B+ tree whose root key is 40. If your condition is = 20, you search left; if it is = 50, you search right. But if the condition is != 66, which way should the index go? You cannot tell without going through all of it.

Why should fuzzy queries not start with wildcard characters?

Suppose the root of a B+ tree holds the string def. With the wildcard at the end, such as abc%, you know to search left, and for efg% you search right. With the wildcard at the front, such as %abc, you cannot tell which side to go to, so you have to scan everything.
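In Python, with a sorted list of strings standing in for the index, a prefix pattern such as `abc%` becomes a contiguous range that binary search can find, while `%abc` has to test every entry. The helper names and sample values are hypothetical, and the upper-bound trick assumes a non-empty prefix whose last character is not the maximum code point.

```python
from bisect import bisect_left

names = sorted(["abacus", "abc", "abcd", "abcz", "abd", "def", "xabc", "zzabc"])


def like_prefix(prefix):
    """col LIKE 'prefix%': the range [prefix, next prefix) in the sorted index."""
    upper = prefix[:-1] + chr(ord(prefix[-1]) + 1)    # e.g. 'abc' -> 'abd'
    return names[bisect_left(names, prefix):bisect_left(names, upper)]


def like_suffix(suffix):
    """col LIKE '%suffix': matches can be anywhere, so scan the whole index."""
    return [n for n in names if n.endswith(suffix)]
```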

Why should OR be rewritten as IN, or as a UNION?

When optimizing OR conditions, especially many of them, it is often hard for the optimizer to find the best path. For conditions on the same field, IN is better: the database sorts the IN values and matches them uniformly with binary search. For conditions on different fields, UNION lets each subquery use its own index.
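MySQL's documentation describes this behavior for IN lists made entirely of constants: the values are sorted and the search uses binary search. A Python sketch of that evaluation for a single column value, next to a naive OR chain; both function names are hypothetical.

```python
from bisect import bisect_left


def make_in_predicate(values):
    """col IN (v1, v2, ...): sort the constants once, then binary-search per row."""
    sorted_vals = sorted(set(values))

    def matches(x):
        i = bisect_left(sorted_vals, x)
        return i < len(sorted_vals) and sorted_vals[i] == x

    return matches


def or_chain(values):
    """col = v1 OR col = v2 OR ...: evaluated term by term."""
    return lambda x: any(x == v for v in values)
```

The two predicates accept exactly the same values; the IN form costs about log2(k) comparisons per row instead of up to k.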

Why should data types be as small as possible, why are integer types preferred over character types, and why consider prefix indexes for long strings?

Because the database stores data in pages of a fixed size. Larger data types mean fewer entries per page, and therefore more pages and a taller tree, so more I/O is needed to find data; nodes also split more easily during insertion, which lowers efficiency. This is also why integers are preferred over strings: integers are more efficient to index. For example, an IP address can be stored as an integer rather than as a string. If a long string column must be searched through an index, consider indexing only a prefix of the column instead of the whole value, to keep the index small.
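Rough arithmetic in Python makes the effect visible. It assumes InnoDB's default 16 KB page size, an assumed 6-byte child-page pointer per index entry, and ignores page headers and fill factor, so the numbers are estimates only; the 36-byte key stands for a UUID stored as a string.

```python
import math

PAGE_SIZE = 16 * 1024    # InnoDB default page size (innodb_page_size)
POINTER_SIZE = 6         # assumed size of a child-page pointer


def fanout(key_size):
    """How many (key, pointer) entries fit in one index page."""
    return PAGE_SIZE // (key_size + POINTER_SIZE)


def tree_height(rows, key_size):
    """Estimated levels of index pages needed to address `rows` entries."""
    return max(1, math.ceil(math.log(rows, fanout(key_size))))
```

Under these assumptions, a 4-byte integer key gives 1,638 entries per page and a 36-byte string key only 390, so a billion-row index needs about 3 levels versus 4, and each extra level is potentially one more disk read per lookup.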

6. Query Optimization Methodology

To optimize SQL, you must first collect the problematic statements.

MySQL provides a slow query log. When the slow_query_log parameter is enabled, statements whose execution time exceeds a threshold (the long_query_time parameter) are recorded.

To also record statements that do not use indexes, enable the log_queries_not_using_indexes parameter.

With min_examined_row_limit set, only statements that examine at least that many rows are recorded in the slow query log.
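How these parameters combine can be modeled as a small Python predicate. This is a simplified model for illustration (it ignores administrative statements and other logging options), and `should_log_slow` is a hypothetical name; its keyword arguments mirror the MySQL system variables slow_query_log, long_query_time (default 10 seconds), log_queries_not_using_indexes, and min_examined_row_limit.

```python
def should_log_slow(query_time, rows_examined, used_index, *,
                    slow_query_log=True, long_query_time=10.0,
                    log_queries_not_using_indexes=False,
                    min_examined_row_limit=0):
    """Simplified model of whether a statement lands in the slow query log."""
    if not slow_query_log:
        return False
    too_slow = query_time > long_query_time
    no_index = log_queries_not_using_indexes and not used_index
    # Statements that examine fewer rows than the limit are not logged.
    return (too_slow or no_index) and rows_examined >= min_examined_row_limit
```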

Once a problematic statement is found, the next step is to obtain its execution plan with EXPLAIN and check: whether records are scanned through an index (if not, creating an index may improve efficiency); whether too many records are scanned; whether locks are held for too long or conflict; and whether many records are returned.

Then optimize case by case. If the columns in the filter conditions are not covered by an index, create indexes on the more selective columns; if several columns are involved, prefer a joint index.

If many records are scanned but few are returned, the index is not selective enough: re-evaluate the columns involved in the statement and build an index over several highly selective columns.

If many records are scanned and many are also returned, the filter conditions are weak: add more filter conditions to the SQL statement.

Query the sys.schema_redundant_indexes view to see which indexes are redundant; if several indexes share the same leading columns in the same order, they can be combined into one joint index.

Query sys.schema_unused_indexes to see which indexes have never been used.
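The idea behind a redundant-index check can be sketched in Python: an index whose columns are a strict leftmost prefix of another index's columns is usually redundant, because the longer index can serve the same lookups. This is a simplification of what sys.schema_redundant_indexes reports (it ignores uniqueness, exact duplicates, and other details), and the index names are made up.

```python
def redundant_indexes(indexes):
    """Return (redundant, dominating) pairs where one index is a leftmost prefix of another."""
    found = []
    for name, cols in indexes.items():
        for other, other_cols in indexes.items():
            if (name != other and len(cols) < len(other_cols)
                    and tuple(other_cols[:len(cols)]) == tuple(cols)):
                found.append((name, other))
                break
    return found


indexes = {
    "idx_a": ["a"],
    "idx_a_b": ["a", "b"],
    "idx_b": ["b"],                 # not a prefix of anything: B alone is not leftmost
    "idx_a_b_c": ["a", "b", "c"],
}
```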

7. The principle of separation of reading and writing

Database workloads usually involve far more reads than writes, so the first step in performance optimization is often to separate reads from writes.

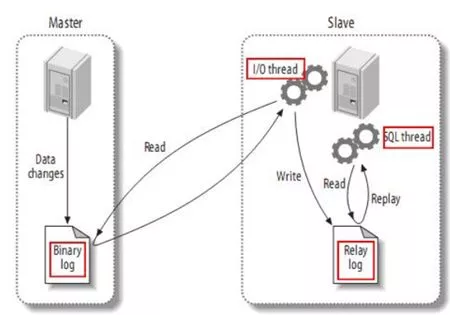

Master-slave replication is based on the service-layer log (the binlog) on the master. An I/O thread on the slave reads this log and writes it to a local log (the relay log); another thread (the SQL thread) reads the local log and replays it on the slave.

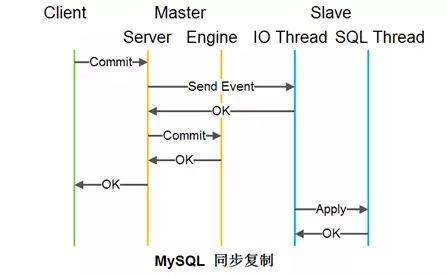

The figure shows the flow of asynchronous master-slave replication. The master returns success as soon as it has written to its engine, and only afterwards sends the event to the slave, where it is executed. This is fast, but if the master crashes before an event has been replicated, that data may be lost.

Synchronous replication differs: it returns to the client only after the slave has persisted the change. This costs performance; the NetEase database team mitigates it with techniques such as group commit and parallel replication.
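The trade-off can be sketched in Python by modeling when the client is acknowledged relative to shipping the event to the replica. The `Primary` class and its methods are hypothetical; real MySQL semi-synchronous and group replication protocols are considerably more involved.

```python
class Primary:
    """Toy primary that replicates either synchronously or asynchronously."""

    def __init__(self, synchronous):
        self.synchronous = synchronous
        self.binlog = []         # events committed on the primary
        self.replica_log = []    # events the replica has received and persisted
        self.acked = []          # events the client was told succeeded

    def commit(self, event):
        self.binlog.append(event)
        if self.synchronous:
            self.ship()          # wait for the replica before acknowledging
        self.acked.append(event) # acknowledge the client (async mode: immediately)

    def ship(self):
        # Send every event the replica has not received yet.
        self.replica_log.extend(self.binlog[len(self.replica_log):])

    def lost_on_failover(self):
        """Acknowledged events that the replica never received."""
        return [e for e in self.acked if e not in self.replica_log]
```

If the primary crashes before `ship()` runs, the asynchronous version has acknowledged events the replica never saw, while the synchronous version loses nothing but waits on the replica for every commit.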

With master-slave replication in place, a read-write splitting policy can be implemented in the database DAO layer or through database middleware.
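A read-write splitting policy at the DAO layer can be as simple as routing by statement type. A minimal Python sketch, assuming plain SQL strings and hypothetical connection names; it treats any statement starting with SELECT as a read, whereas a real router also has to handle cases like SELECT ... FOR UPDATE and reads that must see a just-written value, because replicas lag behind the primary.

```python
import itertools


class ReadWriteRouter:
    """Send writes to the primary and spread reads round-robin over replicas."""

    def __init__(self, primary, replicas):
        self.primary = primary
        self._replicas = itertools.cycle(replicas)

    def route(self, sql, in_transaction=False):
        is_read = sql.lstrip().upper().startswith("SELECT")
        if is_read and not in_transaction:
            return next(self._replicas)
        return self.primary      # writes, and reads inside a transaction
```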

In fact, database logs have many other uses, such as using Canal (Alibaba open source project: incremental subscription & consumption based on MySQL database Binlog) to subscribe to the Binlog of the database, which can be used to update the cache, etc.
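How binlog change events might drive cache updates can be sketched in Python. The event format below (dicts with `table`, `type`, and `row` keys) is a hypothetical stand-in, not Canal's actual message API, and the cache is a plain dict keyed by a hypothetical `table:id` scheme.

```python
def apply_binlog_events(events, cache):
    """Keep a key-value cache in step with row changes read from the binlog."""
    for ev in events:
        key = f"{ev['table']}:{ev['row']['id']}"
        if ev["type"] in ("INSERT", "UPDATE"):
            cache[key] = ev["row"]       # refresh the cached copy of the row
        elif ev["type"] == "DELETE":
            cache.pop(key, None)         # drop rows that no longer exist
    return cache
```

Because the events come from the database's own log, the cache follows every committed change without the application having to remember to update it.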