This article mainly introduces you in detailC#The second part of the series of seven classic sorting algorithms, direct insertion sorting, Hill sorting and merge sorting, has certain reference value, interested friends We can refer to

Today I will talk to you about the last three sorts: direct insertion sort, Hill sort and merge sort.

Direct insertion sorting:

This kind of sorting is actually quite easy to understand. A very realistic example is our Landlord fight. When we catch a random hand of cards, We have to sort out the poker cards according to size. After 30 seconds, the poker cards are sorted out, 4 3's, 5 s's, wow... Recall how we sorted it out at that time.

The leftmost card is 3, the second card is 5, the third card is 3 again, quickly insert it behind the first card, the fourth card is 3 again, great joy, hurry up Insert it after the second card, and the fifth card is 3 again. I am ecstatic, haha, a cannon is born.

How about it? Algorithms are everywhere in life and have already been integrated into our lives and blood.

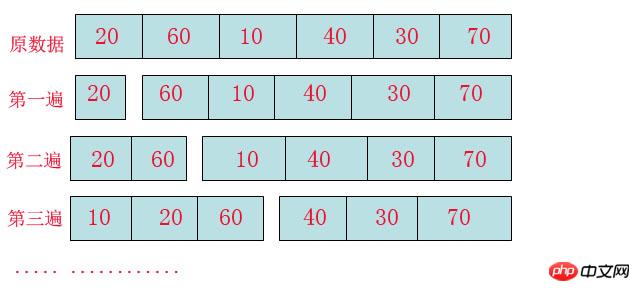

The following is an explanation of the above picture:

I don’t know if you can understand this picture. In insertion sort, Array It will be divided into two types, "ordered array block" and "unordered array block". Yes, in the first pass, a number 20 is extracted from the "unordered array block" as an ordered array block.

In the second pass, a number 60 is extracted from the "unordered array block" and placed in an orderly manner into the "ordered array block", that is, 20, 60.

The same goes for the third pass, but the difference is that 10 is found to be smaller than the value of the ordered array, so the 20 and 60 positions are moved back to free up a position for 10 to be inserted.

Then all insertions can be completed according to this rule.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 |

|

Hill sorting:

Look at "insertion sort": Actually it doesn't It is difficult to find that she has a shortcoming:

If the data is "5, 4, 3, 2, 1", at this time we will insert the records in the "unordered block" When we reach the "ordered block", we are expected to collapse, and the position will be moved every time we insert. At this time, the efficiency of insertion sort can be imagined.

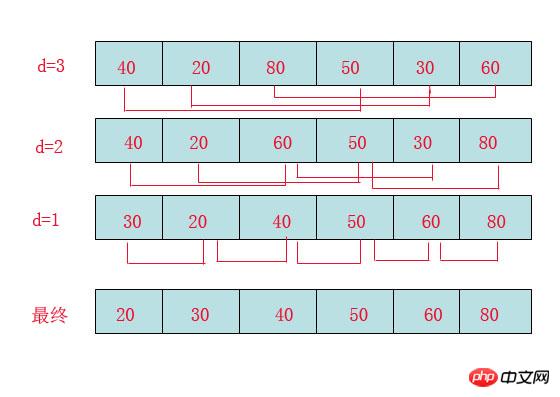

Shell has improved the algorithm based on this weakness and incorporated an idea called "Reduce incremental sorting method". It is actually quite simple, but something to note is:

The increment is not taken randomly, but has rules to follow.

First of all, we need to clarify how to choose the increment:

The method to choose the first increment is: d=count/2;

The method of taking the second incremental increase is: d = (count/2)/2;

## Finally until: d = 1;When d=3: Compare 40 with 50. Because 50 is larger, there is no exchange.

Comparing 20 with 30, because 30 is larger, there is no exchange.

Compare 80 with 60. Since 60 is smaller, exchange them.

When d=2: Compare 40 with 60 without exchanging, then exchange 60 with 30. At this time, the exchanged 30 is smaller than the previous 40, and 40 and 30 need to be exchanged, as shown in the picture above. .

Compare 20 with 50 without exchanging, and continue to compare 50 with 80 without exchanging.

d=1: This is the insertion sort mentioned earlier, but the sequence at this time is almost in order, so it brings a great performance improvement to the insertion sort.

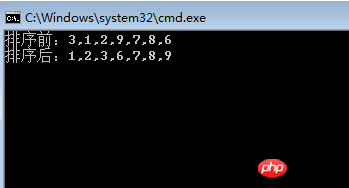

Since "Hill sort" is an improved version of "insertion sort", then we have to compare how much faster it can be among 10,000 numbers?

Let’s do a test:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 |

|

I hope you can see it The sorting has been optimized a lot. In the w-level sorting, the difference is more than 70 times.

I hope you can see it The sorting has been optimized a lot. In the w-level sorting, the difference is more than 70 times.

Personally feel that the sorting that we can easily understand is basically O (n^2), and the sorting that is more difficult to understand is basically O(n^2) It is N(LogN), so merge sorting is also difficult to understand, especially when it comes to writing the code

. It took me an entire afternoon to figure it out, hehe.

First look at the picture:

There are two things to do in merge sort:

There are two things to do in merge sort:

第一: “分”, 就是将数组尽可能的分,一直分到原子级别。

第二: “并”,将原子级别的数两两合并排序,最后产生结果。

代码:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 |

|

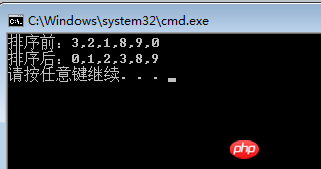

结果图:

ps:

插入排序的时间复杂度为:O(N^2)

希尔排序的时间复杂度为:平均为:O(N^3/2)

最坏:O(N^2)

归并排序时间复杂度为: O(NlogN)

空间复杂度为: O(N)

The above is the detailed content of Detailed explanation of graphic code of C# classic sorting algorithm (Part 2). For more information, please follow other related articles on the PHP Chinese website!