My First Lucky and Sad Hadoop Results

Recently I am playing with Hadoop per analyzing the data set I scraped from WEIBO.COM. After a couple of tryings, many are failed due to disk space shortage, after I decreased the input date set volumn, luckily I gained a completed Hadoop

Recently I am playing with Hadoop per analyzing the data set I scraped from WEIBO.COM. After a couple of tryings, many are failed due to disk space shortage, after I decreased the input date set volumn, luckily I gained a completed Hadoop Job results, but, sadly, with only 1000 lines of records processed.

Here is the Job Summary:

| Counter | Map | Reduce | Total |

| Bytes Read | 7,945,196 | 0 | 7,945,196 |

| FILE_BYTES_READ | 16,590,565,518 | 8,021,579,181 | 24,612,144,699 |

| HDFS_BYTES_READ | 7,945,580 | 0 | 7,945,580 |

| FILE_BYTES_WRITTEN | 24,612,303,774 | 8,021,632,091 | 32,633,935,865 |

| HDFS_BYTES_WRITTEN | 0 | 2,054,409,494 | 2,054,409,494 |

| Reduce input groups | 0 | 381,696,888 | 381,696,888 |

| Map output materialized bytes | 8,021,579,181 | 0 | 8,021,579,181 |

| Combine output records | 826,399,600 | 0 | 826,399,600 |

| Map input records | 1,000 | 0 | 1,000 |

| Reduce shuffle bytes | 0 | 8,021,579,181 | 8,021,579,181 |

| Physical memory (bytes) snapshot | 1,215,041,536 | 72,613,888 | 1,287,655,424 |

| Reduce output records | 0 | 381,696,888 | 381,696,888 |

| Spilled Records | 1,230,714,511 | 401,113,702 | 1,631,828,213 |

| Map output bytes | 7,667,457,405 | 0 | 7,667,457,405 |

| Total committed heap usage (bytes) | 1,038,745,600 | 29,097,984 | 1,067,843,584 |

| CPU time spent (ms) | 2,957,800 | 2,104,030 | 5,061,830 |

| Virtual memory (bytes) snapshot | 4,112,838,656 | 1,380,306,944 | 5,493,145,600 |

| SPLIT_RAW_BYTES | 384 | 0 | 384 |

| Map output records | 426,010,418 | 0 | 426,010,418 |

| Combine input records | 851,296,316 | 0 | 851,296,316 |

| Reduce input records | 0 | 401,113,702 | 401,113,702 |

From which we can see that, specially metrics which highlighted in bold style, I only passed in about 7MB data file with 1000 lines of records, but Reducer outputs 381,696,888 records, which are 2.1GB compressed gz file and some 9GB plain text when decompressed.

But clearly it’s not the problem of my code that leads to so much disk space usages, the above output metrics are all reasonable, although you may be surprised by the comparison between 7MB with only 1000 records input and 9GB with 381,696,888 records output. The truth is that I’m calculating co-appearance combination computation.

From this experimental I learned that my personal computer really cannot play with big elephant, input data records from the first 10 thousand down to 5 thousand to 3 thousand to ONE thousand at last, but data analytic should go on, I need to find a solution to work it out, actually I have 30 times of data need to process, that is 30 thousand records.

Yet still have a lot of work to do, and I plan to post some articles about what’s I have done with my big data :) and Hadoop so far.

---EOF---

原文地址:My First Lucky and Sad Hadoop Results, 感谢原作者分享。

Heiße KI -Werkzeuge

Undresser.AI Undress

KI-gestützte App zum Erstellen realistischer Aktfotos

AI Clothes Remover

Online-KI-Tool zum Entfernen von Kleidung aus Fotos.

Undress AI Tool

Ausziehbilder kostenlos

Clothoff.io

KI-Kleiderentferner

AI Hentai Generator

Erstellen Sie kostenlos Ai Hentai.

Heißer Artikel

Heiße Werkzeuge

Notepad++7.3.1

Einfach zu bedienender und kostenloser Code-Editor

SublimeText3 chinesische Version

Chinesische Version, sehr einfach zu bedienen

Senden Sie Studio 13.0.1

Leistungsstarke integrierte PHP-Entwicklungsumgebung

Dreamweaver CS6

Visuelle Webentwicklungstools

SublimeText3 Mac-Version

Codebearbeitungssoftware auf Gottesniveau (SublimeText3)

Heiße Themen

1378

1378

52

52

Java-Fehler: Hadoop-Fehler, wie man damit umgeht und sie vermeidet

Jun 24, 2023 pm 01:06 PM

Java-Fehler: Hadoop-Fehler, wie man damit umgeht und sie vermeidet

Jun 24, 2023 pm 01:06 PM

Java-Fehler: Hadoop-Fehler, wie man damit umgeht und sie vermeidet Wenn Sie Hadoop zur Verarbeitung großer Datenmengen verwenden, stoßen Sie häufig auf einige Java-Ausnahmefehler, die sich auf die Ausführung von Aufgaben auswirken und zum Scheitern der Datenverarbeitung führen können. In diesem Artikel werden einige häufige Hadoop-Fehler vorgestellt und Möglichkeiten aufgezeigt, mit ihnen umzugehen und sie zu vermeiden. Java.lang.OutOfMemoryErrorOutOfMemoryError ist ein Fehler, der durch unzureichenden Speicher der Java Virtual Machine verursacht wird. Wenn Hadoop ist

Verwendung von Hadoop und HBase in Beego für die Speicherung und Abfrage großer Datenmengen

Jun 22, 2023 am 10:21 AM

Verwendung von Hadoop und HBase in Beego für die Speicherung und Abfrage großer Datenmengen

Jun 22, 2023 am 10:21 AM

Mit dem Aufkommen des Big-Data-Zeitalters sind Datenverarbeitung und -speicherung immer wichtiger geworden und die effiziente Verwaltung und Analyse großer Datenmengen ist für Unternehmen zu einer Herausforderung geworden. Hadoop und HBase, zwei Projekte der Apache Foundation, bieten eine Lösung für die Speicherung und Analyse großer Datenmengen. In diesem Artikel wird erläutert, wie Sie Hadoop und HBase in Beego für die Speicherung und Abfrage großer Datenmengen verwenden. 1. Einführung in Hadoop und HBase Hadoop ist ein verteiltes Open-Source-Speicher- und Computersystem, das dies kann

Wie man PHP und Hadoop für die Big-Data-Verarbeitung verwendet

Jun 19, 2023 pm 02:24 PM

Wie man PHP und Hadoop für die Big-Data-Verarbeitung verwendet

Jun 19, 2023 pm 02:24 PM

Da die Datenmenge weiter zunimmt, sind herkömmliche Datenverarbeitungsmethoden den Herausforderungen des Big-Data-Zeitalters nicht mehr gewachsen. Hadoop ist ein Open-Source-Framework für verteiltes Computing, das das Leistungsengpassproblem löst, das durch Einzelknotenserver bei der Verarbeitung großer Datenmengen verursacht wird, indem große Datenmengen verteilt gespeichert und verarbeitet werden. PHP ist eine Skriptsprache, die in der Webentwicklung weit verbreitet ist und die Vorteile einer schnellen Entwicklung und einfachen Wartung bietet. In diesem Artikel wird die Verwendung von PHP und Hadoop für die Verarbeitung großer Datenmengen vorgestellt. Was ist HadoopHadoop ist

Entdecken Sie die Anwendung von Java im Bereich Big Data: Verständnis von Hadoop, Spark, Kafka und anderen Technologie-Stacks

Dec 26, 2023 pm 02:57 PM

Entdecken Sie die Anwendung von Java im Bereich Big Data: Verständnis von Hadoop, Spark, Kafka und anderen Technologie-Stacks

Dec 26, 2023 pm 02:57 PM

Java-Big-Data-Technologie-Stack: Verstehen Sie die Anwendung von Java im Bereich Big Data wie Hadoop, Spark, Kafka usw. Da die Datenmenge weiter zunimmt, ist die Big-Data-Technologie im heutigen Internetzeitalter zu einem heißen Thema geworden. Im Bereich Big Data hören wir oft die Namen Hadoop, Spark, Kafka und andere Technologien. Diese Technologien spielen eine entscheidende Rolle, und Java spielt als weit verbreitete Programmiersprache auch im Bereich Big Data eine große Rolle. Dieser Artikel konzentriert sich auf die Anwendung von Java im Großen und Ganzen

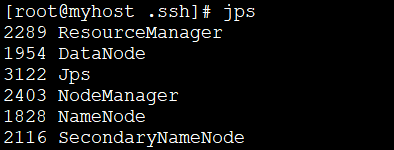

So installieren Sie Hadoop unter Linux

May 18, 2023 pm 08:19 PM

So installieren Sie Hadoop unter Linux

May 18, 2023 pm 08:19 PM

1: Installieren Sie JDK1. Führen Sie den folgenden Befehl aus, um das JDK1.8-Installationspaket herunterzuladen. wget--no-check-certificatehttps://repo.huaweicloud.com/java/jdk/8u151-b12/jdk-8u151-linux-x64.tar.gz2. Führen Sie den folgenden Befehl aus, um das heruntergeladene JDK1.8-Installationspaket zu dekomprimieren . tar-zxvfjdk-8u151-linux-x64.tar.gz3. Verschieben Sie das JDK-Paket und benennen Sie es um. mvjdk1.8.0_151//usr/java84. Konfigurieren Sie Java-Umgebungsvariablen. Echo'

Verwenden Sie PHP, um eine groß angelegte Datenverarbeitung zu erreichen: Hadoop, Spark, Flink usw.

May 11, 2023 pm 04:13 PM

Verwenden Sie PHP, um eine groß angelegte Datenverarbeitung zu erreichen: Hadoop, Spark, Flink usw.

May 11, 2023 pm 04:13 PM

Da die Datenmenge weiter zunimmt, ist die Datenverarbeitung in großem Maßstab zu einem Problem geworden, dem sich Unternehmen stellen und das sie lösen müssen. Herkömmliche relationale Datenbanken können diesen Bedarf nicht mehr decken. Für die Speicherung und Analyse großer Datenmengen sind verteilte Computerplattformen wie Hadoop, Spark und Flink die beste Wahl. Im Auswahlprozess von Datenverarbeitungstools erfreut sich PHP als einfach zu entwickelnde und zu wartende Sprache bei Entwicklern immer größerer Beliebtheit. In diesem Artikel werden wir untersuchen, wie und wie PHP für die Verarbeitung großer Datenmengen genutzt werden kann

Datenverarbeitungs-Engines in PHP (Spark, Hadoop usw.)

Jun 23, 2023 am 09:43 AM

Datenverarbeitungs-Engines in PHP (Spark, Hadoop usw.)

Jun 23, 2023 am 09:43 AM

Im aktuellen Internetzeitalter ist die Verarbeitung großer Datenmengen ein Problem, mit dem sich jedes Unternehmen und jede Institution auseinandersetzen muss. Als weit verbreitete Programmiersprache muss PHP auch in der Datenverarbeitung mit der Zeit gehen. Um große Datenmengen effizienter zu verarbeiten, hat die PHP-Entwicklung einige Big-Data-Verarbeitungstools wie Spark und Hadoop eingeführt. Spark ist eine Open-Source-Datenverarbeitungs-Engine, die für die verteilte Verarbeitung großer Datenmengen verwendet werden kann. Das größte Merkmal von Spark ist seine schnelle Datenverarbeitungsgeschwindigkeit und effiziente Datenspeicherung.

Vergleichs- und Anwendungsszenarien von Redis und Hadoop

Jun 21, 2023 am 08:28 AM

Vergleichs- und Anwendungsszenarien von Redis und Hadoop

Jun 21, 2023 am 08:28 AM

Redis und Hadoop sind beide häufig verwendete Systeme zur verteilten Datenspeicherung und -verarbeitung. Es gibt jedoch offensichtliche Unterschiede zwischen den beiden hinsichtlich Design, Leistung, Nutzungsszenarien usw. In diesem Artikel werden wir die Unterschiede zwischen Redis und Hadoop im Detail vergleichen und ihre anwendbaren Szenarien untersuchen. Redis-Übersicht Redis ist ein speicherbasiertes Open-Source-Datenspeichersystem, das mehrere Datenstrukturen und effiziente Lese- und Schreibvorgänge unterstützt. Zu den Hauptfunktionen von Redis gehören: Speicher: Redis