The Simplest Data Extraction Ever

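As a full-time DBA, I often received this kind of request in previous jobs: work with the developers to periodically pull the data they need from several source tables into a staging (temporary) table, from which the developers then read the data and display it on front-end pages.

When I get a request like this, I think the following points need to be considered:

(1) Which source tables are involved and how they are related; this determines how I retrieve the data.

(2) Scheduled extraction means the data is pulled periodically: what is the extraction period, i.e., how often should an extraction run?

(3) Because the data is extracted periodically, the staging tables need a naming rule, usually "tablename_datetime", which makes it easy for the front-end developers to find the right table.

(4) Because the data is extracted periodically, we also need to decide how long staging tables are kept and how they are cleaned up, so that tablespace usage does not grow too high.

(5) If each extraction takes a long time, there should be a way to monitor it, so we can check the progress of the current run.

(6) If a problem is found during an extraction, the extraction stored procedure must be rerunnable.

In other words, when you run the procedure a second time, the dirty data extracted by the earlier run must be cleared out first.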

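So what is data extraction?

Put simply, it is the process of pulling the data you are interested in out of the raw data.

With the six points above in mind, let's simulate a very simple data extraction case.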

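(I) Table structures and column descriptions

(II) Simulated business requirements

(1) From the emp and dept tables, extract emp.empno, emp.ename, emp.job, emp.deptno, dept.dname, dept.loc and sysdate to build the staging table T_EMP_DEPT.

(2) Relationship between emp and dept: emp.deptno references dept.deptno.

(3) Every day at 13:00, the developers query the staging table produced that day.

(4) Each staging table is kept for 30 days; tables older than 30 days can be cleaned up.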
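Requirements (1) and (2) boil down to a CREATE TABLE ... AS SELECT over the deptno join. The Python sketch below uses the standard-library sqlite3 module as a stand-in for Oracle, with a few sample rows modeled on scott's emp and dept (not the full tables); the helper build_staging_table and the date in the table name are hypothetical. Dropping any existing copy before rebuilding is what lets the job be rerun without duplicating rows, as point (6) asks.

```python
import sqlite3


def build_staging_table(conn: sqlite3.Connection, table_name: str) -> int:
    """Rebuild table_name from the emp/dept join and return its row count.

    table_name is interpolated into the SQL, so it must come from our own
    naming rule (e.g. T_EMP_DEPT_yyyymmdd), never from user input.
    """
    # Remove any copy left by an earlier (possibly failed) run so a rerun starts clean.
    conn.execute(f"DROP TABLE IF EXISTS {table_name}")
    # SQLite equivalent of Oracle's CREATE TABLE ... AS SELECT with the deptno join.
    conn.execute(
        f"""CREATE TABLE {table_name} AS
            SELECT e.empno, e.ename, e.job, e.deptno, d.dname, d.loc,
                   datetime('now') AS extract_time
              FROM emp e JOIN dept d ON e.deptno = d.deptno"""
    )
    conn.commit()
    return conn.execute(f"SELECT count(*) FROM {table_name}").fetchone()[0]


# Sample rows modeled on the scott schema (not the full tables).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dept (deptno INTEGER PRIMARY KEY, dname TEXT, loc TEXT);
    CREATE TABLE emp (empno INTEGER PRIMARY KEY, ename TEXT, job TEXT,
                      deptno INTEGER REFERENCES dept(deptno));
    INSERT INTO dept VALUES (10, 'ACCOUNTING', 'NEW YORK'), (20, 'RESEARCH', 'DALLAS');
    INSERT INTO emp VALUES (7782, 'CLARK', 'MANAGER', 10),
                           (7369, 'SMITH', 'CLERK', 20),
                           (7788, 'SCOTT', 'ANALYST', 20);
""")

first = build_staging_table(conn, "T_EMP_DEPT_20240101")
second = build_staging_table(conn, "T_EMP_DEPT_20240101")  # rerun: no duplicate rows
print(first, second)  # 3 3
```

Running it twice returns the same count, because the second call starts from a freshly dropped table rather than appending to the old one.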

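(III) Proposed solution

(1) Join emp and dept on deptno to select the required columns, and create the staging table T_EMP_DEPT from the result.

(2) Extract once a day; it is enough to have the staging table created before 13:00 each day.

(3) Naming rule for the staging table: T_EMP_DEPT_yyyymmdd (the current system date).

(4) In the extraction stored procedure, add logic that reads the current system date and removes the staging table from 30 days earlier (truncate first, then drop).

(5) Write a separate stored procedure and log table that record the progress of each extraction, so we can monitor it.

(6) In the extraction stored procedure, always truncate and drop the day's staging table first, then run the extraction, so the procedure can be rerun safely.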
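Points (3) and (4) of the plan are plain date arithmetic. The Python sketch below (hypothetical helper names; the author's real logic is the PL/SQL procedure in step 3) builds today's table name and picks the tables that have reached the 30-day limit. It deliberately compares every dated table against the cutoff instead of looking up only the table from exactly 30 days ago, so tables left behind on days when the job did not run are caught too; this is a variation on the author's approach, not a copy of it.

```python
from datetime import date, datetime, timedelta

PREFIX = "T_EMP_DEPT_"  # naming rule from point (3): T_EMP_DEPT_yyyymmdd
RETENTION_DAYS = 30     # point (4): keep each staging table for 30 days


def staging_table_name(day: date) -> str:
    """Python equivalent of 'T_EMP_DEPT_' || to_char(sysdate, 'yyyymmdd')."""
    return PREFIX + day.strftime("%Y%m%d")


def expired_tables(existing: list[str], today: date) -> list[str]:
    """Return the staging tables dated RETENTION_DAYS or more days before today."""
    cutoff = today - timedelta(days=RETENTION_DAYS)
    expired = []
    for name in existing:
        if not name.startswith(PREFIX):
            continue  # unrelated table such as EMP
        try:
            table_day = datetime.strptime(name[len(PREFIX):], "%Y%m%d").date()
        except ValueError:
            continue  # prefix matches but the suffix is not a yyyymmdd date
        if table_day <= cutoff:
            expired.append(name)
    return expired


today = date(2024, 3, 31)
print(staging_table_name(today))  # T_EMP_DEPT_20240331
tables = ["T_EMP_DEPT_20240227", "T_EMP_DEPT_20240301", "T_EMP_DEPT_20240302", "EMP"]
print(expired_tables(tables, today))  # ['T_EMP_DEPT_20240227', 'T_EMP_DEPT_20240301']
```

In Oracle, the list of existing tables could come from `select table_name from user_tables where table_name like 'T\_EMP\_DEPT\_%' escape '\'`; the escape is needed because `_` is a LIKE wildcard.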

Now that everything has been thought through, let's get hands-on.

1. Create the log table (stores the extraction progress)

[Screenshot: creating the log table]

2. Create the stored procedure for monitoring progress

[Screenshot: stored procedure for monitoring extraction progress]
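Steps 1 and 2 were shown only as screenshots, so their exact DDL is not available. Going only by how prc_emp_dept calls P_INSERT_LOG (a timestamp, the procedure name, a two-digit step code and a message), the log table and logging routine might look roughly like the Python/sqlite3 sketch below; the names extract_log, insert_log and latest_step and all column names are hypothetical, not the author's definitions.

```python
import sqlite3
from datetime import datetime


def create_log_table(conn: sqlite3.Connection) -> None:
    """Hypothetical log table whose columns mirror P_INSERT_LOG's four arguments."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS extract_log (
               log_time   TEXT NOT NULL,  -- when the step finished
               proc_name  TEXT NOT NULL,  -- e.g. 'prc_emp_dept'
               step_code  TEXT NOT NULL,  -- '00', '01', ... in execution order
               log_detail TEXT            -- free-text message
           )"""
    )


def insert_log(conn: sqlite3.Connection, log_time: datetime,
               proc_name: str, step_code: str, detail: str) -> None:
    """Counterpart of P_INSERT_LOG(sysdate, 'prc_emp_dept', '01', log_detail)."""
    conn.execute(
        "INSERT INTO extract_log VALUES (?, ?, ?, ?)",
        (log_time.strftime("%Y-%m-%d %H:%M:%S"), proc_name, step_code, detail),
    )
    conn.commit()  # commit each entry so a monitoring session sees it immediately


def latest_step(conn: sqlite3.Connection, proc_name: str):
    """Most recent (step_code, log_detail) for proc_name, or None if nothing is logged."""
    return conn.execute(
        "SELECT step_code, log_detail FROM extract_log WHERE proc_name = ? "
        "ORDER BY log_time DESC, step_code DESC LIMIT 1",
        (proc_name,),
    ).fetchone()


conn = sqlite3.connect(":memory:")
create_log_table(conn)
insert_log(conn, datetime(2024, 1, 1, 12, 0, 0), "prc_emp_dept", "00", "staging table dropped")
insert_log(conn, datetime(2024, 1, 1, 12, 0, 5), "prc_emp_dept", "01", "staging table created")
print(latest_step(conn, "prc_emp_dept"))  # ('01', 'staging table created')
```

latest_step is the kind of query you would run from a second session while an extraction is in progress. In Oracle, a logging procedure like P_INSERT_LOG is often declared with PRAGMA AUTONOMOUS_TRANSACTION so that its own commit is independent of the caller's transaction; whether the author's version does this is not shown.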

3. Create the data extraction stored procedure

```sql
CREATE OR REPLACE PROCEDURE prc_emp_dept AUTHID CURRENT_USER IS
  table_name_1 VARCHAR2(100);   -- staging table name
  table_flag   NUMBER;          -- whether the staging table exists: 0 = no, 1 = yes
  create_sql   VARCHAR2(5000);  -- CREATE TABLE ... AS SELECT statement
  insert_sql   VARCHAR2(5000);  -- INSERT statement
  date_30      VARCHAR2(20);    -- expiry date: 30 days ago
  date_cur     VARCHAR2(20);    -- current date
  log_detail   VARCHAR2(4000);  -- log message
BEGIN
  date_cur     := to_char(sysdate, 'yyyymmdd');       -- current date
  date_30      := to_char(sysdate - 30, 'yyyymmdd');  -- date 30 days ago
  table_flag   := 0;                                  -- initial state: table does not exist
  table_name_1 := 'T_EMP_DEPT_' || date_cur;          -- naming rule: T_EMP_DEPT_yyyymmdd

  -- If today's staging table already exists, truncate and then drop it (makes the procedure rerunnable)
  SELECT count(*) INTO table_flag
    FROM user_tables
   WHERE table_name = table_name_1;

  IF table_flag = 1 THEN
    EXECUTE IMMEDIATE 'truncate table ' || table_name_1;
    EXECUTE IMMEDIATE 'drop table ' || table_name_1;
    log_detail := 'Staging table dropped at: ' || to_char(sysdate, 'yyyy-mm-dd hh24:mi:ss');
    P_INSERT_LOG(sysdate, 'prc_emp_dept', '00', log_detail);
  END IF;

  -- Create the staging table T_EMP_DEPT_yyyymmdd
  create_sql := 'create table ' || table_name_1 || ' nologging as
                 select e.EMPNO, e.ENAME, e.JOB, e.MGR, e.HIREDATE, e.SAL, e.COMM,
                        e.DEPTNO, d.DNAME, d.LOC, sysdate as current_time
                   from emp e, dept d
                  where e.deptno = d.deptno';
  EXECUTE IMMEDIATE create_sql;
  log_detail := 'Staging table created at: ' || to_char(sysdate, 'yyyy-mm-dd hh24:mi:ss');
  P_INSERT_LOG(sysdate, 'prc_emp_dept', '01', log_detail);

  -- Insert the table into itself 20 times. Each pass doubles the row count, which
  -- simulates a long-running extraction so the progress log has something to show.
  -- Log steps '02' .. '21' correspond to passes 1 .. 20.
  insert_sql := 'insert into ' || table_name_1 || ' select * from ' || table_name_1;
  FOR i IN 1 .. 20 LOOP
    EXECUTE IMMEDIATE insert_sql;
    COMMIT;
    log_detail := 'Insert pass ' || i || ' into staging table finished at: '
                  || to_char(sysdate, 'yyyy-mm-dd hh24:mi:ss');
    P_INSERT_LOG(sysdate, 'prc_emp_dept', to_char(i + 1, 'fm00'), log_detail);
  END LOOP;

  P_INSERT_LOG(sysdate, 'prc_emp_dept', '22', 'Data extraction finished!');

  -- Drop the staging table from 30 days ago (truncate first, then drop)
  SELECT count(*) INTO table_flag
    FROM user_tables
   WHERE table_name = 'T_EMP_DEPT_' || date_30;

  IF table_flag = 1 THEN  -- found the table from 30 days ago
    EXECUTE IMMEDIATE 'truncate table T_EMP_DEPT_' || date_30;
    EXECUTE IMMEDIATE 'drop table T_EMP_DEPT_' || date_30;
    log_detail := 'Staging table from 30 days ago dropped at: ' || to_char(sysdate, 'yyyy-mm-dd hh24:mi:ss');
    P_INSERT_LOG(sysdate, 'prc_emp_dept', '23', log_detail);
  END IF;
END prc_emp_dept;
/
```

4. As sys, explicitly grant the required privileges directly to scott, so that the procedure does not fail with an insufficient-privileges error when dbms_job calls it.

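5. Check the monitoring log table (it is new, so it is still empty)

6. Run the extraction stored procedure manually

7. Check the monitoring log table again (the records are now quite detailed)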
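Each of the 20 `insert into ... select * from` passes copies the staging table into itself, so the row count doubles on every pass; that is what makes the run slow enough for the log to be worth watching. Assuming the standard 14-row scott.emp, in which every employee has a matching department, the table starts with 14 rows and holds 14 × 2^n rows after n passes. The short Python calculation below is an illustration of that arithmetic, not output from the author's run; it prints the expected row count next to each log step code.

```python
INITIAL_ROWS = 14  # assumption: standard scott.emp, every row joins to dept
PASSES = 20        # number of self-insert passes in prc_emp_dept


def rows_after(passes: int, initial: int = INITIAL_ROWS) -> int:
    """Row count after `passes` rounds of INSERT INTO t SELECT * FROM t."""
    rows = initial
    for _ in range(passes):
        rows += rows  # each pass copies every row that already exists
    return rows


for n in range(1, PASSES + 1):
    step_code = f"{n + 1:02d}"  # log step '02' is pass 1, ..., '21' is pass 20
    print(step_code, rows_after(n))
# last line printed: 21 14680064
```

Because of the doubling, the final pass alone inserts 7,340,032 rows, so the gaps between successive log timestamps should grow roughly twofold toward the end of the run.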

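Friends, at this point the data extraction, the progress monitoring and the business requirements are essentially all done.

The one remaining item is to make the extraction stored procedure a scheduled task that runs periodically.

There are two common ways to schedule tasks:

a. crontab (at the operating-system level)

b. dbms_job (built into Oracle)

Add our extraction stored procedure to a scheduled task and let it run periodically on its own.

8. I used dbms_job to schedule the procedure.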
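Requirement (3) says the table must exist by 13:00, so one run a day shortly before that is enough. With dbms_job this is normally expressed through the interval argument of DBMS_JOB.SUBMIT, for example `TRUNC(SYSDATE) + 1 + 12/24` (noon on the following day); the equivalent crontab schedule would be `0 12 * * *`. The 12:00 run time is an assumption. The Python sketch below only models the date arithmetic of that interval expression and contrasts it with `SYSDATE + 1`, which is counted from the actual start time and therefore drifts whenever a run starts late.

```python
from datetime import datetime, time, timedelta

RUN_AT = time(12, 0)  # hypothetical run time, before the 13:00 read deadline


def next_run(now: datetime) -> datetime:
    """Mirror of the interval expression TRUNC(SYSDATE) + 1 + 12/24.

    Always the next calendar day at RUN_AT, measured from midnight, so the
    schedule does not drift even if a run starts late or takes a long time.
    """
    tomorrow = now.date() + timedelta(days=1)
    return datetime.combine(tomorrow, RUN_AT)


def drifting_next_run(now: datetime) -> datetime:
    """Mirror of SYSDATE + 1: one day after the actual start time, so it drifts."""
    return now + timedelta(days=1)


start = datetime(2024, 1, 1, 12, 7, 30)  # a run that started 7.5 minutes late
print(next_run(start))           # 2024-01-02 12:00:00
print(drifting_next_run(start))  # 2024-01-02 12:07:30
```

Oracle's documentation recommends DBMS_SCHEDULER over DBMS_JOB for new jobs; there the same schedule can be written as `repeat_interval => 'FREQ=DAILY;BYHOUR=12;BYMINUTE=0;BYSECOND=0'`.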

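That's it, friends: this walkthrough of the simplest automatic data extraction ever is complete!

This article covered the following topics:

1. Multi-table SQL joins

2. Analyzing and thinking through the business requirements

3. Automatic data extraction

4. Monitoring extraction progress

5. Scheduled tasks

6. Table management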

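Closing remarks:

As you have probably noticed, the user in this article is scott, Oracle's standard practice user.

This article is a small case study from one of my classes. My students responded well and said it was easy to follow.

So I ported this small case study to my 51CTO blog to share it with more people who need it!

Everyone procrastinates and is a little afraid of trying new things, so I would like to dedicate this article:

to friends who want to do data extraction,

to friends interested in data processing,

to friends who want to learn Oracle development,

to friends whose actions lag behind their intentions,

to friends who want to learn.

P.S. All the code in this article was tested by hand. If you hit an error while following along, please check your code carefully.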

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Use ddrescue to recover data on Linux

Mar 20, 2024 pm 01:37 PM

Use ddrescue to recover data on Linux

Mar 20, 2024 pm 01:37 PM

DDREASE is a tool for recovering data from file or block devices such as hard drives, SSDs, RAM disks, CDs, DVDs and USB storage devices. It copies data from one block device to another, leaving corrupted data blocks behind and moving only good data blocks. ddreasue is a powerful recovery tool that is fully automated as it does not require any interference during recovery operations. Additionally, thanks to the ddasue map file, it can be stopped and resumed at any time. Other key features of DDREASE are as follows: It does not overwrite recovered data but fills the gaps in case of iterative recovery. However, it can be truncated if the tool is instructed to do so explicitly. Recover data from multiple files or blocks to a single

Open source! Beyond ZoeDepth! DepthFM: Fast and accurate monocular depth estimation!

Apr 03, 2024 pm 12:04 PM

Open source! Beyond ZoeDepth! DepthFM: Fast and accurate monocular depth estimation!

Apr 03, 2024 pm 12:04 PM

0.What does this article do? We propose DepthFM: a versatile and fast state-of-the-art generative monocular depth estimation model. In addition to traditional depth estimation tasks, DepthFM also demonstrates state-of-the-art capabilities in downstream tasks such as depth inpainting. DepthFM is efficient and can synthesize depth maps within a few inference steps. Let’s read about this work together ~ 1. Paper information title: DepthFM: FastMonocularDepthEstimationwithFlowMatching Author: MingGui, JohannesS.Fischer, UlrichPrestel, PingchuanMa, Dmytr

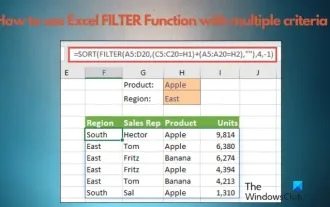

How to use Excel filter function with multiple conditions

Feb 26, 2024 am 10:19 AM

How to use Excel filter function with multiple conditions

Feb 26, 2024 am 10:19 AM

If you need to know how to use filtering with multiple criteria in Excel, the following tutorial will guide you through the steps to ensure you can filter and sort your data effectively. Excel's filtering function is very powerful and can help you extract the information you need from large amounts of data. This function can filter data according to the conditions you set and display only the parts that meet the conditions, making data management more efficient. By using the filter function, you can quickly find target data, saving time in finding and organizing data. This function can not only be applied to simple data lists, but can also be filtered based on multiple conditions to help you locate the information you need more accurately. Overall, Excel’s filtering function is a very practical

Google is ecstatic: JAX performance surpasses Pytorch and TensorFlow! It may become the fastest choice for GPU inference training

Apr 01, 2024 pm 07:46 PM

Google is ecstatic: JAX performance surpasses Pytorch and TensorFlow! It may become the fastest choice for GPU inference training

Apr 01, 2024 pm 07:46 PM

The performance of JAX, promoted by Google, has surpassed that of Pytorch and TensorFlow in recent benchmark tests, ranking first in 7 indicators. And the test was not done on the TPU with the best JAX performance. Although among developers, Pytorch is still more popular than Tensorflow. But in the future, perhaps more large models will be trained and run based on the JAX platform. Models Recently, the Keras team benchmarked three backends (TensorFlow, JAX, PyTorch) with the native PyTorch implementation and Keras2 with TensorFlow. First, they select a set of mainstream

The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks

Apr 29, 2024 pm 06:55 PM

The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks

Apr 29, 2024 pm 06:55 PM

I cry to death. The world is madly building big models. The data on the Internet is not enough. It is not enough at all. The training model looks like "The Hunger Games", and AI researchers around the world are worrying about how to feed these data voracious eaters. This problem is particularly prominent in multi-modal tasks. At a time when nothing could be done, a start-up team from the Department of Renmin University of China used its own new model to become the first in China to make "model-generated data feed itself" a reality. Moreover, it is a two-pronged approach on the understanding side and the generation side. Both sides can generate high-quality, multi-modal new data and provide data feedback to the model itself. What is a model? Awaker 1.0, a large multi-modal model that just appeared on the Zhongguancun Forum. Who is the team? Sophon engine. Founded by Gao Yizhao, a doctoral student at Renmin University’s Hillhouse School of Artificial Intelligence.

Slow Cellular Data Internet Speeds on iPhone: Fixes

May 03, 2024 pm 09:01 PM

Slow Cellular Data Internet Speeds on iPhone: Fixes

May 03, 2024 pm 09:01 PM

Facing lag, slow mobile data connection on iPhone? Typically, the strength of cellular internet on your phone depends on several factors such as region, cellular network type, roaming type, etc. There are some things you can do to get a faster, more reliable cellular Internet connection. Fix 1 – Force Restart iPhone Sometimes, force restarting your device just resets a lot of things, including the cellular connection. Step 1 – Just press the volume up key once and release. Next, press the Volume Down key and release it again. Step 2 – The next part of the process is to hold the button on the right side. Let the iPhone finish restarting. Enable cellular data and check network speed. Check again Fix 2 – Change data mode While 5G offers better network speeds, it works better when the signal is weaker

The U.S. Air Force showcases its first AI fighter jet with high profile! The minister personally conducted the test drive without interfering during the whole process, and 100,000 lines of code were tested for 21 times.

May 07, 2024 pm 05:00 PM

The U.S. Air Force showcases its first AI fighter jet with high profile! The minister personally conducted the test drive without interfering during the whole process, and 100,000 lines of code were tested for 21 times.

May 07, 2024 pm 05:00 PM

Recently, the military circle has been overwhelmed by the news: US military fighter jets can now complete fully automatic air combat using AI. Yes, just recently, the US military’s AI fighter jet was made public for the first time and the mystery was unveiled. The full name of this fighter is the Variable Stability Simulator Test Aircraft (VISTA). It was personally flown by the Secretary of the US Air Force to simulate a one-on-one air battle. On May 2, U.S. Air Force Secretary Frank Kendall took off in an X-62AVISTA at Edwards Air Force Base. Note that during the one-hour flight, all flight actions were completed autonomously by AI! Kendall said - "For the past few decades, we have been thinking about the unlimited potential of autonomous air-to-air combat, but it has always seemed out of reach." However now,

The first robot to autonomously complete human tasks appears, with five fingers that are flexible and fast, and large models support virtual space training

Mar 11, 2024 pm 12:10 PM

The first robot to autonomously complete human tasks appears, with five fingers that are flexible and fast, and large models support virtual space training

Mar 11, 2024 pm 12:10 PM

This week, FigureAI, a robotics company invested by OpenAI, Microsoft, Bezos, and Nvidia, announced that it has received nearly $700 million in financing and plans to develop a humanoid robot that can walk independently within the next year. And Tesla’s Optimus Prime has repeatedly received good news. No one doubts that this year will be the year when humanoid robots explode. SanctuaryAI, a Canadian-based robotics company, recently released a new humanoid robot, Phoenix. Officials claim that it can complete many tasks autonomously at the same speed as humans. Pheonix, the world's first robot that can autonomously complete tasks at human speeds, can gently grab, move and elegantly place each object to its left and right sides. It can autonomously identify objects