Deciding to Completely Give Up on Studying MooseFS

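I have some understanding of distributed systems, but I had never dug into the code to study a concrete implementation. After asking around in a chat group and googling some material myself, I decided to start with MooseFS for a deeper study of distributed systems. The first step was to find documentation online and install and deploy it myself. The official website has a Chinese installation manual, which is very nice, and the whole installation and configuration went smoothly, so my first impression was good. The next step was reading its code. After reading it I was somewhat disappointed. In strerr_init(), the first function called from main(), I saw code like this: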

for (n=0 ; errtab[n].str ; n++) {}

static errent errtab[] = {

#ifdef E2BIG

{E2BIG,"E2BIG (Argument list too long)"},

#endif

#ifdef EACCES

{EACCES,"EACCES (Permission denied)"},

#endif

......

{0,NULL}

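};

Another place made me feel that the author was careless when writing the code: cfg_reload() defines a buffer, linebuff[1000], to hold each line read while parsing the configuration file. mfs reads the configuration file line by line, and each line looks like "key=value". The buffer length of 1000 puzzles me. When defining a buffer or allocating memory, one usually considers alignment and rounds the size to a multiple of the word size or the CPU cache line length. Why 1000?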
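To make the line-by-line "key=value" parsing described above concrete, here is a minimal sketch in C. It is not the MooseFS implementation: the names `LINE_BUF_LEN`, `trim`, `parse_line` and `read_config` are hypothetical, the 1024-byte buffer is just one power-of-two choice, and treating `#` as a comment marker is an assumption.

```c
#include <stdio.h>
#include <string.h>

#define LINE_BUF_LEN 1024  /* hypothetical size; the code under discussion uses linebuff[1000] */

/* Strip leading and trailing whitespace in place and return the trimmed start. */
char *trim(char *s) {
    while (*s == ' ' || *s == '\t') {
        s++;
    }
    size_t n = strlen(s);
    while (n > 0 && (s[n - 1] == ' ' || s[n - 1] == '\t' ||
                     s[n - 1] == '\n' || s[n - 1] == '\r')) {
        s[--n] = '\0';
    }
    return s;
}

/* Split "key = value" in place.
 * Returns 1 on success, 0 for blank lines, comments, or lines without a key. */
int parse_line(char *line, char **key, char **value) {
    char *p = trim(line);
    if (*p == '\0' || *p == '#') {   /* assumption: '#' starts a comment */
        return 0;
    }
    char *eq = strchr(p, '=');
    if (eq == NULL) {
        return 0;
    }
    *eq = '\0';
    *key = trim(p);
    *value = trim(eq + 1);
    return **key != '\0';
}

/* Read a config stream line by line and report each pair to on_pair (may be NULL).
 * Returns the number of pairs found. */
int read_config(FILE *fp, void (*on_pair)(const char *key, const char *value)) {
    char linebuff[LINE_BUF_LEN];
    int count = 0;
    while (fgets(linebuff, sizeof linebuff, fp) != NULL) {
        char *key, *value;
        if (parse_line(linebuff, &key, &value)) {
            if (on_pair != NULL) {
                on_pair(key, value);
            }
            count++;
        }
    }
    return count;
}
```

Note that with `fgets()` a line longer than the buffer is silently split across two reads, whatever the buffer size is, so a real parser would need to detect a missing trailing newline.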

The code also contains many useless, redundant checks. Take the following snippet:

logappname = cfg_getstr("SYSLOG_IDENT",STR(APPNAME));

if (rundaemon) {

if (logappname[0]) {

openlog(logappname, LOG_PID | LOG_NDELAY , LOG_DAEMON);

} else {

openlog(STR(APPNAME), LOG_PID | LOG_NDELAY , LOG_DAEMON);

}

}

The check comes just one blank line after the assignment. It could hardly be more unnecessary.
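One way the snippet could be tightened is sketched below. The helper name `pick_ident` is hypothetical. The sketch also assumes, without having verified it, that `cfg_getstr()` may return an empty string when the key is present but blank, which is why a single emptiness check is kept while the two `openlog()` calls collapse into one.

```c
#include <stddef.h>

/* Return the configured identifier, or the fallback when it is NULL or empty. */
const char *pick_ident(const char *configured, const char *fallback) {
    return (configured != NULL && configured[0] != '\0') ? configured : fallback;
}
```

With such a helper the call site would shrink to something like `openlog(pick_ident(logappname, STR(APPNAME)), LOG_PID | LOG_NDELAY, LOG_DAEMON);` inside the `if (rundaemon)` block.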

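Setting these details aside, the I/O multiplexing mechanism mfs chose is also hard to praise. For watching read and write events on sockets today, the first choice is without question eventpoll (epoll). If I remember correctly, MooseFS was started in 2007, by which time the kernel's eventpoll mechanism was already mature, so I really do not understand why the author chose poll() as the I/O multiplexing interface. Before going on, look at the following code: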

i = poll(pdesc,ndesc,50);

......

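if (i

I do not understand why the code checks for EAGAIN when poll() returns -1. I checked the man page, which lists only EFAULT, EINTR, EINVAL and ENOMEM, and I looked at the kernel code, which has no path that returns EAGAIN either. I also do not understand why it calls usleep() to wait 100 milliseconds on EAGAIN.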
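For context, the POSIX specification of poll() does list EAGAIN (allocation of internal data structures failed, but a later request may succeed), even though the Linux error list does not, so a check like this may simply be there for portability. The sketch below shows one way to retry on both EINTR and EAGAIN. `poll_retry` is a hypothetical name, not a MooseFS function, and unlike the code discussed above it retries immediately instead of sleeping.

```c
#include <errno.h>
#include <poll.h>

/* Call poll() and retry when it fails with EINTR or EAGAIN.
 * Returns the number of ready descriptors (0 on timeout), or -1 with errno
 * set for any other error. Each retry restarts the full timeout. */
int poll_retry(struct pollfd *fds, nfds_t nfds, int timeout_ms) {
    for (;;) {
        int n = poll(fds, nfds, timeout_ms);
        if (n >= 0) {
            return n;
        }
        if (errno != EINTR && errno != EAGAIN) {
            return -1;
        }
    }
}
```

A caller that needs an accurate overall deadline would have to subtract the elapsed time before each retry. This sketch keeps the timeout fixed for brevity.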

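<p> What <strong>completely</strong> broke my confidence was the matoclserv_gotpacket() function. It handles three different cases depending on the value of eptr->registered, and much of the code in those three blocks could be merged. Either wrap each case in its own function or merge the three blocks into one. Wouldn't that be cleaner? Many of the switch-case branches do not need to distinguish the value of eptr->registered at all, so why write something this bloated? Did the author just copy and paste for convenience? In any case I cannot make sense of it, and I cannot keep reading code in this style, so I have <strong>decided</strong> to <strong>give up</strong>. I know that quitting halfway is bad, but my own coding style is not great to begin with and I am afraid it would drift even further after reading this, so I am <strong>giving up</strong> without hesitation!</p>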
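The merge suggested here could be sketched as a single lookup table, where each row records which kinds of connection may send a given message type. Everything in this sketch is hypothetical and only stands in for the MooseFS code: the `conn` struct, the `ALLOW_*` flags, the message numbers and the two handlers.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical connection classes, standing in for the three cases of eptr->registered. */
enum { ALLOW_UNREGISTERED = 1, ALLOW_MOUNT = 2, ALLOW_TOOLS = 4 };

/* Made-up message numbers, for illustration only. */
enum { MSG_REGISTER = 1, MSG_STATFS = 2 };

typedef struct conn { int last_handled; } conn;  /* stand-in for matoclserventry */
typedef void (*handler_fn)(conn *c, const uint8_t *data, uint32_t length);

typedef struct {
    uint32_t type;       /* message type */
    unsigned allowed;    /* bitmask of connection classes that may send it */
    handler_fn fn;       /* handler to call */
} dispatch_entry;

static void handle_register(conn *c, const uint8_t *data, uint32_t length) {
    (void)data; (void)length;
    c->last_handled = MSG_REGISTER;
}

static void handle_statfs(conn *c, const uint8_t *data, uint32_t length) {
    (void)data; (void)length;
    c->last_handled = MSG_STATFS;
}

static const dispatch_entry dispatch_table[] = {
    { MSG_REGISTER, ALLOW_UNREGISTERED | ALLOW_MOUNT | ALLOW_TOOLS, handle_register },
    { MSG_STATFS,   ALLOW_MOUNT,                                    handle_statfs   },
};

/* Find the row matching both the message type and the connection class.
 * Returns 1 if a handler ran, 0 if the message is unknown or not allowed. */
int dispatch(conn *c, unsigned conn_class, uint32_t type,
             const uint8_t *data, uint32_t length) {
    size_t rows = sizeof dispatch_table / sizeof dispatch_table[0];
    for (size_t i = 0; i < rows; i++) {
        if (dispatch_table[i].type == type &&
            (dispatch_table[i].allowed & conn_class) != 0) {
            dispatch_table[i].fn(c, data, length);
            return 1;
        }
    }
    return 0;
}
```

Because a row is matched on both type and class, a message that needs a different handler per class (as CLTOMA_FUSE_GETDIRSTATS does in the original function) can simply appear in two rows with different masks. A return value of 0 corresponds to the `default:` branches that log the unknown message and set `eptr->mode=KILL`.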

<p> Here is the code of the matoclserv_gotpacket() function for everyone to appreciate:</p>

<p></p><pre name="code" class="cpp">void matoclserv_gotpacket(matoclserventry *eptr,uint32_t type,const uint8_t *data,uint32_t length) {

if (type==ANTOAN_NOP) {

return;

}

if (eptr->registered==0) { // unregistered clients - beware that in this context sesdata is NULL

switch (type) {

case CLTOMA_FUSE_REGISTER:

matoclserv_fuse_register(eptr,data,length);

break;

case CLTOMA_CSERV_LIST:

matoclserv_cserv_list(eptr,data,length);

break;

case CLTOMA_SESSION_LIST:

matoclserv_session_list(eptr,data,length);

break;

case CLTOAN_CHART:

matoclserv_chart(eptr,data,length);

break;

case CLTOAN_CHART_DATA:

matoclserv_chart_data(eptr,data,length);

break;

case CLTOMA_INFO:

matoclserv_info(eptr,data,length);

break;

case CLTOMA_FSTEST_INFO:

matoclserv_fstest_info(eptr,data,length);

break;

case CLTOMA_CHUNKSTEST_INFO:

matoclserv_chunkstest_info(eptr,data,length);

break;

case CLTOMA_CHUNKS_MATRIX:

matoclserv_chunks_matrix(eptr,data,length);

break;

case CLTOMA_QUOTA_INFO:

matoclserv_quota_info(eptr,data,length);

break;

case CLTOMA_EXPORTS_INFO:

matoclserv_exports_info(eptr,data,length);

break;

case CLTOMA_MLOG_LIST:

matoclserv_mlog_list(eptr,data,length);

break;

default:

syslog(LOG_NOTICE,"main master server module: got unknown message from unregistered (type:%"PRIu32")",type);

eptr->mode=KILL;

}

} else if (eptr->registered<100) { // mounts and new tools

if (eptr->sesdata==NULL) {

syslog(LOG_ERR,"registered connection without sesdata !!!");

eptr->mode=KILL;

return;

}

switch (type) {

case CLTOMA_FUSE_REGISTER:

matoclserv_fuse_register(eptr,data,length);

break;

case CLTOMA_FUSE_RESERVED_INODES:

matoclserv_fuse_reserved_inodes(eptr,data,length);

break;

case CLTOMA_FUSE_STATFS:

matoclserv_fuse_statfs(eptr,data,length);

break;

case CLTOMA_FUSE_ACCESS:

matoclserv_fuse_access(eptr,data,length);

break;

case CLTOMA_FUSE_LOOKUP:

matoclserv_fuse_lookup(eptr,data,length);

break;

case CLTOMA_FUSE_GETATTR:

matoclserv_fuse_getattr(eptr,data,length);

break;

case CLTOMA_FUSE_SETATTR:

matoclserv_fuse_setattr(eptr,data,length);

break;

case CLTOMA_FUSE_READLINK:

matoclserv_fuse_readlink(eptr,data,length);

break;

case CLTOMA_FUSE_SYMLINK:

matoclserv_fuse_symlink(eptr,data,length);

break;

case CLTOMA_FUSE_MKNOD:

matoclserv_fuse_mknod(eptr,data,length);

break;

case CLTOMA_FUSE_MKDIR:

matoclserv_fuse_mkdir(eptr,data,length);

break;

case CLTOMA_FUSE_UNLINK:

matoclserv_fuse_unlink(eptr,data,length);

break;

case CLTOMA_FUSE_RMDIR:

matoclserv_fuse_rmdir(eptr,data,length);

break;

case CLTOMA_FUSE_RENAME:

matoclserv_fuse_rename(eptr,data,length);

break;

case CLTOMA_FUSE_LINK:

matoclserv_fuse_link(eptr,data,length);

break;

case CLTOMA_FUSE_GETDIR:

matoclserv_fuse_getdir(eptr,data,length);

break;

/* CACHENOTIFY

case CLTOMA_FUSE_DIR_REMOVED:

matoclserv_fuse_dir_removed(eptr,data,length);

break;

*/

case CLTOMA_FUSE_OPEN:

matoclserv_fuse_open(eptr,data,length);

break;

case CLTOMA_FUSE_READ_CHUNK:

matoclserv_fuse_read_chunk(eptr,data,length);

break;

case CLTOMA_FUSE_WRITE_CHUNK:

matoclserv_fuse_write_chunk(eptr,data,length);

break;

case CLTOMA_FUSE_WRITE_CHUNK_END:

matoclserv_fuse_write_chunk_end(eptr,data,length);

break;

// fuse - meta

case CLTOMA_FUSE_GETTRASH:

matoclserv_fuse_gettrash(eptr,data,length);

break;

case CLTOMA_FUSE_GETDETACHEDATTR:

matoclserv_fuse_getdetachedattr(eptr,data,length);

break;

case CLTOMA_FUSE_GETTRASHPATH:

matoclserv_fuse_gettrashpath(eptr,data,length);

break;

case CLTOMA_FUSE_SETTRASHPATH:

matoclserv_fuse_settrashpath(eptr,data,length);

break;

case CLTOMA_FUSE_UNDEL:

matoclserv_fuse_undel(eptr,data,length);

break;

case CLTOMA_FUSE_PURGE:

matoclserv_fuse_purge(eptr,data,length);

break;

case CLTOMA_FUSE_GETRESERVED:

matoclserv_fuse_getreserved(eptr,data,length);

break;

case CLTOMA_FUSE_CHECK:

matoclserv_fuse_check(eptr,data,length);

break;

case CLTOMA_FUSE_GETTRASHTIME:

matoclserv_fuse_gettrashtime(eptr,data,length);

break;

case CLTOMA_FUSE_SETTRASHTIME:

matoclserv_fuse_settrashtime(eptr,data,length);

break;

case CLTOMA_FUSE_GETGOAL:

matoclserv_fuse_getgoal(eptr,data,length);

break;

case CLTOMA_FUSE_SETGOAL:

matoclserv_fuse_setgoal(eptr,data,length);

break;

case CLTOMA_FUSE_APPEND:

matoclserv_fuse_append(eptr,data,length);

break;

case CLTOMA_FUSE_GETDIRSTATS:

matoclserv_fuse_getdirstats_old(eptr,data,length);

break;

case CLTOMA_FUSE_TRUNCATE:

matoclserv_fuse_truncate(eptr,data,length);

break;

case CLTOMA_FUSE_REPAIR:

matoclserv_fuse_repair(eptr,data,length);

break;

case CLTOMA_FUSE_SNAPSHOT:

matoclserv_fuse_snapshot(eptr,data,length);

break;

case CLTOMA_FUSE_GETEATTR:

matoclserv_fuse_geteattr(eptr,data,length);

break;

case CLTOMA_FUSE_SETEATTR:

matoclserv_fuse_seteattr(eptr,data,length);

break;

/* do not use in version before 1.7.x */

case CLTOMA_FUSE_QUOTACONTROL:

matoclserv_fuse_quotacontrol(eptr,data,length);

break;

/* for tools - also should be available for registered clients */

case CLTOMA_CSERV_LIST:

matoclserv_cserv_list(eptr,data,length);

break;

case CLTOMA_SESSION_LIST:

matoclserv_session_list(eptr,data,length);

break;

case CLTOAN_CHART:

matoclserv_chart(eptr,data,length);

break;

case CLTOAN_CHART_DATA:

matoclserv_chart_data(eptr,data,length);

break;

case CLTOMA_INFO:

matoclserv_info(eptr,data,length);

break;

case CLTOMA_FSTEST_INFO:

matoclserv_fstest_info(eptr,data,length);

break;

case CLTOMA_CHUNKSTEST_INFO:

matoclserv_chunkstest_info(eptr,data,length);

break;

case CLTOMA_CHUNKS_MATRIX:

matoclserv_chunks_matrix(eptr,data,length);

break;

case CLTOMA_QUOTA_INFO:

matoclserv_quota_info(eptr,data,length);

break;

case CLTOMA_EXPORTS_INFO:

matoclserv_exports_info(eptr,data,length);

break;

case CLTOMA_MLOG_LIST:

matoclserv_mlog_list(eptr,data,length);

break;

default:

syslog(LOG_NOTICE,"main master server module: got unknown message from mfsmount (type:%"PRIu32")",type);

eptr->mode=KILL;

}

} else { // old mfstools

if (eptr->sesdata==NULL) {

syslog(LOG_ERR,"registered connection (tools) without sesdata !!!");

eptr->mode=KILL;

return;

}

switch (type) {

// extra (external tools)

case CLTOMA_FUSE_REGISTER:

matoclserv_fuse_register(eptr,data,length);

break;

case CLTOMA_FUSE_READ_CHUNK: // used in mfsfileinfo

matoclserv_fuse_read_chunk(eptr,data,length);

break;

case CLTOMA_FUSE_CHECK:

matoclserv_fuse_check(eptr,data,length);

break;

case CLTOMA_FUSE_GETTRASHTIME:

matoclserv_fuse_gettrashtime(eptr,data,length);

break;

case CLTOMA_FUSE_SETTRASHTIME:

matoclserv_fuse_settrashtime(eptr,data,length);

break;

case CLTOMA_FUSE_GETGOAL:

matoclserv_fuse_getgoal(eptr,data,length);

break;

case CLTOMA_FUSE_SETGOAL:

matoclserv_fuse_setgoal(eptr,data,length);

break;

case CLTOMA_FUSE_APPEND:

matoclserv_fuse_append(eptr,data,length);

break;

case CLTOMA_FUSE_GETDIRSTATS:

matoclserv_fuse_getdirstats(eptr,data,length);

break;

case CLTOMA_FUSE_TRUNCATE:

matoclserv_fuse_truncate(eptr,data,length);

break;

case CLTOMA_FUSE_REPAIR:

matoclserv_fuse_repair(eptr,data,length);

break;

case CLTOMA_FUSE_SNAPSHOT:

matoclserv_fuse_snapshot(eptr,data,length);

break;

case CLTOMA_FUSE_GETEATTR:

matoclserv_fuse_geteattr(eptr,data,length);

break;

case CLTOMA_FUSE_SETEATTR:

matoclserv_fuse_seteattr(eptr,data,length);

break;

/* do not use in version before 1.7.x */

case CLTOMA_FUSE_QUOTACONTROL:

matoclserv_fuse_quotacontrol(eptr,data,length);

break;

/* ------ */

default:

syslog(LOG_NOTICE,"main master server module: got unknown message from mfstools (type:%"PRIu32")",type);

eptr->mode=KILL;

}

}

}
</pre>

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1375

1375

52

52

A deep dive into models, data, and frameworks: an exhaustive 54-page review of efficient large language models

Jan 14, 2024 pm 07:48 PM

A deep dive into models, data, and frameworks: an exhaustive 54-page review of efficient large language models

Jan 14, 2024 pm 07:48 PM

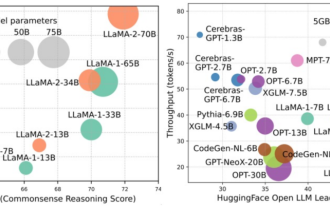

Large-scale language models (LLMs) have demonstrated compelling capabilities in many important tasks, including natural language understanding, language generation, and complex reasoning, and have had a profound impact on society. However, these outstanding capabilities require significant training resources (shown in the left image) and long inference times (shown in the right image). Therefore, researchers need to develop effective technical means to solve their efficiency problems. In addition, as can be seen from the right side of the figure, some efficient LLMs (LanguageModels) such as Mistral-7B have been successfully used in the design and deployment of LLMs. These efficient LLMs can significantly reduce inference memory while maintaining similar accuracy to LLaMA1-33B

How to completely remove Windows.old

Feb 18, 2024 pm 05:32 PM

How to completely remove Windows.old

Feb 18, 2024 pm 05:32 PM

The Windows.old folder is a folder generated in the version of the operating system before the Windows 10 update. This folder contains old Windows installation files, program files, and personal files, and it takes up a lot of disk space. When you have confirmed that you will not be rolling back to an older operating system version some time after using Windows 10 updates, you may consider deleting the Windows.old folder completely. Here are some methods for you to choose from

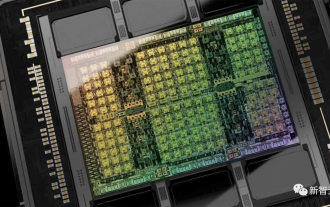

Crushing H100, Nvidia's next-generation GPU is revealed! The first 3nm multi-chip module design, unveiled in 2024

Sep 30, 2023 pm 12:49 PM

Crushing H100, Nvidia's next-generation GPU is revealed! The first 3nm multi-chip module design, unveiled in 2024

Sep 30, 2023 pm 12:49 PM

3nm process, performance surpasses H100! Recently, foreign media DigiTimes broke the news that Nvidia is developing the next-generation GPU, the B100, code-named "Blackwell". It is said that as a product for artificial intelligence (AI) and high-performance computing (HPC) applications, the B100 will use TSMC's 3nm process process, as well as more complex multi-chip module (MCM) design, and will appear in the fourth quarter of 2024. For Nvidia, which monopolizes more than 80% of the artificial intelligence GPU market, it can use the B100 to strike while the iron is hot and further attack challengers such as AMD and Intel in this wave of AI deployment. According to NVIDIA estimates, by 2027, the output value of this field is expected to reach approximately

I2V-Adapter from the SD community: no configuration required, plug and play, perfectly compatible with Tusheng video plug-in

Jan 15, 2024 pm 07:48 PM

I2V-Adapter from the SD community: no configuration required, plug and play, perfectly compatible with Tusheng video plug-in

Jan 15, 2024 pm 07:48 PM

The image-to-video generation (I2V) task is a challenge in the field of computer vision that aims to convert static images into dynamic videos. The difficulty of this task is to extract and generate dynamic information in the temporal dimension from a single image while maintaining the authenticity and visual coherence of the image content. Existing I2V methods often require complex model architectures and large amounts of training data to achieve this goal. Recently, a new research result "I2V-Adapter: AGeneralImage-to-VideoAdapter for VideoDiffusionModels" led by Kuaishou was released. This research introduces an innovative image-to-video conversion method and proposes a lightweight adapter module, i.e.

How to use Redis to achieve distributed data synchronization

Nov 07, 2023 pm 03:55 PM

How to use Redis to achieve distributed data synchronization

Nov 07, 2023 pm 03:55 PM

How to use Redis to achieve distributed data synchronization With the development of Internet technology and the increasingly complex application scenarios, the concept of distributed systems is increasingly widely adopted. In distributed systems, data synchronization is an important issue. As a high-performance in-memory database, Redis can not only be used to store data, but can also be used to achieve distributed data synchronization. For distributed data synchronization, there are generally two common modes: publish/subscribe (Publish/Subscribe) mode and master-slave replication (Master-slave).

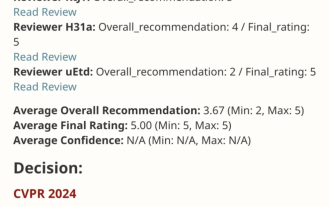

VPR 2024 perfect score paper! Meta proposes EfficientSAM: quickly split everything!

Mar 02, 2024 am 10:10 AM

VPR 2024 perfect score paper! Meta proposes EfficientSAM: quickly split everything!

Mar 02, 2024 am 10:10 AM

This work of EfficientSAM was included in CVPR2024 with a perfect score of 5/5/5! The author shared the result on a social media, as shown in the picture below: The LeCun Turing Award winner also strongly recommended this work! In recent research, Meta researchers have proposed a new improved method, namely mask image pre-training (SAMI) using SAM. This method combines MAE pre-training technology and SAM models to achieve high-quality pre-trained ViT encoders. Through SAMI, researchers try to improve the performance and efficiency of the model and provide better solutions for vision tasks. The proposal of this method brings new ideas and opportunities to further explore and develop the fields of computer vision and deep learning. by combining different

MiniGPT-5, which unifies image and text generation, is here: Token becomes Voken, and the model can not only continue writing, but also automatically add pictures.

Oct 11, 2023 pm 12:45 PM

MiniGPT-5, which unifies image and text generation, is here: Token becomes Voken, and the model can not only continue writing, but also automatically add pictures.

Oct 11, 2023 pm 12:45 PM

Large-scale models are making the leap between language and vision, promising to seamlessly understand and generate text and image content. In a series of recent studies, multimodal feature integration is not only a growing trend but has already led to key advances ranging from multimodal conversations to content creation tools. Large language models have demonstrated unparalleled capabilities in text understanding and generation. However, simultaneously generating images with coherent textual narratives is still an area to be developed. Recently, a research team at the University of California, Santa Cruz proposed MiniGPT-5, an innovative interleaving algorithm based on the concept of "generative vouchers". Visual language generation technology. Paper address: https://browse.arxiv.org/p

Google AI rising star switches to Pika: video generation Lumiere, serves as founding scientist

Feb 26, 2024 am 09:37 AM

Google AI rising star switches to Pika: video generation Lumiere, serves as founding scientist

Feb 26, 2024 am 09:37 AM

Video generation is progressing in full swing, and Pika has welcomed a great general - Google researcher Omer Bar-Tal, who serves as Pika's founding scientist. A month ago, Google released the video generation model Lumiere as a co-author, and the effect was amazing. At that time, netizens said: Google joins the video generation battle, and there is another good show to watch. Some people in the industry, including StabilityAI CEO and former colleagues from Google, sent their blessings. Lumiere's first work, Omer Bar-Tal, who just graduated with a master's degree, graduated from the Department of Mathematics and Computer Science at Tel Aviv University in 2021, and then went to the Weizmann Institute of Science to study for a master's degree in computer science, mainly focusing on research in the field of image and video synthesis. His thesis results have been published many times