A Formulaic Description of "Research 2.0" at EMC Research China

Today, let me give a formulaic introduction to "Research 2.0" at EMC Research China.

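James Snell of IBM gave Web 2.0 a well-known geeky definition: "Web2.0 = chmod 777 web". I would not dare call myself a geek, but having spent some time working as a programmer, I am very fond of this geeky formula. I now extend it as follows: "Research2.0 = chmod 777 Research". Geeky as it is, this formula is an apt and compact description of the "Research 2.0" approach that EMC Research China is putting into practice.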

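First, our researchers maintain close research collaborations with colleagues at the company's other core labs (such as the well-known RSA Laboratories, which, like EMC Research China, belongs to EMC's global Innovation Network). This collaboration among the company's core labs is expressed by the "7--" part of the formula above. We also work to bring our research results to the company's engineers around the world, and we regularly ask them for feedback and user requirements. This exchange and sharing of knowledge between the core labs and the company's product development groups is expressed by the "-7-" part of the formula. Last, and very importantly, there is our open collaborative research with university and research-community partners worldwide (in China, of course, our collaboration with Chinese universities is broader, deeper, and closer). This is also the most effective way for our researchers to acquire and generate knowledge, and it is expressed by the "--7" part of the formula.

Geeks can skip the content of the following parentheses (note: 7 in binary is the three bits 111). Linux or Unix geeks can skip the content of the following square brackets [of the three bits 111, the first stands for the "read" privilege, the second for the "write" privilege, and the third for the "execute" privilege].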

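As described above, collaboration across all three scopes takes place through knowledge sharing, so each 7 means that the collaborators have the "read" privilege. The ways in which collaborators actively contribute knowledge naturally include writing and publishing research papers and designing and developing useful programs, so each 7 also means that the collaborators have the "write" privilege: everyone is a publisher. As for the "execute" privilege that each 7 also grants, let it stand for the fact that we and our collaborators are especially fond of tinkering with open-source software. (Tinkering here means using, developing, and experimenting with open-source software, taking part in open-source community activities, and contributing our results back to the community as open-source software.)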
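For readers who skipped neither bracket, the bit arithmetic behind the formula can be sketched in a few lines of Python. This is only an illustration: the scope labels are names made up here for the three circles of collaboration, and assigning them to the three digits follows the metaphor of this post (in an actual chmod the three digits apply to a file's owner, its group, and everyone else).

```python
# Illustrative sketch: decode an octal chmod mode such as "777" into the
# read/write/execute privileges granted by each of its three digits.
# SCOPES holds hypothetical labels for the post's three circles of
# collaboration; they are not part of chmod itself.

SCOPES = ("core labs", "company-wide", "universities and community")
FLAGS = (("read", 0b100), ("write", 0b010), ("execute", 0b001))


def decode_mode(mode: str) -> dict[str, list[str]]:
    """Map each octal digit of `mode` to the privileges its bits grant."""
    if len(mode) != 3 or any(c not in "01234567" for c in mode):
        raise ValueError(f"expected three octal digits, got {mode!r}")
    result = {}
    for scope, digit in zip(SCOPES, mode):
        bits = int(digit, 8)
        result[scope] = [name for name, mask in FLAGS if bits & mask]
    return result


def rwx_string(mode: str) -> str:
    """Render the mode as nine r/w/x characters, with '-' for unset bits."""
    letters = (("r", 0b100), ("w", 0b010), ("x", 0b001))
    return "".join(
        letter if int(digit, 8) & mask else "-"
        for digit in mode
        for letter, mask in letters
    )


if __name__ == "__main__":
    print(format(7, "03b"))      # the binary form of 7
    print(rwx_string("777"))     # every bit set in every scope
    for scope, privileges in decode_mode("777").items():
        print(f"{scope}: {', '.join(privileges)}")
```

With the mode "777", every scope ends up with all three privileges, which is exactly what the formula claims for Research 2.0; a more restrictive mode such as "750" would leave the outermost scope with none.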

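Based on the "Research 2.0" idea, EMC Research China led the development of the Daoli wiki site: www.daoliproject.org. It is a Web 2.0 style site, and everyone is welcome to visit. Remember, you can become a participant, so please give yourself read, write, and execute privileges!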

Extending James Snell’s geeky definition of Web 2.0 as “chmod 777 web” to our notion of Research 2.0, we use “chmod 777 research” to describe our research practice at EMC Research China. It involves intra-EIN collaborations among core research labs (the 1st 7), cross-EMC-organization collaborations with globally distributed Advanced Development Groups and Centers of Excellence (the 2nd 7), and world-wide collaborations with universities and external business partners (the 3rd 7). Moreover, these collaborations feature openness in knowledge sharing (set all the read bits), ease of making contributions (set all the write bits), and a roll-up-the-sleeves style of execution, including getting our hands dirty with open-source code (set all the execute bits). ERC has already implemented an example of Research 2.0. The Daoli twiki (www.daoliproject.org), which has been in the spotlight since its recent debut in early June, can be regarded as a prototype of how we execute “chmod 777 research”. The Daoli twiki has more than 70 pages, which were created in less than one month through the concurrent, collaborative efforts of ERC staff members and our partners at four universities. It is kept up to date by distributed teams, and changes are monitored through email notifications to guard against malicious modifications.

The original text was published by the author the same day on the Daoli blog: http://daoliproject.org/wordpress/

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

A deep dive into models, data, and frameworks: an exhaustive 54-page review of efficient large language models

Jan 14, 2024 pm 07:48 PM

A deep dive into models, data, and frameworks: an exhaustive 54-page review of efficient large language models

Jan 14, 2024 pm 07:48 PM

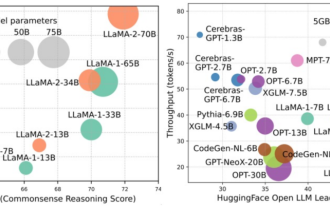

Large-scale language models (LLMs) have demonstrated compelling capabilities in many important tasks, including natural language understanding, language generation, and complex reasoning, and have had a profound impact on society. However, these outstanding capabilities require significant training resources (shown in the left image) and long inference times (shown in the right image). Therefore, researchers need to develop effective technical means to solve their efficiency problems. In addition, as can be seen from the right side of the figure, some efficient LLMs (LanguageModels) such as Mistral-7B have been successfully used in the design and deployment of LLMs. These efficient LLMs can significantly reduce inference memory while maintaining similar accuracy to LLaMA1-33B

Crushing H100, Nvidia's next-generation GPU is revealed! The first 3nm multi-chip module design, unveiled in 2024

Sep 30, 2023 pm 12:49 PM

Crushing H100, Nvidia's next-generation GPU is revealed! The first 3nm multi-chip module design, unveiled in 2024

Sep 30, 2023 pm 12:49 PM

3nm process, performance surpasses H100! Recently, foreign media DigiTimes broke the news that Nvidia is developing the next-generation GPU, the B100, code-named "Blackwell". It is said that as a product for artificial intelligence (AI) and high-performance computing (HPC) applications, the B100 will use TSMC's 3nm process process, as well as more complex multi-chip module (MCM) design, and will appear in the fourth quarter of 2024. For Nvidia, which monopolizes more than 80% of the artificial intelligence GPU market, it can use the B100 to strike while the iron is hot and further attack challengers such as AMD and Intel in this wave of AI deployment. According to NVIDIA estimates, by 2027, the output value of this field is expected to reach approximately

The most comprehensive review of multimodal large models is here! 7 Microsoft researchers cooperated vigorously, 5 major themes, 119 pages of document

Sep 25, 2023 pm 04:49 PM

The most comprehensive review of multimodal large models is here! 7 Microsoft researchers cooperated vigorously, 5 major themes, 119 pages of document

Sep 25, 2023 pm 04:49 PM

The most comprehensive review of multimodal large models is here! Written by 7 Chinese researchers at Microsoft, it has 119 pages. It starts from two types of multi-modal large model research directions that have been completed and are still at the forefront, and comprehensively summarizes five specific research topics: visual understanding and visual generation. The multi-modal large-model multi-modal agent supported by the unified visual model LLM focuses on a phenomenon: the multi-modal basic model has moved from specialized to universal. Ps. This is why the author directly drew an image of Doraemon at the beginning of the paper. Who should read this review (report)? In the original words of Microsoft: As long as you are interested in learning the basic knowledge and latest progress of multi-modal basic models, whether you are a professional researcher or a student, this content is very suitable for you to come together.

I2V-Adapter from the SD community: no configuration required, plug and play, perfectly compatible with Tusheng video plug-in

Jan 15, 2024 pm 07:48 PM

I2V-Adapter from the SD community: no configuration required, plug and play, perfectly compatible with Tusheng video plug-in

Jan 15, 2024 pm 07:48 PM

The image-to-video generation (I2V) task is a challenge in the field of computer vision that aims to convert static images into dynamic videos. The difficulty of this task is to extract and generate dynamic information in the temporal dimension from a single image while maintaining the authenticity and visual coherence of the image content. Existing I2V methods often require complex model architectures and large amounts of training data to achieve this goal. Recently, a new research result "I2V-Adapter: AGeneralImage-to-VideoAdapter for VideoDiffusionModels" led by Kuaishou was released. This research introduces an innovative image-to-video conversion method and proposes a lightweight adapter module, i.e.

MiniGPT-5, which unifies image and text generation, is here: Token becomes Voken, and the model can not only continue writing, but also automatically add pictures.

Oct 11, 2023 pm 12:45 PM

MiniGPT-5, which unifies image and text generation, is here: Token becomes Voken, and the model can not only continue writing, but also automatically add pictures.

Oct 11, 2023 pm 12:45 PM

Large-scale models are making the leap between language and vision, promising to seamlessly understand and generate text and image content. In a series of recent studies, multimodal feature integration is not only a growing trend but has already led to key advances ranging from multimodal conversations to content creation tools. Large language models have demonstrated unparalleled capabilities in text understanding and generation. However, simultaneously generating images with coherent textual narratives is still an area to be developed. Recently, a research team at the University of California, Santa Cruz proposed MiniGPT-5, an innovative interleaving algorithm based on the concept of "generative vouchers". Visual language generation technology. Paper address: https://browse.arxiv.org/p

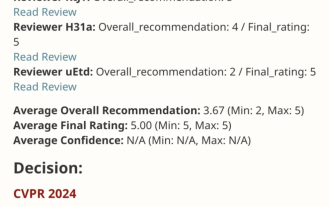

VPR 2024 perfect score paper! Meta proposes EfficientSAM: quickly split everything!

Mar 02, 2024 am 10:10 AM

VPR 2024 perfect score paper! Meta proposes EfficientSAM: quickly split everything!

Mar 02, 2024 am 10:10 AM

This work of EfficientSAM was included in CVPR2024 with a perfect score of 5/5/5! The author shared the result on a social media, as shown in the picture below: The LeCun Turing Award winner also strongly recommended this work! In recent research, Meta researchers have proposed a new improved method, namely mask image pre-training (SAMI) using SAM. This method combines MAE pre-training technology and SAM models to achieve high-quality pre-trained ViT encoders. Through SAMI, researchers try to improve the performance and efficiency of the model and provide better solutions for vision tasks. The proposal of this method brings new ideas and opportunities to further explore and develop the fields of computer vision and deep learning. by combining different

What are the Chinese Bitcoin trading platforms? Bitcoin Forex Trading Platform!

Feb 05, 2024 am 09:09 AM

What are the Chinese Bitcoin trading platforms? Bitcoin Forex Trading Platform!

Feb 05, 2024 am 09:09 AM

What are the Chinese Bitcoin trading platforms? Bitcoin Forex Trading Platform! With the rise and widespread application of Bitcoin, China has become one of the largest Bitcoin trading markets in the world. In China, there are many Bitcoin trading platforms that provide investors with convenient and safe Bitcoin trading services. This article will provide an in-depth analysis of China’s Bitcoin trading platforms and introduce some Bitcoin foreign exchange trading platforms. 1. Classification of Bitcoin trading platforms China’s Bitcoin trading platforms can be divided into two categories: centralized trading platforms and decentralized trading platforms. Centralized trading platforms refer to platforms operated by a company or institution. These platforms usually have relatively complete trading systems and trading tools, and provide a wealth of trading varieties and trading functions. Common centralized trading platforms include Huobi, Coin

Google AI rising star switches to Pika: video generation Lumiere, serves as founding scientist

Feb 26, 2024 am 09:37 AM

Google AI rising star switches to Pika: video generation Lumiere, serves as founding scientist

Feb 26, 2024 am 09:37 AM

Video generation is progressing in full swing, and Pika has welcomed a great general - Google researcher Omer Bar-Tal, who serves as Pika's founding scientist. A month ago, Google released the video generation model Lumiere as a co-author, and the effect was amazing. At that time, netizens said: Google joins the video generation battle, and there is another good show to watch. Some people in the industry, including StabilityAI CEO and former colleagues from Google, sent their blessings. Lumiere's first work, Omer Bar-Tal, who just graduated with a master's degree, graduated from the Department of Mathematics and Computer Science at Tel Aviv University in 2021, and then went to the Weizmann Institute of Science to study for a master's degree in computer science, mainly focusing on research in the field of image and video synthesis. His thesis results have been published many times