TMSC64XX DSP Mixed C and Assembly Programming, Part 2


I have been studying this on my own for a week without much progress. Here is the code I wrote, shared in the hope that it helps others.

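#include <stdio.h>

int a = 0;                  // global variable shared with the assembly code; it must be defined (given a value) on the C side
extern int asmfunc(int);    // function implemented in assembly

int main()
{
    int ret = 1;

    ret = asmfunc(ret);
    printf("ret is %d\n", ret);
    return 0;
}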

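        .global _asmfunc                ; export the routine so that C can call it
        .global _a                      ; reference the variable a defined in C

_asmfunc:
        MVK     .S1     12,A2           ; A2 = 12
        STW     .D2T1   A2,*+B14(_a)    ; a = 12 (B14 is the data page pointer)
        LDW     .D2T1   *+B14(_a),A3    ; A3 = a
        NOP     4                       ; wait for the 4 load delay slots
        ADD     .L1     A3,A4,A3        ; A3 = a + argument (A4 holds the first argument)
        STW     .D2T1   A3,*+B14(_a)    ; a = A3
        MV      .S1     A3,A4           ; return value goes in A4
        STW     .D2T1   A3,*+B14(_a)    ; repeated store, redundant
        MV      .S1     A3,A4           ; repeated move, redundant
        MVKL    .S1     _a,A2           ; low 16 bits of the address of a; A2 is not used afterwards
        B       .S2     B3              ; return to the caller (B3 holds the return address)
        NOP     5                       ; fill the 5 branch delay slots
        .end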
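
To make it easier to check the assembly against what main() should print, the routine can be traced line by line in plain C. This is only a sketch of how the instructions read, not the author's code: `a_model` and `asmfunc_model` are hypothetical names standing in for the global `a` and for `_asmfunc`, and it assumes the usual TI C6000 convention that the first int argument arrives in A4 and the result is returned in A4.

```c
/* Hypothetical stand-in for the global variable a shared with the assembly. */
int a_model = 0;

/* Hypothetical C model of _asmfunc; each line mirrors one assembly step. */
int asmfunc_model(int x)        /* x plays the role of register A4 on entry */
{
    int a2 = 12;                /* MVK 12,A2 */
    int a3;

    a_model = a2;               /* STW A2,*+B14(_a) : a = 12 */
    a3 = a_model;               /* LDW *+B14(_a),A3 : A3 = a */
    a3 = a3 + x;                /* ADD A3,A4,A3     : A3 = a + argument */
    a_model = a3;               /* STW A3,*+B14(_a) : a = A3 */
    return a3;                  /* MV A3,A4 then B B3 : result returned in A4 */
}
```

If the routine behaves as read here, calling it with ret = 1 as in main() gives 12 + 1 = 13, and `a` ends up holding the same value.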

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

A deep dive into models, data, and frameworks: an exhaustive 54-page review of efficient large language models

Jan 14, 2024 pm 07:48 PM

A deep dive into models, data, and frameworks: an exhaustive 54-page review of efficient large language models

Jan 14, 2024 pm 07:48 PM

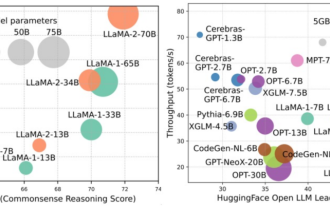

Large-scale language models (LLMs) have demonstrated compelling capabilities in many important tasks, including natural language understanding, language generation, and complex reasoning, and have had a profound impact on society. However, these outstanding capabilities require significant training resources (shown in the left image) and long inference times (shown in the right image). Therefore, researchers need to develop effective technical means to solve their efficiency problems. In addition, as can be seen from the right side of the figure, some efficient LLMs (LanguageModels) such as Mistral-7B have been successfully used in the design and deployment of LLMs. These efficient LLMs can significantly reduce inference memory while maintaining similar accuracy to LLaMA1-33B

Crushing H100, Nvidia's next-generation GPU is revealed! The first 3nm multi-chip module design, unveiled in 2024

Sep 30, 2023 pm 12:49 PM

Crushing H100, Nvidia's next-generation GPU is revealed! The first 3nm multi-chip module design, unveiled in 2024

Sep 30, 2023 pm 12:49 PM

3nm process, performance surpasses H100! Recently, foreign media DigiTimes broke the news that Nvidia is developing the next-generation GPU, the B100, code-named "Blackwell". It is said that as a product for artificial intelligence (AI) and high-performance computing (HPC) applications, the B100 will use TSMC's 3nm process process, as well as more complex multi-chip module (MCM) design, and will appear in the fourth quarter of 2024. For Nvidia, which monopolizes more than 80% of the artificial intelligence GPU market, it can use the B100 to strike while the iron is hot and further attack challengers such as AMD and Intel in this wave of AI deployment. According to NVIDIA estimates, by 2027, the output value of this field is expected to reach approximately

The powerful combination of diffusion + super-resolution models, the technology behind Google's image generator Imagen

Apr 10, 2023 am 10:21 AM

The powerful combination of diffusion + super-resolution models, the technology behind Google's image generator Imagen

Apr 10, 2023 am 10:21 AM

In recent years, multimodal learning has received much attention, especially in the two directions of text-image synthesis and image-text contrastive learning. Some AI models have attracted widespread public attention due to their application in creative image generation and editing, such as the text image models DALL・E and DALL-E 2 launched by OpenAI, and NVIDIA's GauGAN and GauGAN2. Not to be outdone, Google released its own text-to-image model Imagen at the end of May, which seems to further expand the boundaries of caption-conditional image generation. Given just a description of a scene, Imagen can generate high-quality, high-resolution

The most comprehensive review of multimodal large models is here! 7 Microsoft researchers cooperated vigorously, 5 major themes, 119 pages of document

Sep 25, 2023 pm 04:49 PM

The most comprehensive review of multimodal large models is here! 7 Microsoft researchers cooperated vigorously, 5 major themes, 119 pages of document

Sep 25, 2023 pm 04:49 PM

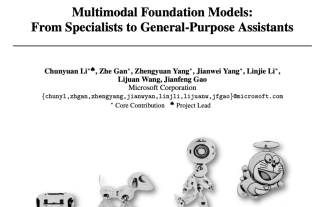

The most comprehensive review of multimodal large models is here! Written by 7 Chinese researchers at Microsoft, it has 119 pages. It starts from two types of multi-modal large model research directions that have been completed and are still at the forefront, and comprehensively summarizes five specific research topics: visual understanding and visual generation. The multi-modal large-model multi-modal agent supported by the unified visual model LLM focuses on a phenomenon: the multi-modal basic model has moved from specialized to universal. Ps. This is why the author directly drew an image of Doraemon at the beginning of the paper. Who should read this review (report)? In the original words of Microsoft: As long as you are interested in learning the basic knowledge and latest progress of multi-modal basic models, whether you are a professional researcher or a student, this content is very suitable for you to come together.

I2V-Adapter from the SD community: no configuration required, plug and play, perfectly compatible with Tusheng video plug-in

Jan 15, 2024 pm 07:48 PM

I2V-Adapter from the SD community: no configuration required, plug and play, perfectly compatible with Tusheng video plug-in

Jan 15, 2024 pm 07:48 PM

The image-to-video generation (I2V) task is a challenge in the field of computer vision that aims to convert static images into dynamic videos. The difficulty of this task is to extract and generate dynamic information in the temporal dimension from a single image while maintaining the authenticity and visual coherence of the image content. Existing I2V methods often require complex model architectures and large amounts of training data to achieve this goal. Recently, a new research result "I2V-Adapter: AGeneralImage-to-VideoAdapter for VideoDiffusionModels" led by Kuaishou was released. This research introduces an innovative image-to-video conversion method and proposes a lightweight adapter module, i.e.

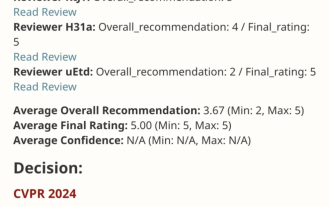

VPR 2024 perfect score paper! Meta proposes EfficientSAM: quickly split everything!

Mar 02, 2024 am 10:10 AM

VPR 2024 perfect score paper! Meta proposes EfficientSAM: quickly split everything!

Mar 02, 2024 am 10:10 AM

This work of EfficientSAM was included in CVPR2024 with a perfect score of 5/5/5! The author shared the result on a social media, as shown in the picture below: The LeCun Turing Award winner also strongly recommended this work! In recent research, Meta researchers have proposed a new improved method, namely mask image pre-training (SAMI) using SAM. This method combines MAE pre-training technology and SAM models to achieve high-quality pre-trained ViT encoders. Through SAMI, researchers try to improve the performance and efficiency of the model and provide better solutions for vision tasks. The proposal of this method brings new ideas and opportunities to further explore and develop the fields of computer vision and deep learning. by combining different

Tomohiko Sakamoto's algorithm - finding the day of the week

Sep 02, 2023 pm 07:09 PM

Tomohiko Sakamoto's algorithm - finding the day of the week

Sep 02, 2023 pm 07:09 PM

In this article, we will discuss what the Tomohiko Sakamoto algorithm is and how to use it to identify which day of the week a given date is. There are several algorithms to know the day of the week, but this algorithm is the most powerful one. This algorithm finds the day of the month in which this date occurs in the smallest possible time and with the smallest space complexity. Problem Statement - We are given a date based on the Georgian calendar and our task is to find out which day of the week the given date is using Tomohiko Sakamoto's algorithm. Example Input - Date=30, Month=04, Year=2020 Output - The given date is Monday Input - Date=15, Month=03, Year=2012 Output - The given date is Thursday

MiniGPT-5, which unifies image and text generation, is here: Token becomes Voken, and the model can not only continue writing, but also automatically add pictures.

Oct 11, 2023 pm 12:45 PM

MiniGPT-5, which unifies image and text generation, is here: Token becomes Voken, and the model can not only continue writing, but also automatically add pictures.

Oct 11, 2023 pm 12:45 PM

Large-scale models are making the leap between language and vision, promising to seamlessly understand and generate text and image content. In a series of recent studies, multimodal feature integration is not only a growing trend but has already led to key advances ranging from multimodal conversations to content creation tools. Large language models have demonstrated unparalleled capabilities in text understanding and generation. However, simultaneously generating images with coherent textual narratives is still an area to be developed. Recently, a research team at the University of California, Santa Cruz proposed MiniGPT-5, an innovative interleaving algorithm based on the concept of "generative vouchers". Visual language generation technology. Paper address: https://browse.arxiv.org/p