Greenplum分布式数据库开发入门到精通(架构、部署、管理、开发和

Greenplum 分布式数据库开发入门到精通(架构、部署、管理、开发和调优) 对这个课程有兴趣的朋友,可以加我qq2059055336和我联系 课程大纲 1 Greenplum架构 什么是Greenplum Greenplum体系结构 Greenplum高可用性架构 2 安装Greenplum 配置环境 安装并初始化G

Greenplum 分布式数据库开发入门到精通(架构、部署、管理、开发和调优)

对这个课程有兴趣的朋友,可以加我qq2059055336和我联系

课程大纲

1 Greenplum架构

什么是Greenplum

Greenplum体系结构

Greenplum高可用性架构

2 安装Greenplum

配置环境

安装并初始化GPDB系统

启停数据库

配置GP系统

3 分布式数据库存储

数据是如何存储的

分布策略

4 GBDB查询处理

查询命令的执行

SQL查询处理机制

并行查询计划

5 角色权限及客户端认证管理

客户端认证

管理用户和组

6 客户端接口和程序

pgAdmin III

PSQL

7 定义数据库对象

创建并管理数据库

创建并管理表空间

创建并管理模式

创建并管理表

分区表

数据分布与分区

压缩存储与行列存储

序列、索引与视图

8 管理数据

插入、更新、删除记录

事务管理

空间回收和统计

9 查询数据

定义查询

使用函数和运算符

查询分析

10 工作负载及资源管理

GP工作负载管理概述

配置工作负载管理

创建资源队列

分配资源队列

检查资源队列状态

11 装载和卸载数据

GP装载命令概述

装载数据到GP

从GP卸载数据

格式化数据文件

12 备份恢复

串行备份和恢复

并行恢复和恢复

13 性能调优

如何进行调优

常见的性能问题

14 GP系统配置参数

关于GP的Master参数与本地化参数

设置配置参数

配置参数种类

15 开启高可用性

GP高可用概述

开启GP的Mirror

获知Segment何时失败

恢复失败的Segment

恢复失败的Master

16 GP MapReduce

MapReduce基础

GP MapReduce编程

MapReduce作业执行和故障诊断

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

Generate PPT with one click! Kimi: Let the 'PPT migrant workers' become popular first

Aug 01, 2024 pm 03:28 PM

Generate PPT with one click! Kimi: Let the 'PPT migrant workers' become popular first

Aug 01, 2024 pm 03:28 PM

Kimi: In just one sentence, in just ten seconds, a PPT will be ready. PPT is so annoying! To hold a meeting, you need to have a PPT; to write a weekly report, you need to have a PPT; to make an investment, you need to show a PPT; even when you accuse someone of cheating, you have to send a PPT. College is more like studying a PPT major. You watch PPT in class and do PPT after class. Perhaps, when Dennis Austin invented PPT 37 years ago, he did not expect that one day PPT would become so widespread. Talking about our hard experience of making PPT brings tears to our eyes. "It took three months to make a PPT of more than 20 pages, and I revised it dozens of times. I felt like vomiting when I saw the PPT." "At my peak, I did five PPTs a day, and even my breathing was PPT." If you have an impromptu meeting, you should do it

All CVPR 2024 awards announced! Nearly 10,000 people attended the conference offline, and a Chinese researcher from Google won the best paper award

Jun 20, 2024 pm 05:43 PM

All CVPR 2024 awards announced! Nearly 10,000 people attended the conference offline, and a Chinese researcher from Google won the best paper award

Jun 20, 2024 pm 05:43 PM

In the early morning of June 20th, Beijing time, CVPR2024, the top international computer vision conference held in Seattle, officially announced the best paper and other awards. This year, a total of 10 papers won awards, including 2 best papers and 2 best student papers. In addition, there were 2 best paper nominations and 4 best student paper nominations. The top conference in the field of computer vision (CV) is CVPR, which attracts a large number of research institutions and universities every year. According to statistics, a total of 11,532 papers were submitted this year, and 2,719 were accepted, with an acceptance rate of 23.6%. According to Georgia Institute of Technology’s statistical analysis of CVPR2024 data, from the perspective of research topics, the largest number of papers is image and video synthesis and generation (Imageandvideosyn

From bare metal to a large model with 70 billion parameters, here is a tutorial and ready-to-use scripts

Jul 24, 2024 pm 08:13 PM

From bare metal to a large model with 70 billion parameters, here is a tutorial and ready-to-use scripts

Jul 24, 2024 pm 08:13 PM

We know that LLM is trained on large-scale computer clusters using massive data. This site has introduced many methods and technologies used to assist and improve the LLM training process. Today, what we want to share is an article that goes deep into the underlying technology and introduces how to turn a bunch of "bare metals" without even an operating system into a computer cluster for training LLM. This article comes from Imbue, an AI startup that strives to achieve general intelligence by understanding how machines think. Of course, turning a bunch of "bare metal" without an operating system into a computer cluster for training LLM is not an easy process, full of exploration and trial and error, but Imbue finally successfully trained an LLM with 70 billion parameters. and in the process accumulate

AI in use | AI created a life vlog of a girl living alone, which received tens of thousands of likes in 3 days

Aug 07, 2024 pm 10:53 PM

AI in use | AI created a life vlog of a girl living alone, which received tens of thousands of likes in 3 days

Aug 07, 2024 pm 10:53 PM

Editor of the Machine Power Report: Yang Wen The wave of artificial intelligence represented by large models and AIGC has been quietly changing the way we live and work, but most people still don’t know how to use it. Therefore, we have launched the "AI in Use" column to introduce in detail how to use AI through intuitive, interesting and concise artificial intelligence use cases and stimulate everyone's thinking. We also welcome readers to submit innovative, hands-on use cases. Video link: https://mp.weixin.qq.com/s/2hX_i7li3RqdE4u016yGhQ Recently, the life vlog of a girl living alone became popular on Xiaohongshu. An illustration-style animation, coupled with a few healing words, can be easily picked up in just a few days.

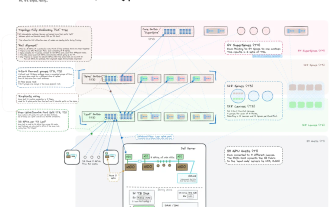

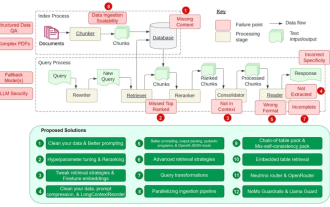

Counting down the 12 pain points of RAG, NVIDIA senior architect teaches solutions

Jul 11, 2024 pm 01:53 PM

Counting down the 12 pain points of RAG, NVIDIA senior architect teaches solutions

Jul 11, 2024 pm 01:53 PM

Retrieval-augmented generation (RAG) is a technique that uses retrieval to boost language models. Specifically, before a language model generates an answer, it retrieves relevant information from an extensive document database and then uses this information to guide the generation process. This technology can greatly improve the accuracy and relevance of content, effectively alleviate the problem of hallucinations, increase the speed of knowledge update, and enhance the traceability of content generation. RAG is undoubtedly one of the most exciting areas of artificial intelligence research. For more details about RAG, please refer to the column article on this site "What are the new developments in RAG, which specializes in making up for the shortcomings of large models?" This review explains it clearly." But RAG is not perfect, and users often encounter some "pain points" when using it. Recently, NVIDIA’s advanced generative AI solution

iOS 18 adds a new 'Recovered' album function to retrieve lost or damaged photos

Jul 18, 2024 am 05:48 AM

iOS 18 adds a new 'Recovered' album function to retrieve lost or damaged photos

Jul 18, 2024 am 05:48 AM

Apple's latest releases of iOS18, iPadOS18 and macOS Sequoia systems have added an important feature to the Photos application, designed to help users easily recover photos and videos lost or damaged due to various reasons. The new feature introduces an album called "Recovered" in the Tools section of the Photos app that will automatically appear when a user has pictures or videos on their device that are not part of their photo library. The emergence of the "Recovered" album provides a solution for photos and videos lost due to database corruption, the camera application not saving to the photo library correctly, or a third-party application managing the photo library. Users only need a few simple steps

How steep is the learning curve of golang framework architecture?

Jun 05, 2024 pm 06:59 PM

How steep is the learning curve of golang framework architecture?

Jun 05, 2024 pm 06:59 PM

The learning curve of the Go framework architecture depends on familiarity with the Go language and back-end development and the complexity of the chosen framework: a good understanding of the basics of the Go language. It helps to have backend development experience. Frameworks that differ in complexity lead to differences in learning curves.

Detailed tutorial on establishing a database connection using MySQLi in PHP

Jun 04, 2024 pm 01:42 PM

Detailed tutorial on establishing a database connection using MySQLi in PHP

Jun 04, 2024 pm 01:42 PM

How to use MySQLi to establish a database connection in PHP: Include MySQLi extension (require_once) Create connection function (functionconnect_to_db) Call connection function ($conn=connect_to_db()) Execute query ($result=$conn->query()) Close connection ( $conn->close())