Hadoop Tmp Dir


This morning's report did not come out. The report is generated by a Hadoop job.

A look at the Hadoop logs showed the following error:

<code>Message: org.apache.hadoop.ipc.RemoteException: java.io.IOException: org.apache.hadoop.fs.FSError: java.io.IOException: No space left on device </code>

At first glance I assumed HDFS had run out of space, so I ran the following command:

<code> hadoop dfsadmin -report

Configured Capacity: 44302785945600 (40.29 TB)

Present Capacity: 42020351946752 (38.22 TB)

DFS Remaining: 8124859072512 (7.39 TB)

DFS Used: 33895492874240 (30.83 TB)

DFS Used%: 80.66%

Under replicated blocks: 1687

Blocks with corrupt replicas: 0

Missing blocks: 0

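</code>

Judging from the output, DFS still has plenty of room (7.39 TB remaining), so the "No space left on device" error should not be an HDFS problem.

It is more likely a local disk problem. It turns out that Hadoop uses a temporary directory at run time, which can be configured in core-site.xml: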

<code>hadoop.tmp.dir </code>

The default path is /tmp/hadoop-${user.name}.
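Because the default path lives under /tmp, the free space on that filesystem is the first thing worth checking. The following is a minimal sketch using the standard `df` utility; the function names `used_percent` and `check_tmp_space` and the 90% threshold are illustrative assumptions, not part of Hadoop.

```shell
#!/bin/sh
# Print the percentage of space in use on the filesystem that holds DIR.
# DIR defaults to /tmp, the parent of Hadoop's default hadoop.tmp.dir.
used_percent() {
    dir="${1:-/tmp}"
    # -P forces POSIX output: one line per filesystem, capacity in column 5.
    df -P "$dir" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Warn when usage reaches THRESHOLD percent (hypothetical default: 90).
check_tmp_space() {
    dir="${1:-/tmp}"
    threshold="${2:-90}"
    used=$(used_percent "$dir")
    case "$used" in
        ''|*[!0-9]*)
            echo "UNKNOWN: could not read usage for $dir"
            return 2
            ;;
    esac
    if [ "$used" -ge "$threshold" ]; then
        echo "WARNING: $dir is ${used}% full"
        return 1
    fi
    echo "OK: $dir is ${used}% full"
}

# Check the filesystem behind the default temporary directory.
check_tmp_space /tmp 90 || true
```

Running this on each node that reported the error shows whether the local filesystem, rather than HDFS, is the one that filled up.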

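I checked the space on /tmp, and very little was left. Pointing hadoop.tmp.dir at a larger disk solves the problem.

hadoop.tmp.dir is the parent directory of Hadoop's other temporary directories.

One question remains unanswered, though: how much space does hadoop.tmp.dir actually need, and how should it be calculated?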
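As a sketch of what that change might look like, the helper below prints a `<property>` element that could be placed inside the `<configuration>` element of core-site.xml. The function name `print_tmp_dir_property` and the path `/data/hadoop-tmp` are hypothetical; substitute a directory on a disk with enough free space that the Hadoop user can write to.

```shell
#!/bin/sh
# Print a <property> element for core-site.xml that sets hadoop.tmp.dir to DIR.
# DIR is whatever directory you choose; nothing here touches the real config.
print_tmp_dir_property() {
    dir="$1"
    cat <<EOF
<property>
  <name>hadoop.tmp.dir</name>
  <value>${dir}</value>
</property>
EOF
}

# /data/hadoop-tmp is a hypothetical mount point on a larger disk.
print_tmp_dir_property /data/hadoop-tmp
```

The Hadoop daemons typically need a restart before a change to core-site.xml takes effect.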
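The post leaves this question open. One empirical starting point, offered only as a sketch and not as a sizing rule, is to sample how much the directory actually holds while typical jobs are running and to watch the peak. The function name `tmp_dir_usage_kb` is an assumption made for illustration.

```shell
#!/bin/sh
# Print the space used under DIR in kilobytes, or 0 if DIR does not exist.
tmp_dir_usage_kb() {
    dir="$1"
    if [ -d "$dir" ]; then
        # -s: one summary line for the whole tree; -k: 1024-byte units.
        du -sk "$dir" 2>/dev/null | awk '{ print $1 }'
    else
        echo 0
    fi
}

# Default location from the article: /tmp/hadoop-${user.name}
tmp_dir_usage_kb "/tmp/hadoop-$(id -un)"
```

Sampling this periodically during the heaviest jobs (for example from cron) gives an observed peak to size the new location against, with headroom on top.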

common-question-and-requests-from-our-users

Original article: Hadoop Tmp Dir. Thanks to the original author for sharing.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1392

1392

52

52

The cleaning principle of /tmp/ folder in Linux system and the role of tmp file

Dec 21, 2023 pm 05:36 PM

The cleaning principle of /tmp/ folder in Linux system and the role of tmp file

Dec 21, 2023 pm 05:36 PM

Most of the .tmp files are files left behind due to abnormal shutdown or crash. These temporary scratch disks have no use after you restart the computer, so you can safely delete them. When you use the Windows operating system, you may often find some files with the suffix TMP in the root directory of the C drive, and you will also find a TEMP directory in the Windows directory. TMP files are temporary files generated by various software or systems. , also known as junk files. Temporary files generated by Windows are essentially the same as virtual memory, except that temporary files are more targeted than virtual memory and only serve a certain program. And its specificity has caused many novices to be intimidated by it and not delete it.

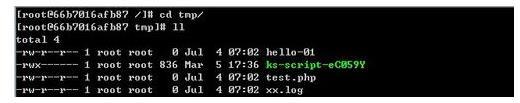

How to access and clean junk files in /tmp directory in CentOS 7?

Dec 27, 2023 pm 09:10 PM

How to access and clean junk files in /tmp directory in CentOS 7?

Dec 27, 2023 pm 09:10 PM

There is a lot of garbage in the tmp directory in the centos7 system. If you want to clear the garbage, how should you do it? Let’s take a look at the detailed tutorial below. To view the list of files in the tmp file directory, execute the command cdtmp/ to switch to the current file directory of tmp, and execute the ll command to view the list of files in the current directory. As shown below. Use the rm command to delete files. It should be noted that the rm command deletes files from the system forever. Therefore, it is recommended that when using the rm command, it is best to give a prompt before deleting the file. Use the command rm-i file name, wait for the user to confirm deletion (y) or skip deletion (n), and the system will perform corresponding operations. As shown below.

What does tmp mean in linux

Mar 10, 2023 am 09:26 AM

What does tmp mean in linux

Mar 10, 2023 am 09:26 AM

In Linux, tmp refers to a folder that stores temporary files. This folder contains temporary files created by the system and users; the default time limit of the tmp folder is 30 days. Files under tmp that are not accessed for 30 days will be automatically deleted by the system. of.

Java Errors: Hadoop Errors, How to Handle and Avoid

Jun 24, 2023 pm 01:06 PM

Java Errors: Hadoop Errors, How to Handle and Avoid

Jun 24, 2023 pm 01:06 PM

Java Errors: Hadoop Errors, How to Handle and Avoid When using Hadoop to process big data, you often encounter some Java exception errors, which may affect the execution of tasks and cause data processing to fail. This article will introduce some common Hadoop errors and provide ways to deal with and avoid them. Java.lang.OutOfMemoryErrorOutOfMemoryError is an error caused by insufficient memory of the Java virtual machine. When Hadoop is

What file is TmP?

Dec 25, 2023 pm 03:39 PM

What file is TmP?

Dec 25, 2023 pm 03:39 PM

The "tmp" file is a temporary file, usually generated by the operating system or program during operation, and is used to store temporary data or intermediate results when the program is running. These files are mainly used to help the program execute smoothly, but they are usually automatically deleted after the program is executed. tmp files can usually be found in the root directory of the C drive on Windows systems. However, tmp files are associated with a specific application or system, so their specific content and purpose may vary from application to application.

What file is tmp

Feb 22, 2023 pm 02:35 PM

What file is tmp

Feb 22, 2023 pm 02:35 PM

tmp is a temporary file generated by various software or systems, which is often called junk file. Usually, programs that create temporary files delete them when they are finished, but sometimes these files are retained. There may be many reasons why temporary files are retained: the program may be interrupted before completing the installation, or crash during restart; these files generally have little use value, and we can delete them directly.

Using Hadoop and HBase in Beego for big data storage and querying

Jun 22, 2023 am 10:21 AM

Using Hadoop and HBase in Beego for big data storage and querying

Jun 22, 2023 am 10:21 AM

With the advent of the big data era, data processing and storage have become more and more important, and how to efficiently manage and analyze large amounts of data has become a challenge for enterprises. Hadoop and HBase, two projects of the Apache Foundation, provide a solution for big data storage and analysis. This article will introduce how to use Hadoop and HBase in Beego for big data storage and query. 1. Introduction to Hadoop and HBase Hadoop is an open source distributed storage and computing system that can

How to use PHP and Hadoop for big data processing

Jun 19, 2023 pm 02:24 PM

How to use PHP and Hadoop for big data processing

Jun 19, 2023 pm 02:24 PM

As the amount of data continues to increase, traditional data processing methods can no longer handle the challenges brought by the big data era. Hadoop is an open source distributed computing framework that solves the performance bottleneck problem caused by single-node servers in big data processing through distributed storage and processing of large amounts of data. PHP is a scripting language that is widely used in web development and has the advantages of rapid development and easy maintenance. This article will introduce how to use PHP and Hadoop for big data processing. What is HadoopHadoop is