Hadoop Pig Udf Scheme


When your UDF returns a tuple with more than one field, you must specify an output schema. Otherwise Pig treats the multiple fields as a single field.
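A toy model in plain Java can make this rule concrete. It is not Pig's API: the class `FlattenSchemaModel` and its methods are hypothetical. It only mimics the column bookkeeping of the statement `B = foreach A generate flatten(myudfs.Swap(name, age)), gpa;` from the script below, assuming `Swap` declares the two fields `age: int` and `name: chararray`. Without a declared schema, the UDF result counts as one `bytearray` column, so `$2` falls off the end. With the two-field schema, `$2` is `gpa`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Toy model (NOT Pig's API) of how Pig counts the columns of
//   B = foreach A generate flatten(myudfs.Swap(name, age)), gpa;
public class FlattenSchemaModel {

    // udfFields == null models a UDF without outputSchema(): Pig then sees the
    // returned tuple as a single bytearray column, even after flatten().
    static List<String> schemaOfB(List<String> udfFields) {
        List<String> columns = new ArrayList<>();
        if (udfFields == null) {
            columns.add("bytearray");
        } else {
            columns.addAll(udfFields);
        }
        columns.add("gpa: float");
        return columns;
    }

    // Models positional projection ($n); indexing starts at 0.
    static String project(List<String> schema, int index) {
        if (index < 0 || index >= schema.size()) {
            throw new IndexOutOfBoundsException("Trying to access non-existent column: "
                    + index + ". Schema has " + schema.size() + " column(s).");
        }
        return schema.get(index);
    }

    public static void main(String[] args) {
        List<String> noSchema = schemaOfB(null);
        // Assumed declared fields of Swap: (age, name), i.e. the inputs swapped.
        List<String> withSchema = schemaOfB(Arrays.asList("age: int", "name: chararray"));
        System.out.println(noSchema);               // [bytearray, gpa: float]
        System.out.println(project(withSchema, 2)); // gpa: float
        try {
            project(noSchema, 2);
        } catch (IndexOutOfBoundsException e) {
            System.out.println(e.getMessage());
        }
    }
}
```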

```pig
register myudfs.jar;
A = load 'student_data' as (name: chararray, age: int, gpa: float);
B = foreach A generate flatten(myudfs.Swap(name, age)), gpa;
C = foreach B generate $2;
D = limit B 20;
dump D;
```

This script fails with the following error, caused by line 4 (`C = foreach B generate $2;`):

```
java.io.IOException: Out of bound access. Trying to access non-existent column: 2. Schema {bytearray,gpa: float} has 2 column(s).
```

This is because Pig knows about only two columns in B, while line 4 requests the third column of the tuple (column indexing in Pig starts at 0). The fix is to have the UDF declare its output schema.

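Below is a UDF that declares such a schema. It takes four input fields (platform, mac, imei, openudid) and outputs two: on Android the ukey is built from mac and imei, on iOS (iPhone and iPad) from mac and openudid, and on any other platform from all three.

It returns a (platform, ukey) tuple.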
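Because `exec()` depends on Pig's `Tuple` classes, the key rule itself is easier to try out in plain Java. The sketch below is a hypothetical Pig-free restatement (the class `UkeySketch` and method `formatUkey` are illustrative names, not part of the original post). It assumes the same branch order as the UDF and, like `String.format("%s", null)`, writes a missing id as the literal text `null` before upper-casing.

```java
// Hypothetical Pig-free restatement of the ukey rule in KoudaiFormateUkey.
public class UkeySketch {

    // Returns {platform, ukey}, or null when the platform or all relevant ids are missing.
    static String[] formatUkey(String rawPlatform, String mac, String imei, String openudid) {
        if (rawPlatform == null) {
            return null;
        }
        // Same check order as the UDF: IPHONE, ANDROID, IPAD, then everything else.
        String upper = rawPlatform.toUpperCase();
        String platform;
        if (upper.contains("IPHONE")) {
            platform = "iphone";
        } else if (upper.contains("ANDROID")) {
            platform = "android";
        } else if (upper.contains("IPAD")) {
            platform = "ipad";
        } else {
            platform = "unknow"; // value as spelled in the original UDF
        }

        String ukey;
        if (platform.equals("iphone") || platform.equals("ipad")) {
            // iOS: mac + openudid
            if (mac == null && openudid == null) {
                return null;
            }
            ukey = mac + "_" + openudid;
        } else if (platform.equals("android")) {
            // Android: mac + imei
            if (mac == null && imei == null) {
                return null;
            }
            ukey = mac + "_" + imei;
        } else {
            // Other platforms: all three ids
            if (mac == null && imei == null && openudid == null) {
                return null;
            }
            ukey = mac + "_" + imei + "_" + openudid;
        }
        // A null id is concatenated as the text "null", which becomes "NULL" here.
        return new String[] {platform, ukey.toUpperCase()};
    }

    public static void main(String[] args) {
        String[] android = formatUkey("Android 4.4", "aa:bb", "8612345", null);
        System.out.println(android[0] + "," + android[1]); // android,AA:BB_8612345
        String[] iphone = formatUkey("iPhone OS", null, null, "abc");
        System.out.println(iphone[0] + "," + iphone[1]);   // iphone,NULL_ABC
    }
}
```

Returning a two-element array stands in for the Pig output tuple; the full Pig UDF follows.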

```java
package kload;

import java.io.IOException;

import org.apache.pig.EvalFunc;
import org.apache.pig.data.DataType;
import org.apache.pig.data.Tuple;
import org.apache.pig.data.TupleFactory;
import org.apache.pig.impl.logicalLayer.schema.Schema;

/**
 * Translates (platform, mac, imei, openudid) into a (platform, ukey) tuple.
 */
public class KoudaiFormateUkey extends EvalFunc<Tuple> {

    private static final TupleFactory TUPLE_FACTORY = TupleFactory.getInstance();

    @Override
    public Tuple exec(Tuple input) throws IOException {
        // Expect four fields: platform, mac, imei, openudid.
        if (input == null || input.size() < 4) {
            return null;
        }
        try {
            String rawPlatform = asString(input.get(0));
            String mac = asString(input.get(1));
            String imei = asString(input.get(2));
            String openudID = asString(input.get(3));
            if (rawPlatform == null) {
                return null;
            }
            // Locals instead of instance fields, so no value leaks over from a previous row.
            String platform = normalizePlatform(rawPlatform);
            String ukey = buildUkey(platform, mac, imei, openudID);
            if (ukey == null) {
                return null;
            }
            Tuple output = TUPLE_FACTORY.newTuple(2);
            output.set(0, platform);
            output.set(1, ukey);
            return output;
        } catch (Exception e) {
            throw new IOException("Caught exception processing input row ", e);
        }
    }

    // chararray arrives as String, bytearray as DataByteArray; toString() covers both.
    private static String asString(Object field) {
        return field == null ? null : field.toString();
    }

    // Check order matters: IPHONE, then ANDROID, then IPAD.
    private static String normalizePlatform(String platform) {
        String upper = platform.toUpperCase();
        if (upper.contains("IPHONE")) {
            return "iphone";
        } else if (upper.contains("ANDROID")) {
            return "android";
        } else if (upper.contains("IPAD")) {
            return "ipad";
        }
        return "unknow";
    }

    // Returns null only if all relevant ids are null; a single null id is
    // formatted as the text "null" and upper-cased to "NULL".
    private static String buildUkey(String platform, String mac, String imei, String openudID) {
        String ukey;
        if ("iphone".equals(platform) || "ipad".equals(platform)) {
            // iOS: mac + openudid
            if (mac == null && openudID == null) {
                return null;
            }
            ukey = String.format("%s_%s", mac, openudID);
        } else if ("android".equals(platform)) {
            // Android: mac + imei
            if (mac == null && imei == null) {
                return null;
            }
            ukey = String.format("%s_%s", mac, imei);
        } else {
            // Other platforms: mac + imei + openudid
            if (mac == null && imei == null && openudID == null) {
                return null;
            }
            ukey = String.format("%s_%s_%s", mac, imei, openudID);
        }
        return ukey.toUpperCase();
    }

    @Override
    public Schema outputSchema(Schema input) {
        try {
            // Declare both output fields so that flatten() exposes two columns.
            Schema tupleSchema = new Schema();
            tupleSchema.add(new Schema.FieldSchema("platform", DataType.CHARARRAY));
            tupleSchema.add(new Schema.FieldSchema("ukey", DataType.CHARARRAY));
            return new Schema(new Schema.FieldSchema(
                    getSchemaName(this.getClass().getName().toLowerCase(), input),
                    tupleSchema, DataType.TUPLE));
        } catch (Exception e) {
            return null;
        }
    }
}
```

Source: Hadoop Pig Udf Scheme. Thanks to the original author for sharing.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

Java Errors: Hadoop Errors, How to Handle and Avoid

Jun 24, 2023 pm 01:06 PM

Java Errors: Hadoop Errors, How to Handle and Avoid

Jun 24, 2023 pm 01:06 PM

Java Errors: Hadoop Errors, How to Handle and Avoid When using Hadoop to process big data, you often encounter some Java exception errors, which may affect the execution of tasks and cause data processing to fail. This article will introduce some common Hadoop errors and provide ways to deal with and avoid them. Java.lang.OutOfMemoryErrorOutOfMemoryError is an error caused by insufficient memory of the Java virtual machine. When Hadoop is

Using Hadoop and HBase in Beego for big data storage and querying

Jun 22, 2023 am 10:21 AM

Using Hadoop and HBase in Beego for big data storage and querying

Jun 22, 2023 am 10:21 AM

With the advent of the big data era, data processing and storage have become more and more important, and how to efficiently manage and analyze large amounts of data has become a challenge for enterprises. Hadoop and HBase, two projects of the Apache Foundation, provide a solution for big data storage and analysis. This article will introduce how to use Hadoop and HBase in Beego for big data storage and query. 1. Introduction to Hadoop and HBase Hadoop is an open source distributed storage and computing system that can

How to use PHP and Hadoop for big data processing

Jun 19, 2023 pm 02:24 PM

How to use PHP and Hadoop for big data processing

Jun 19, 2023 pm 02:24 PM

As the amount of data continues to increase, traditional data processing methods can no longer handle the challenges brought by the big data era. Hadoop is an open source distributed computing framework that solves the performance bottleneck problem caused by single-node servers in big data processing through distributed storage and processing of large amounts of data. PHP is a scripting language that is widely used in web development and has the advantages of rapid development and easy maintenance. This article will introduce how to use PHP and Hadoop for big data processing. What is HadoopHadoop is

Explore the application of Java in the field of big data: understanding of Hadoop, Spark, Kafka and other technology stacks

Dec 26, 2023 pm 02:57 PM

Explore the application of Java in the field of big data: understanding of Hadoop, Spark, Kafka and other technology stacks

Dec 26, 2023 pm 02:57 PM

Java big data technology stack: Understand the application of Java in the field of big data, such as Hadoop, Spark, Kafka, etc. As the amount of data continues to increase, big data technology has become a hot topic in today's Internet era. In the field of big data, we often hear the names of Hadoop, Spark, Kafka and other technologies. These technologies play a vital role, and Java, as a widely used programming language, also plays a huge role in the field of big data. This article will focus on the application of Java in large

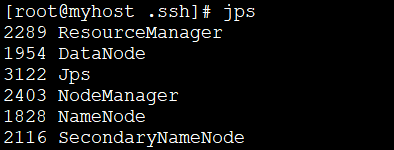

How to install Hadoop in linux

May 18, 2023 pm 08:19 PM

How to install Hadoop in linux

May 18, 2023 pm 08:19 PM

1: Install JDK1. Execute the following command to download the JDK1.8 installation package. wget--no-check-certificatehttps://repo.huaweicloud.com/java/jdk/8u151-b12/jdk-8u151-linux-x64.tar.gz2. Execute the following command to decompress the downloaded JDK1.8 installation package. tar-zxvfjdk-8u151-linux-x64.tar.gz3. Move and rename the JDK package. mvjdk1.8.0_151//usr/java84. Configure Java environment variables. echo'

Use PHP to achieve large-scale data processing: Hadoop, Spark, Flink, etc.

May 11, 2023 pm 04:13 PM

Use PHP to achieve large-scale data processing: Hadoop, Spark, Flink, etc.

May 11, 2023 pm 04:13 PM

As the amount of data continues to increase, large-scale data processing has become a problem that enterprises must face and solve. Traditional relational databases can no longer meet this demand. For the storage and analysis of large-scale data, distributed computing platforms such as Hadoop, Spark, and Flink have become the best choices. In the selection process of data processing tools, PHP is becoming more and more popular among developers as a language that is easy to develop and maintain. In this article, we will explore how to leverage PHP for large-scale data processing and how

Comparison and application scenarios of Redis and Hadoop

Jun 21, 2023 am 08:28 AM

Comparison and application scenarios of Redis and Hadoop

Jun 21, 2023 am 08:28 AM

Redis and Hadoop are both commonly used distributed data storage and processing systems. However, there are obvious differences between the two in terms of design, performance, usage scenarios, etc. In this article, we will compare the differences between Redis and Hadoop in detail and explore their applicable scenarios. Redis Overview Redis is an open source memory-based data storage system that supports multiple data structures and efficient read and write operations. The main features of Redis include: Memory storage: Redis

Data processing engines in PHP (Spark, Hadoop, etc.)

Jun 23, 2023 am 09:43 AM

Data processing engines in PHP (Spark, Hadoop, etc.)

Jun 23, 2023 am 09:43 AM

In the current Internet era, the processing of massive data is a problem that every enterprise and institution needs to face. As a widely used programming language, PHP also needs to keep up with the times in data processing. In order to process massive data more efficiently, PHP development has introduced some big data processing tools, such as Spark and Hadoop. Spark is an open source data processing engine that can be used for distributed processing of large data sets. The biggest feature of Spark is its fast data processing speed and efficient data storage.