Pseudo-Distributed Installation and Deployment of CDH4.2.1 and Impala [Original Hands-On Guide]

References:

http://www.cloudera.com/content/cloudera-content/cloudera-docs/CDH4/latest/CDH4-Quick-Start/cdh4qs_topic_3_3.html

http://www.cloudera.com/content/cloudera-content/cloudera-docs/Impala/latest/Installing-and-Using-Impala/Installing-and-Using-Impala.html

http://blog.cloudera.com/blog/2013/02/from-zero-to-impala-in-minutes/

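What is Impala?

Cloudera has released Impala, an open-source project for real-time queries. Measurements across several products reportedly show it running SQL queries 3 to 90 times faster than Hive, which executes queries through MapReduce. Impala is modeled on Google Dremel, but is said to surpass it in SQL functionality.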

1. Install the JDK

$ sudo yum install jdk-6u41-linux-amd64.rpm

2. Install CDH4 in pseudo-distributed mode

$ cd /etc/yum.repos.d/

$ sudo wget http://archive.cloudera.com/cdh4/redhat/6/x86_64/cdh/cloudera-cdh4.repo

$ sudo yum install hadoop-conf-pseudo

Format the NameNode.

$ sudo -u hdfs hdfs namenode -format

Start HDFS

$ for x in $(cd /etc/init.d && ls hadoop-hdfs-*); do sudo service "$x" start; done

Create the /tmp directory

$ sudo -u hdfs hadoop fs -rm -r /tmp

$ sudo -u hdfs hadoop fs -mkdir /tmp

$ sudo -u hdfs hadoop fs -chmod -R 1777 /tmp

Create the YARN and log directories

$ sudo -u hdfs hadoop fs -mkdir /tmp/hadoop-yarn/staging

$ sudo -u hdfs hadoop fs -chmod -R 1777 /tmp/hadoop-yarn/staging

$ sudo -u hdfs hadoop fs -mkdir /tmp/hadoop-yarn/staging/history/done_intermediate

$ sudo -u hdfs hadoop fs -chmod -R 1777 /tmp/hadoop-yarn/staging/history/done_intermediate

$ sudo -u hdfs hadoop fs -chown -R mapred:mapred /tmp/hadoop-yarn/staging

$ sudo -u hdfs hadoop fs -mkdir /var/log/hadoop-yarn

$ sudo -u hdfs hadoop fs -chown yarn:mapred /var/log/hadoop-yarn
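
The directory steps above repeat the same mkdir/chmod pattern, so they can be wrapped in a small helper. The following is only a sketch: `run`, `hdfs_mkdir_mode`, and the `DRY_RUN` switch are hypothetical names introduced for illustration, not part of Hadoop or CDH. With `DRY_RUN=1` (the default here) the commands are printed instead of executed, which makes it easy to review them first.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for illustration; not part of Hadoop or CDH.
# DRY_RUN=1 (default) prints each command; DRY_RUN=0 executes it.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# hdfs_mkdir_mode PATH MODE
# Create PATH in HDFS as the hdfs user, then set MODE recursively.
hdfs_mkdir_mode() {
  local path="$1" mode="$2"
  run sudo -u hdfs hadoop fs -mkdir "$path" || return 1
  run sudo -u hdfs hadoop fs -chmod -R "$mode" "$path" || return 1
}

# Same order as the manual steps: create both directories, then change owner.
hdfs_mkdir_mode /tmp/hadoop-yarn/staging 1777
hdfs_mkdir_mode /tmp/hadoop-yarn/staging/history/done_intermediate 1777
run sudo -u hdfs hadoop fs -chown -R mapred:mapred /tmp/hadoop-yarn/staging
```

Running the script with `DRY_RUN=0` would execute the same commands that were typed by hand above.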

Check the HDFS file tree

$ sudo -u hdfs hadoop fs -ls -R /

Start YARN

$ sudo service hadoop-yarn-resourcemanager start

$ sudo service hadoop-yarn-nodemanager start

$ sudo service hadoop-mapreduce-historyserver start

Create a user directory (using the user dong.guo as an example):

$ sudo -u hdfs hadoop fs -mkdir /user/dong.guo

$ sudo -u hdfs hadoop fs -chown dong.guo /user/dong.guo

Test uploading files

$ hadoop fs -mkdir input

$ hadoop fs -put /etc/hadoop/conf/*.xml input

$ hadoop fs -ls input

Set the HADOOP_MAPRED_HOME environment variable

$ export HADOOP_MAPRED_HOME=/usr/lib/hadoop-mapreduce
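
An `export` typed at the prompt only lasts for the current shell session, which is likely why the same line has to be entered again later in this guide. One possible way to make it persistent is to append it to a shell startup file exactly once. The helper name `append_once` is hypothetical, and which startup file to use (for example `~/.bashrc`) is an assumption that depends on your shell setup.

```shell
# append_once LINE FILE
# Hypothetical helper: append LINE to FILE only if FILE does not
# already contain that exact line, so repeated runs stay idempotent.
append_once() {
  local line="$1" file="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

For example, `append_once 'export HADOOP_MAPRED_HOME=/usr/lib/hadoop-mapreduce' ~/.bashrc` would add the line on the first call and do nothing on later calls.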

Run a test job

$ hadoop jar /usr/lib/hadoop-mapreduce/hadoop-mapreduce-examples.jar grep input output23 'dfs[a-z.]+'

After the job completes, you can see the following directories

$ hadoop fs -ls

$ hadoop fs -ls output23

$ hadoop fs -cat output23/part-r-00000 | head
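
The grep example job extracts every string matching the regular expression from the input files and counts how often each one occurs. As a rough local cross-check, the same counting can be sketched with standard tools on the local copies of the files. The function name `count_matches` is hypothetical, and the ordering of entries with equal counts may differ from the job output.

```shell
# count_matches REGEX FILE...
# Hypothetical helper: print "count<TAB>match" for every distinct string
# matching REGEX in the given local files, highest count first.
count_matches() {
  local regex="$1"
  shift
  grep -ohE -- "$regex" "$@" | sort | uniq -c | sort -rn |
    awk '{ print $1 "\t" $2 }'
}
```

Calling `count_matches 'dfs[a-z.]+' /etc/hadoop/conf/*.xml` should give counts comparable to the contents of `output23/part-r-00000`, assuming the local files are the ones that were uploaded to `input`.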

3. Install Hive

$ sudo yum install hive hive-metastore hive-server

$ sudo yum install mysql-server

$ sudo service mysqld start

$ cd ~

$ wget 'http://cdn.mysql.com/Downloads/Connector-J/mysql-connector-java-5.1.25.tar.gz'

$ tar xzf mysql-connector-java-5.1.25.tar.gz

$ sudo cp mysql-connector-java-5.1.25/mysql-connector-java-5.1.25-bin.jar /usr/lib/hive/lib/

$ sudo /usr/bin/mysql_secure_installation

$ mysql -u root -phadoophive

$ sudo mv /etc/hive/conf/hive-site.xml /etc/hive/conf/hive-site.xml.bak

$ sudo vim /etc/hive/conf/hive-site.xml
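
The file contents used in the original setup are not reproduced here. As a rough orientation only, a hive-site.xml that points the metastore at a local MySQL database typically sets the JDBC connection properties and the metastore URI. The sketch below writes such a file to a path of your choice. Every value in it is an assumption: the database name `metastore`, the user `hive`, the placeholder password `CHANGE_ME`, and the Thrift address `thrift://127.0.0.1:9083`. The function name `write_hive_site` is hypothetical.

```shell
# write_hive_site FILE
# Hypothetical helper: write an illustrative hive-site.xml to FILE.
# All values are assumptions and must be adapted to your own setup.
write_hive_site() {
  local file="$1"
  cat > "$file" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://localhost/metastore</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>CHANGE_ME</value>
  </property>
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://127.0.0.1:9083</value>
  </property>
</configuration>
EOF
}
```

Writing to a scratch path first, for example `write_hive_site /tmp/hive-site.xml`, lets you review and edit the result before copying it to `/etc/hive/conf/`.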

$ sudo service hive-metastore start

$ sudo service hive-server start

$ sudo -u hdfs hadoop fs -mkdir /user/hive

$ sudo -u hdfs hadoop fs -chown hive /user/hive

$ sudo -u hdfs hadoop fs -mkdir /tmp

$ sudo -u hdfs hadoop fs -chmod 777 /tmp

$ sudo -u hdfs hadoop fs -chmod o+t /tmp

$ sudo -u hdfs hadoop fs -mkdir /data

$ sudo -u hdfs hadoop fs -chown hdfs /data

$ sudo -u hdfs hadoop fs -chmod 777 /data

$ sudo -u hdfs hadoop fs -chmod o+t /data

$ sudo chown -R hive:hive /var/lib/hive

$ sudo vim /tmp/kv1.txt

$ sudo -u hive hive

$ export HADOOP_MAPRED_HOME=/usr/lib/hadoop-mapreduce

4. Install Impala

$ cd /etc/yum.repos.d/

$ sudo wget http://archive.cloudera.com/impala/redhat/6/x86_64/impala/cloudera-impala.repo

$ sudo yum install impala impala-shell

$ sudo yum install impala-server impala-state-store

$ sudo vim /etc/hadoop/conf/hdfs-site.xml

$ sudo cp -rpa /etc/hadoop/conf/core-site.xml /etc/impala/conf/

$ sudo cp -rpa /etc/hadoop/conf/hdfs-site.xml /etc/impala/conf/

$ sudo service hadoop-hdfs-datanode restart

$ sudo service impala-state-store restart

$ sudo service impala-server restart

$ sudo /usr/java/default/bin/jps

5. Install HBase

$ sudo yum install hbase

$ sudo vim /etc/security/limits.conf

$ sudo vim /etc/pam.d/common-session

$ sudo vim /etc/hadoop/conf/hdfs-site.xml

$ sudo cp /usr/lib/impala/lib/hive-hbase-handler-0.10.0-cdh4.2.0.jar /usr/lib/hive/lib/hive-hbase-handler-0.10.0-cdh4.2.0.jar

$ sudo /etc/init.d/hadoop-hdfs-namenode restart

$ sudo /etc/init.d/hadoop-hdfs-datanode restart

$ sudo yum install hbase-master

$ sudo service hbase-master start

$ sudo -u hive hive

6. Test Impala performance

View parameters on http://ec2-204-236-182-78.us-west-1.compute.amazonaws.com:25000

$ impala-shell

Comparing the two COUNT results, Hive took 28.195 seconds while Impala took only 0.34 seconds, so for this query Impala clearly outperforms Hive.
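
To put the two timings into a single number, the speedup factor can be computed directly. This is a small sketch; the function name `speedup` is hypothetical and `awk` is used only for the floating-point division.

```shell
# speedup SLOW_SECONDS FAST_SECONDS
# Hypothetical helper: print how many times faster the second timing is.
speedup() {
  awk -v slow="$1" -v fast="$2" 'BEGIN { printf "%.1f\n", slow / fast }'
}

speedup 28.195 0.34   # prints 82.9
```

A factor of roughly 83 falls inside the 3 to 90 times range quoted in the introduction, although a single COUNT on a small pseudo-distributed setup is not a representative benchmark.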

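Original article: Pseudo-Distributed Installation and Deployment of CDH4.2.1 and Impala [Original Hands-On Guide]. Thanks to the original author for sharing.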

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Solution to the problem that Win11 system cannot install Chinese language pack

Mar 09, 2024 am 09:48 AM

Solution to the problem that Win11 system cannot install Chinese language pack

Mar 09, 2024 am 09:48 AM

Solution to the problem that Win11 system cannot install Chinese language pack With the launch of Windows 11 system, many users began to upgrade their operating system to experience new functions and interfaces. However, some users found that they were unable to install the Chinese language pack after upgrading, which troubled their experience. In this article, we will discuss the reasons why Win11 system cannot install the Chinese language pack and provide some solutions to help users solve this problem. Cause Analysis First, let us analyze the inability of Win11 system to

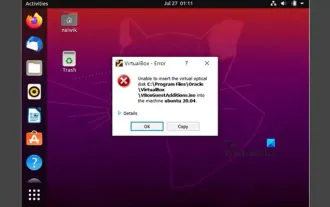

Unable to install guest additions in VirtualBox

Mar 10, 2024 am 09:34 AM

Unable to install guest additions in VirtualBox

Mar 10, 2024 am 09:34 AM

You may not be able to install guest additions to a virtual machine in OracleVirtualBox. When we click on Devices>InstallGuestAdditionsCDImage, it just throws an error as shown below: VirtualBox - Error: Unable to insert virtual disc C: Programming FilesOracleVirtualBoxVBoxGuestAdditions.iso into ubuntu machine In this post we will understand what happens when you What to do when you can't install guest additions in VirtualBox. Unable to install guest additions in VirtualBox If you can't install it in Virtua

What should I do if Baidu Netdisk is downloaded successfully but cannot be installed?

Mar 13, 2024 pm 10:22 PM

What should I do if Baidu Netdisk is downloaded successfully but cannot be installed?

Mar 13, 2024 pm 10:22 PM

If you have successfully downloaded the installation file of Baidu Netdisk, but cannot install it normally, it may be that there is an error in the integrity of the software file or there is a problem with the residual files and registry entries. Let this site take care of it for users. Let’s introduce the analysis of the problem that Baidu Netdisk is successfully downloaded but cannot be installed. Analysis of the problem that Baidu Netdisk downloaded successfully but could not be installed 1. Check the integrity of the installation file: Make sure that the downloaded installation file is complete and not damaged. You can download it again, or try to download the installation file from another trusted source. 2. Turn off anti-virus software and firewall: Some anti-virus software or firewall programs may prevent the installation program from running properly. Try disabling or exiting the anti-virus software and firewall, then re-run the installation

How to install Android apps on Linux?

Mar 19, 2024 am 11:15 AM

How to install Android apps on Linux?

Mar 19, 2024 am 11:15 AM

Installing Android applications on Linux has always been a concern for many users. Especially for Linux users who like to use Android applications, it is very important to master how to install Android applications on Linux systems. Although running Android applications directly on Linux is not as simple as on the Android platform, by using emulators or third-party tools, we can still happily enjoy Android applications on Linux. The following will introduce how to install Android applications on Linux systems.

How to install Podman on Ubuntu 24.04

Mar 22, 2024 am 11:26 AM

How to install Podman on Ubuntu 24.04

Mar 22, 2024 am 11:26 AM

If you have used Docker, you must understand daemons, containers, and their functions. A daemon is a service that runs in the background when a container is already in use in any system. Podman is a free management tool for managing and creating containers without relying on any daemon such as Docker. Therefore, it has advantages in managing containers without the need for long-term backend services. Additionally, Podman does not require root-level permissions to be used. This guide discusses in detail how to install Podman on Ubuntu24. To update the system, we first need to update the system and open the Terminal shell of Ubuntu24. During both installation and upgrade processes, we need to use the command line. a simple

How to Install and Run the Ubuntu Notes App on Ubuntu 24.04

Mar 22, 2024 pm 04:40 PM

How to Install and Run the Ubuntu Notes App on Ubuntu 24.04

Mar 22, 2024 pm 04:40 PM

While studying in high school, some students take very clear and accurate notes, taking more notes than others in the same class. For some, note-taking is a hobby, while for others, it is a necessity when they easily forget small information about anything important. Microsoft's NTFS application is particularly useful for students who wish to save important notes beyond regular lectures. In this article, we will describe the installation of Ubuntu applications on Ubuntu24. Updating the Ubuntu System Before installing the Ubuntu installer, on Ubuntu24 we need to ensure that the newly configured system has been updated. We can use the most famous "a" in Ubuntu system

Detailed steps to install Go language on Win7 computer

Mar 27, 2024 pm 02:00 PM

Detailed steps to install Go language on Win7 computer

Mar 27, 2024 pm 02:00 PM

Detailed steps to install Go language on Win7 computer Go (also known as Golang) is an open source programming language developed by Google. It is simple, efficient and has excellent concurrency performance. It is suitable for the development of cloud services, network applications and back-end systems. . Installing the Go language on a Win7 computer allows you to quickly get started with the language and start writing Go programs. The following will introduce in detail the steps to install the Go language on a Win7 computer, and attach specific code examples. Step 1: Download the Go language installation package and visit the Go official website

How to install Go language under Win7 system?

Mar 27, 2024 pm 01:42 PM

How to install Go language under Win7 system?

Mar 27, 2024 pm 01:42 PM

Installing Go language under Win7 system is a relatively simple operation. Just follow the following steps to successfully install it. The following will introduce in detail how to install Go language under Win7 system. Step 1: Download the Go language installation package. First, open the Go language official website (https://golang.org/) and enter the download page. On the download page, select the installation package version compatible with Win7 system to download. Click the Download button and wait for the installation package to download. Step 2: Install Go language