Real-time Profiling a MongoDB Cluster

by A. Jesse Jiryu Davis, Python Evangelist at 10gen In a sharded cluster of replica sets, which server or servers handle each of your queries? What about each insert, update, or command? If you know how a MongoDB cluster routes operations

by A. Jesse Jiryu Davis, Python Evangelist at 10gen

In a sharded cluster of replica sets, which server or servers handle each of your queries? What about each insert, update, or command? If you know how a MongoDB cluster routes operations among its servers, you can predict how your application will scale as you add shards and add members to shards.

Operations are routed according to the type of operation, your shard key, and your read preference. Let’s set up a cluster and use the system profiler to see where each operation is run. This is an interactive, experimental way to learn how your cluster really behaves and how your architecture will scale.

Setup

You’ll need a recent install of MongoDB (I’m using 2.4.4), Python, a recent version of PyMongo (at least 2.4—I’m using 2.5.2) and the code in my cluster-profile repository on GitHub. If you install the Colorama Python package you’ll get cute colored output. These scripts were tested on my Mac.

Sharded cluster of replica sets

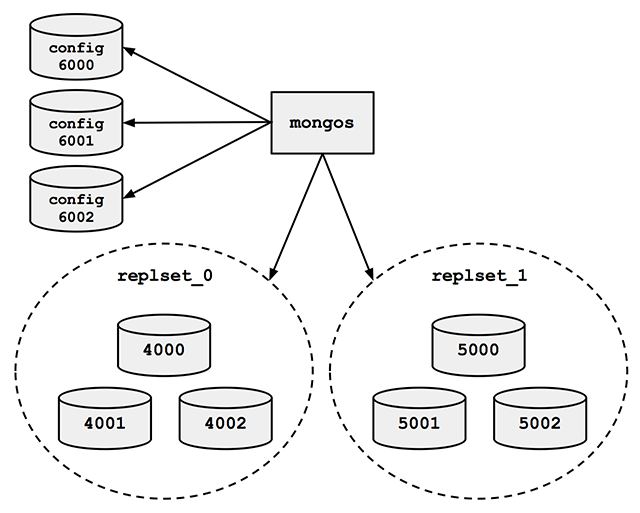

Run the cluster_setup.py script in my repository. It sets up a standard sharded cluster for you running on your local machine. There’s a mongos, three config servers, and two shards, each of which is a three-member replica set. The first shard’s replica set is running on ports 4000 through 4002, the second shard is on ports 5000 through 5002, and the three config servers are on ports 6000 through 6002:

For the finale, cluster_setup.py makes a collection named sharded_collection, sharded on a key named shard_key.

In a normal deployment, we’d let MongoDB’s balancer automatically distribute chunks of data among our two shards. But for this demo we want documents to be on predictable shards, so my script disables the balancer. It makes a chunk for all documents with shard_key less than 500 and another chunk for documents with shard_key greater than or equal to 500. It moves the high chunk to replset_1:

client = MongoClient() # Connect to mongos. admin = client.admin # admin database.

Pre-split.

admin.command(

'split', 'test.sharded_collection',

middle={'shard_key': 500})

admin.command(

'moveChunk', 'test.sharded_collection',

find={'shard_key': 500},

to='replset_1')If you connect to mongos with the MongoDB shell, sh.status() shows there’s one chunk on each of the two shards:

{ "shard_key" : { "$minKey" : 1 } } -->> { "shard_key" : 500 } on : replset_0 { "t" : 2, "i" : 1 }

{ "shard_key" : 500 } -->> { "shard_key" : { "$maxKey" : 1 } } on : replset_1 { "t" : 2, "i" : 0 }The setup script also inserts a document with a shard_key of 0 and another with a shard_key of 500. Now we’re ready for some profiling.

Profiling

Run the tail_profile.py script from my repository. It connects to all the replica set members. On each, it sets the profiling level to 2 (“log everything”) on the test database, and creates a tailable cursor on the system.profile collection. The script filters out some noise in the profile collection—for example, the activities of the tailable cursor show up in the system.profile collection that it’s tailing. Any legitimate entries in the profile are spat out to the console in pretty colors.

Experiments

Targeted queries versus scatter-gather

Let’s run a query from Python in a separate terminal:

>>> from pymongo import MongoClient

>>> # Connect to mongos.

>>> collection = MongoClient().test.sharded_collection

>>> collection.find_one({'shard_key': 0})

{'_id': ObjectId('51bb6f1cca1ce958c89b348a'), 'shard_key': 0}

tail_profile.py prints:

replset_0 primary on 4000: query test.sharded_collection {“shard_key”: 0}

The query includes the shard key, so mongos reads from the shard that can satisfy it. Adding shards can scale out your throughput on a query like this. What about a query that doesn’t contain the shard key?:

>>> collection.find_one({})mongos sends the query to both shards:

replset_0 primary on 4000: query test.sharded_collection {“shard_key”: 0}

replset_1 primary on 5000: query test.sharded_collection {“shard_key”: 500}

For fan-out queries like this, adding more shards won’t scale out your query throughput as well as it would for targeted queries, because every shard has to process every query. But we can scale throughput on queries like these by reading from secondaries.

Queries with read preferences

We can use read preferences to read from secondaries:

>>> from pymongo.read_preferences import ReadPreference

>>> collection.find_one({}, read_preference=ReadPreference.SECONDARY)tail_profile.py shows us that mongos chose a random secondary from each shard:

replset_0 secondary on 4001: query test.sharded_collection {“$readPreference”: {“mode”: “secondary”}, “$query”: {}}

replset_1 secondary on 5001: query test.sharded_collection {“$readPreference”: {“mode”: “secondary”}, “$query”: {}}

Note how PyMongo passes the read preference to mongos in the query, as the $readPreference field. mongos targets one secondary in each of the two replica sets.

Updates

With a sharded collection, updates must either include the shard key or be “multi-updates”. An update with the shard key goes to the proper shard, of course:

>>> collection.update({'shard_key': -100}, {'$set': {'field': 'value'}})replset_0 primary on 4000: update test.sharded_collection {“shard_key”: -100}

mongos only sends the update to replset_0, because we put the chunk of documents with shard_key less than 500 there.

A multi-update hits all shards:

>>> collection.update({}, {'$set': {'field': 'value'}}, multi=True)replset_0 primary on 4000: update test.sharded_collection {}

replset_1 primary on 5000: update test.sharded_collection {}

A multi-update on a range of the shard key need only involve the proper shard:

>>> collection.update({'shard_key': {'$gt': 1000}}, {'$set': {'field': 'value'}}, multi=True)replset_1 primary on 5000: update test.sharded_collection {“shard_key”: {“$gt”: 1000}}

So targeted updates that include the shard key can be scaled out by adding shards. Even multi-updates can be scaled out if they include a range of the shard key, but multi-updates without the shard key won’t benefit from extra shards.

Commands

In version 2.4, mongos can use secondaries not only for queries, but also for some commands. You can run count on secondaries if you pass the right read preference:

>>> cursor = collection.find(read_preference=ReadPreference.SECONDARY) >>> cursor.count()

replset_0 secondary on 4001: command count: sharded_collection

replset_1 secondary on 5001: command count: sharded_collection

Whereas findAndModify, since it modifies data, is run on the primaries no matter your read preference:

>>> db = MongoClient().test

>>> test.command(

... 'findAndModify',

... 'sharded_collection',

... query={'shard_key': -1},

... remove=True,

... read_preference=ReadPreference.SECONDARY)replset_0 primary on 4000: command findAndModify: sharded_collection

Go Forth And Scale

To scale a sharded cluster, you should understand how operations are distributed: are they scatter-gather, or targeted to one shard? Do they run on primaries or secondaries? If you set up a cluster and test your queries interactively like we did here, you can see how your cluster behaves in practice, and design your application for future growth.

Read Jesse’s blog, Emptysquare and follow him on Github

原文地址:Real-time Profiling a MongoDB Cluster, 感谢原作者分享。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1377

1377

52

52

What to do if navicat expires

Apr 23, 2024 pm 12:12 PM

What to do if navicat expires

Apr 23, 2024 pm 12:12 PM

Solutions to resolve Navicat expiration issues include: renew the license; uninstall and reinstall; disable automatic updates; use Navicat Premium Essentials free version; contact Navicat customer support.

How to connect navicat to mongodb

Apr 24, 2024 am 11:27 AM

How to connect navicat to mongodb

Apr 24, 2024 am 11:27 AM

To connect to MongoDB using Navicat, you need to: Install Navicat Create a MongoDB connection: a. Enter the connection name, host address and port b. Enter the authentication information (if required) Add an SSL certificate (if required) Verify the connection Save the connection

What is the use of net4.0

May 10, 2024 am 01:09 AM

What is the use of net4.0

May 10, 2024 am 01:09 AM

.NET 4.0 is used to create a variety of applications and it provides application developers with rich features including: object-oriented programming, flexibility, powerful architecture, cloud computing integration, performance optimization, extensive libraries, security, Scalability, data access, and mobile development support.

Integration of Java functions and databases in serverless architecture

Apr 28, 2024 am 08:57 AM

Integration of Java functions and databases in serverless architecture

Apr 28, 2024 am 08:57 AM

In a serverless architecture, Java functions can be integrated with the database to access and manipulate data in the database. Key steps include: creating Java functions, configuring environment variables, deploying functions, and testing functions. By following these steps, developers can build complex applications that seamlessly access data stored in databases.

How to ensure high availability of MongoDB on Debian

Apr 02, 2025 am 07:21 AM

How to ensure high availability of MongoDB on Debian

Apr 02, 2025 am 07:21 AM

This article describes how to build a highly available MongoDB database on a Debian system. We will explore multiple ways to ensure data security and services continue to operate. Key strategy: ReplicaSet: ReplicaSet: Use replicasets to achieve data redundancy and automatic failover. When a master node fails, the replica set will automatically elect a new master node to ensure the continuous availability of the service. Data backup and recovery: Regularly use the mongodump command to backup the database and formulate effective recovery strategies to deal with the risk of data loss. Monitoring and Alarms: Deploy monitoring tools (such as Prometheus, Grafana) to monitor the running status of MongoDB in real time, and

How to configure MongoDB automatic expansion on Debian

Apr 02, 2025 am 07:36 AM

How to configure MongoDB automatic expansion on Debian

Apr 02, 2025 am 07:36 AM

This article introduces how to configure MongoDB on Debian system to achieve automatic expansion. The main steps include setting up the MongoDB replica set and disk space monitoring. 1. MongoDB installation First, make sure that MongoDB is installed on the Debian system. Install using the following command: sudoaptupdatesudoaptinstall-ymongodb-org 2. Configuring MongoDB replica set MongoDB replica set ensures high availability and data redundancy, which is the basis for achieving automatic capacity expansion. Start MongoDB service: sudosystemctlstartmongodsudosys

How to connect nodejs to database

Apr 21, 2024 am 06:16 AM

How to connect nodejs to database

Apr 21, 2024 am 06:16 AM

To connect to the database, Node.js provides multiple database connector packages for MySQL, PostgreSQL, MongoDB, and Redis. The connection steps include: 1. Install the corresponding connector package; 2. Create a connection pool to maintain reusable connections; 3. Establish a connection with the database. Note: The operation is asynchronous and errors need to be handled to ensure security and optimize performance.

Can navicat connect to mongodb?

Apr 23, 2024 pm 05:15 PM

Can navicat connect to mongodb?

Apr 23, 2024 pm 05:15 PM

Yes, Navicat can connect to MongoDB database. Specific steps include: Open Navicat and create a new connection. Select the database type as MongoDB. Enter the MongoDB host address, port, and database name. Enter your MongoDB username and password (if required). Click the "Connect" button.