Custom Hive Access Control (3): Extending Hive to Implement Custom Access Control


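Introduction

The previous two articles prepared the data we need, such as the user permission configuration. This article describes our use case. Because of how we use Hive, we only apply permission control to select; insert, delete, drop and similar operations are already restricted at the database level, and people outside our department have query-only access. That keeps the handling relatively simple.

First, we build a small utility package to handle the data requests. Its main job is to fetch the mapping between users and permissions and organize it into the format I need.

It contains three classes:

HiveTable.java is the object class that models a Hive table. MakeMD5.java is a utility class for MD5-hashing passwords. UserAuthDataMode.java holds the methods that fetch a user's permissions; it reads the information from the database in the required format.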
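To make the "required format" concrete: the comments in UserAuthDataMode describe the column permissions as maps keyed by "dbName.tableName", for example {"dbName.tableName":["phone"]} for excluded columns and {"dbName.tableName":["ptdate","ptchannel"]} for included ones. The following is a minimal sketch of how such maps could be consulted when checking a select. The class name, the method names, and the reading of "included" as "the query must reference these columns" are assumptions made for illustration; they are not taken from the original code.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper, not part of the original package.
final class ColumnAuthSketch {

    /** Returns true when none of the selected columns is on the table's exclude list. */
    static boolean selectAllowed(String tableFullName, List<String> selectedColumns,
                                 Map<String, List<String>> excludeColumns) {
        List<String> blocked =
                excludeColumns.getOrDefault(tableFullName, Collections.<String>emptyList());
        for (String column : selectedColumns) {
            if (blocked.contains(column)) {
                return false;
            }
        }
        return true;
    }

    /** Returns true when every required column of the table is referenced by the query. */
    static boolean requiredColumnsPresent(String tableFullName, List<String> referencedColumns,
                                          Map<String, List<String>> includeColumns) {
        List<String> required =
                includeColumns.getOrDefault(tableFullName, Collections.<String>emptyList());
        return referencedColumns.containsAll(required);
    }

    public static void main(String[] args) {
        Map<String, List<String>> exclude = new HashMap<>();
        exclude.put("dbName.tableName", Arrays.asList("phone"));
        Map<String, List<String>> include = new HashMap<>();
        include.put("dbName.tableName", Arrays.asList("ptdate", "ptchannel"));

        // "phone" is excluded, so selecting it is rejected.
        System.out.println(selectAllowed("dbName.tableName",
                Arrays.asList("id", "phone"), exclude));            // false
        // The query references only ptdate, so ptchannel is missing.
        System.out.println(requiredColumnsPresent("dbName.tableName",
                Arrays.asList("ptdate"), include));                 // false
    }
}
```

A table with no entry in either map is treated as unrestricted in this sketch; whether that default is appropriate depends on how the permissions are meant to be enforced.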

The HiveTable class

    package com.anyoneking.www;

    import java.util.ArrayList;
    import java.util.List;

    public class HiveTable {
        private int id;
        private String tableName;
        private int dbid;
        private String dbName;
        private List<String> partitionList = new ArrayList<String>();

        public int getId() {
            return id;
        }

        public void setId(int id) {
            this.id = id;
        }

        public String getTableName() {
            return tableName;
        }

        public void setTableName(String tableName) {
            this.tableName = tableName;
        }

        public int getDbid() {
            return dbid;
        }

        public void setDbid(int dbid) {
            this.dbid = dbid;
        }

        public String getDbName() {
            return dbName;
        }

        public void setDbName(String dbName) {
            this.dbName = dbName;
        }

        public List<String> getPartitionList() {
            return partitionList;
        }

        public void setPartitionList(List<String> partitionList) {
            this.partitionList = partitionList;
        }

        // Full name in the form "dbName.tableName"
        public String getFullName() {
            return this.dbName + "." + this.tableName;
        }
    }

UserAuthDataMode.java

    package com.anyoneking.www;

    import java.sql.Connection;
    import java.sql.DriverManager;
    import java.sql.PreparedStatement;
    import java.sql.ResultSet;
    import java.sql.Statement;
    import java.util.ArrayList;
    import java.util.Arrays;
    import java.util.HashMap;
    import java.util.List;
    import java.util.Map;

    import org.apache.commons.logging.Log;
    import org.apache.commons.logging.LogFactory;
    import org.apache.hadoop.hive.conf.HiveConf;
    import org.apache.hadoop.hive.ql.Driver;

    /**
     * User authentication class; extracts the relevant information from the database.
     * @author songwei
     */
    public class UserAuthDataMode {
        static final private Log LOG = LogFactory.getLog(Driver.class.getName());
        private HiveConf conf;
        private boolean isSuperUser = false;
        private Map<Integer, HiveTable> allTableMap = new HashMap<Integer, HiveTable>();
        //auth db name List
        private List<String> dbNameList = new ArrayList<String>();
        //auth table name List ex:{"dbName.tableName":HiveTable}
        private Map<String, HiveTable> tableMap = new HashMap<String, HiveTable>();

        //auth table excludeColumnList ex:{"dbName.tableName":["phone"]}
        private Map<String, List<String>> excludeColumnList = new HashMap<String, List<String>>();
        //auth table includeColumnList ex:{"dbName.tableName":["ptdate","ptchannel"]}
        private Map<String, List<String>> includeColumnList = new HashMap<String, List<String>>();

        private List<String> ptchannelValueList = new ArrayList<String>();

        private String userName;
        private String password;
        private Connection conn;
        private int userid;
        private int maxMapCount = 16;
        private int maxRedCount = 16;

        private void createConn() throws Exception {
            Class.forName("com.mysql.jdbc.Driver");
            String dbURL = HiveConf.getVar(this.conf, HiveConf.ConfVars.KUXUN_HIVESERVER_URL);
            String dbUserName = HiveConf.getVar(this.conf, HiveConf.ConfVars.KUXUN_HIVESERVER_USER);
            String dbPassword = HiveConf.getVar(this.conf, HiveConf.ConfVars.KUXUN_HIVESERVER_PASSWORD);
            this.conn = DriverManager.getConnection(dbURL, dbUserName, dbPassword);
            //this.conn = DriverManager.getConnection("jdbc:mysql://localhost:3306/test","test", "tset");
        }

        public UserAuthDataMode(String userName, String password, HiveConf conf) throws Exception {
            this.userName = userName;
            this.password = password;
            this.conf = conf;
            this.createConn();
        }

        private ResultSet getResult(String sql) throws Exception {
            Statement stmt = conn.createStatement();
            ResultSet rs = stmt.executeQuery(sql);
            return rs;
        }

        private void checkUser() throws Exception {
            MakeMD5 md5 = new MakeMD5();
            // Bind the user name as a parameter rather than concatenating it into the SQL.
            String sql = "select username,password,id,is_superuser from auth_user where username=?";
            LOG.debug(sql);
            this.password = md5.makeMD5(this.password);
            PreparedStatement stmt = conn.prepareStatement(sql);
            stmt.setString(1, this.userName);
            ResultSet rs = stmt.executeQuery();
            int size = 0;
            boolean flag = false;
            // size is still 0 at this point, so this check can never fire
            if (size != 0) {
                throw new Exception("username is error");
            }
            while (rs.next()) {
                size += 1;
                this.userid = rs.getInt("id");
                int superUser = rs.getInt("is_superuser");
                if (superUser == 1) {
                    this.isSuperUser = true;
                } else {
                    this.isSuperUser = false;
                }
                String db_password = rs.getString("password");
                if (db_password.equals(this.password)) {
                    flag = true;
                }
            }
            if (size ...
            // [The listing is cut off here in the source. The rest of checkUser(), the start
            // of parseAuth() and the query that loads all tables are missing. It resumes
            // inside the loop that fills allTableMap.]
                ... 0) {
                    String[] pt = ptInfo.split(",");
                    ht.setPartitionList(Arrays.asList(pt));
                }
                this.allTableMap.put(tblid, ht);
            }

            // databases the user is authorized for
            String dbSql = " select t2.hivedb_id,(select name from hive_db where id = t2.hivedb_id) dbname"
                    + " from hive_user_auth t1 join hive_user_auth_dbGroups t2"
                    + " on (t1.id = t2.hiveuserauth_id) "
                    + "where t1.user_id =" + this.userid;
            ResultSet dbrs = this.getResult(dbSql);
            while (dbrs.next()) {
                this.dbNameList.add(dbrs.getString("dbname"));
            }

            // tables the user is authorized for
            String tableSql = "select t2.hivetable_id "
                    + "from hive_user_auth t1 join hive_user_auth_tableGroups t2 "
                    + "on (t1.id = t2.hiveuserauth_id) "
                    + "where t1.user_id =" + this.userid;
            ResultSet tablers = this.getResult(tableSql);
            while (tablers.next()) {
                int tableID = tablers.getInt("hivetable_id");
                LOG.debug("-----" + tableID);
                HiveTable ht = this.allTableMap.get(tableID);
                LOG.debug("---table_name--" + ht.getTableName());
                String tableFullName = ht.getFullName();
                LOG.debug(tableFullName);
                this.tableMap.put(tableFullName, ht);
            }

            // columns the user is not allowed to operate on
            String exSql = "select col.name,col.table_id,col.column "
                    + "from hive_user_auth t1 join hive_user_auth_exGroups t2 "
                    + "on (t1.id = t2.hiveuserauth_id) "
                    + "join hive_excludecolumn col "
                    + "on (t2.excludecolumn_id = col.id) "
                    + "where t1.user_id =" + this.userid;
            ResultSet exrs = this.getResult(exSql);
            while (exrs.next()) {
                int tableID = exrs.getInt("table_id");
                String column = exrs.getString("column");
                HiveTable ht = this.allTableMap.get(tableID);
                String tableFullName = ht.getFullName();
                String[] columnList = column.split(",");
                this.excludeColumnList.put(tableFullName, Arrays.asList(columnList));
            }

            // columns that must be included
            String inSql = "select col.name,col.table_id,col.column "
                    + "from hive_user_auth t1 join hive_user_auth_inGroups t2 "
                    + "on (t1.id = t2.hiveuserauth_id) "
                    + "join hive_includecolumn col "
                    + "on (t2.includecolumn_id = col.id) "
                    + "where t1.user_id =" + this.userid;
            ResultSet inrs = this.getResult(inSql);
            while (inrs.next()) {
                int tableID = inrs.getInt("table_id");
                String column = inrs.getString("column");
                HiveTable ht = this.allTableMap.get(tableID);
                String tableFullName = ht.getFullName();
                String[] columnList = column.split(",");
                this.includeColumnList.put(tableFullName, Arrays.asList(columnList));
            }

            // ptchannel values
            String ptSql = "select val.name "
                    + "from hive_user_auth t1 join hive_user_auth_ptGroups t2 "
                    + "on (t1.id = t2.hiveuserauth_id) "
                    + "join hive_ptchannel_value val "
                    + "on (t2.hiveptchannelvalue_id = val.id) "
                    + "where t1.user_id =" + this.userid;
            ResultSet ptrs = this.getResult(ptSql);
            while (ptrs.next()) {
                String val = ptrs.getString("name");
                this.ptchannelValueList.add(val);
            }
        }

        public int getMaxMapCount() {
            return maxMapCount;
        }

        public void setMaxMapCount(int maxMapCount) {
            this.maxMapCount = maxMapCount;
        }

        public int getMaxRedCount() {
            return maxRedCount;
        }

        public void setMaxRedCount(int maxRedCount) {
            this.maxRedCount = maxRedCount;
        }

        // Verify the user, load the permissions, apply the task limits, then close the connection.
        public void run() throws Exception {
            this.checkUser();
            this.parseAuth();
            this.checkData();
            this.modifyConf();
            this.clearData();
        }

        public void clearData() throws Exception {
            this.conn.close();
        }

        private void modifyConf() {
            this.conf.setInt("mapred.map.tasks", this.maxMapCount);
            //this.conf.setInt("hive.exec.reducers.ma", this.maxRedCount);
            HiveConf.setIntVar(this.conf, HiveConf.ConfVars.MAXREDUCERS, this.maxRedCount);
        }

        // Log the size of each collection as a quick sanity check.
        private void checkData() {
            LOG.debug(this.allTableMap.keySet().size());
            LOG.debug(this.tableMap.keySet().size());
            LOG.debug(this.dbNameList.size());
            LOG.debug(this.excludeColumnList.size());
            LOG.debug(this.includeColumnList.size());
            LOG.debug(this.ptchannelValueList.size());
        }

        public static void main(String[] args) throws Exception {
            // Note: createConn() reads its settings from conf, so a null conf fails there
            // unless the hard-coded connection line is used instead.
            UserAuthDataMode ua = new UserAuthDataMode("swtest", "swtest", null);
            ua.run();
        }

        public List<String> getDbNameList() {
            return dbNameList;
        }

        public void setDbNameList(List<String> dbNameList) {
            this.dbNameList = dbNameList;
        }

        public Map<String, HiveTable> getTableMap() {
            return tableMap;
        }

        public void setTableMap(Map<String, HiveTable> tableMap) {
            this.tableMap = tableMap;
        }

        public Map<String, List<String>> getExcludeColumnList() {
            return excludeColumnList;
        }

        public void setExcludeColumnList(Map<String, List<String>> excludeColumnList) {
            this.excludeColumnList = excludeColumnList;
        }

        public Map<String, List<String>> getIncludeColumnList() {
            return includeColumnList;
        }

        public void setIncludeColumnList(Map<String, List<String>> includeColumnList) {
            this.includeColumnList = includeColumnList;
        }

        public List<String> getPtchannelValueList() {
            return ptchannelValueList;
        }

        public void setPtchannelValueList(List<String> ptchannelValueList) {
            this.ptchannelValueList = ptchannelValueList;
        }
    }

MakeMD5.java

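    package com.anyoneking.www;

    import java.math.BigInteger;
    import java.security.MessageDigest;

    public class MakeMD5 {
        public String makeMD5(String password) {
            MessageDigest md;
            try {
                // Create an MD5 message digest
                md = MessageDigest.getInstance("MD5"); // SHA-1 could be used the same way
                // Feed the password bytes into the digest
                md.update(password.getBytes());
                // digest() finishes the computation and returns the MD5 hash as a 16-byte array.
                // BigInteger turns those bytes into a hexadecimal string of up to 32 characters
                // (toString(16) drops leading zeros, so the string can be shorter).
                String pwd = new BigInteger(1, md.digest()).toString(16); // a radix other than 16 also works, but the result differs
                return pwd;
            } catch (Exception e) {
                e.printStackTrace();
            }
            // Reached only if hashing failed: the input is returned unchanged
            return password;
        }

        public static void main(String[] args) {
            MakeMD5 md5 = new MakeMD5();
            md5.makeMD5("swtest");
        }
    }

Original source: Custom Hive Access Control (3): Extending Hive to Implement Custom Access Control. Thanks to the original author for sharing.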
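One detail of the MakeMD5 listing is worth illustrating: converting the digest with BigInteger.toString(16) omits leading zeros, so the result is not always 32 characters long. The sketch below compares that conversion with a zero-padded one. The class and method names are hypothetical, and the use of UTF-8 is an assumption (the original calls getBytes() with the platform default charset). Whether padding is desirable depends on how the hashes stored in auth_user were produced, which the article does not say.

```java
import java.math.BigInteger;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper, not part of the original package.
final class Md5HexSketch {

    /** Computes the raw 16-byte MD5 digest of the input (UTF-8 is an assumption). */
    static byte[] md5(String input) {
        try {
            MessageDigest md = MessageDigest.getInstance("MD5");
            return md.digest(input.getBytes(StandardCharsets.UTF_8));
        } catch (NoSuchAlgorithmException e) {
            // Every Java platform is required to provide MD5.
            throw new IllegalStateException(e);
        }
    }

    /** Hex string as produced in the listing above: leading zeros are not kept. */
    static String unpaddedHex(String input) {
        return new BigInteger(1, md5(input)).toString(16);
    }

    /** Same digest, left-padded with zeros to the full 32 hex characters. */
    static String paddedHex(String input) {
        return String.format("%032x", new BigInteger(1, md5(input)));
    }

    public static void main(String[] args) {
        System.out.println(unpaddedHex("a")); // cc175b9c0f1b6a831c399e269772661  (31 characters)
        System.out.println(paddedHex("a"));   // 0cc175b9c0f1b6a831c399e269772661 (32 characters)
    }
}
```

If the stored hashes come from a tool that always emits 32 hex characters, the unpadded form would fail to match for any password whose digest starts with a zero nibble.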

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1376

1376

52

52

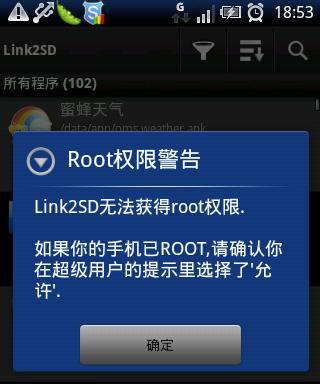

Enable root permissions with one click (quickly obtain root permissions)

Jun 02, 2024 pm 05:32 PM

Enable root permissions with one click (quickly obtain root permissions)

Jun 02, 2024 pm 05:32 PM

It allows users to perform more in-depth operations and customization of the system. Root permission is an administrator permission in the Android system. Obtaining root privileges usually requires a series of tedious steps, which may not be very friendly to ordinary users, however. By enabling root permissions with one click, this article will introduce a simple and effective method to help users easily obtain system permissions. Understand the importance and risks of root permissions and have greater freedom. Root permissions allow users to fully control the mobile phone system. Strengthen security controls, customize themes, and users can delete pre-installed applications. For example, accidentally deleting system files causing system crashes, excessive use of root privileges, and inadvertent installation of malware are also risky, however. Before using root privileges

How to implement dual WeChat login on Huawei mobile phones?

Mar 24, 2024 am 11:27 AM

How to implement dual WeChat login on Huawei mobile phones?

Mar 24, 2024 am 11:27 AM

How to implement dual WeChat login on Huawei mobile phones? With the rise of social media, WeChat has become one of the indispensable communication tools in people's daily lives. However, many people may encounter a problem: logging into multiple WeChat accounts at the same time on the same mobile phone. For Huawei mobile phone users, it is not difficult to achieve dual WeChat login. This article will introduce how to achieve dual WeChat login on Huawei mobile phones. First of all, the EMUI system that comes with Huawei mobile phones provides a very convenient function - dual application opening. Through the application dual opening function, users can simultaneously

Extensions and third-party modules for PHP functions

Apr 13, 2024 pm 02:12 PM

Extensions and third-party modules for PHP functions

Apr 13, 2024 pm 02:12 PM

To extend PHP function functionality, you can use extensions and third-party modules. Extensions provide additional functions and classes that can be installed and enabled through the pecl package manager. Third-party modules provide specific functionality and can be installed through the Composer package manager. Practical examples include using extensions to parse complex JSON data and using modules to validate data.

PHP Programming Guide: Methods to Implement Fibonacci Sequence

Mar 20, 2024 pm 04:54 PM

PHP Programming Guide: Methods to Implement Fibonacci Sequence

Mar 20, 2024 pm 04:54 PM

The programming language PHP is a powerful tool for web development, capable of supporting a variety of different programming logics and algorithms. Among them, implementing the Fibonacci sequence is a common and classic programming problem. In this article, we will introduce how to use the PHP programming language to implement the Fibonacci sequence, and attach specific code examples. The Fibonacci sequence is a mathematical sequence defined as follows: the first and second elements of the sequence are 1, and starting from the third element, the value of each element is equal to the sum of the previous two elements. The first few elements of the sequence

The operation process of edius custom screen layout

Mar 27, 2024 pm 06:50 PM

The operation process of edius custom screen layout

Mar 27, 2024 pm 06:50 PM

1. The picture below is the default screen layout of edius. The default EDIUS window layout is a horizontal layout. Therefore, in a single-monitor environment, many windows overlap and the preview window is in single-window mode. 2. You can enable [Dual Window Mode] through the [View] menu bar to make the preview window display the playback window and recording window at the same time. 3. You can restore the default screen layout through [View menu bar>Window Layout>General]. In addition, you can also customize the layout that suits you and save it as a commonly used screen layout: drag the window to a layout that suits you, then click [View > Window Layout > Save Current Layout > New], and in the pop-up [Save Current Layout] Layout] enter the layout name in the small window and click OK

How to implement the WeChat clone function on Huawei mobile phones

Mar 24, 2024 pm 06:03 PM

How to implement the WeChat clone function on Huawei mobile phones

Mar 24, 2024 pm 06:03 PM

How to implement the WeChat clone function on Huawei mobile phones With the popularity of social software and people's increasing emphasis on privacy and security, the WeChat clone function has gradually become the focus of people's attention. The WeChat clone function can help users log in to multiple WeChat accounts on the same mobile phone at the same time, making it easier to manage and use. It is not difficult to implement the WeChat clone function on Huawei mobile phones. You only need to follow the following steps. Step 1: Make sure that the mobile phone system version and WeChat version meet the requirements. First, make sure that your Huawei mobile phone system version has been updated to the latest version, as well as the WeChat App.

Python ORM Performance Benchmark: Comparing Different ORM Frameworks

Mar 18, 2024 am 09:10 AM

Python ORM Performance Benchmark: Comparing Different ORM Frameworks

Mar 18, 2024 am 09:10 AM

Object-relational mapping (ORM) frameworks play a vital role in python development, they simplify data access and management by building a bridge between object and relational databases. In order to evaluate the performance of different ORM frameworks, this article will benchmark against the following popular frameworks: sqlAlchemyPeeweeDjangoORMPonyORMTortoiseORM Test Method The benchmarking uses a SQLite database containing 1 million records. The test performed the following operations on the database: Insert: Insert 10,000 new records into the table Read: Read all records in the table Update: Update a single field for all records in the table Delete: Delete all records in the table Each operation

Application of Python ORM in big data projects

Mar 18, 2024 am 09:19 AM

Application of Python ORM in big data projects

Mar 18, 2024 am 09:19 AM

Object-relational mapping (ORM) is a programming technology that allows developers to use object programming languages to manipulate databases without writing SQL queries directly. ORM tools in python (such as SQLAlchemy, Peewee, and DjangoORM) simplify database interaction for big data projects. Advantages Code Simplicity: ORM eliminates the need to write lengthy SQL queries, which improves code simplicity and readability. Data abstraction: ORM provides an abstraction layer that isolates application code from database implementation details, improving flexibility. Performance optimization: ORMs often use caching and batch operations to optimize database queries, thereby improving performance. Portability: ORM allows developers to