Hadoop HelloWorld Examples: Computing an Average

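Here is another introductory Hadoop demo: computing an average. It is a small modification of the WordCount demo. The input is a set of score sheets (name, score), and the job computes each person's average score. For example: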

    // subject1.txt
    a 90
    b 80
    c 70

    // subject2.txt
    a 100
    b 90
    c 80

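The goal is to compute the average score for each of a, b, and c. The approach is simple: in the map phase the key is the name and the value is the score, and the pair is emitted directly. In the reduce phase each key is a name from the map output and the values are all the scores recorded for that name, so the reducer only has to average them.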
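The flow described above can be tried without a cluster. The following is a minimal plain-Java sketch, not the Hadoop job itself: the class name `AverageFlowSketch` and its helper methods are made up for illustration, and the grouping step stands in for the shuffle that Hadoop performs between map and reduce.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java illustration of map -> group -> reduce; no Hadoop classes are used.
class AverageFlowSketch {

    // "Map" plus "shuffle": parse each "name score" line and group the scores by name.
    static Map<String, List<Integer>> groupByName(List<String> lines) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            String[] parts = line.trim().split("\\s+");
            grouped.computeIfAbsent(parts[0], k -> new ArrayList<>())
                   .add(Integer.parseInt(parts[1]));
        }
        return grouped;
    }

    // "Reduce": integer average of one person's scores.
    static int average(List<Integer> scores) {
        int sum = 0;
        for (int score : scores) {
            sum += score;
        }
        return sum / scores.size();
    }

    public static void main(String[] args) {
        // The lines of subject1.txt followed by the lines of subject2.txt.
        List<String> lines = Arrays.asList(
                "a 90", "b 80", "c 70", "a 100", "b 90", "c 80");
        groupByName(lines).forEach((name, scores) ->
                System.out.println(name + " " + average(scores)));
    }
}
```

Run on the sample data, this prints an average of 95 for a, 85 for b, and 75 for c.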

Two versions of the code are implemented here: one uses TextInputFormat as the input format and the other uses KeyValueTextInputFormat.
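The practical difference between the two formats is what the mapper receives. With TextInputFormat the key is the line's byte offset in the file and the value is the whole line, so the mapper has to split the line itself. With KeyValueTextInputFormat the line is split at the first separator (a tab by default) into a key and a value before the mapper sees it. The sketch below imitates that split in plain Java; `InputFormatSketch` and `splitKeyValue` are hypothetical names used only for illustration, not Hadoop APIs.

```java
// Plain-Java imitation of how KeyValueTextInputFormat divides a line.
class InputFormatSketch {

    // Split at the FIRST occurrence of the separator. If the separator is
    // absent, the whole line becomes the key and the value is empty.
    static String[] splitKeyValue(String line, char separator) {
        int pos = line.indexOf(separator);
        if (pos < 0) {
            return new String[] { line, "" };
        }
        return new String[] { line.substring(0, pos), line.substring(pos + 1) };
    }

    public static void main(String[] args) {
        String line = "a 90";

        // TextInputFormat style: the mapper gets the raw line and splits it.
        String[] fields = line.split(" ");
        System.out.println("TextInputFormat value: \"" + line + "\" -> name="
                + fields[0] + ", score=" + fields[1]);

        // KeyValueTextInputFormat style: the mapper gets key and value separately.
        String[] kv = splitKeyValue(line, ' ');
        System.out.println("KeyValueTextInputFormat key=" + kv[0] + ", value=" + kv[1]);
    }
}
```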

TextInputFormat version:

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class AveScore {

        // With the default TextInputFormat, the key is the line's byte offset
        // and the value is the line itself, e.g. "a 90".
        public static class AveMapper extends Mapper<Object, Text, Text, IntWritable> {
            @Override
            public void map(Object key, Text value, Context context)
                    throws IOException, InterruptedException {
                String line = value.toString();
                String[] strs = line.split(" ");
                String name = strs[0];
                int score = Integer.parseInt(strs[1]);
                // Emit (name, score).
                context.write(new Text(name), new IntWritable(score));
            }
        }

        // Receives every score emitted for one name and writes the average.
        public static class AveReducer extends Reducer<Text, IntWritable, Text, IntWritable> {
            @Override
            public void reduce(Text key, Iterable<IntWritable> values, Context context)
                    throws IOException, InterruptedException {
                int sum = 0;
                int count = 0;
                for (IntWritable val : values) {
                    sum += val.get();
                    count++;
                }
                // Integer division: any fractional part is discarded.
                int aveScore = sum / count;
                context.write(key, new IntWritable(aveScore));
            }
        }

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            // Job.getInstance replaces the deprecated new Job(conf, name) constructor.
            Job job = Job.getInstance(conf, "AverageScore");
            job.setJarByClass(AveScore.class);
            job.setMapperClass(AveMapper.class);
            job.setReducerClass(AveReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);
            // args[0] is the input path, args[1] the output path.
            FileInputFormat.addInputPath(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }
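One thing to watch in the mapper above: `line.split(" ")` assumes exactly one space between name and score. A line such as `a  90` (two spaces) yields an empty string at index 1, and `Integer.parseInt` then throws a `NumberFormatException`, as it also does for blank lines or non-numeric scores. The sketch below shows one possible defensive parse. `ScoreLineParser` and `parseScore` are hypothetical names, not part of the original demo, and whether malformed lines should be skipped or should fail the job is a decision for the job's author.

```java
import java.util.OptionalInt;

// Hypothetical helper for illustration; not part of the original AveScore class.
class ScoreLineParser {

    // Returns the score from a "name score" line, or empty if the line is malformed.
    static OptionalInt parseScore(String line) {
        // Split on any run of whitespace so repeated spaces or tabs are tolerated.
        String[] parts = line.trim().split("\\s+");
        if (parts.length != 2) {
            return OptionalInt.empty();
        }
        try {
            return OptionalInt.of(Integer.parseInt(parts[1]));
        } catch (NumberFormatException e) {
            return OptionalInt.empty();
        }
    }

    public static void main(String[] args) {
        String[] samples = { "a 90", "a  90", "", "a ninety" };
        for (String sample : samples) {
            OptionalInt score = parseScore(sample);
            System.out.println("\"" + sample + "\" -> "
                    + (score.isPresent() ? "score " + score.getAsInt() : "skipped"));
        }
    }
}
```

Inside a mapper, a line for which `parseScore` returns empty could simply be skipped by returning from `map` without calling `context.write`.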

KeyValueTextInputFormat version:

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.input.KeyValueTextInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

    public class AveScore_KeyValue {

        // KeyValueTextInputFormat has already split the line, so the key is
        // the name and the value is the score as text.
        public static class AveMapper extends Mapper<Text, Text, Text, IntWritable> {
            @Override
            public void map(Text key, Text value, Context context)
                    throws IOException, InterruptedException {
                int score = Integer.parseInt(value.toString());
                context.write(key, new IntWritable(score));
            }
        }

        // Receives every score emitted for one name and writes the average.
        public static class AveReducer extends Reducer<Text, IntWritable, Text, IntWritable> {
            @Override
            public void reduce(Text key, Iterable<IntWritable> values, Context context)
                    throws IOException, InterruptedException {
                int sum = 0;
                int count = 0;
                for (IntWritable val : values) {
                    sum += val.get();
                    count++;
                }
                // Integer division: any fractional part is discarded.
                int aveScore = sum / count;
                context.write(key, new IntWritable(aveScore));
            }
        }

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            // The default separator is a tab; the input here uses a space.
            // Set it before creating the Job, which takes a copy of conf.
            conf.set("mapreduce.input.keyvaluelinerecordreader.key.value.separator", " ");
            // Job.getInstance replaces the deprecated new Job(conf, name) constructor.
            Job job = Job.getInstance(conf, "AverageScore");
            job.setJarByClass(AveScore_KeyValue.class);
            job.setMapperClass(AveMapper.class);
            job.setReducerClass(AveReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);
            job.setInputFormatClass(KeyValueTextInputFormat.class);
            job.setOutputFormatClass(TextOutputFormat.class);
            // args[0] is the input path, args[1] the output path.
            FileInputFormat.addInputPath(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }

The output is:

    a 95
    b 85
    c 75
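These averages are whole numbers only because each pair of sample scores has an even sum. The reducer computes `sum / count` with `int` operands, so scores of 90 and 85 would produce 87 rather than 87.5. The sketch below contrasts the two calculations in plain Java; `AveragePrecisionSketch` and its methods are illustrative names, not part of the original code.

```java
// Plain-Java comparison of integer and floating-point averaging.
class AveragePrecisionSketch {

    // Same arithmetic as the reducer: int division discards the fraction.
    static int truncatedAverage(int[] scores) {
        int sum = 0;
        for (int score : scores) {
            sum += score;
        }
        return sum / scores.length;
    }

    // Casting the sum to double keeps the fractional part.
    static double exactAverage(int[] scores) {
        int sum = 0;
        for (int score : scores) {
            sum += score;
        }
        return (double) sum / scores.length;
    }

    public static void main(String[] args) {
        int[] scores = { 90, 85 };
        System.out.println(truncatedAverage(scores)); // prints 87
        System.out.println(exactAverage(scores));     // prints 87.5
    }
}
```

If fractional averages were wanted in the Hadoop job, one option would be for the reducer to emit `DoubleWritable` (from `org.apache.hadoop.io`) instead of `IntWritable`. The driver would then need `job.setOutputValueClass(DoubleWritable.class)` and also `job.setMapOutputValueClass(IntWritable.class)`, since the map output and final output value types would no longer match.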

Author: qiul12345. Posted 2013-8-23 21:51:03.

Original article: Hadoop HelloWord Examples- 求平均数. Thanks to the original author for sharing.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Java Errors: Hadoop Errors, How to Handle and Avoid

Jun 24, 2023 pm 01:06 PM

Java Errors: Hadoop Errors, How to Handle and Avoid

Jun 24, 2023 pm 01:06 PM

Java Errors: Hadoop Errors, How to Handle and Avoid When using Hadoop to process big data, you often encounter some Java exception errors, which may affect the execution of tasks and cause data processing to fail. This article will introduce some common Hadoop errors and provide ways to deal with and avoid them. Java.lang.OutOfMemoryErrorOutOfMemoryError is an error caused by insufficient memory of the Java virtual machine. When Hadoop is

Using Hadoop and HBase in Beego for big data storage and querying

Jun 22, 2023 am 10:21 AM

Using Hadoop and HBase in Beego for big data storage and querying

Jun 22, 2023 am 10:21 AM

With the advent of the big data era, data processing and storage have become more and more important, and how to efficiently manage and analyze large amounts of data has become a challenge for enterprises. Hadoop and HBase, two projects of the Apache Foundation, provide a solution for big data storage and analysis. This article will introduce how to use Hadoop and HBase in Beego for big data storage and query. 1. Introduction to Hadoop and HBase Hadoop is an open source distributed storage and computing system that can

How to use PHP and Hadoop for big data processing

Jun 19, 2023 pm 02:24 PM

How to use PHP and Hadoop for big data processing

Jun 19, 2023 pm 02:24 PM

As the amount of data continues to increase, traditional data processing methods can no longer handle the challenges brought by the big data era. Hadoop is an open source distributed computing framework that solves the performance bottleneck problem caused by single-node servers in big data processing through distributed storage and processing of large amounts of data. PHP is a scripting language that is widely used in web development and has the advantages of rapid development and easy maintenance. This article will introduce how to use PHP and Hadoop for big data processing. What is HadoopHadoop is

Excel removes the highest score and the lowest score and calculates the average

Mar 20, 2024 am 09:45 AM

Excel removes the highest score and the lowest score and calculates the average

Mar 20, 2024 am 09:45 AM

Computers have become a standard configuration for modern work, so office software is also a basic operation that needs to be mastered at work. With the development of technology, the functions of office software are becoming increasingly powerful. Excel is often used in practical work due to its powerful functions. As a data display, Excel is clear and intuitive. As a calculation software, Excel is convenient and accurate. Excel can perform sums, summarization, and average calculations. Today we will teach you how to remove the highest score and the lowest score in Excel and calculate the average. After opening the table, I found that the highest score in the table was 100 points and the lowest score was 66 points. Therefore, we need to calculate the average of the other scores except these two scores. 2. Click the function icon (as shown below). 3. Use the TRIMMEAN function. 4.This

Explore the application of Java in the field of big data: understanding of Hadoop, Spark, Kafka and other technology stacks

Dec 26, 2023 pm 02:57 PM

Explore the application of Java in the field of big data: understanding of Hadoop, Spark, Kafka and other technology stacks

Dec 26, 2023 pm 02:57 PM

Java big data technology stack: Understand the application of Java in the field of big data, such as Hadoop, Spark, Kafka, etc. As the amount of data continues to increase, big data technology has become a hot topic in today's Internet era. In the field of big data, we often hear the names of Hadoop, Spark, Kafka and other technologies. These technologies play a vital role, and Java, as a widely used programming language, also plays a huge role in the field of big data. This article will focus on the application of Java in large

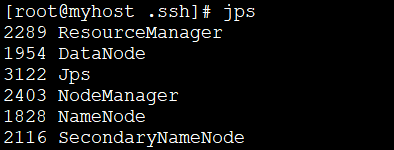

How to install Hadoop in linux

May 18, 2023 pm 08:19 PM

How to install Hadoop in linux

May 18, 2023 pm 08:19 PM

1: Install JDK1. Execute the following command to download the JDK1.8 installation package. wget--no-check-certificatehttps://repo.huaweicloud.com/java/jdk/8u151-b12/jdk-8u151-linux-x64.tar.gz2. Execute the following command to decompress the downloaded JDK1.8 installation package. tar-zxvfjdk-8u151-linux-x64.tar.gz3. Move and rename the JDK package. mvjdk1.8.0_151//usr/java84. Configure Java environment variables. echo'

Use PHP to achieve large-scale data processing: Hadoop, Spark, Flink, etc.

May 11, 2023 pm 04:13 PM

Use PHP to achieve large-scale data processing: Hadoop, Spark, Flink, etc.

May 11, 2023 pm 04:13 PM

As the amount of data continues to increase, large-scale data processing has become a problem that enterprises must face and solve. Traditional relational databases can no longer meet this demand. For the storage and analysis of large-scale data, distributed computing platforms such as Hadoop, Spark, and Flink have become the best choices. In the selection process of data processing tools, PHP is becoming more and more popular among developers as a language that is easy to develop and maintain. In this article, we will explore how to leverage PHP for large-scale data processing and how

Data processing engines in PHP (Spark, Hadoop, etc.)

Jun 23, 2023 am 09:43 AM

Data processing engines in PHP (Spark, Hadoop, etc.)

Jun 23, 2023 am 09:43 AM

In the current Internet era, the processing of massive data is a problem that every enterprise and institution needs to face. As a widely used programming language, PHP also needs to keep up with the times in data processing. In order to process massive data more efficiently, PHP development has introduced some big data processing tools, such as Spark and Hadoop. Spark is an open source data processing engine that can be used for distributed processing of large data sets. The biggest feature of Spark is its fast data processing speed and efficient data storage.