MongoDB Data Architecture and Schema Modeling: A Talk


Below are the slides (PPT) from a talk I gave earlier at my company on MongoDB data architecture and schema modeling.

MongoDB Data Model & Schema Design

The talk is organized around four levels:

- Instance

- Databases (dbs)

- Collections

- Documents
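The four levels nest inside one another: an instance holds databases, a database holds collections, and a collection holds documents. The sketch below is only an illustration of that nesting using plain Python dicts and lists, not the slides' own material and not a real MongoDB connection; the names `shop_db`, `logs_db`, `orders`, `customers`, `events`, and the `describe` helper are all hypothetical.

```python
# Hypothetical stand-in for one MongoDB instance, built from plain Python
# containers. Every name here is made up for illustration.
instance = {
    "shop_db": {  # database level
        "orders": [  # collection level
            # document level: nested fields and arrays are allowed
            {"_id": 1, "customer": "alice", "items": [{"sku": "A1", "qty": 2}]},
            {"_id": 2, "customer": "bob", "items": [{"sku": "B7", "qty": 1}]},
        ],
        "customers": [
            {"_id": "alice", "city": "Shanghai"},
        ],
    },
    "logs_db": {
        "events": [],  # a collection with no documents yet
    },
}


def describe(inst):
    """Return one (database, collection, document_count) row per collection."""
    rows = []
    for db_name, collections in inst.items():
        for coll_name, documents in collections.items():
            rows.append((db_name, coll_name, len(documents)))
    return rows


for row in describe(instance):
    print(row)
```

Walking the structure top-down like this mirrors the order of the four levels: design decisions at the instance and database level constrain what is sensible at the collection and document level.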

The slides alone are fairly sketchy, so over the weekend I will add a detailed walkthrough and share it as a video.

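Original article: "Mongodb 数据架构与表结构建模 分享" (MongoDB Data Architecture and Schema Modeling: A Talk). Thanks to the original author for sharing.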

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

AI startups collectively switched jobs to OpenAI, and the security team regrouped after Ilya left!

Jun 08, 2024 pm 01:00 PM

AI startups collectively switched jobs to OpenAI, and the security team regrouped after Ilya left!

Jun 08, 2024 pm 01:00 PM

Last week, amid the internal wave of resignations and external criticism, OpenAI was plagued by internal and external troubles: - The infringement of the widow sister sparked global heated discussions - Employees signing "overlord clauses" were exposed one after another - Netizens listed Ultraman's "seven deadly sins" Rumors refuting: According to leaked information and documents obtained by Vox, OpenAI’s senior leadership, including Altman, was well aware of these equity recovery provisions and signed off on them. In addition, there is a serious and urgent issue facing OpenAI - AI safety. The recent departures of five security-related employees, including two of its most prominent employees, and the dissolution of the "Super Alignment" team have once again put OpenAI's security issues in the spotlight. Fortune magazine reported that OpenA

How steep is the learning curve of golang framework architecture?

Jun 05, 2024 pm 06:59 PM

How steep is the learning curve of golang framework architecture?

Jun 05, 2024 pm 06:59 PM

The learning curve of the Go framework architecture depends on familiarity with the Go language and back-end development and the complexity of the chosen framework: a good understanding of the basics of the Go language. It helps to have backend development experience. Frameworks that differ in complexity lead to differences in learning curves.

70B model generates 1,000 tokens in seconds, code rewriting surpasses GPT-4o, from the Cursor team, a code artifact invested by OpenAI

Jun 13, 2024 pm 03:47 PM

70B model generates 1,000 tokens in seconds, code rewriting surpasses GPT-4o, from the Cursor team, a code artifact invested by OpenAI

Jun 13, 2024 pm 03:47 PM

70B model, 1000 tokens can be generated in seconds, which translates into nearly 4000 characters! The researchers fine-tuned Llama3 and introduced an acceleration algorithm. Compared with the native version, the speed is 13 times faster! Not only is it fast, its performance on code rewriting tasks even surpasses GPT-4o. This achievement comes from anysphere, the team behind the popular AI programming artifact Cursor, and OpenAI also participated in the investment. You must know that on Groq, a well-known fast inference acceleration framework, the inference speed of 70BLlama3 is only more than 300 tokens per second. With the speed of Cursor, it can be said that it achieves near-instant complete code file editing. Some people call it a good guy, if you put Curs

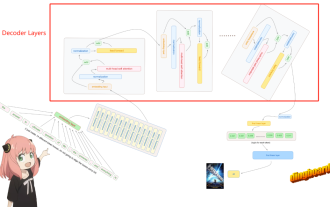

Hand-tearing Llama3 layer 1: Implementing llama3 from scratch

Jun 01, 2024 pm 05:45 PM

Hand-tearing Llama3 layer 1: Implementing llama3 from scratch

Jun 01, 2024 pm 05:45 PM

1. Architecture of Llama3 In this series of articles, we implement llama3 from scratch. The overall architecture of Llama3: Picture the model parameters of Llama3: Let's take a look at the actual values of these parameters in the Llama3 model. Picture [1] Context window (context-window) When instantiating the LlaMa class, the variable max_seq_len defines context-window. There are other parameters in the class, but this parameter is most directly related to the transformer model. The max_seq_len here is 8K. Picture [2] Vocabulary-size and AttentionL

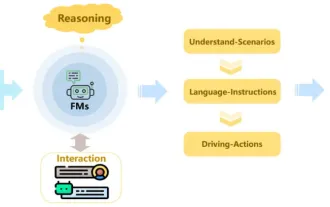

Review! Comprehensively summarize the important role of basic models in promoting autonomous driving

Jun 11, 2024 pm 05:29 PM

Review! Comprehensively summarize the important role of basic models in promoting autonomous driving

Jun 11, 2024 pm 05:29 PM

Written above & the author’s personal understanding: Recently, with the development and breakthroughs of deep learning technology, large-scale foundation models (Foundation Models) have achieved significant results in the fields of natural language processing and computer vision. The application of basic models in autonomous driving also has great development prospects, which can improve the understanding and reasoning of scenarios. Through pre-training on rich language and visual data, the basic model can understand and interpret various elements in autonomous driving scenarios and perform reasoning, providing language and action commands for driving decision-making and planning. The base model can be data augmented with an understanding of the driving scenario to provide those rare feasible features in long-tail distributions that are unlikely to be encountered during routine driving and data collection.

China Mobile: Humanity is entering the fourth industrial revolution and officially announced 'three plans”

Jun 27, 2024 am 10:29 AM

China Mobile: Humanity is entering the fourth industrial revolution and officially announced 'three plans”

Jun 27, 2024 am 10:29 AM

According to news on June 26, at the opening ceremony of the 2024 World Mobile Communications Conference Shanghai (MWC Shanghai), China Mobile Chairman Yang Jie delivered a speech. He said that currently, human society is entering the fourth industrial revolution, which is dominated by information and deeply integrated with information and energy, that is, the "digital intelligence revolution", and the formation of new productive forces is accelerating. Yang Jie believes that from the "mechanization revolution" driven by steam engines, to the "electrification revolution" driven by electricity, internal combustion engines, etc., to the "information revolution" driven by computers and the Internet, each round of industrial revolution is based on "information and "Energy" is the main line, bringing productivity development

An American professor used his 2-year-old daughter to train an AI model to appear in Science! Human cubs use head-mounted cameras to train new AI

Jun 03, 2024 am 10:08 AM

An American professor used his 2-year-old daughter to train an AI model to appear in Science! Human cubs use head-mounted cameras to train new AI

Jun 03, 2024 am 10:08 AM

Unbelievably, in order to train an AI model, a professor from the State University of New York strapped a GoPro-like camera to his daughter’s head! Although it sounds incredible, this professor's behavior is actually well-founded. To train the complex neural network behind LLM, massive data is required. Is our current LLM training process necessarily the simplest and most efficient way? Certainly not! Scientists have discovered that in human toddlers, the brain absorbs water like a sponge, quickly forming a coherent worldview. Although LLM performs amazingly at times, over time, human children become smarter and more creative than the model! The secret of children mastering language. How to train LLM in a better way? When scientists are puzzled by the solution,

The inside story of Google's search algorithm was revealed, and 2,500 pages of documents were leaked with real names! Search Ranking Lies Exposed

Jun 11, 2024 am 09:14 AM

The inside story of Google's search algorithm was revealed, and 2,500 pages of documents were leaked with real names! Search Ranking Lies Exposed

Jun 11, 2024 am 09:14 AM

Recently, 2,500 pages of internal Google documents were leaked, revealing how search, "the Internet's most powerful arbiter," operates. SparkToro's co-founder and CEO is an anonymous person. He published a blog post on his personal website, claiming that "an anonymous person shared with me thousands of pages of leaked Google Search API documentation that everyone in SEO should read." Go to them! "For many years, RandFishkin has been the top spokesperson in the field of SEO (Search Engine Optimization, search engine optimization), and he proposed the concept of "website authority" (DomainRating). Since he is highly respected in this field, RandFishkin