Usage examples of url-extract, a NodeJS URL capture module

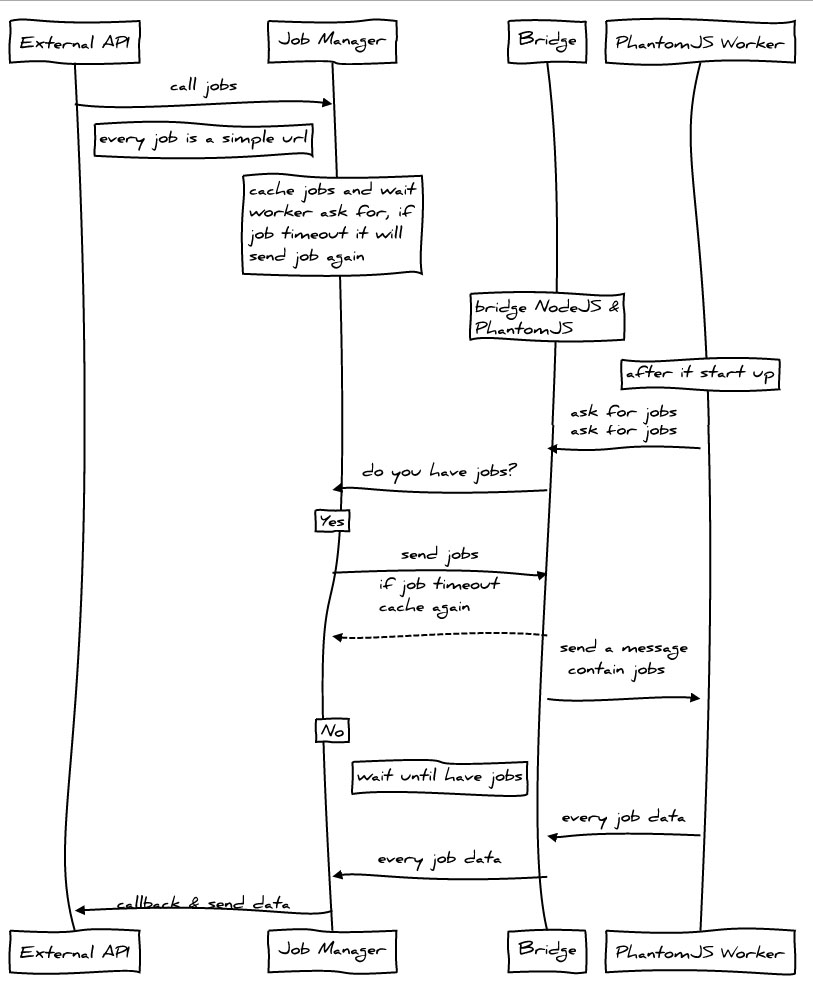

Last time we introduced how to take screenshots with NodeJS and PhantomJS. Because that approach started a new PhantomJS process for every screenshot, efficiency suffered as concurrency grew, so we rewrote all the code and packaged it as a standalone module that is easy to call.

How can this be improved? Control the number of threads and the number of URLs each thread processes. Use standard output and WebSocket for communication. Add a caching mechanism, currently backed by a plain JavaScript object. Expose a simple interface to callers.
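The first point, capping both the number of workers and the jobs each one handles, can be pictured with a small dispatcher. This is only a sketch under assumptions, not url-extract's actual code: the `createDispatcher` function and its return values are hypothetical, and the option names `workerNum`, `maxJob` and `maxQueueJob` are borrowed from the Global configuration section.

```javascript
const os = require('os');

// Hypothetical dispatcher, not url-extract's implementation.
// workerNum:   number of workers (0 = one per CPU)
// maxJob:      jobs a single worker may run at once
// maxQueueJob: waiting jobs allowed (0 = unlimited)
function createDispatcher(config) {
  const workerNum = config.workerNum || os.cpus().length;
  const running = new Array(workerNum).fill(0); // active jobs per worker
  const queue = [];

  return {
    // Returns 'running', 'queued' or 'rejected' for the submitted URL.
    submit(url) {
      // Pick the worker with the fewest active jobs.
      const idx = running.indexOf(Math.min(...running));
      if (running[idx] < config.maxJob) {
        running[idx] += 1;
        return 'running';
      }
      if (config.maxQueueJob === 0 || queue.length < config.maxQueueJob) {
        queue.push(url);
        return 'queued';
      }
      return 'rejected'; // a caller would report status = false here
    },
    load() {
      return { running: running.slice(), queued: queue.length };
    }
  };
}

const dispatcher = createDispatcher({ workerNum: 2, maxJob: 1, maxQueueJob: 1 });
const results = ['a', 'b', 'c', 'd'].map(function (name) {
  return dispatcher.submit('http://example.com/' + name);
});
console.log(results);
```

With two workers, one job each and a queue of one, the first two URLs run, the third waits, and the fourth is turned away.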

Design

Dependencies & Installation

PhantomJS only began supporting WebSocket in version 1.9.0, so first make sure the PhantomJS on your PATH is version 1.9.0 or above. At the command line, type:

$ phantomjs -v

If this prints a 1.9.x version number, you can continue. If the version is too low or an error occurs, download the latest version from the official PhantomJS website.
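The same check can be scripted. The sketch below assumes the version text has the usual `major.minor.patch` form; the function name `supportsWebSocket` is made up for illustration.

```javascript
// Returns true when a version string such as "1.9.2" is at least 1.9.0.
function supportsWebSocket(versionText) {
  const parts = versionText.trim().split('.').map(Number);
  const major = parts[0] || 0;
  const minor = parts[1] || 0;
  return major > 1 || (major === 1 && minor >= 9);
}

console.log(supportsWebSocket('1.9.2')); // true
console.log(supportsWebSocket('1.8.1')); // false
```

In a setup script, the string could come from running `phantomjs -v` through Node's `child_process.execFile`.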

If you have Git installed, or have a Git Shell, then type at the command line:

$ npm install url-extract

This installs the module.

A simple example

For example, to capture the Baidu homepage, we call the snapshot interface with its URL and print the job that comes back.

In the printed job, the image attribute is the path of the screenshot relative to the working directory. The getData interface of Job returns the data in a cleaner form.

In that output, image is the path of the screenshot relative to the working directory, and status indicates whether the job succeeded: true means normal, and false means the screenshot failed.
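A minimal sketch of that flow, assuming the callback passed to `snapshot` receives a Job object and that `getData()` returns at least `image` and `status`. The helper `captureHomepage`, the stand-in `fakeUrlExtract` and the file path are hypothetical; the stand-in exists only so the example runs without PhantomJS.

```javascript
// Hypothetical helper: `urlExtract` is the loaded module (or a stand-in with
// the same snapshot(url, callback) shape), passed in as an argument.
function captureHomepage(urlExtract, url, done) {
  urlExtract.snapshot(url, function (job) {
    // Hand back the cleaner data instead of the raw job.
    done(job.getData());
  });
}

// Stand-in for the real module, for demonstration only. The path is made up.
const fakeUrlExtract = {
  snapshot(url, callback) {
    callback({
      getData() {
        return { image: 'snapshot/example.png', status: true };
      }
    });
  }
};

let captured;
captureHomepage(fakeUrlExtract, 'http://www.baidu.com', function (data) {
  captured = data;
  console.log(data.status ? 'saved to ' + data.image : 'screenshot failed');
});
```

With the module installed, the first argument would be `require('url-extract')` instead of the stand-in.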

For more examples, please see: https://github.com/miniflycn/url-extract/tree/master/examples

Main API

.snapshot

Takes a snapshot of a URL

.snapshot(url, [callback])
.snapshot(urls, [callback])
.snapshot(url, [option])
.snapshot(urls, [option])

url {String} The address to capture

urls {Array} Array of addresses to capture

callback {Function} Callback function

option {Object} Optional parameters

┝ id {String} Custom id for the URL. Has no effect if the first parameter is urls

┝ image {String} Custom save path for the screenshot. Has no effect if the first parameter is urls

┝ groupId {String} groupId for a group of URLs, used to identify the group in the results

┝ ignoreCache {Boolean} Whether to ignore the cache

┗ callback {Function} Callback function

.extract

Grab url information and get snapshots

.extract(url, [callback])
.extract(urls, [callback])
.extract(url, [option])
.extract(urls, [option])

url {String} The address to capture

urls {Array} Array of addresses to capture

callback {Function} Callback function

option {Object} Optional parameters

┝ id {String} Custom id for the URL. Has no effect if the first parameter is urls

┝ image {String} Custom save path for the screenshot. Has no effect if the first parameter is urls

┝ groupId {String} groupId for a group of URLs, used to identify the group in the results

┝ ignoreCache {Boolean} Whether to ignore the cache

┗ callback {Function} callback function
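The four call forms share one pattern: a single URL or an array first, then either a callback or an option object. The sketch below shows one way such arguments could be normalised. It is not the library's code: `normalizeArgs` is a hypothetical name, the paths and URLs are made up, and treating "has no effect" as "dropped" is an assumption.

```javascript
// Hypothetical normaliser for the documented call forms.
function normalizeArgs(target, second) {
  const isList = Array.isArray(target);
  const urls = isList ? target : [target];
  // A bare function is shorthand for { callback: fn }.
  const option = typeof second === 'function'
    ? { callback: second }
    : Object.assign({}, second);
  if (isList) {
    // id and image only apply to a single URL, so drop them for arrays.
    delete option.id;
    delete option.image;
  }
  return { urls: urls, option: option };
}

const single = normalizeArgs('http://www.baidu.com', {
  id: 'baidu',
  image: 'snapshot/baidu.png',
  ignoreCache: true
});
const group = normalizeArgs(['http://www.baidu.com', 'http://example.com'], {
  id: 'dropped',
  groupId: 'portals'
});
console.log(single.option, group.option);
```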

Job (class)

Each URL corresponds to a job object, and the relevant information of the URL is stored in the job object.
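As a rough picture of such an object, the sketch below mirrors the fields and the `getData()` method listed in this section. It is an illustration under assumptions, not the module's source: the constructor signature, the link between `ignoreCache` and `cache`, and the exact shape returned by `getData()` (only `image` and `status` are documented in this article) are all guesses.

```javascript
// Hypothetical Job shape based on the documented fields.
function Job(url, option) {
  option = option || {};
  this.url = url;                          // link address
  this.content = Boolean(option.content);  // also fetch title and description?
  this.id = option.id;
  this.groupId = option.groupId;
  this.cache = !option.ignoreCache;        // assumed link between the two flags
  this.callback = option.callback;
  this.image = option.image;
  this.status = true;                      // set to false when the job fails
}

// Assumed output: just the two attributes the article describes.
Job.prototype.getData = function () {
  return { image: this.image, status: this.status };
};

const job = new Job('http://www.baidu.com', {
  id: 'baidu',
  image: 'snapshot/baidu.png' // made-up path
});
console.log(job.getData());
```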

Field

url {String} Link address

content {Boolean} Whether to fetch the page's title and description

id {String} The job's id

groupId {String} Group id shared by a set of jobs

cache {Boolean} Whether caching is enabled

callback {Function} Callback function

image {String} Image address

status {Boolean} Whether the job is currently in a normal state

Prototype

getData() Gets the job's data

Global configuration

Global settings live in the config file in the url-extract root directory. The defaults are as follows:

module.exports = {
  wsPort: 3001,
  maxJob: 100,
  maxQueueJob: 400,
  cache: 'object',
  maxCache: 10000,
  workerNum: 0
};

wsPort {Number} Port used by the WebSocket

maxJob {Number} Number of concurrent jobs each PhantomJS thread can run

maxQueueJob {Number} Maximum number of waiting jobs; 0 means no limit. Beyond this number, any new job fails immediately (i.e. status = false)

cache {String} Cache implementation; currently only object is implemented

maxCache {Number} Maximum number of cached links

workerNum {Number} Number of PhantomJS threads; 0 means the same as the number of CPUs

A simple service example

https://github.com/miniflycn/url-extract-server-example

Note that connect and url-extract need to be installed:

$ npm install

If you downloaded the files from the network disk instead, install connect:

$ npm install connect

Then type:

$ node bin/server

Open:

View the effect.
