PHP3 Chinese Documentation (reposted)

Chapter 1. Getting Started with PHP3

What is PHP3?

PHP version 3.0 is a server-side, HTML-embedded scripting language.

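What can PHP3 do?

Perhaps the strongest and most significant feature of PHP3 is its database integration layer, which makes writing a database-enabled web page remarkably simple. The following databases are currently supported: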

Oracle

Adabas D

Sybase

FilePro

mSQL

Velocis

MySQL

Informix

Solid

dBase

ODBC

Unix dbm

PostgreSQL

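A brief history of PHP

PHP was conceived in the autumn of 1994 by Rasmus Lerdorf. Early unreleased versions were used on his own home page to keep track of who was looking at his online resume. The first version used by other people was released in 1995 under the name Personal Home Page Tools. It consisted of a very simple parser engine that understood only a few designated macros, plus some utilities then common on home-page back ends: a guestbook, a counter, and a few other pieces. In mid-1995 the parser was rewritten and named PHP/FI version 2.0. The FI came from another package Rasmus had written, one that accepted HTML form data. He combined the Personal Home Page Tools scripts with the Form Interpreter and added mSQL support, and PHP/FI 2.0 was born. PHP/FI grew at an astonishing pace, and other people began to improve and modify its source code.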

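Precise statistics are hard to come by, but it is estimated that by late 1996 at least 15,000 web sites were using PHP/FI 2.0, and by mid-1997 that number had grown to 50,000. Mid-1997 also brought a change in how PHP was developed: it went from being Rasmus's pet project to a more organized team effort. The parser was rewritten by Zeev Suraski and Andi Gutmans, and this new parser formed the basis of PHP3. Much of the utility code from PHP/FI was rewritten and carried over into PHP3.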

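Today (mid-1998), a number of commercial products, such as C2's StrongHold web server and Red Hat Linux, have started to support PHP3 or PHP/FI. A conservative extrapolation from figures provided by NetCraft suggests that about 150,000 web sites around the world now use PHP or PHP/FI. To put that in perspective, that is far more sites on the Internet than run Netscape's flagship Enterprise server.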

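HTTP authentication with PHP3

HTTP authentication is available only when PHP runs as an Apache module. In an Apache-module PHP script, you can use the Header() function to send an "Authentication Required" message to the client browser, which makes the browser pop up a username/password dialog. Once the user has entered a username and password, the URL containing the PHP script is requested again, this time with the variables $PHP_AUTH_USER, $PHP_AUTH_PW, and $PHP_AUTH_TYPE set to the username, the password, and the authentication type, respectively. Only "BASIC" authentication is currently supported.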
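The challenge-and-retry flow described above can be sketched roughly as follows. This is a minimal illustration, not code from the original manual: the function names `auth_challenge_headers`, `read_credentials`, and `handle_request` and the realm "My Realm" are hypothetical. The sketch reads the credentials from an array so that it also runs on current PHP, where the same values are exposed as `$_SERVER['PHP_AUTH_USER']` and `$_SERVER['PHP_AUTH_PW']` rather than as the PHP3 global variables named in the text.

```php
<?php
// Sketch only: these function names are hypothetical, not part of PHP.

// Build the two header lines that make a browser show its
// username/password dialog for Basic authentication.
function auth_challenge_headers($realm)
{
    return array(
        'WWW-Authenticate: Basic realm="' . $realm . '"',
        'HTTP/1.0 401 Unauthorized'
    );
}

// Extract the credentials from the request variables.
// PHP3 exposed them as the globals $PHP_AUTH_USER and $PHP_AUTH_PW;
// here they are looked up by the same names in an array (assumption).
function read_credentials($vars)
{
    if (!isset($vars['PHP_AUTH_USER'])) {
        return null; // no credentials yet, so a challenge is needed
    }
    return array(
        'user'     => $vars['PHP_AUTH_USER'],
        'password' => isset($vars['PHP_AUTH_PW']) ? $vars['PHP_AUTH_PW'] : ''
    );
}

// Page logic: send the challenge on the first request,
// greet the user once the browser retries with credentials.
function handle_request($vars)
{
    $credentials = read_credentials($vars);
    if ($credentials === null) {
        foreach (auth_challenge_headers('My Realm') as $line) {
            header($line);
        }
        return "Authentication required.\n";
    }
    return 'Hello ' . htmlspecialchars($credentials['user']) . ".\n";
}
```

A page would call `echo handle_request($_SERVER);` before producing any other output, because headers can only be sent before the response body starts. A real script would also check the password against a stored value; that step is left out here.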

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

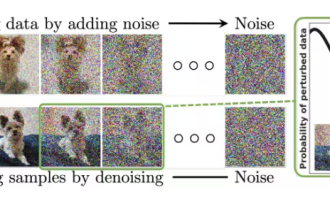

A Diffusion Model Tutorial Worth Your Time, from Purdue University

Apr 07, 2024 am 09:01 AM

A Diffusion Model Tutorial Worth Your Time, from Purdue University

Apr 07, 2024 am 09:01 AM

Diffusion can not only imitate better, but also "create". The diffusion model (DiffusionModel) is an image generation model. Compared with the well-known algorithms such as GAN and VAE in the field of AI, the diffusion model takes a different approach. Its main idea is a process of first adding noise to the image and then gradually denoising it. How to denoise and restore the original image is the core part of the algorithm. The final algorithm is able to generate an image from a random noisy image. In recent years, the phenomenal growth of generative AI has enabled many exciting applications in text-to-image generation, video generation, and more. The basic principle behind these generative tools is the concept of diffusion, a special sampling mechanism that overcomes the limitations of previous methods.

Generate PPT with one click! Kimi: Let the 'PPT migrant workers' become popular first

Aug 01, 2024 pm 03:28 PM

Generate PPT with one click! Kimi: Let the 'PPT migrant workers' become popular first

Aug 01, 2024 pm 03:28 PM

Kimi: In just one sentence, in just ten seconds, a PPT will be ready. PPT is so annoying! To hold a meeting, you need to have a PPT; to write a weekly report, you need to have a PPT; to make an investment, you need to show a PPT; even when you accuse someone of cheating, you have to send a PPT. College is more like studying a PPT major. You watch PPT in class and do PPT after class. Perhaps, when Dennis Austin invented PPT 37 years ago, he did not expect that one day PPT would become so widespread. Talking about our hard experience of making PPT brings tears to our eyes. "It took three months to make a PPT of more than 20 pages, and I revised it dozens of times. I felt like vomiting when I saw the PPT." "At my peak, I did five PPTs a day, and even my breathing was PPT." If you have an impromptu meeting, you should do it

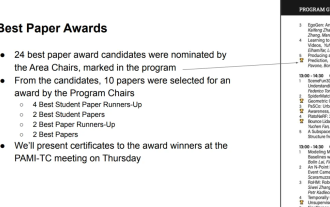

All CVPR 2024 awards announced! Nearly 10,000 people attended the conference offline, and a Chinese researcher from Google won the best paper award

Jun 20, 2024 pm 05:43 PM

All CVPR 2024 awards announced! Nearly 10,000 people attended the conference offline, and a Chinese researcher from Google won the best paper award

Jun 20, 2024 pm 05:43 PM

In the early morning of June 20th, Beijing time, CVPR2024, the top international computer vision conference held in Seattle, officially announced the best paper and other awards. This year, a total of 10 papers won awards, including 2 best papers and 2 best student papers. In addition, there were 2 best paper nominations and 4 best student paper nominations. The top conference in the field of computer vision (CV) is CVPR, which attracts a large number of research institutions and universities every year. According to statistics, a total of 11,532 papers were submitted this year, and 2,719 were accepted, with an acceptance rate of 23.6%. According to Georgia Institute of Technology’s statistical analysis of CVPR2024 data, from the perspective of research topics, the largest number of papers is image and video synthesis and generation (Imageandvideosyn

750,000 rounds of one-on-one battle between large models, GPT-4 won the championship, and Llama 3 ranked fifth

Apr 23, 2024 pm 03:28 PM

750,000 rounds of one-on-one battle between large models, GPT-4 won the championship, and Llama 3 ranked fifth

Apr 23, 2024 pm 03:28 PM

Regarding Llama3, new test results have been released - the large model evaluation community LMSYS released a large model ranking list. Llama3 ranked fifth, and tied for first place with GPT-4 in the English category. The picture is different from other benchmarks. This list is based on one-on-one battles between models, and the evaluators from all over the network make their own propositions and scores. In the end, Llama3 ranked fifth on the list, followed by three different versions of GPT-4 and Claude3 Super Cup Opus. In the English single list, Llama3 overtook Claude and tied with GPT-4. Regarding this result, Meta’s chief scientist LeCun was very happy and forwarded the tweet and

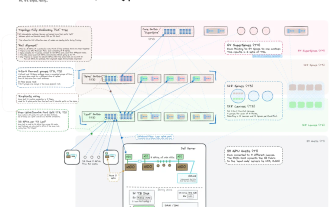

From bare metal to a large model with 70 billion parameters, here is a tutorial and ready-to-use scripts

Jul 24, 2024 pm 08:13 PM

From bare metal to a large model with 70 billion parameters, here is a tutorial and ready-to-use scripts

Jul 24, 2024 pm 08:13 PM

We know that LLM is trained on large-scale computer clusters using massive data. This site has introduced many methods and technologies used to assist and improve the LLM training process. Today, what we want to share is an article that goes deep into the underlying technology and introduces how to turn a bunch of "bare metals" without even an operating system into a computer cluster for training LLM. This article comes from Imbue, an AI startup that strives to achieve general intelligence by understanding how machines think. Of course, turning a bunch of "bare metal" without an operating system into a computer cluster for training LLM is not an easy process, full of exploration and trial and error, but Imbue finally successfully trained an LLM with 70 billion parameters. and in the process accumulate

AI in use | AI created a life vlog of a girl living alone, which received tens of thousands of likes in 3 days

Aug 07, 2024 pm 10:53 PM

AI in use | AI created a life vlog of a girl living alone, which received tens of thousands of likes in 3 days

Aug 07, 2024 pm 10:53 PM

Editor of the Machine Power Report: Yang Wen The wave of artificial intelligence represented by large models and AIGC has been quietly changing the way we live and work, but most people still don’t know how to use it. Therefore, we have launched the "AI in Use" column to introduce in detail how to use AI through intuitive, interesting and concise artificial intelligence use cases and stimulate everyone's thinking. We also welcome readers to submit innovative, hands-on use cases. Video link: https://mp.weixin.qq.com/s/2hX_i7li3RqdE4u016yGhQ Recently, the life vlog of a girl living alone became popular on Xiaohongshu. An illustration-style animation, coupled with a few healing words, can be easily picked up in just a few days.

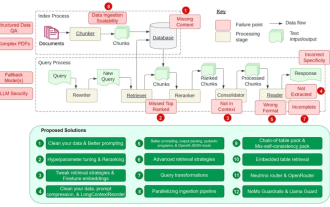

Counting down the 12 pain points of RAG, NVIDIA senior architect teaches solutions

Jul 11, 2024 pm 01:53 PM

Counting down the 12 pain points of RAG, NVIDIA senior architect teaches solutions

Jul 11, 2024 pm 01:53 PM

Retrieval-augmented generation (RAG) is a technique that uses retrieval to boost language models. Specifically, before a language model generates an answer, it retrieves relevant information from an extensive document database and then uses this information to guide the generation process. This technology can greatly improve the accuracy and relevance of content, effectively alleviate the problem of hallucinations, increase the speed of knowledge update, and enhance the traceability of content generation. RAG is undoubtedly one of the most exciting areas of artificial intelligence research. For more details about RAG, please refer to the column article on this site "What are the new developments in RAG, which specializes in making up for the shortcomings of large models?" This review explains it clearly." But RAG is not perfect, and users often encounter some "pain points" when using it. Recently, NVIDIA’s advanced generative AI solution

How to check the version of Douyin

Apr 15, 2024 pm 12:06 PM

How to check the version of Douyin

Apr 15, 2024 pm 12:06 PM

1. Open the Douyin app and click [Me] in the lower right corner to enter the personal page. 2. Click the [Three Stripes] icon in the upper right corner and select the [Settings] option in the pop-up menu bar. 3. In the settings page, scroll to the bottom to view the current version number information of Douyin.