Li Feifei explains 'spatial intelligence', the focus of her startup, which aims to let AI truly understand the world

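The full video of the TED talk in which Li Feifei explains "spatial intelligence", the focus of her startup, has been released.

Some time ago, Reuters exclusively reported that Li Feifei, widely known as the "godmother of AI", was building a startup and had completed a seed funding round.

In describing the startup, one source cited a talk Li Feifei gave at TED in Vancouver, saying that she introduced the concept of spatial intelligence in that talk.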

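Today, Li Feifei posted the full video of her Vancouver TED talk on X. In her post she wrote: "Spatial intelligence is a key piece of the AI puzzle. This is my 2024 TED talk on the journey from evolution to AI, which also touches on how we are building spatial intelligence. Seeing becomes insight, insight turns into understanding, and understanding leads to action. All of these give rise to intelligence."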

Link to Li Feifei's TED talk:

https://www.ted.com/talks/fei_fei_li_with_spatial_intelligence_ai_will_understand_the_real_world/transcript

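To further explain the concept of "spatial intelligence", she showed a picture of a cat reaching out a paw to push a glass toward the edge of a table. In a split second, she said, the human brain can assess "the geometry of this glass, its place in three-dimensional space, its relationship with the table, the cat, and everything else", then predict what will happen and act to prevent it.

She said: "Nature has created a virtuous cycle of seeing and doing, powered by spatial intelligence." She added that her lab at Stanford University is trying to teach computers "how to act in the three-dimensional world", for example by using a large language model to let a robotic arm carry out tasks such as opening a door or making a sandwich in response to verbal instructions.

The following is a transcript of Li Feifei's 2024 TED talk: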

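Let me show you something first. This is the world 540 million years ago, filled with pure, endless darkness. It was dark not for lack of light, but for lack of eyes to see. Although sunlight penetrated the ocean surface to depths of 1,000 meters, and light from hydrothermal vents lit up a seafloor teeming with life, there was not a single eye in those ancient waters: no retinas, no corneas, no lenses. So all that light, and all that life, went unseen.

There was a time when the very concept of "seeing" did not exist, until the trilobites appeared. They were the first creatures able to sense light, marking the beginning of an entirely new world. For the first time, they became aware that there was a wider world beyond themselves.

This ability to see may have helped trigger the Cambrian explosion, a period in which a huge variety of animal species began to appear in the fossil record. From passively sensing light to actively using vision to understand the world, the nervous systems of organisms began to evolve; sight turned into insight, which in turn guided action, and ultimately gave rise to intelligence.

Today we are no longer satisfied with the visual intelligence nature gave us. We want to create machines that can "see" as intelligently as we do, or even more so.

Nine years ago, on this stage, I presented early progress in computer vision, a subfield of artificial intelligence. At that time, neural network algorithms, graphics processing units (GPUs), and big data came together for the first time and ushered in the era of modern AI. One example of such data is ImageNet, a dataset of 15 million images that my lab spent years curating. Progress has been rapid: since those first image-labeling results, algorithms have improved markedly in both speed and accuracy. We have even developed algorithms that can recognize the objects in an image and predict the relationships among them. This work was done by my students and collaborators.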

Last time, I also showed you the first computer vision algorithm that can describe a photo in natural human language. That was work I did with my student Andrej Karpathy. At the time, I pushed my luck and asked, "Andrej, can we make computers do the reverse?" Andrej laughed and said, "Haha, that's impossible." As you can see from this post, the impossible has recently become possible. This is thanks to a family of diffusion models that power today's generative AI algorithms, which can turn a human-written prompt into entirely new photos and videos.

Many of you have seen the impressive videos created by OpenAI's Sora. But even without massive GPU resources, my students and our collaborators developed a generative video model called Walt a few months before Sora.

Even so, we are still exploring and improving. The generated videos still have imperfections: look at the detail in the cat's eyes, and at how it moves through the waves without ever getting wet. But as past experience has taught us, we will learn from these mistakes, keep improving, and create the future we imagine. In that future, we hope AI will do more things for us, or help us do them better.

I have been saying for years that taking a picture is not the same as truly "seeing" and understanding. Today, I would like to add something: merely seeing is not enough. Real "seeing" is for doing and learning. When we act in three-dimensional space and time, we learn, and through observation we learn to act better. Nature has created a virtuous cycle, powered by "spatial intelligence", that links seeing and acting.

To illustrate how spatial intelligence works, take a look at this photo. If you have a sudden urge to do something, it means that your brain has instantaneously analyzed the geometry of the glass, its position in space, and its relationship to surrounding objects. This urge to act is inherent in all creatures with spatial intelligence, and it closely links perception and action.

If we want artificial intelligence to go beyond its current capabilities, we need it not only to see and speak, but also to act. We have made exciting progress on this front. The latest milestone in spatial intelligence is teaching computers to see, learn, and act, and to keep learning how to see and act better. This is not easy, because it took nature millions of years to evolve spatial intelligence, which relies on the eyes receiving light and on converting the resulting two-dimensional images into three-dimensional information.

Only recently, a team of researchers from Google developed an algorithm to transform a set of photos into a three-dimensional space, like the example we show here. My students and our collaborators took it a step further and created an algorithm that takes as input only an image and converts it into a three-dimensional shape. Here are some more examples.

Recall that we talked about a computer program that could convert human verbal descriptions into videos. A team of researchers at the University of Michigan has found a way to translate a sentence into a three-dimensional room layout. My colleagues at Stanford and our students and I developed an algorithm that takes as input only one image and creates an infinite number of possible spaces for the viewer to explore.

These are exciting advances in the field of spatial intelligence, and they point to what our future world could look like. In that world, humans will be able to translate the entire world into digital form: a digital world capable of modeling the richness and nuances of the real one.

As the progress of spatial intelligence accelerates, this new era of virtuous cycle is unfolding before our eyes. This back-and-forth interaction is catalyzing robot learning, a key component of any embodied intelligence system that needs to understand and interact with the three-dimensional world.

A decade ago, ImageNet, developed in my lab, provided a database of millions of high-quality photos for training computer vision. Today, we are building an "ImageNet" of behaviors and actions to train computers and robots to act in a three-dimensional world. This time, rather than collecting static images, we are building simulation environments driven by three-dimensional spatial models. This gives computers an endless range of possibilities for learning how to act.

We are also making exciting progress in robotic language intelligence. Using input based on large language models, my students and collaborators became the first team to create a robot arm that can perform a variety of tasks based on verbal commands, such as opening a drawer, unplugging a phone from its charging cable, or making a sandwich with bread, lettuce, and tomato, and even putting out a napkin for you. Normally I would expect a bit more from a sandwich than a robotic arm can deliver, but it's a good start.

In that primordial ocean of ancient times, the ability to see and perceive the surrounding environment set off the Cambrian explosion of species. Today, that light is reaching "life in digital form". Spatial intelligence allows machines to interact not only with each other, but also with humans and with the three-dimensional world, whether real or virtual. As this future takes shape, it will have a profound impact on many people's lives.

Let's take healthcare as an example. Over the past decade, my lab has taken some of the first steps in applying artificial intelligence to challenges that affect patient outcomes and health care worker fatigue.

We are piloting smart sensors with collaborators at Stanford School of Medicine and other hospitals. The sensors can detect when clinicians enter patient rooms without properly washing their hands, keep track of surgical instruments, and alert care teams when a patient is at risk, such as in the event of a fall. These technologies are a form of ambient intelligence, like an extra pair of eyes, and they can make a real difference. But I would like even more interactive help for our patients, clinicians, and caregivers, who desperately need an extra pair of hands. Imagine an autonomous robot delivering medical supplies while caregivers focus on the patient, or augmented reality guiding a surgeon through safer, faster, less invasive procedures.

Or imagine a scenario where severely paralyzed patients could control robots with their thoughts. That’s right, using brain waves to complete the everyday tasks you and I take for granted. You can get a glimpse of this future possibility in this recent experiment from my lab. In this video, a robotic arm cooking a Japanese sukiyaki is controlled entirely by electrical signals from the brain, which are non-invasively collected via an EEG cap.

About 500 million years ago, the emergence of vision upended the dark world and triggered the most profound evolutionary process: the development of intelligence in the animal world. The progress in artificial intelligence over the past decade has been equally astonishing. But I believe the potential of this digital Cambrian explosion will not be fully realized until we have computers and robots powered by spatial intelligence, just as nature has done with humans.

This will be an exciting time as our digital companions learn to reason and interact with the beautiful three-dimensional space that is the human world, while also creating more new worlds for us to explore. Achieving this future will not be easy. It requires careful thought and always developing technology with people at the heart. But if we get it right, computers and robots powered by spatial intelligence will become not only useful tools, but also trustworthy partners, boosting human productivity and promoting harmonious coexistence. At the same time, our personal dignity will be more prominent, leading to the common prosperity of human society.

What excites me most about the future is that AI systems will become sharper, more insightful, and spatially aware. They will walk alongside humans and keep pursuing better ways to create a better world.

The above is the detailed content of Li Feifei interprets the entrepreneurial direction 'spatial intelligence' to allow AI to truly understand the world. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

DeepMind robot plays table tennis, and its forehand and backhand slip into the air, completely defeating human beginners

Aug 09, 2024 pm 04:01 PM

DeepMind robot plays table tennis, and its forehand and backhand slip into the air, completely defeating human beginners

Aug 09, 2024 pm 04:01 PM

But maybe he can’t defeat the old man in the park? The Paris Olympic Games are in full swing, and table tennis has attracted much attention. At the same time, robots have also made new breakthroughs in playing table tennis. Just now, DeepMind proposed the first learning robot agent that can reach the level of human amateur players in competitive table tennis. Paper address: https://arxiv.org/pdf/2408.03906 How good is the DeepMind robot at playing table tennis? Probably on par with human amateur players: both forehand and backhand: the opponent uses a variety of playing styles, and the robot can also withstand: receiving serves with different spins: However, the intensity of the game does not seem to be as intense as the old man in the park. For robots, table tennis

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

Aug 21, 2024 pm 07:33 PM

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

Aug 21, 2024 pm 07:33 PM

On August 21, the 2024 World Robot Conference was grandly held in Beijing. SenseTime's home robot brand "Yuanluobot SenseRobot" has unveiled its entire family of products, and recently released the Yuanluobot AI chess-playing robot - Chess Professional Edition (hereinafter referred to as "Yuanluobot SenseRobot"), becoming the world's first A chess robot for the home. As the third chess-playing robot product of Yuanluobo, the new Guoxiang robot has undergone a large number of special technical upgrades and innovations in AI and engineering machinery. For the first time, it has realized the ability to pick up three-dimensional chess pieces through mechanical claws on a home robot, and perform human-machine Functions such as chess playing, everyone playing chess, notation review, etc.

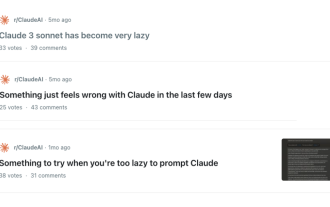

Claude has become lazy too! Netizen: Learn to give yourself a holiday

Sep 02, 2024 pm 01:56 PM

Claude has become lazy too! Netizen: Learn to give yourself a holiday

Sep 02, 2024 pm 01:56 PM

The start of school is about to begin, and it’s not just the students who are about to start the new semester who should take care of themselves, but also the large AI models. Some time ago, Reddit was filled with netizens complaining that Claude was getting lazy. "Its level has dropped a lot, it often pauses, and even the output becomes very short. In the first week of release, it could translate a full 4-page document at once, but now it can't even output half a page!" https:// www.reddit.com/r/ClaudeAI/comments/1by8rw8/something_just_feels_wrong_with_claude_in_the/ in a post titled "Totally disappointed with Claude", full of

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Aug 22, 2024 pm 10:35 PM

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Aug 22, 2024 pm 10:35 PM

At the World Robot Conference being held in Beijing, the display of humanoid robots has become the absolute focus of the scene. At the Stardust Intelligent booth, the AI robot assistant S1 performed three major performances of dulcimer, martial arts, and calligraphy in one exhibition area, capable of both literary and martial arts. , attracted a large number of professional audiences and media. The elegant playing on the elastic strings allows the S1 to demonstrate fine operation and absolute control with speed, strength and precision. CCTV News conducted a special report on the imitation learning and intelligent control behind "Calligraphy". Company founder Lai Jie explained that behind the silky movements, the hardware side pursues the best force control and the most human-like body indicators (speed, load) etc.), but on the AI side, the real movement data of people is collected, allowing the robot to become stronger when it encounters a strong situation and learn to evolve quickly. And agile

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

Sep 03, 2024 pm 05:18 PM

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

Sep 03, 2024 pm 05:18 PM

Deep integration of vision and robot learning. When two robot hands work together smoothly to fold clothes, pour tea, and pack shoes, coupled with the 1X humanoid robot NEO that has been making headlines recently, you may have a feeling: we seem to be entering the age of robots. In fact, these silky movements are the product of advanced robotic technology + exquisite frame design + multi-modal large models. We know that useful robots often require complex and exquisite interactions with the environment, and the environment can be represented as constraints in the spatial and temporal domains. For example, if you want a robot to pour tea, the robot first needs to grasp the handle of the teapot and keep it upright without spilling the tea, then move it smoothly until the mouth of the pot is aligned with the mouth of the cup, and then tilt the teapot at a certain angle. . this

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

At this ACL conference, contributors have gained a lot. The six-day ACL2024 is being held in Bangkok, Thailand. ACL is the top international conference in the field of computational linguistics and natural language processing. It is organized by the International Association for Computational Linguistics and is held annually. ACL has always ranked first in academic influence in the field of NLP, and it is also a CCF-A recommended conference. This year's ACL conference is the 62nd and has received more than 400 cutting-edge works in the field of NLP. Yesterday afternoon, the conference announced the best paper and other awards. This time, there are 7 Best Paper Awards (two unpublished), 1 Best Theme Paper Award, and 35 Outstanding Paper Awards. The conference also awarded 3 Resource Paper Awards (ResourceAward) and Social Impact Award (

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Aug 08, 2024 am 07:02 AM

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Aug 08, 2024 am 07:02 AM

This afternoon, Hongmeng Zhixing officially welcomed new brands and new cars. On August 6, Huawei held the Hongmeng Smart Xingxing S9 and Huawei full-scenario new product launch conference, bringing the panoramic smart flagship sedan Xiangjie S9, the new M7Pro and Huawei novaFlip, MatePad Pro 12.2 inches, the new MatePad Air, Huawei Bisheng With many new all-scenario smart products including the laser printer X1 series, FreeBuds6i, WATCHFIT3 and smart screen S5Pro, from smart travel, smart office to smart wear, Huawei continues to build a full-scenario smart ecosystem to bring consumers a smart experience of the Internet of Everything. Hongmeng Zhixing: In-depth empowerment to promote the upgrading of the smart car industry Huawei joins hands with Chinese automotive industry partners to provide

The first large UI model in China is released! Motiff's large model creates the best assistant for designers and optimizes UI design workflow

Aug 19, 2024 pm 04:48 PM

The first large UI model in China is released! Motiff's large model creates the best assistant for designers and optimizes UI design workflow

Aug 19, 2024 pm 04:48 PM

Artificial intelligence is developing faster than you might imagine. Since GPT-4 introduced multimodal technology into the public eye, multimodal large models have entered a stage of rapid development, gradually shifting from pure model research and development to exploration and application in vertical fields, and are deeply integrated with all walks of life. In the field of interface interaction, international technology giants such as Google and Apple have invested in the research and development of large multi-modal UI models, which is regarded as the only way forward for the mobile phone AI revolution. In this context, the first large-scale UI model in China was born. On August 17, at the IXDC2024 International Experience Design Conference, Motiff, a design tool in the AI era, launched its independently developed UI multi-modal model - Motiff Model. This is the world's first UI design tool