Technology peripherals

Technology peripherals

AI

AI

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

Written before&The author’s personal understanding

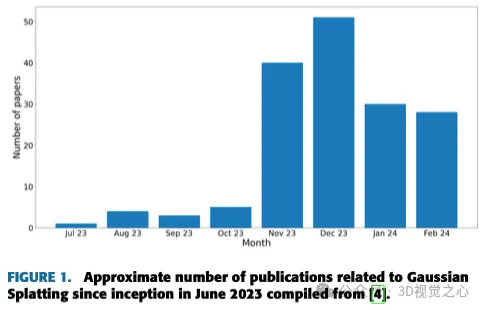

Image-based 3D reconstruction is a challenging task that involves inferring a target or scene from a set of input images 3D shape. Learning-based methods have attracted attention due to their ability to directly estimate 3D shapes. This review paper focuses on state-of-the-art 3D reconstruction techniques, including generating novel, unseen views. An overview of recent developments in Gaussian splash methods is provided, including input types, model structures, output representations, and training strategies. Unresolved challenges and future directions are also discussed. Given the rapid progress in this field and the numerous opportunities to enhance 3D reconstruction methods, a thorough examination of the algorithm seems crucial. Therefore, this study provides a comprehensive overview of recent advances in Gaussian scattering.

(Swipe up with your thumb, click on the top card to follow me, The whole operation will only take you 1.328 seconds, and then it will take you Go to the future, all, free dry goods, in case any content is helpful to you~)

Introduction to 3D reconstruction and new view synthesis

3D reconstruction and NVS are two closely related fields in computer graphics that aim to capture and render realistic 3D representations of physical scenes. 3D reconstruction involves extracting geometric and appearance information from a series of 2D images, usually captured from different viewpoints. Although there are many techniques for 3D scanning, this capture of different 2D images is a very simple and computationally cheap way to collect information about a 3D environment. This information can then be used to create a 3D model of the scene, which can be used for various purposes such as virtual reality (VR) applications, augmented reality (AR) overlays, or computer-aided design (CAD) modeling.

NVS, on the other hand, focuses on generating a new 2D view of the scene from a previously acquired 3D model. This allows the creation of photorealistic images of a scene from any desired viewpoint, even if the original image was not taken from that angle. Recent advances in deep learning have led to significant improvements in 3D reconstruction and NVS. Deep learning models can be used to efficiently extract 3D geometry and appearance from images, and such models can also be used to generate realistic novel views from 3D models. As a result, these technologies are becoming increasingly popular in various applications, and they are expected to play an even more important role in the future.

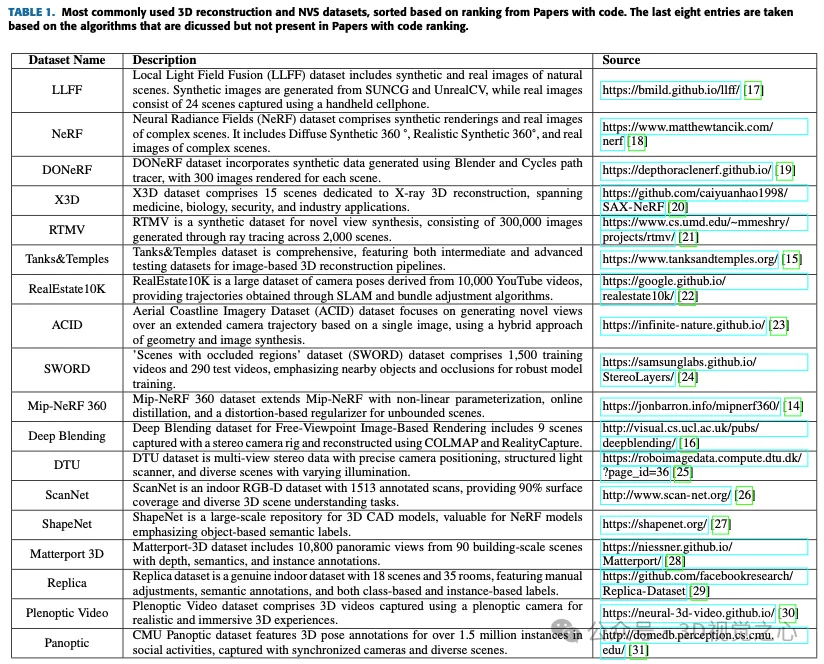

This section will introduce how to store or represent 3D data, then introduce the most commonly used public datasets for this task, and then will expand on various algorithms, focusing mainly on Gaussian splash.

3D Data Representation

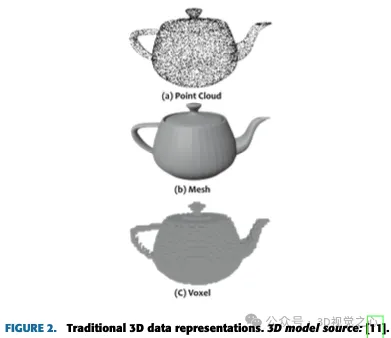

The complex spatial nature of three-dimensional data, including volumetric dimensions, provides a detailed representation of targets and environments. This is crucial for creating immersive simulations and accurate models in various research areas. The multidimensional structure of three-dimensional data allows the combination of depth, width and height, leading to significant advances in disciplines such as architectural design and medical imaging technology.

The choice of data representation plays a crucial role in the design of many 3D deep learning systems. Point clouds lack a grid-like structure and generally cannot be directly convolved. On the other hand, voxel representations characterized by grid-like structures often incur high computational memory requirements.

The evolution of 3D representation comes with the way 3D data or models are stored. The most commonly used 3D data representations can be divided into traditional and novel methods.

Traditional Approaches:

- Point cloud

- Mesh

- Voxel

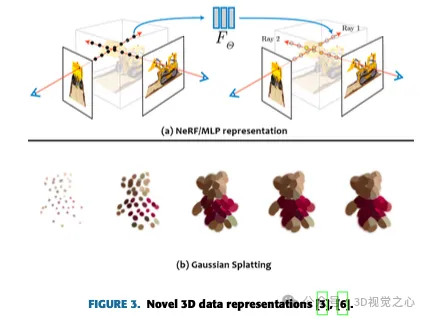

Novel Approaches:

- Neural Network/Multi layer perceptron (MLP)

- Gaussian Splats

Dataset

##3D Reconstruction and NVS Technology

In order to evaluate the current progress in this field, a A literature study identified and scrutinized relevant academic works. The analysis focuses in particular on two key areas: 3D reconstruction and NVS. The development of 3D volumetric reconstruction from multiple camera images spans decades, with diverse applications in computer graphics, robotics, and medical imaging. The next section explores the current status of this technology. Photogrammetry: Since the 1980s, advanced photogrammetry and stereo vision technology have emerged to automatically identify corresponding points in stereo image pairs. Photogrammetry is a method that combines photography and computer vision to generate 3D models of objects or scenes. It requires capturing images from various angles, utilizing software such as Agisoft Metashape to estimate camera position and generate point clouds. This point cloud is then converted into a textured 3D mesh, enabling the creation of detailed and photorealistic visualizations of reconstructed objects or scenes.Structure from motion: In the 1990s, SFM technology gained prominence, enabling the reconstruction of 3D structure and camera motion from 2D image sequences. SFM is the process of estimating the 3D structure of a scene from a set of 2D images. SFM requires point correlations between images. Find corresponding points by matching features or tracking points in multiple images and triangulate to find 3D locations.

Deep Learning: In recent years, deep learning technology, especially convolutional neural networks (CNNs), has been integrated. Deep learning-based methods are gathering pace in 3D reconstruction. The most notable is the 3D Occupancy Network, a neural network architecture designed for 3D scene understanding and reconstruction. It operates by dividing 3D space into small volumetric units, or voxels, with each voxel representing whether it contains a target or is empty space. These networks use deep learning techniques, such as 3D convolutional neural networks, to predict voxel occupancy, making them valuable for applications such as robotics, autonomous vehicles, augmented reality, and 3D scene reconstruction. These networks rely heavily on convolutions and transformers. They are critical for tasks such as collision avoidance, path planning, and real-time interaction with the physical world. Furthermore, 3D occupancy networks can estimate uncertainty but may have computational limitations when dealing with dynamic or complex scenes. Advances in neural network architecture continue to improve their accuracy and efficiency.

Neural Radiation Field: Launched in 2020, NeRF combines neural networks with classic 3D reconstruction principles and has attracted significant attention in computer vision and graphics. It reconstructs detailed 3D scenes by modeling volumetric functions and predicting color and density through neural networks. NeRFs are widely used in computer graphics and virtual reality. Recently, NeRF has improved accuracy and efficiency through extensive research. Recent research has also explored the applicability of NeRF in underwater scenarios. While providing a robust representation of 3D scene geometry, challenges such as computational requirements still exist. Future NeRF research needs to focus on interpretability, real-time rendering, novel applications, and scalability, opening the way for virtual reality, gaming, and robotics.

Gaussian Scattering: Finally, in 2023, 3D Gaussian Scattering emerged as a new real-time 3D rendering technology. In the next section, this approach is discussed in detail.

The basis of GAUSSIAN SPLATTING

Gaussian Splash uses many 3D Gaussians or particles to depict a 3D scene. Each Gaussian or particle is equipped with a position, direction, scale, different Transparency and color information. To render these particles, convert them to 2D space and organize them strategically for optimal rendering.

Figure 4 shows the architecture of the Gaussian splash algorithm. In the original algorithm, the following steps are taken:

- Structure from motion

- Convert to gaussian splats

- Training

- Differentiable Gaussian rasterization

STATE OF ART

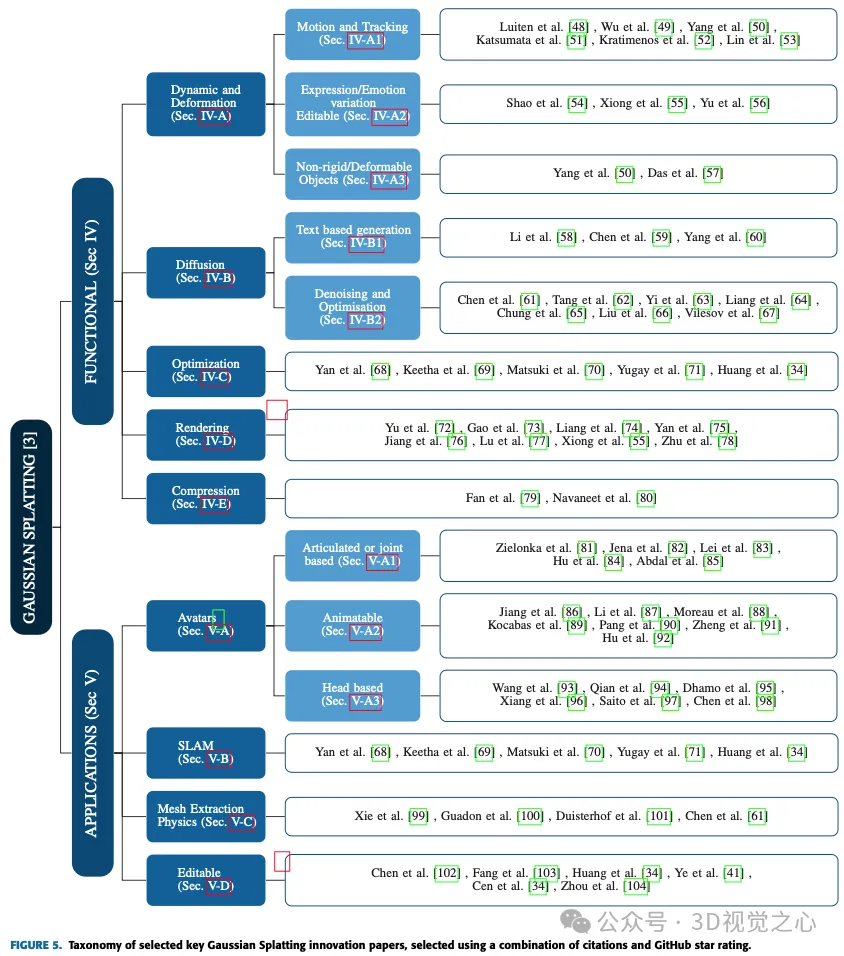

In the next two sections, various applications and advances of Gaussian splash will be explored, and its application in autonomous driving, avatars, Different implementations in areas such as compression, diffusion, dynamics and deformation, editing, text-based generation, mesh extraction and physics, regularization and optimization, rendering, sparse representation, and simultaneous localization and mapping (SLAM). Each subcategory will be examined to provide insight into the versatility of Gaussian splash methods in addressing specific challenges and achieving significant progress in these diverse areas. Figure 5 shows the complete list of all methods.

FUNCTIONAL ADVANCEMENTS

This section examines the progress made in functional capabilities since the Gaussian splash algorithm was first introduced.

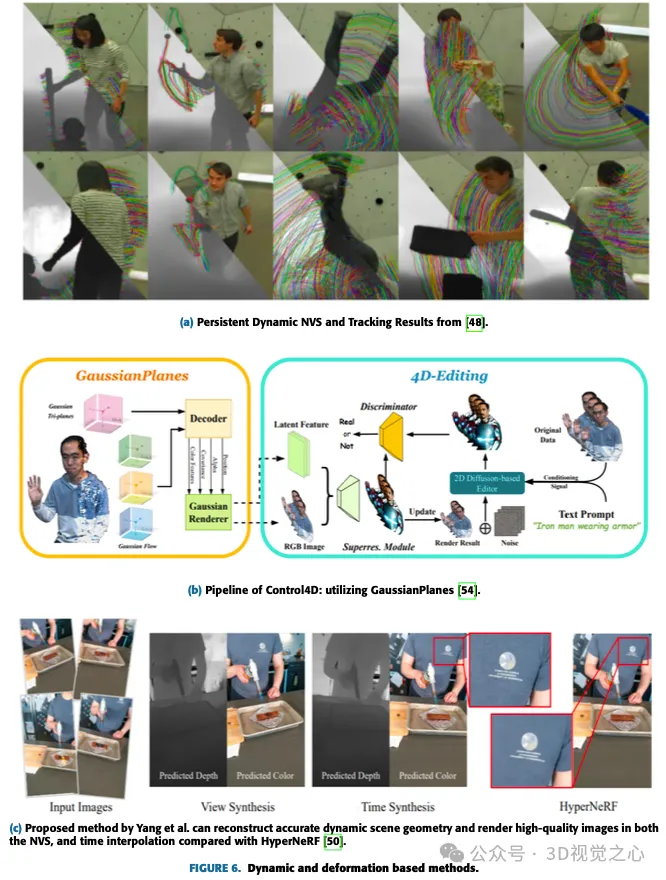

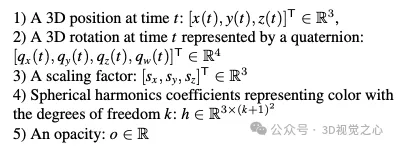

Dynamics and Deformation

Compared with the general Gaussian splash, where all parameters of the 3D covariance matrix only depend on the input image, in this case, in order Captures the dynamics of splashing over time, with some parameters depending on time or time step. For example, the position depends on the time step or frame. This position can be updated by the next frame in a time-consistent manner. It is also possible to learn some underlying encodings that can be used to edit or propagate Gaussians at each time step during rendering to achieve certain effects such as expression changes in avatars, and applying forces to non-rigid bodies. Figure 6 shows some dynamics and deformation based methods.

Dynamic and deformable models can be easily represented with slight modifications to the original Gaussian splash representation:

Motion and Tracking

Most work related to dynamic Gaussian splashing extends to 3D Gaussian motion tracking across time steps, rather than each time step Long has a separate splash. Katsumata et al. proposed the Fourier approximation of position and the linear approximation of rotation quaternion.

The paper by Luiten et al. introduces a method to capture the full 6 degrees of freedom of all 3D points in dynamic scenes. By incorporating local stiffness constraints, the dynamic 3D Gaussian represents consistent spatial rotation, enabling dense 6-DOF tracking and reconstruction without the need for correspondence or streaming input. This method outperforms PIP in 2D tracking, achieving 10 times lower median trajectory error, higher trajectory accuracy, and 100% survival rate. This versatile representation facilitates applications such as 4D video editing, first-person view synthesis, and dynamic scene generation.

Lin et al. introduced a new dual-domain deformation model (DDDM) that is explicitly designed to model the attribute deformation of each Gaussian point. The model uses a Fourier series fit in the frequency domain and a polynomial fit in the time domain to capture time-dependent residuals. DDDM excels at handling deformations in complex video scenes without the need to train a separate 3D Gaussian Splash (3D-GS) model for each frame. Notably, discrete Gaussian point explicit deformation modeling guarantees fast training and 4D scene rendering, similar to the original 3D-GS for static 3D reconstruction. This approach has significant efficiency improvements, with training almost 5 times faster compared to 3D-GS modeling. However, there are opportunities for enhancements in maintaining high-fidelity thin structures in the final render.

Expression or Emotion variation and Editable in Avatars

##Shao et al. introduced GaussianPlanes, which is a method that passes through three-dimensional space and time 4D representation based on plane decomposition improves the effectiveness of 4D editing. Additionally, Control4D utilizes a 4D generator to optimize the continuous creation space of inconsistent photos, resulting in better consistency and quality. The proposed method uses GaussianPlanes to train implicit representations of 4D portrait scenes, which are then rendered into latent features and RGB images using Gaussian rendering. A generative adversarial network (GAN)-based generator and a 2D diffusion-based editor refine the dataset and generate real and fake images for differentiation. The discriminant results contribute to the iterative updating of the generator and discriminator. However, this approach faces challenges in handling fast and extensive non-rigid motions due to its reliance on canonical Gaussian point clouds with flow representations. This method is subject to ControlNet, limiting editing to a coarse level and preventing precise expression or action editing. Additionally, the editing process requires iterative optimization, lacking a single-step solution.Non-Rigid or deformable objects

Implicit neural representation brings significant changes in dynamic scene reconstruction and rendering. However, contemporary dynamic neural rendering methods encounter challenges in capturing complex details and achieving real-time rendering of dynamic scenes. To address these challenges, Yang et al. proposed a deformable 3D Gaussian for high-fidelity monocular dynamic scene reconstruction. A new deformable 3D-GS method is proposed. The method utilizes 3D Gaussians learned in a canonical space with a deformation field specifically designed for monocular dynamic scenes. This method introduces an annealing smooth training (AST) mechanism tailored for real-world monocular dynamic scenes, effectively solving the impact of incorrect poses on the temporal interpolation task without introducing additional training overhead. By using a differential Gaussian rasterizer, Deformable 3D Gaussian not only improves rendering quality but also achieves real-time speed, surpassing existing methods in both aspects. This method has proven to be well suited for tasks such as NVS and offers versatility for post-production tasks due to its point-based nature. Experimental results highlight the superior rendering effects and real-time performance of this method, confirming its effectiveness in dynamic scene modeling.DIFFUSION##Diffusion and Gaussian Splash is a powerful technique for generating 3D objects from textual descriptions/hints. It combines the advantages of two different methods: diffusion models and Gaussian scattering. Diffusion models are neural networks that learn to generate images from noisy inputs. By feeding the model a series of increasingly cleaner images, the model learns to reverse the process of image corruption, eventually generating clean images from completely random inputs. This can be used to generate images from text descriptions, as the model can learn to associate words with corresponding visual features. The text-to-3D pipeline with diffusion and Gaussian splash works by first generating an initial 3D point cloud from a text description using a diffusion model. Gaussian scattering is then used to convert the point cloud into a set of Gaussian spheres. Finally, the Gaussian sphere is rendered to generate a 3D image of the target.

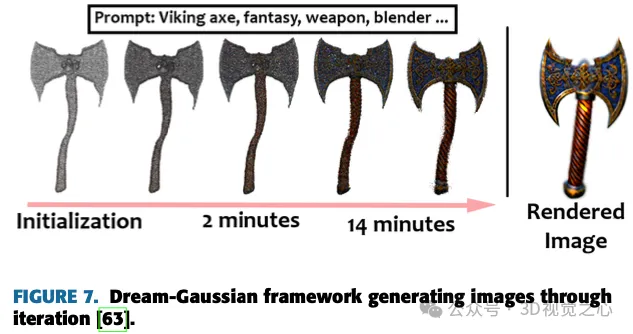

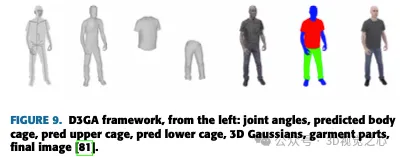

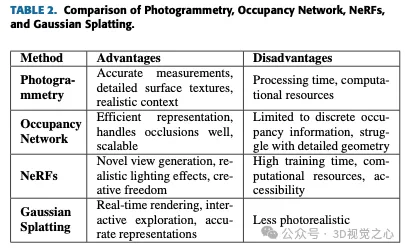

Text based generation The work of Yi et al. introduces Gaussian Dreamer, a text-to-3D method that seamlessly connects 3D and 2D diffusion models through Gaussian splitting, ensuring 3D consistency and complex detail generation. Figure 7 shows the proposed model for generating images. To further enrich the content, noise point growth and color perturbation are introduced to supplement the initialized 3D Gaussian. This method is characterized by being simple and effective, generating 3D instances within 15 minutes on a single GPU, which is superior in speed compared to previous methods. The generated three-dimensional instances can be directly rendered in real time, highlighting the practicality of this method. The overall framework includes initialization using a 3D diffusion model prior and optimization using a 2D diffusion model, enabling the creation of high-quality and diverse 3D assets from text cues by leveraging the advantages of both diffusion models. Chen et al. proposed Gaussian scattering-based text-to-3D generation (GSGEN), which is a text-to-3D generation method that utilizes 3D Gaussians as representations. By leveraging geometric priors, the unique advantages of Gaussian scatter in text-to-3D generation are highlighted. The two-stage optimization strategy combines joint guidance of 2D and 3D diffusion to form a coherent rough structure in geometry optimization, which is then densified in compactness-based appearance refinement. Denoising and Optimisation Li et al.’s GaussianDiffusion framework represents a novel text-to-3D approach utilizing Gaussian splash and Langevin dynamics Learn diffusion models to speed up rendering and achieve unparalleled realism. The introduction of structured noise solves the multi-view geometry challenge, while the variational Gaussian scattering model alleviates convergence issues and artifacts. While the current results show improved realism, ongoing research aims to refine the blurriness and haze introduced by variational Gaussians for further enhancement. Yang et al. conducted a thorough examination of existing diffusion priors and proposed a unified framework to improve these priors by optimizing denoising scores. The approach's versatility extends to a variety of use cases, consistently delivering substantial performance enhancements. In experimental evaluations, our approach achieves unprecedented performance, surpassing contemporary methods. Despite its success in refining 3D generated textures, there is still room for improvement in enhancing the geometry of the generated 3D models. This subsection discusses techniques developed by researchers for faster training and/or inference speeds. In the study by Chung et al., a method is introduced to optimize Gaussian scattering for 3D scene representation using a limited number of images while mitigating the overfitting problem. The traditional method of representing 3D scenes with Gaussian scatter points can lead to overfitting, especially when the available images are limited. This technique uses depth maps from a pre-trained monocular depth estimation model as geometric guides and aligns them with sparse feature points from an SFM pipeline. These help optimize 3D Gaussian scattering, reduce floating artifacts and ensure geometric coherence. The proposed depth-guided optimization strategy is tested on the LLFF dataset, showing improved geometry compared to using images only. The research includes the introduction of an early stopping strategy and a smoothing term for depth maps, both of which help improve performance. However, limitations are also acknowledged, such as reliance on the accuracy of the monocular depth estimation model and reliance on the performance of COLMAP. Future work is recommended to explore the interdependence of estimated depths and address the challenges of depth estimation in difficult regions, such as textureless plains or sky. Fu et al. introduced COLMAP Free 3D Gaussian Splatting (CF-3DGS), a new end-to-end framework for simultaneous camera pose estimation and NVS from sequence images, solving the previous Challenges posed by the large amount of camera movement and long training duration in the method. Unlike NeRF's implicit representation, CF-3DGS utilizes explicit point clouds to represent the scene. The method sequentially processes input frames and progressively expands the 3D Gaussian to reconstruct the entire scene, demonstrating enhanced performance and robustness on challenging scenes such as 360° videos. This method jointly optimizes camera poses and 3D-GS in a sequential manner, making it particularly suitable for video streaming or ordered image acquisition. The use of Gaussian splashing enables fast training and inference speeds, demonstrating the advantages of this approach over previous methods. While demonstrating effectiveness, it is acknowledged that sequential optimization limits applications primarily to ordered image collections, leaving room to explore extensions to unordered image collections in future research. Yu et al. observed in 3D-GS that artifacts appeared in NVS especially when changing the sampling rate. The introduced solution consists of incorporating a 3D smoothing filter to adjust the maximum frequency of the 3D Gaussian primitives, thus addressing artifacts in out-of-distribution rendering. Additionally, the 2D dilation filter was replaced by a 2D mip filter to address aliasing and dilation issues. Evaluation on benchmark datasets demonstrates the effectiveness of Mip Splatting, especially when modifying the sampling rate. The proposed modifications are principled, straightforward and require minimal changes to the original 3D-GS code. However, there are recognized limitations, such as the error introduced by the Gaussian filter approximation and a slight increase in training overhead. This study presents Mip Splatting as a competitive solution, demonstrating performance parity with state-of-the-art methods and superior generalization in out-of-distribution scenarios, demonstrating its ability to achieve alias-free rendering at any scale. aspect potential. Gao et al. proposed a new 3D point cloud rendering method that is able to decompose materials and lighting from multi-view images. The framework enables scene editing, ray tracing, and real-time relighting in a distinguishable manner. Each point in the scene is represented by a "re-illuminable" 3D Gaussian, carrying information about its normal direction, material properties such as bidirectional reflection distribution function (BRDF), and incoming light from different directions. For accurate illumination estimation, the incident light is divided into global and local components, and visibility based on the viewing angle is taken into account. Scene optimization utilizes 3D Gaussian splashing, while physically based differentiable rendering handles BRDF and lighting decomposition. An innovative point-based ray tracing approach leverages bounding volume hierarchies to enable efficient visibility baking and realistic shadows during real-time rendering. Experiments show that BRDF estimation and view rendering are better compared to existing methods. However, challenges still exist for scenes that do not have clear boundaries and require target masks during optimization. Future work could explore integrating multi-view stereo (MVS) cues to improve the geometric accuracy of point clouds generated by 3D Gaussian scattering. This "Reliable 3D Gaussian" pipeline demonstrates promising real-time rendering capabilities and opens the door to revolutionary mesh-based graphics via a point cloud-based approach that allows for relighting, editing, and ray tracing. Fan et al. introduce a new technique for compressing 3D Gaussian representations used in rendering. Their method identifies and removes redundant Gaussians based on their importance, similar to network pruning, ensuring minimal impact on visual quality. Leveraging knowledge extraction and pseudo-view enhancement, LightGaussian delivers information to a lower complexity representation with fewer spherical harmonics, further reducing redundancy. Furthermore, a hybrid scheme called VecTree quantization optimizes the representation by quantizing attribute values, thereby achieving smaller sizes without significant loss in accuracy. Compared with standard methods, LightGaussian achieves an average compression ratio of more than 15 times, and significantly increases rendering speed from 139 FPS to 215 FPS on datasets such as Mip NeRF 360 and Tanks&Temples. The key steps involved are computing global saliency, pruning Gaussians, extracting knowledge with pseudo-views, and quantifying attributes using VecTree. Overall, LightGaussian provides a breakthrough solution for converting large point-based representations into a compact format, significantly reducing data redundancy and greatly improving rendering efficiency. This section delves into the significant advancements in the application of the Gaussian Splash algorithm since its inception in July 2023. These advances have specific uses in various fields such as avatars, SLAM, mesh extraction, and physics simulations. When applied to these specialized use cases, Gaussian Splatting demonstrates its versatility and effectiveness in different application scenarios. With the rise of AR/VR application craze, a lot of research of Gauss Splash is focused on developing digital avatars of humans. Capturing a subject from fewer viewpoints and building a 3D model is a challenging task, and Gaussian Splash is helping researchers and industry achieve this goal. Joint angles or articulation This Gaussian scattering technique focuses on modeling the human body based on joint angles. Some parameters of this type of model reflect the positions, angles and other similar parameters of three-dimensional joints. Decode the input frame to find out the 3D joint positions and angles of the current frame. Zielonka et al. proposed a human body representation model using Gaussian scattering and realized real-time rendering using innovative 3D-GS technology. Unlike existing photorealistic drivable avatars, Drivable 3D Gaussian Splash (D3GA) does not rely on precise 3D registration during training or dense input images during testing. Instead, it utilizes densely calibrated multi-view video for real-time rendering and introduces tetrahedral cage-based deformations driven by keypoints and angles in joints, making it effective for applications involving communication, as shown in Figure 9. Animatable These methods typically train pose-dependent Gaussians to capture complex dynamic appearances, including finer details in clothing, resulting in high-quality avatars. Some of these methods also support real-time rendering capabilities. Jiang et al. proposed HiFi4G, a method that can effectively render real humans. HiFi4G combines 3D Gaussian representation with non-rigid tracking, employing a dual graph mechanism with motion priors and 4D Gaussian optimization with an adaptive spatiotemporal regularizer. HiFi4G achieves approximately 25 times the compression rate, requires less than 2MB of storage space per frame, and performs well in terms of optimization speed, rendering quality, and storage overhead, as shown in Figure 10. It proposes a compact 4D Gaussian representation that bridges Gaussian splashing and non-rigid tracking. However, the dependence on segmentation, susceptibility to poor segmentation leading to artifacts, and the need for per-frame reconstruction and grid tracking pose limitations. Future research may focus on accelerating the optimization process and reducing GPU ordering dependence for wider deployment on web viewers and mobile devices. Head based Previous head incarnation methods mostly relied on fixed explicit primitives (grids, points) or implicit Surface (SDF). Gaussian scattering-based models will pave the way for the rise of AR/VR and filter-based applications, allowing users to try different makeup looks, tones, hairstyles, etc. Wang et al. utilized canonical Gaussian transformation to represent dynamic scenes. Using an explicit "dynamic" triplane as an efficient container for the parameterized head geometry, well aligned with the underlying geometry and factors in the triplane, the authors obtained aligned regularization factors for regular Gaussians. Using a tiny MLP, the factors are decoded into opacity and spherical harmonic coefficients of 3D Gaussian primitives. Quin et al. created ultra-realistic head avatars with controllable perspective, pose, and expression. During the avatar reconstruction process, the author simultaneously optimized the deformation model parameters and Gaussian splat parameters. The work showcases the avatar's ability to animate in a variety of challenging scenarios. Dhamo et al. proposed HeadGaS, a hybrid model that extends the explicit representation of 3D-GS based on learnable latent features. These features can then be linearly blended with low-dimensional parameters from the parametric head model to derive final expression-dependent color and opacity values. Figure 11 shows some example images. SLAM SLAM is a technology used in self-driving cars to simultaneously build a map and determine where the vehicle is within that map Location. It enables vehicles to navigate and map unknown environments. As the name suggests, visual SLAM (vSLAM) relies on images from cameras and various image sensors. This method works with a variety of camera types, including simple, compound-eye and RGB-D cameras, making it a cost-effective solution. Through the camera, landmark detection can be combined with graph-based optimization to enhance the flexibility of SLAM implementation. Monocular SLAM is a subset of vSLAM that uses a single camera and faces challenges in depth perception, which can be solved by incorporating additional sensors, such as odometry and encoders of an inertial measurement unit (IMU). Key technologies related to vSLAM include SFM, visual odometry and beam adjustment. Visual SLAM algorithms are divided into two major categories: sparse methods, which employ feature point matching (e.g., parallel tracking and mapping, ORB-SLAM), and dense methods, which utilize overall image brightness (e.g., DTAM, LSD-SLAM, DSO, SVO). Gaussian scattering can be used for physically based simulations and renderings. By adding more parameters to the 3D Gaussian kernel, velocity, strain, and other mechanical properties can be modeled. That's why various methods were developed within a few months, including simulating physics using Gaussian scattering. Xie et al. introduced a three-dimensional Gaussian kinematics method based on continuum mechanics, using partial differential equations (PDE) to drive the Gaussian kernel and its associated spherical harmonics. evolution. This innovation allows the use of a unified simulation rendering pipeline, simplifying motion generation by eliminating the need for explicit target meshes. Their approach demonstrates versatility through comprehensive benchmarking and experiments on a variety of materials, demonstrating real-time performance in scenarios with simple dynamics. The authors introduce PhysGaussian, a framework that simultaneously and seamlessly generates physically based dynamics and photorealistic renderings. While acknowledging the limitations of the framework such as the lack of shadow evolution and the use of single-point quadrature for volume integration, the authors suggest avenues for future work, including employing higher-order quadrature in the material point method (MPM) and exploring the use of neural networks Integrated for more realistic modeling. The framework can be extended to handle a variety of materials, such as liquids, and incorporate advanced user controls utilizing large language models (LLMs). Figure 13 shows the training process of the PhysGaussian framework. Gaussian Splash also extends its wings to 3D editing and point manipulation of scenes. Tip-based 3D editing of scenes is even possible using the latest advances that will be discussed. These methods not only represent the scene as a 3D Gaussian map, but also have semantic and contentious understanding of the scene. Chen et al. introduced GaussianEditor, a new three-dimensional editing algorithm based on Gaussian Splatting, which aims to overcome the limitations of traditional three-dimensional editing methods. While traditional methods that rely on meshes or point clouds struggle to achieve realistic depictions, implicit 3D representations like NeRF face the challenges of slow processing speed and limited control. GaussianEditor solves these problems by leveraging 3D-GS, enhancing accuracy and control with Gaussian semantic tracking, and introducing Hierarchical Gaussian Splash (HGS) for stable and refined results under generative guidance. The algorithm includes a specialized 3D repair method for efficient object removal and integration, demonstrating superior control, efficacy, and fast performance in extensive experiments. Figure 14 shows the various text prompts tested by Chen et al. GaussianEditor marks a major advancement in 3D editing, providing enhanced effectiveness, speed and control. The contributions of this research include the introduction of Gaussian semantic tracking for detailed editing control, the proposal of HGS to achieve stable convergence under generation guidance, the development of a 3D repair algorithm for rapid deletion and addition of targets, and extensive experiments demonstrating that this method is superior to previous 3D editing methods. . Despite the progress of GaussianEditor, it relies on a 2D diffusion model for effective supervision and has limitations in handling complex cues, which is a common challenge faced by other 3D editing methods based on similar models. Traditionally, 3D scenes are represented using meshes and points due to their explicit nature and Compatibility with fast GPU/CUDA based rasterization. However, recent advances, such as NeRF methods, focus on continuous scene representation, employing techniques such as multi-layer perceptron optimization and novel view synthesis via volumetric ray marching. While continuous representation helps with optimization, the random sampling required for rendering introduces expensive noise. Gaussian Splash bridges this gap by leveraging 3D Gaussian representations optimized to achieve state-of-the-art visual quality and competitive training times. Additionally, a tile-based splash solution ensures top-quality real-time rendering. Gaussian Splash provides some of the best results in terms of quality and efficiency when rendering 3D scenes. Gaussian Splash has been developed to handle dynamic and deformable targets by modifying their original representation. This involves incorporating parameters such as 3D position, rotation, scaling factors and spherical harmonic coefficients for color and opacity. Recent advances in this area include the introduction of sparsity losses to encourage ba-sis trajectory sharing, the introduction of dual-domain deformation models to capture time-dependent residuals, and Gaussian shell mapping that connects generator networks with 3D Gaussian rendering. Efforts are also being made to address challenges such as non-rigid tracking, avatar expression changes, and efficient rendering of realistic human performance. Together, these advancements work toward real-time rendering, optimized efficiency, and high-quality results when working with dynamic and deformable targets. On the other hand, Diffusion and Gaussian Splash work together to create 3D targets from text cues. A diffusion model is a neural network that learns to generate images from noisy input by reversing the process of image corruption through a series of increasingly cleaner images. In the text-to-3D pipeline, a diffusion model generates an initial 3D point cloud based on the text description, which is then converted into a Gaussian sphere using Gaussian scattering. The rendered Gaussian sphere generates the final 3D target image. Advances in this area include using structured noise to address multi-view geometry challenges, introducing variational Gaussian scattering models to address convergence issues, and optimizing denoising scores to enhance diffusion priors, aiming to achieve unparalleled realism in text-based 3D generation. sex and performance. Gaussian Splash has been widely used in the creation of digital avatars for AR/VR applications. This involves capturing an object from a minimum number of viewpoints and building a 3D model. The technology has been used to model human joints, joint angles and other parameters, enabling the generation of expressive and controllable avatars. Advances in this area include developing methods to capture high-frequency facial details, preserve exaggerated expressions, and effectively morph avatars. Furthermore, hybrid models are proposed that combine explicit representations with learnable latent features to achieve expression-dependent final color and opacity values. These advancements aim to enhance the geometry and texture of generated 3D models to meet the growing demand for realistic and controllable avatars in AR/VR applications. Gaussian Splatting also finds versatile applications in SLAM, providing real-time tracking and mapping capabilities on the GPU. By using a 3D Gaussian representation and a differentiable splash rasterization pipeline, it enables fast and photorealistic rendering of real-world and synthetic scenes. The technique extends to mesh extraction and physics-based simulation, allowing mechanical properties to be modeled without an explicit target mesh. Advances in continuum mechanics and partial differential equations have allowed the evolution of Gaussian kernels, simplifying motion generation. Notably, the optimization involves efficient data structures such as OpenVDB, regularization terms for alignment, and physics-inspired terms for error reduction, thereby improving overall efficiency and accuracy. Other work has been done on compression and improving Gaussian scattering rendering efficiency. It is clear from Table 2 that at the time of writing this article, Gaussian Splash is the closest to real-time rendering and Options for dynamic scene representation. Occupying the network is simply not tailor-made for NVS use cases. Photogrammetry is ideal for creating highly accurate and realistic models with a strong sense of context. NeRF excels at generating novel views and realistic lighting effects, providing creative freedom and handling complex scenes. Gaussian Splash shines in its real-time rendering capabilities and interactive exploration, making it suitable for dynamic applications. Each method has its niche and complements each other, providing a wide variety of tools for 3D reconstruction and visualization. Although Gaussian Splash is a very robust technique, it does have some caveats. Some of them are listed below: Real-time 3D reconstruction technology will realize a variety of functions in computer graphics and related fields, such as real-time interactive exploration of 3D scenes or models, Manipulate viewpoint and aim with instant feedback. It can also render dynamic scenes with moving targets or changing environments in real time, enhancing realism and immersion. Real-time 3D reconstruction can be used in simulation and training environments to provide realistic visual feedback for virtual scenes in fields such as automotive, aerospace and medicine. It will also support real-time rendering of immersive AR and VR experiences, where users can interact with virtual targets or environments in real time. Overall, real-time Gaussian Splash enhances efficiency, interactivity, and realism for a variety of applications in computer graphics, visualization, simulation, and immersive technologies. In this article, we discussed various functional and application aspects related to Gaussian scattering for 3D reconstruction and new view synthesis. It covers dynamic and deformable modeling, motion tracking, non-rigid/deformable targets, expression/emotion changes, text-based generative diffusion, denoising, optimization, avatars, animatable targets, head-based modeling, simultaneous localization and topics such as planning, mesh extraction and physics, optimization techniques, editing capabilities, rendering methods, compression, and more. Specifically, this article delves into the challenges and progress of image-based 3D reconstruction, the role of learning-based methods in improving 3D shape estimation, and the application of Gaussian splash technology in handling dynamic scenes, interactive target manipulation , potential applications and future directions in 3D segmentation and scene editing. Gaussian Splash is transformative in diverse fields including computer-generated imagery, VR/AR, robotics, film and animation, automotive design, retail, environmental research, and aerospace applications. However, it is worth noting that Gaussian scattering may have limitations in achieving realism compared to other methods such as NeRFs. Additionally, challenges related to overfitting, computational resources, and rendering quality limitations should also be considered. Despite these limitations, ongoing research and advances in Gaussian scattering continue to address these challenges and further improve the effectiveness and applicability of the method.

OPTIMIZATION AND SPEED

RENDERING AND SHADING METHODS

COMPRESSION

Applications and Case Studies

AVATARS

Mesh extraction and physics

Editing

Discussion

Comparison

Challenges and Limitations

Future Direction

Conclusion

The above is the detailed content of More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Why is Gaussian Splatting so popular in autonomous driving that NeRF is starting to be abandoned?

Jan 17, 2024 pm 02:57 PM

Why is Gaussian Splatting so popular in autonomous driving that NeRF is starting to be abandoned?

Jan 17, 2024 pm 02:57 PM

Written above & the author’s personal understanding Three-dimensional Gaussiansplatting (3DGS) is a transformative technology that has emerged in the fields of explicit radiation fields and computer graphics in recent years. This innovative method is characterized by the use of millions of 3D Gaussians, which is very different from the neural radiation field (NeRF) method, which mainly uses an implicit coordinate-based model to map spatial coordinates to pixel values. With its explicit scene representation and differentiable rendering algorithms, 3DGS not only guarantees real-time rendering capabilities, but also introduces an unprecedented level of control and scene editing. This positions 3DGS as a potential game-changer for next-generation 3D reconstruction and representation. To this end, we provide a systematic overview of the latest developments and concerns in the field of 3DGS for the first time.

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

Written above & the author’s personal understanding: At present, in the entire autonomous driving system, the perception module plays a vital role. The autonomous vehicle driving on the road can only obtain accurate perception results through the perception module. The downstream regulation and control module in the autonomous driving system makes timely and correct judgments and behavioral decisions. Currently, cars with autonomous driving functions are usually equipped with a variety of data information sensors including surround-view camera sensors, lidar sensors, and millimeter-wave radar sensors to collect information in different modalities to achieve accurate perception tasks. The BEV perception algorithm based on pure vision is favored by the industry because of its low hardware cost and easy deployment, and its output results can be easily applied to various downstream tasks.

Choose camera or lidar? A recent review on achieving robust 3D object detection

Jan 26, 2024 am 11:18 AM

Choose camera or lidar? A recent review on achieving robust 3D object detection

Jan 26, 2024 am 11:18 AM

0.Written in front&& Personal understanding that autonomous driving systems rely on advanced perception, decision-making and control technologies, by using various sensors (such as cameras, lidar, radar, etc.) to perceive the surrounding environment, and using algorithms and models for real-time analysis and decision-making. This enables vehicles to recognize road signs, detect and track other vehicles, predict pedestrian behavior, etc., thereby safely operating and adapting to complex traffic environments. This technology is currently attracting widespread attention and is considered an important development area in the future of transportation. one. But what makes autonomous driving difficult is figuring out how to make the car understand what's going on around it. This requires that the three-dimensional object detection algorithm in the autonomous driving system can accurately perceive and describe objects in the surrounding environment, including their locations,

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

Trajectory prediction plays an important role in autonomous driving. Autonomous driving trajectory prediction refers to predicting the future driving trajectory of the vehicle by analyzing various data during the vehicle's driving process. As the core module of autonomous driving, the quality of trajectory prediction is crucial to downstream planning control. The trajectory prediction task has a rich technology stack and requires familiarity with autonomous driving dynamic/static perception, high-precision maps, lane lines, neural network architecture (CNN&GNN&Transformer) skills, etc. It is very difficult to get started! Many fans hope to get started with trajectory prediction as soon as possible and avoid pitfalls. Today I will take stock of some common problems and introductory learning methods for trajectory prediction! Introductory related knowledge 1. Are the preview papers in order? A: Look at the survey first, p

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

The latest from Oxford University! Mickey: 2D image matching in 3D SOTA! (CVPR\'24)

Apr 23, 2024 pm 01:20 PM

The latest from Oxford University! Mickey: 2D image matching in 3D SOTA! (CVPR\'24)

Apr 23, 2024 pm 01:20 PM

Project link written in front: https://nianticlabs.github.io/mickey/ Given two pictures, the camera pose between them can be estimated by establishing the correspondence between the pictures. Typically, these correspondences are 2D to 2D, and our estimated poses are scale-indeterminate. Some applications, such as instant augmented reality anytime, anywhere, require pose estimation of scale metrics, so they rely on external depth estimators to recover scale. This paper proposes MicKey, a keypoint matching process capable of predicting metric correspondences in 3D camera space. By learning 3D coordinate matching across images, we are able to infer metric relative

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

Please note that this square man is frowning, thinking about the identities of the "uninvited guests" in front of him. It turned out that she was in a dangerous situation, and once she realized this, she quickly began a mental search to find a strategy to solve the problem. Ultimately, she decided to flee the scene and then seek help as quickly as possible and take immediate action. At the same time, the person on the opposite side was thinking the same thing as her... There was such a scene in "Minecraft" where all the characters were controlled by artificial intelligence. Each of them has a unique identity setting. For example, the girl mentioned before is a 17-year-old but smart and brave courier. They have the ability to remember and think, and live like humans in this small town set in Minecraft. What drives them is a brand new,