The safety of artificial intelligence (AI) is being discussed around the world with unprecedented attention.

Not long ago, OpenAI co-founder and chief scientist Ilya Sutskever and OpenAI superalignment team co-lead Jan Leike left OpenAI one after another. Leike even published a series of posts on X saying that OpenAI and its leadership had neglected safety in favor of shiny products. This attracted widespread attention in the industry and, to some extent, highlighted how serious current AI safety issues are.

On May 21, an article published in Science called on world leaders to take stronger action against the risks of artificial intelligence (AI). The article noted that leading scientists and scholars, including Turing Award winners Yoshua Bengio, Geoffrey Hinton and Andrew Yao (Yao Qizhi), believe the progress made in recent months is not enough. In their view, AI technology is developing rapidly, but its development and application carry many potential risks, including threats to data privacy, the misuse of AI weapons, and the impact of AI on the job market. Governments must therefore strengthen oversight and legislation and formulate appropriate policies to manage and guide the development of AI. In addition, the article also stated:

We believe that the uncontrolled development of AI is likely to eventually lead to large-scale loss of life and of the biosphere, and to the marginalization or extinction of humanity.

In their view, the safety problems of AI models have risen to a level that threatens the future survival of humanity.

Likewise, the safety of AI models has become a topic that affects everyone and that everyone needs to care about.

May 22 is destined to be a major moment in the history of artificial intelligence: OpenAI, Google, Microsoft, Zhipu AI and other companies from different countries and regions jointly signed the Frontier AI Safety Commitments, and the Council of the European Union formally approved the Artificial Intelligence Act (AI Act), meaning the world's first comprehensive AI regulation is about to take effect.

Once again, AI safety has been raised at the policy level.

At the AI Seoul Summit, held under the theme of "safety, innovation, and inclusion", 16 companies from North America, Asia, Europe and the Middle East reached an agreement on safety commitments for AI development and jointly signed the Frontier AI Safety Commitments, which include the following points:

Turing Award winner Yoshua Bengio believes that the signing of the Frontier AI Safety Commitments "marks an important step in establishing an international governance system to promote artificial intelligence safety."

As a large model company from China, Zhipu AI also signed the new Frontier AI Safety Commitments. The complete list of signatories is as follows:

In this regard, Anna Makanju, Vice President of Global Affairs at OpenAI, said, "The Frontier AI Safety Commitments are important for promoting the wider implementation of safety practices for advanced AI systems." "These commitments will help establish important best practices on frontier AI safety among leading developers," said Tom Lue, general counsel and head of governance at Google DeepMind. "Along with advanced technology comes the important responsibility of ensuring AI safety."

Recently, Zhipu AI was also invited to the top AI conference ICLR 2024, where it shared its specific practices for AI safety in a keynote speech titled "The ChatGLM's Road to AGI".

They believe that superalignment technology will help improve the safety of large models, and have launched a superalignment program similar to OpenAI's, hoping to let machines learn how to learn and judge on their own, and in this way learn safe content.

They revealed that these safety measures are built into GLM-4V to prevent harmful or unethical behavior while protecting user privacy and data security. The subsequent upgrades to GLM-4, namely GLM-4.5 and its successor models, should also be based on superintelligence and superalignment technology.

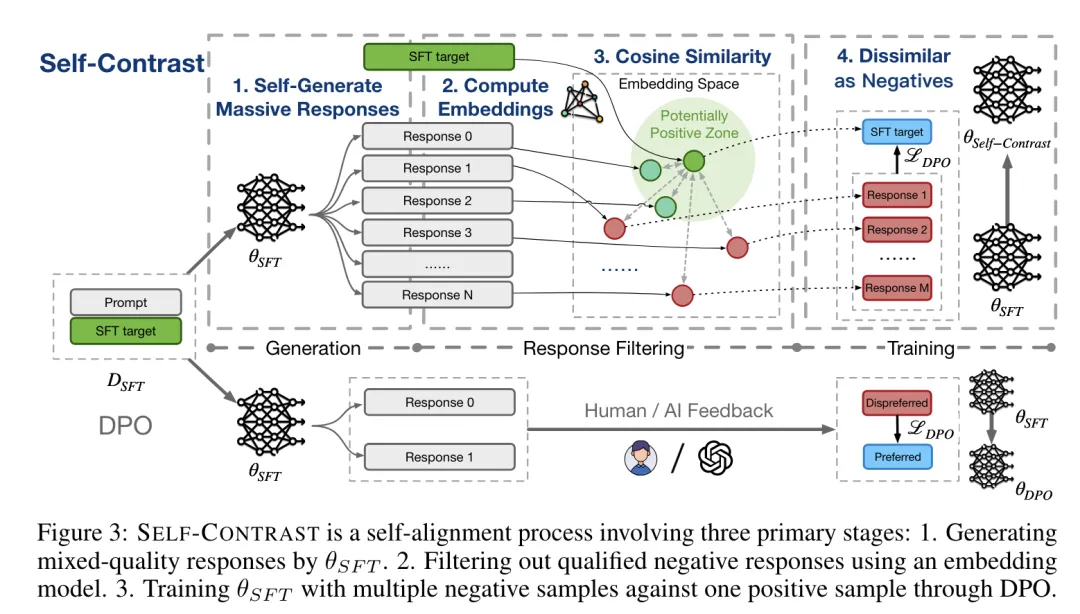

We also found that in a recently published paper, teams from Zhipu AI and Tsinghua University introduced Self-Contrast, a feedback-free alignment method for large language models that uses a large number of self-generated negative samples.

According to the paper, given only supervised fine-tuning (SFT) targets, Self-Contrast uses the LLM itself to generate a large number of diverse candidate responses, and then uses a pre-trained embedding model to filter multiple negative samples based on text similarity.
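The filtering step described above can be illustrated with a minimal Python sketch. It is not the paper's implementation: `toy_embed` is a hypothetical bag-of-words stand-in for the pre-trained embedding model, `select_negatives` and `keep_ratio` are names invented here, and the choice to keep the candidates least similar to the SFT target as negatives is an assumption about how the similarity filter is applied.

```python
import math
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Hypothetical stand-in for a pre-trained embedding model (bag of words)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def select_negatives(sft_target, candidates, keep_ratio=0.5, embed=toy_embed):
    """Keep the candidates least similar to the SFT target as negatives.

    Assumption: responses close to the target may actually be good answers,
    so only the least similar fraction (keep_ratio) is treated as negative.
    """
    target_vec = embed(sft_target)
    # Sort ascending by similarity; sorted() is stable, so ties keep input order.
    ranked = sorted(candidates, key=lambda c: cosine(target_vec, embed(c)))
    n_keep = max(1, int(len(ranked) * keep_ratio))
    return ranked[:n_keep]


# In practice the candidates would be sampled from the LLM itself.
target = "Paris is the capital of France"
candidates = [
    "The capital of France is Paris",
    "Paris is the capital of France",
    "I do not know",
    "Bananas are yellow",
]
negatives = select_negatives(target, candidates, keep_ratio=0.5)
print(negatives)
```

In a real pipeline, `toy_embed` would be replaced by a sentence-embedding model, and the selected negatives would be paired with the SFT target to form preference data.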

Direct preference optimization (DPO) experiments on three datasets show that Self-Contrast can consistently outperform SFT and standard DPO training by a large margin. Moreover, the performance of Self-Contrast continues to improve as the number of self-generated negative samples increases.
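For readers unfamiliar with DPO, the sketch below shows the standard DPO loss for a single (chosen, rejected) pair, computed from sequence log-probabilities under the policy and a frozen reference model. Averaging the pairwise loss over several negatives, as `multi_negative_dpo_loss` does, is an assumption made here for illustration and may differ from how the paper combines multiple negatives. The function names and the `beta` default are likewise illustrative.

```python
import math


def dpo_pair_loss(policy_chosen_logp, policy_rejected_logp,
                  ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one preference pair: -log(sigmoid(margin))."""
    margin = beta * ((policy_chosen_logp - ref_chosen_logp)
                     - (policy_rejected_logp - ref_rejected_logp))
    # -log(sigmoid(m)) = log(1 + exp(-m)), evaluated in a numerically stable way.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def multi_negative_dpo_loss(policy_chosen_logp, ref_chosen_logp,
                            policy_neg_logps, ref_neg_logps, beta=0.1):
    """Assumed aggregation: mean pairwise loss of the SFT target vs. each negative."""
    losses = [
        dpo_pair_loss(policy_chosen_logp, p_neg, ref_chosen_logp, r_neg, beta)
        for p_neg, r_neg in zip(policy_neg_logps, ref_neg_logps)
    ]
    return sum(losses) / len(losses)


# When the policy equals the reference model, every margin is 0 and the loss is log(2).
baseline = multi_negative_dpo_loss(-5.0, -5.0, [-7.0, -9.0], [-7.0, -9.0])

# A policy that raises the target and lowers the negatives gets a smaller loss.
improved = multi_negative_dpo_loss(-4.0, -5.0, [-8.0, -10.0], [-7.0, -9.0])
print(baseline, improved)
```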
