MIT's latest masterpiece: using GPT-3.5 to solve the problem of time series anomaly detection

Jun 08, 2024, 06:09 PM

Paper title: Large language models can be zero-shot anomaly detectors for time series?

Download address: https://arxiv.org/pdf/2405.14755v1

1. Overall introduction

This article, from MIT, uses LLMs (such as GPT-3.5-turbo and Mistral) for time series anomaly detection. The core lies in the design of the pipeline, which is divided into two main parts.

Time series data processing: convert the original time series into input that an LLM can understand, through discretization and other methods;

LLM-based anomaly detection pipeline: two pipelines are designed. One is a prompt-based method that asks the large model where the anomalies are, and the model returns the indices of the anomalous locations. The other is a prediction-based method that has the large model forecast the time series and then locates anomalies from the difference between the predicted and actual values.

Picture

2. Time series data processing

To adapt the time series to LLM input, the article converts the time series into a sequence of digits, which serves as the input to the LLM. The key question is how to retain as much of the original time series information as possible in the shortest possible length.

First, the minimum value is subtracted from every point of the original time series so that no negative values occur, because a negative sign would take up a token of its own. The decimal point of every value is then shifted by the same amount, and each value is kept to a fixed number of digits (such as 3 decimal places). Since GPT limits the maximum input length, the paper adopts a sliding-window strategy that divides the original sequence into overlapping subsequences and feeds them to the large model.
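
The steps above can be sketched as follows. This is a minimal illustration rather than the paper's implementation: the function names `scale_and_quantize` and `rolling_windows`, the default of 3 decimals, and the `window_size` and `step` parameters are all assumptions made for the example.

```python
def scale_and_quantize(values, decimals=3):
    """Shift the series so its minimum is 0, then drop the decimal point.

    `decimals` is an illustrative default, not a value taken from the paper.
    """
    lowest = min(values)
    factor = 10 ** decimals
    # Subtracting the minimum removes negative signs; multiplying by
    # 10**decimals and rounding turns every value into an integer.
    return [int(round((v - lowest) * factor)) for v in values]


def rolling_windows(sequence, window_size, step):
    """Split a sequence into windows that overlap when step < window_size.

    Trailing points that do not fill a whole window are left out here.
    """
    if window_size <= 0 or step <= 0:
        raise ValueError("window_size and step must be positive")
    last_start = max(len(sequence) - window_size, 0)
    return [sequence[i:i + window_size] for i in range(0, last_start + 1, step)]


quantized = scale_and_quantize([-0.5, 0.25, 1.0])
windows = rolling_windows([0, 1, 2, 3, 4, 5], window_size=4, step=2)
```

Here `quantized` holds non-negative integers, and consecutive entries of `windows` share two points because `step` is smaller than `window_size`.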

Because LLM tokenizers differ, a space is inserted between the digits of each number in the text so that every digit is forced into its own token instead of being split unpredictably. Later experiments also showed that adding spaces works better than not adding them. The following example shows the processing result:

Picture
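
As a rough sketch of the digit-spacing step, the snippet below turns a list of already-quantized integers into text. The function name `serialize` and the choice of a comma as the separator between values are assumptions for illustration; the article only states that a space is placed between digits.

```python
def serialize(values, digit_sep=" ", value_sep=","):
    """Render non-negative integers as text, separating the digits of each value.

    With digit_sep="" the numbers are written without the extra spaces.
    """
    return value_sep.join(digit_sep.join(str(v)) for v in values)


spaced = serialize([0, 750, 1500])
unspaced = serialize([0, 750, 1500], digit_sep="")
```

Keeping `digit_sep` as a parameter makes it easy to compare the spaced and unspaced variants on a given model.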

Different data processing methods produce different results when used with different large models, as shown in the figure below.

Picture

3. Anomaly detection pipeline

The article proposes two LLM-based anomaly detection pipelines. The first, PROMPTER, converts the anomaly detection problem into a prompt, feeds it to the large model, and lets the model give the answer directly. The second, DETECTOR, has the large model forecast the time series and then identifies anomalous points from the difference between the forecast and the true values.

Picture

PROMPTER: The following table shows how the prompt was iterated in the article. Starting from the simplest prompt, the authors repeatedly identified problems in the results returned by the LLM and refined the prompt; after 5 iterations they arrived at the final prompt. With this prompt, the model can directly output the indices of the anomalous locations.

Picture
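
Since the model replies with indices relative to the window it was shown, the reply has to be parsed and mapped back to positions in the full series. The sketch below shows one way this could be done. It assumes the reply is free text containing integer indices; the function name `parse_anomaly_indices` and its parameters are hypothetical, and the actual prompt and reply format used in the paper are not reproduced here.

```python
import re


def parse_anomaly_indices(reply, window_start, window_length):
    """Extract integer indices from a model reply and map them to global positions.

    Indices outside the window are discarded, since the model cannot
    legitimately point past the data it was given.
    """
    local = {int(token) for token in re.findall(r"\d+", reply)}
    return sorted(window_start + i for i in local if i < window_length)


# Hypothetical reply for a window that starts at position 100 and holds 200 points.
found = parse_anomaly_indices("Anomalies at indices: 3, 7, 250", 100, 200)
```

The out-of-range index 250 is dropped, and the two valid indices are shifted by the window's starting position.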

DETECTOR: Much previous work has used large models for time series forecasting. With the time series processed as described in this article, a large model can generate forecasts directly. Because the windows overlap, a time step can receive several forecasts from different windows; the median of these is taken, and the difference between the forecast and the actual values is then used as the basis for anomaly detection.
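
A minimal sketch of this aggregation step follows. The names `aggregate_forecasts` and `flag_anomalies`, the `(start_index, predictions)` input layout, and the fixed absolute-error `threshold` are assumptions for illustration; the article does not specify how the error is thresholded.

```python
from collections import defaultdict
from statistics import median


def aggregate_forecasts(window_forecasts):
    """Combine forecasts from overlapping windows into one value per time step.

    `window_forecasts` is a list of (start_index, predictions) pairs, where
    predictions[k] is the forecast for time step start_index + k.
    """
    by_index = defaultdict(list)
    for start, predictions in window_forecasts:
        for offset, value in enumerate(predictions):
            by_index[start + offset].append(value)
    # The median damps the effect of a single poor forecast for a time step.
    return {index: median(values) for index, values in by_index.items()}


def flag_anomalies(actual, forecast, threshold):
    """Return time steps whose absolute forecast error exceeds the threshold."""
    return [i for i in sorted(forecast) if abs(actual[i] - forecast[i]) > threshold]


forecasts = [(2, [10, 11, 12]), (3, [13, 12, 50]), (4, [12, 13])]
combined = aggregate_forecasts(forecasts)
anomalies = flag_anomalies([0, 0, 10, 12, 40, 14], combined, threshold=20)
```

In this toy input only time step 4 is flagged: its observed value of 40 is far from the median forecast of 12, while the other errors stay under the threshold.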

4. Experimental results

Experimental comparison shows that the LLM-based anomaly detection methods outperform the Transformer-based anomaly detection model by 12.5%. AER (AER: Auto-Encoder with Regression for Time Series Anomaly Detection), the most effective deep learning-based anomaly detection method, is still 30% better than the LLM-based methods. In addition, the DETECTOR pipeline performs better than the PROMPTER pipeline.

Picture

In addition, the article also visualizes the anomaly detection process of the large model, as shown below.

Picture

The above is the detailed content of MIT's latest masterpiece: using GPT-3.5 to solve the problem of time series anomaly detection. For more information, please follow other related articles on the PHP Chinese website!

Hot Article

Hot tools Tags

Hot Article

Hot Article Tags

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

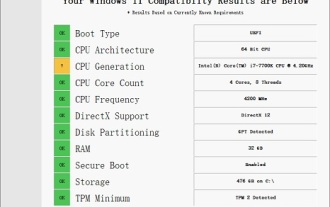

Solution to i7-7700 unable to upgrade to Windows 11

Dec 26, 2023 pm 06:52 PM

Solution to i7-7700 unable to upgrade to Windows 11

Dec 26, 2023 pm 06:52 PM

Solution to i7-7700 unable to upgrade to Windows 11

Methods to solve Java reflection exception (ReflectiveOperationException)

Aug 26, 2023 am 09:55 AM

Methods to solve Java reflection exception (ReflectiveOperationException)

Aug 26, 2023 am 09:55 AM

Methods to solve Java reflection exception (ReflectiveOperationException)

CMU conducted a detailed comparative study and found that GPT-3.5 is superior to Gemini Pro, ensuring fair, transparent and reproducible performance

Dec 21, 2023 am 08:13 AM

CMU conducted a detailed comparative study and found that GPT-3.5 is superior to Gemini Pro, ensuring fair, transparent and reproducible performance

Dec 21, 2023 am 08:13 AM

CMU conducted a detailed comparative study and found that GPT-3.5 is superior to Gemini Pro, ensuring fair, transparent and reproducible performance

MIT's latest masterpiece: using GPT-3.5 to solve the problem of time series anomaly detection

Jun 08, 2024 pm 06:09 PM

MIT's latest masterpiece: using GPT-3.5 to solve the problem of time series anomaly detection

Jun 08, 2024 pm 06:09 PM

MIT's latest masterpiece: using GPT-3.5 to solve the problem of time series anomaly detection

How to solve Java thread interrupt timeout exception (ThreadInterruptedTimeoutExceotion)

Aug 18, 2023 pm 01:57 PM

How to solve Java thread interrupt timeout exception (ThreadInterruptedTimeoutExceotion)

Aug 18, 2023 pm 01:57 PM

How to solve Java thread interrupt timeout exception (ThreadInterruptedTimeoutExceotion)

A guide to the unusual missions in the Rise of Ronin Pool

Mar 26, 2024 pm 08:06 PM

A guide to the unusual missions in the Rise of Ronin Pool

Mar 26, 2024 pm 08:06 PM

A guide to the unusual missions in the Rise of Ronin Pool

How to solve Java network connection reset exception (ConnectionResetException)

Aug 26, 2023 pm 07:57 PM

How to solve Java network connection reset exception (ConnectionResetException)

Aug 26, 2023 pm 07:57 PM

How to solve Java network connection reset exception (ConnectionResetException)

Improved detection algorithm: for target detection in high-resolution optical remote sensing images

Jun 06, 2024 pm 12:33 PM

Improved detection algorithm: for target detection in high-resolution optical remote sensing images

Jun 06, 2024 pm 12:33 PM

Improved detection algorithm: for target detection in high-resolution optical remote sensing images