Produced | 51CTO Technology Stack (WeChat ID: blog51cto)

Mistral has released its first code model, Codestral-22B!

What makes this model remarkable is not just that it was trained on more than 80 programming languages, including languages such as Swift that many code models ignore. It is also fast.

In one side-by-side test, both models were asked to write a "publish/subscribe" system in Go. While GPT-4o was still producing its output, Codestral had already handed in its answer, so quickly that it was hard to follow!

Because the model has only just launched, it has not yet been independently tested. According to a Mistral representative, however, Codestral is currently the best-performing open-source code model.

Interested readers can find it here:

- Hugging Face: https://huggingface.co/mistralai/Codestral-22B-v0.1

- Blog: https://mistral.ai/news/codestral/

According to the blog post, Codestral outperforms competitors including CodeLlama 70B, DeepSeek Coder 33B and Llama 3 70B on long-context and multi-language benchmarks.

Let's take a closer look at where Codestral, the new "king" of code models, is strong.

As a 22B-parameter model, Codestral sets a new standard for the performance/latency trade-off in code generation. Codestral 22B has a 32K context window, allowing developers to write and interact with code across a variety of programming environments and projects.

Above: with a 32K context window (versus its competitors' 4K, 8K or 16K), Codestral outperforms all other models on RepoBench, a long-range evaluation for code generation.

Codestral was trained on a dataset covering more than 80 programming languages, which makes it suitable for a wide range of programming tasks: generating code from scratch, completing functions, writing tests, and completing any partial code through a fill-in-the-middle mechanism.
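The fill-in-the-middle idea can be illustrated with a small sketch: the editor sends the code before the cursor (the prefix) and the code after it (the suffix), and the model returns only the missing middle. The names below (`FimRequest`, `split_at_cursor`, `splice`) are hypothetical helpers for illustration, not part of any Mistral SDK, and the "middle" is written by hand where a real model would generate it.

```python
from dataclasses import dataclass


@dataclass
class FimRequest:
    """Hypothetical container for a fill-in-the-middle (FIM) request."""
    prompt: str  # code before the cursor (prefix)
    suffix: str  # code after the cursor


def split_at_cursor(source: str, cursor: int) -> FimRequest:
    """Split a file at a character offset into the FIM prefix and suffix."""
    if not 0 <= cursor <= len(source):
        raise ValueError(f"cursor {cursor} is outside 0..{len(source)}")
    return FimRequest(prompt=source[:cursor], suffix=source[cursor:])


def splice(request: FimRequest, middle: str) -> str:
    """Reassemble the file once a model has produced the missing middle."""
    return request.prompt + middle + request.suffix


# The cursor sits on the empty, indented body line of add().
code = "def add(a, b):\n    \n\nprint(add(2, 3))\n"
cursor = code.index("    \n") + 4
req = split_at_cursor(code, cursor)

# A model would generate the middle; here it is written by hand for illustration.
completed = splice(req, "return a + b")
print(completed)
```

Because the model sees both sides of the gap, it can produce a body that fits the surrounding code, which is what distinguishes FIM from plain left-to-right completion.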

The languages it covers include popular ones such as SQL, Python, Java, C and C++, as well as more niche ones such as Swift and Fortran, making it a true generalist of the programming world.

Mistral said Codestral can help developers level up their coding, speed up their workflows, and save considerable time and effort when building applications, while also helping to reduce the risk of errors and vulnerabilities.

Above: Codestral's HumanEval performance across different programming languages

On HumanEval for Python code generation and on CruxEval for Python output prediction, the model beat the competition with scores of 81.1% and 51.3%, respectively. It also took first place on HumanEval for Bash, Java and PHP.

Notably, the model did not score highest on HumanEval for C++, C and TypeScript, but its average across all tests was the highest at 61.5%, slightly above Llama 3 70B's 61.2%. On Spider, which evaluates SQL performance, it ranked second with a score of 63.5%.

Several popular developer-productivity and AI application development tools have already started testing Codestral, including big names such as LlamaIndex, LangChain, Continue.dev, Tabnine and JetBrains.

"From our initial testing, it's a great choice for code generation workflows because it's fast, has a favorable context window, and the instruct version supports tool use. We tested self-corrective code generation with LangGraph, using instruct Codestral's tool use for the output, and it worked really well out of the box," said Harrison Chase, CEO and co-founder of LangChain.

Mistral has also worked with several industry partners on Codestral, including JetBrains, Sourcegraph and LlamaIndex. Describing his tests, LlamaIndex CEO Jerry Liu said: "So far, it has consistently produced highly accurate and functional code, even for complex tasks. For example, when I asked it to complete a nontrivial function for creating a new LlamaIndex query engine, the code it generated worked seamlessly, despite being based on an older codebase."

Mistral offers Codestral 22B on Hugging Face under its own non-commercial license, which lets developers use the model for non-commercial purposes such as testing and research.

The company also makes the model available through two API endpoints: codestral.mistral.ai and api.mistral.ai.

The former is intended for users who want to use Codestral's Instruct or Fill-In-the-Middle routes inside their IDE. It comes with a personal API key that is not subject to the usual organization-level rate limits, and it is free to use during an eight-week beta period. The latter, api.mistral.ai, is the general endpoint for broader research, batch queries, or third-party application development, and queries there are billed per token.
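As a rough sketch of how an IDE plugin might call the fill-in-the-middle route, the Python below only builds the HTTP request and does not send it. It assumes the path `/v1/fim/completions` on both hosts, the model name `codestral-latest`, the JSON fields `prompt`, `suffix` and `max_tokens`, Bearer-token authentication, and an environment variable named `CODESTRAL_API_KEY`. These reflect Mistral's API documentation as we understand it and should be checked against the current docs before use.

```python
import json
import os
import urllib.request

# Assumed endpoint paths; verify against Mistral's current API documentation.
ENDPOINTS = {
    "ide": "https://codestral.mistral.ai/v1/fim/completions",  # personal key, IDE use
    "general": "https://api.mistral.ai/v1/fim/completions",    # billed per token
}


def build_fim_request(endpoint: str, api_key: str, prompt: str, suffix: str,
                      model: str = "codestral-latest",
                      max_tokens: int = 64) -> urllib.request.Request:
    """Build (but do not send) a FIM completion request for the chosen endpoint."""
    body = {"model": model, "prompt": prompt, "suffix": suffix, "max_tokens": max_tokens}
    return urllib.request.Request(
        ENDPOINTS[endpoint],
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


# Hypothetical key variable; falls back to a dummy value so the sketch runs offline.
req = build_fim_request("ide", os.environ.get("CODESTRAL_API_KEY", "dummy-key"),
                        prompt="def add(a, b):\n    ",
                        suffix="\n\nprint(add(2, 3))\n")
print(req.full_url, req.get_method())

# Sending it requires network access and a valid key (not run here):
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))
```

Keeping the endpoint as a parameter mirrors the split described above: the same request body can target the free IDE-oriented endpoint or the general, per-token-billed one.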

More interestingly, Mistral has made an instruct version of Codestral available in Le Chat, its free conversational interface. Developers can interact with Codestral there naturally and intuitively, taking full advantage of the model's capabilities.

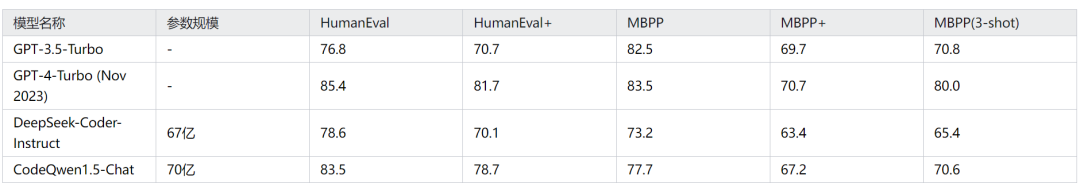

Among domestic (Chinese) large models there are also code models with impressive performance, such as CodeQwen1.5-7B, the 7-billion-parameter model that Alibaba open-sourced not long ago.

On HumanEval, CodeQwen1.5-7B-Chat even scored higher than the early version of GPT-4, and only slightly lower than GPT-4-Turbo (November 2023 version).

The above is the full content of "Mistral's open-source code model takes the throne! Codestral is trained on over 80 languages, and domestic Tongyi developers are asking to join in!". For more, see other related articles on the PHP Chinese website.
