Expected to be the first GPU of Intel's next-generation discrete graphics: the bmg_g21 core appears in an LLVM update for the first time

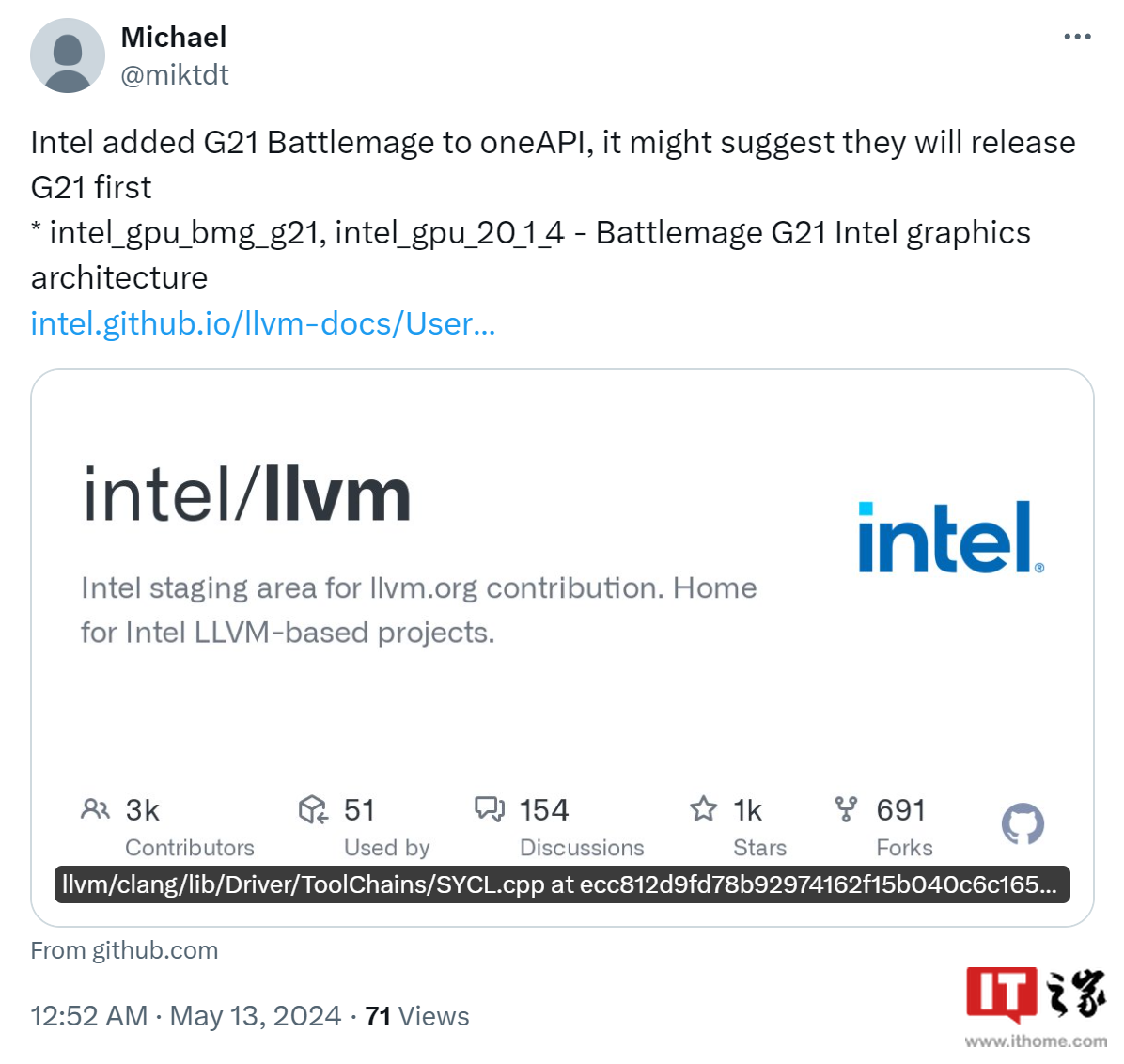

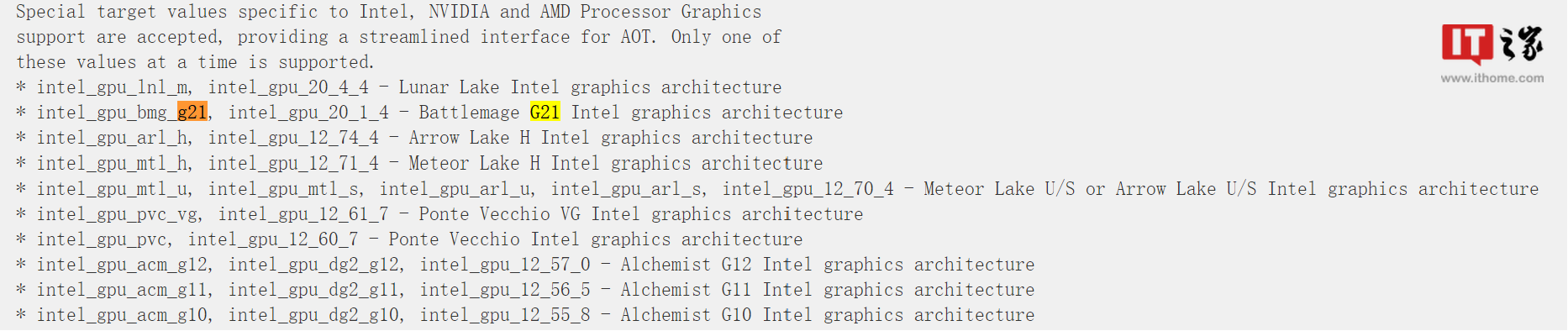

According to a report from this site on May 13, X user @miktdt discovered that Intel recently added code related to the bmg_g21 (Battlemage G21, BMG-G21) core to the LLVM documentation of the oneAPI DPC++ compiler.

This is also the first time that code related to Battlemage-architecture discrete graphics has appeared in the documentation, suggesting that the Battlemage G21 GPU is likely to be the first model of Intel's next-generation discrete graphics.

This site noticed that the GPU architecture of bmg_g21 is listed as intel_gpu_20_1_4 in the documentation. This differs from the architecture ID of lnl_m (Lunar Lake-M), the processor believed to use the integrated-graphics version of the Battlemage architecture, but the two share the same "intel_gpu_20" prefix.

Following Intel's usual naming conventions for graphics architectures, the Battlemage architecture is expected to be called Gen20 internally, with bmg_g21 and lnl_m using the Gen20.1 and Gen20.4 variants respectively.
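
The naming scheme described above can be illustrated with a small Python sketch that splits an architecture ID of the form intel_gpu_<major>_<minor>_<revision> into its numeric parts. The pattern is an assumption inferred from the intel_gpu_20_1_4 string in the documentation, the helper names (GpuArch, parse_arch) are hypothetical, and the full ID used here for lnl_m (intel_gpu_20_4_4) is assumed for illustration rather than quoted from the report.

```python
import re
from typing import NamedTuple


class GpuArch(NamedTuple):
    major: int
    minor: int
    revision: int

    def gen_label(self) -> str:
        # Informal "GenMAJOR.MINOR" label, matching the Gen20.x naming discussed above
        return f"Gen{self.major}.{self.minor}"


# Assumed pattern: intel_gpu_<major>_<minor>_<revision>
_ARCH_RE = re.compile(r"^intel_gpu_(\d+)_(\d+)_(\d+)$")


def parse_arch(arch_id: str) -> GpuArch:
    """Hypothetical helper: split a numeric architecture ID into its parts."""
    m = _ARCH_RE.match(arch_id)
    if m is None:
        raise ValueError(f"not a numeric Intel GPU architecture ID: {arch_id!r}")
    return GpuArch(*(int(g) for g in m.groups()))


# bmg_g21's ID is from the report; lnl_m's full ID is an assumption
ARCH_IDS = {
    "bmg_g21": "intel_gpu_20_1_4",
    "lnl_m": "intel_gpu_20_4_4",  # hypothetical, for illustration only
}

if __name__ == "__main__":
    for name, arch_id in ARCH_IDS.items():
        arch = parse_arch(arch_id)
        # Both IDs share major version 20, i.e. the "intel_gpu_20" prefix
        print(name, arch_id, arch.gen_label(), "Gen20 family:", arch.major == 20)
```

Under these assumptions, bmg_g21 maps to Gen20.1 and lnl_m to Gen20.4, and the shared major number is what the common "intel_gpu_20" prefix reflects.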

Intel's first-generation discrete graphics cards were released in 2022, roughly two years ago. Intel appears likely to launch a new generation of discrete graphics cards before the Western holiday shopping season at the end of this year. It is unclear whether they will be released before the Lunar Lake processors.

The above is the detailed content of It is expected to become the first GPU of Intel's next generation independent graphics, and the bmg_g21 core is the first to appear in LLVM update.. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1664

1664

14

14

1423

1423

52

52

1318

1318

25

25

1269

1269

29

29

1248

1248

24

24

How to turn off win10gpu shared memory

Jan 12, 2024 am 09:45 AM

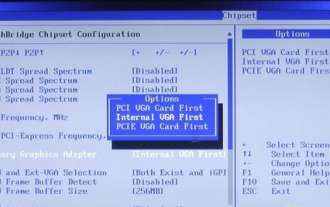

How to turn off win10gpu shared memory

Jan 12, 2024 am 09:45 AM

Friends who know something about computers must know that GPUs have shared memory, and many friends are worried that shared memory will reduce the number of memory and affect the computer, so they want to turn it off. Here is how to turn it off. Let's see. Turn off win10gpu shared memory: Note: The shared memory of the GPU cannot be turned off, but its value can be set to the minimum value. 1. Press DEL to enter the BIOS when booting. Some motherboards need to press F2/F9/F12 to enter. There are many tabs at the top of the BIOS interface, including "Main, Advanced" and other settings. Find the "Chipset" option. Find the SouthBridge setting option in the interface below and click Enter to enter.

News says AMD will launch new RX 7700M / 7800M laptop GPU

Jan 06, 2024 pm 11:30 PM

News says AMD will launch new RX 7700M / 7800M laptop GPU

Jan 06, 2024 pm 11:30 PM

According to news from this site on January 2, according to TechPowerUp, AMD will soon launch notebook graphics cards based on Navi32 GPU. The specific models may be RX7700M and RX7800M. Currently, AMD has launched a variety of RX7000 series notebook GPUs, including the high-end RX7900M (72CU) and the mainstream RX7600M/7600MXT (28/32CU) series and RX7600S/7700S (28/32CU) series. Navi32GPU has 60CU. AMD may make it into RX7700M and RX7800M, or it may make a low-power RX7900S model. AMD is expected to

What does shared gpu memory mean?

Mar 07, 2023 am 10:17 AM

What does shared gpu memory mean?

Mar 07, 2023 am 10:17 AM

Shared gpu memory means the priority memory capacity specially divided by the WINDOWS10 system for the graphics card; when the graphics card memory is not enough, the system will give priority to this part of the "shared GPU memory"; in the WIN10 system, half of the physical memory capacity will be divided into "Shared GPU memory".

Do I need to enable GPU hardware acceleration?

Feb 26, 2024 pm 08:45 PM

Do I need to enable GPU hardware acceleration?

Feb 26, 2024 pm 08:45 PM

Is it necessary to enable hardware accelerated GPU? With the continuous development and advancement of technology, GPU (Graphics Processing Unit), as the core component of computer graphics processing, plays a vital role. However, some users may have questions about whether hardware acceleration needs to be turned on. This article will discuss the necessity of hardware acceleration for GPU and the impact of turning on hardware acceleration on computer performance and user experience. First, we need to understand how hardware-accelerated GPUs work. GPU is a specialized

Beelink EX graphics card expansion dock promises zero GPU performance loss

Aug 11, 2024 pm 09:55 PM

Beelink EX graphics card expansion dock promises zero GPU performance loss

Aug 11, 2024 pm 09:55 PM

One of the standout features of the recently launched Beelink GTi 14is that the mini PC has a hidden PCIe x8 slot underneath. At launch, the company said that this would make it easier to connect an external graphics card to the system. Beelink has n

AMD FSR 3.1 launched: frame generation feature also works on Nvidia GeForce RTX and Intel Arc GPUs

Jun 29, 2024 am 06:57 AM

AMD FSR 3.1 launched: frame generation feature also works on Nvidia GeForce RTX and Intel Arc GPUs

Jun 29, 2024 am 06:57 AM

AMD delivers on its initial March ‘24 promise to launch FSR 3.1 in Q2 this year. What really sets the 3.1 release apart is the decoupling of the frame generation side from the upscaling one. This allows Nvidia and Intel GPU owners to apply the FSR 3.

OpenGL rendering gpu should choose automatic or graphics card?

Feb 27, 2023 pm 03:35 PM

OpenGL rendering gpu should choose automatic or graphics card?

Feb 27, 2023 pm 03:35 PM

Select "Auto" for opengl rendering gpu; generally select the automatic mode for opengl rendering. The rendering will be automatically selected according to the actual hardware of the computer; if you want to specify, then specify the appropriate graphics card, because the graphics card is more suitable for rendering 2D and 3D vector graphics Content, support for OpenGL general computing API is stronger than CPU.

Honor X7b 5G officially released with 6000mAh battery + 100 million pixels!

Apr 03, 2024 am 08:20 AM

Honor X7b 5G officially released with 6000mAh battery + 100 million pixels!

Apr 03, 2024 am 08:20 AM

Recently, CNMO noticed that Honor X7b5G was officially released in overseas markets. Many configurations of this model are similar to the Honor Play 50Plus on the Chinese market, but it has changes in the imaging system and some designs. Honor X7b5G is equipped with MediaTek’s Dimensity 700 processor. Dimensity 700 is built using TSMC’s 7nm process and has an 8-core CPU design, consisting of 2 A76 large cores + 6 A55 small cores. Among them, the A76 core frequency is as high as 2.2GHz, and the A55 core frequency is 2.0GHz. In terms of GPU, Dimensity 700 has built-in Mali-G57MC2 with a frequency of up to 950MHz. The processor has been optimized to have excellent performance and low energy consumption, and can bring Honor X7b5G