Large-model app Tencent Yuanbao launches: upgraded Hunyuan powers an all-round AI assistant you can carry anywhere

On May 30, Tencent announced a comprehensive upgrade of its Hunyuan large model and officially launched Tencent Yuanbao, an app built on that model. The app is available for download from both the Apple and Android app stores.

Compared with the Hunyuan mini program released during the earlier testing phase, Tencent Yuanbao provides core capabilities such as AI search, AI summarization, and AI writing for work-efficiency scenarios. For daily-life scenarios, it offers more to do, with several featured AI applications and new features such as creating personal agents.

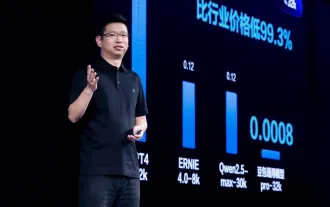

"Tencent is not racing to be the first to build a large model," said Liu Yuhong, vice president of Tencent Cloud and head of the Tencent Hunyuan large model. "Over the past year, we have kept advancing the capabilities of the Hunyuan large model, polishing the technology across rich, large-scale business scenarios and gaining insight into users' real needs. We hope Tencent Yuanbao can become a good partner and helper in users' lives and ultimately serve the life of every ordinary person."

Large models are still developing rapidly, and there is a considerable lag between model capabilities and their implementation in applications. Data shows that more than 65% of the demand among people currently using large-model products is concentrated in work and study efficiency scenarios, yet the related AI product solutions are not yet mature.

At this stage, large models have achieved notable results in fields such as speech recognition and natural language processing, in applications such as voice assistants and intelligent translation. However, they still face challenges in complex real-world scenarios. For example, there is room for improvement in handling multilingual and diverse data, as well as in handling negative emotions and semantic understanding.

Tencent Yuanbao has explored product solutions for each of the three core needs in efficiency scenarios: information acquisition, processing, and production.

For AI search, Tencent Yuanbao connects to search engines such as WeChat Souyisou and Sogou Search and uses AI enhancement to improve answers to time-sensitive and knowledge-based questions, making it more efficient than traditional search. Its content covers Tencent ecosystem content such as WeChat official accounts, as well as authoritative Internet sources, which makes answers more accurate. Yuanbao also lists the reference materials it cites and gives related recommendations, so users can quickly trace sources and read further.

For AI summarization, Yuanbao lets users upload up to 10 documents in formats such as PDF, Word, and TXT, and can analyze multiple WeChat official account links and URLs together. It supports a native 256K context window, equivalent to the whole of "Romance of the Three Kingdoms" or the complete original English "Harry Potter" series. Whether you want to quickly understand a book or a new field, or deal with complex reports and documents, Yuanbao can help.

For AI writing, Yuanbao supports multi-turn Q&A and can also organize the content of a conversation into a report with structured output on request, greatly improving efficiency across the whole process from information acquisition to processing to production.
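The two limits quoted above (up to 10 documents, a 256K context window) can be illustrated with a small pre-upload check. This is only a sketch under stated assumptions, not Yuanbao's actual behavior: the `Document` type, the function names, the reading of "256K" as 256 × 1024 tokens, and the characters-per-token ratio are all hypothetical choices made for illustration.

```python
import math
from dataclasses import dataclass

MAX_DOCUMENTS = 10                   # limit stated in the article
CONTEXT_WINDOW_TOKENS = 256 * 1024   # "256K"; reading K as 1024 is an assumption


@dataclass
class Document:
    # Hypothetical container for illustration only.
    name: str
    text: str


def estimate_tokens(text: str, chars_per_token: float = 2.0) -> int:
    """Very rough token estimate; the 2.0 chars-per-token ratio is an assumption."""
    return math.ceil(len(text) / chars_per_token)


def check_batch(docs: list[Document]) -> list[str]:
    """Return human-readable problems; an empty list means the batch fits."""
    problems = []
    if len(docs) > MAX_DOCUMENTS:
        problems.append(f"too many documents: {len(docs)} > {MAX_DOCUMENTS}")
    total = sum(estimate_tokens(d.text) for d in docs)
    if total > CONTEXT_WINDOW_TOKENS:
        problems.append(
            f"estimated {total} tokens exceeds {CONTEXT_WINDOW_TOKENS}"
        )
    return problems
```

A real client would need the model's own tokenizer to count tokens. The heuristic here only shows how a document-count limit and a context-size limit combine.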

Beyond efficiency needs, Tencent Yuanbao also offers a wealth of applications and features for daily-life scenarios. The "Discovery" section has been fully upgraded with several featured applications, including an AI avatar generator, spoken-language training, and a super translator, all free and open to the public. With a single photo, users can try multiple styles in the AI avatar generator. The super translator recognizes 15 mainstream languages, translates text, pictures, and documents, and supports simultaneous interpretation between Chinese and English. Spoken-language training works like a private foreign tutor, suggesting improvements to your spoken English as you practice.

Yuanbao also lets users quickly create personal agents tailored to their needs. Users can assign a role setting themselves or let AI generate the agent's information automatically, and can reproduce their own voice timbre. Drawing on Tencent's ecosystem, Yuanbao will also soon launch featured agents such as Tencent News Brother and one themed on "Celebrating More Than Years". Li Meng, a big data expert at the Chinese Academy of Sciences, said: "Tencent Yuanbao is a powerful and easy-to-use AI assistant that brings users a convenient and efficient intelligent experience. It can understand natural language and provide intelligent answers and suggestions, which saves users a lot of time and energy in many scenarios."

In addition to offering customized agents on Yuanbao, Tencent Hunyuan is actively building an agent ecosystem and has launched Tencent Yuanqi, a one-stop agent creation and distribution platform for developers and enterprises. Tencent Yuanqi is now fully open to users.

Tencent Yuanqi provides a wealth of official plug-ins and knowledge bases, lowering the barrier to creating customized agents. Agents can be distributed with one click to Tencent ecosystem channels such as Tencent Yuanbao, WeChat customer service, QQ, and Tencent Cloud, and distribution to WeChat official accounts and mini programs will be supported in June. All Hunyuan model resources are free. Yuanqi also lets users distribute agents to other scenarios through an API, and the free token quota has been raised from 1 million to 100 million.

Behind the upgrade of Tencent Yuanbao's product capabilities is the continuous iteration of the underlying Hunyuan model.

Since its debut in September 2023, the Tencent Hunyuan large model has grown from 100 billion to 1 trillion parameters, and its pre-training corpus has grown from 1 trillion to 7 trillion tokens. It was also the first to move to a mixture-of-experts (MoE) architecture, improving overall performance by more than 50% over the dense version. Beyond continuously improving general large-model capabilities, Tencent Hunyuan also supports areas such as role-playing, function calling, and code generation, and its mathematical capabilities have improved by 50%.

In terms of multimodality, Tencent's Hunyuan text-to-image large model is the industry's first Chinese-native model built on the DiT architecture, the same architecture used by leading industry products such as Sora and Stable Diffusion 3. Its generation quality is more than 20% better than that of the previous generation. The model has been fully open-sourced and has received more than 2,000 stars on GitHub. The related capabilities have also been fully integrated into Tencent Yuanbao.

In addition, the Tencent Hunyuan large model continues to explore video and 3D generation. It currently supports generating 16-second videos and can produce a 3D model from a single image in just 30 seconds. These capabilities will also be launched in Yuanbao later.

More than 600 businesses and scenarios within Tencent are now connected to Tencent Hunyuan. Tencent Advertising, WeChat Reading, Tencent Meeting, Tencent Docs, Tencent Customer Service, and others have all been intelligently upgraded on the basis of Hunyuan. Tencent's wide range of application scenarios has in turn helped improve the large model's capabilities.

It is understood that, to meet the needs of developers and enterprise customers for general model capabilities, the Tencent Hunyuan large model has been opened to the public through Tencent Cloud. It can be called through an API or used as a base model to build dedicated applications for different industry scenarios.

Jun 09, 2024, 10:38 PM

Big model app Tencent Yuanbao is online! Hunyuan is upgraded to create an all-round AI assistant that can be carried anywhere

Jun 09, 2024 pm 10:38 PM

On May 30, Tencent announced a comprehensive upgrade of its Hunyuan model. The App "Tencent Yuanbao" based on the Hunyuan model was officially launched and can be downloaded from Apple and Android app stores. Compared with the Hunyuan applet version in the previous testing stage, Tencent Yuanbao provides core capabilities such as AI search, AI summary, and AI writing for work efficiency scenarios; for daily life scenarios, Yuanbao's gameplay is also richer and provides multiple features. AI application, and new gameplay methods such as creating personal agents are added. "Tencent does not strive to be the first to make large models." Liu Yuhong, vice president of Tencent Cloud and head of Tencent Hunyuan large model, said: "In the past year, we continued to promote the capabilities of Tencent Hunyuan large model. In the rich and massive Polish technology in business scenarios while gaining insights into users’ real needs

Bytedance Beanbao large model released, Volcano Engine full-stack AI service helps enterprises intelligently transform

Jun 05, 2024 pm 07:59 PM

Bytedance Beanbao large model released, Volcano Engine full-stack AI service helps enterprises intelligently transform

Jun 05, 2024 pm 07:59 PM

Tan Dai, President of Volcano Engine, said that companies that want to implement large models well face three key challenges: model effectiveness, inference costs, and implementation difficulty: they must have good basic large models as support to solve complex problems, and they must also have low-cost inference. Services allow large models to be widely used, and more tools, platforms and applications are needed to help companies implement scenarios. ——Tan Dai, President of Huoshan Engine 01. The large bean bag model makes its debut and is heavily used. Polishing the model effect is the most critical challenge for the implementation of AI. Tan Dai pointed out that only through extensive use can a good model be polished. Currently, the Doubao model processes 120 billion tokens of text and generates 30 million images every day. In order to help enterprises implement large-scale model scenarios, the beanbao large-scale model independently developed by ByteDance will be launched through the volcano

Benchmark GPT-4! China Mobile's Jiutian large model passed dual registration

Apr 04, 2024 am 09:31 AM

Benchmark GPT-4! China Mobile's Jiutian large model passed dual registration

Apr 04, 2024 am 09:31 AM

According to news on April 4, the Cyberspace Administration of China recently released a list of registered large models, and China Mobile’s “Jiutian Natural Language Interaction Large Model” was included in it, marking that China Mobile’s Jiutian AI large model can officially provide generative artificial intelligence services to the outside world. . China Mobile stated that this is the first large-scale model developed by a central enterprise to have passed both the national "Generative Artificial Intelligence Service Registration" and the "Domestic Deep Synthetic Service Algorithm Registration" dual registrations. According to reports, Jiutian’s natural language interaction large model has the characteristics of enhanced industry capabilities, security and credibility, and supports full-stack localization. It has formed various parameter versions such as 9 billion, 13.9 billion, 57 billion, and 100 billion, and can be flexibly deployed in Cloud, edge and end are different situations

Advanced practice of industrial knowledge graph

Jun 13, 2024 am 11:59 AM

Advanced practice of industrial knowledge graph

Jun 13, 2024 am 11:59 AM

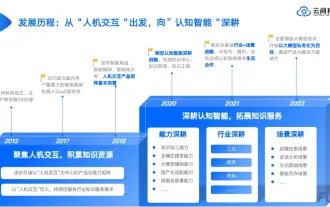

1. Background Introduction First, let’s introduce the development history of Yunwen Technology. Yunwen Technology Company...2023 is the period when large models are prevalent. Many companies believe that the importance of graphs has been greatly reduced after large models, and the preset information systems studied previously are no longer important. However, with the promotion of RAG and the prevalence of data governance, we have found that more efficient data governance and high-quality data are important prerequisites for improving the effectiveness of privatized large models. Therefore, more and more companies are beginning to pay attention to knowledge construction related content. This also promotes the construction and processing of knowledge to a higher level, where there are many techniques and methods that can be explored. It can be seen that the emergence of a new technology does not necessarily defeat all old technologies. It is also possible that the new technology and the old technology will be integrated with each other.

New test benchmark released, the most powerful open source Llama 3 is embarrassed

Apr 23, 2024 pm 12:13 PM

New test benchmark released, the most powerful open source Llama 3 is embarrassed

Apr 23, 2024 pm 12:13 PM

If the test questions are too simple, both top students and poor students can get 90 points, and the gap cannot be widened... With the release of stronger models such as Claude3, Llama3 and even GPT-5 later, the industry is in urgent need of a more difficult and differentiated model Benchmarks. LMSYS, the organization behind the large model arena, launched the next generation benchmark, Arena-Hard, which attracted widespread attention. There is also the latest reference for the strength of the two fine-tuned versions of Llama3 instructions. Compared with MTBench, which had similar scores before, the Arena-Hard discrimination increased from 22.6% to 87.4%, which is stronger and weaker at a glance. Arena-Hard is built using real-time human data from the arena and has a consistency rate of 89.1% with human preferences.

GPT Store can't even open its doors. How dare this domestic platform take this path? ?

Apr 19, 2024 pm 09:30 PM

GPT Store can't even open its doors. How dare this domestic platform take this path? ?

Apr 19, 2024 pm 09:30 PM

Pay attention, this man has connected more than 1,000 large models, allowing you to plug in and switch seamlessly. Recently, a visual AI workflow has been launched: giving you an intuitive drag-and-drop interface, you can drag, pull, and drag to arrange your own workflow on an infinite canvas. As the saying goes, war costs speed, and Qubit heard that within 48 hours of this AIWorkflow going online, users had already configured personal workflows with more than 100 nodes. Without further ado, what I want to talk about today is Dify, an LLMOps company, and its CEO Zhang Luyu. Zhang Luyu is also the founder of Dify. Before joining the business, he had 11 years of experience in the Internet industry. I am engaged in product design, understand project management, and have some unique insights into SaaS. Later he

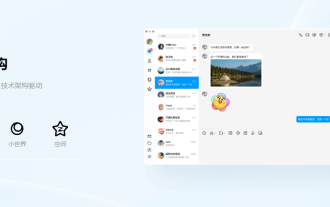

Tencent QQ NT architecture version memory optimization progress announced, chat scenes are controlled within 300M

Mar 05, 2024 pm 03:52 PM

Tencent QQ NT architecture version memory optimization progress announced, chat scenes are controlled within 300M

Mar 05, 2024 pm 03:52 PM

It is understood that Tencent QQ desktop client has undergone a series of drastic reforms. In response to user issues such as high memory usage, oversized installation packages, and slow startup, the QQ technical team has made special optimizations on memory and has made phased progress. Recently, the QQ technical team published an introductory article on the InfoQ platform, sharing its phased progress in special optimization of memory. According to reports, the memory challenges of the new version of QQ are mainly reflected in the following four aspects: Product form: It consists of a complex large panel (100+ modules of varying complexity) and a series of independent functional windows. There is a one-to-one correspondence between windows and rendering processes. The number of window processes greatly affects Electron’s memory usage. For that complex large panel, once there is no

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

Editor |ScienceAI Question Answering (QA) data set plays a vital role in promoting natural language processing (NLP) research. High-quality QA data sets can not only be used to fine-tune models, but also effectively evaluate the capabilities of large language models (LLM), especially the ability to understand and reason about scientific knowledge. Although there are currently many scientific QA data sets covering medicine, chemistry, biology and other fields, these data sets still have some shortcomings. First, the data form is relatively simple, most of which are multiple-choice questions. They are easy to evaluate, but limit the model's answer selection range and cannot fully test the model's ability to answer scientific questions. In contrast, open-ended Q&A