In addition to RAG, there are five ways to eliminate hallucinations in large models

Produced by 51CTO Technology Stack (WeChat ID: blog51cto)

It is well known that LLMs can hallucinate, that is, generate incorrect, misleading, or meaningless information.

Interestingly, some people, such as OpenAI CEO Sam Altman, view AI hallucinations as creativity, while others believe they may help lead to new scientific discoveries.

In most cases, however, it is crucial to provide the correct answer, and hallucinations are not a feature, but a flaw.

So how can LLM hallucinations be reduced? Long context? RAG? Fine-tuning?

In fact, long context LLMs are not foolproof, vector search RAG is not satisfactory, and fine-tuning comes with its own challenges and limitations.

The following are some advanced techniques that can be used to reduce LLM hallucinations.

1. Advanced prompts

There is indeed a lot of debate about whether better or more advanced prompts can solve the hallucination problem of large language models (LLMs).

Some people think that writing more detailed prompts will not help solve the hallucination problem, but others, such as Google Brain co-founder Andrew Ng, see potential in it. They point to methods that use deep learning to generate prompts automatically, drawing on large amounts of data and computing power to produce prompts relevant to the problem at hand and so make problem solving more efficient.

Ng believes that the reasoning capabilities of GPT-4 and other advanced models make them very good at interpreting complex prompts with detailed instructions.

“With many-shot learning, developers can give dozens, or even hundreds, of examples in a prompt, which is more effective than few-shot learning,” he writes.
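As a rough illustration of many-shot prompting, the sketch below assembles a prompt that places dozens of worked examples ahead of the real question. The function name `build_many_shot_prompt`, the "Q:/A:" layout, and the arithmetic examples are assumptions made for this illustration; they are not taken from Ng's article or from any particular model provider's API.

```python
def build_many_shot_prompt(instruction, examples, query):
    """Assemble a prompt that shows many worked examples before the real query.

    `examples` is a list of (question, answer) pairs. The layout is an
    illustrative assumption, not a format required by any model.
    """
    lines = [instruction.strip(), ""]
    for i, (question, answer) in enumerate(examples, start=1):
        lines.append(f"Example {i}")
        lines.append(f"Q: {question}")
        lines.append(f"A: {answer}")
        lines.append("")
    lines.append("Now answer the following question in the same style.")
    lines.append(f"Q: {query}")
    lines.append("A:")
    return "\n".join(lines)


# Fifty toy examples; a real prompt would use domain examples instead.
examples = [(f"What is {n} + {n}?", str(2 * n)) for n in range(1, 51)]
prompt = build_many_shot_prompt(
    "Answer with a single number. If you are unsure, reply 'unknown'.",
    examples,
    "What is 60 + 60?",
)
```

The resulting string can be sent to whichever model is in use; the only requirement is a context window large enough to hold all the examples.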

New developments for improving prompts keep emerging. For example, Anthropic released a new “Prompt Generator” tool that converts simple descriptions into advanced prompts optimized for large language models (LLMs). Through the Anthropic Console, you can generate prompts for production use.

Recently, Marc Andreessen also said that with the right prompts, we can unlock the potential super genius in AI models. "Prompting techniques in different areas could unlock this potential super-genius," he added.

2. Meta AI’s Chain-of-Verification (CoVe)

Meta AI’s Chain-of-Verification (CoVe) is another technique. This approach reduces hallucinations in large language models (LLMs) by breaking down fact-checking into manageable steps, improving response accuracy, and aligning with human-driven fact-checking processes.

CoVe involves generating an initial response, planning verification questions, answering those questions independently, and generating a final verified response. This approach significantly improves the accuracy of the model by systematically checking and correcting its own output.

It improves performance in a variety of tasks such as list-based questions, closed-book question answering, and long-form text generation by reducing hallucinations and increasing factual correctness.
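The four CoVe steps described above can be sketched as a small pipeline. This is a minimal sketch rather than Meta AI's implementation: `llm` is a hypothetical callable that takes a prompt string and returns the model's text, and the prompt wording is an assumption chosen for illustration.

```python
from typing import Callable


def chain_of_verification(question: str, llm: Callable[[str], str]) -> str:
    """Draft, plan checks, answer the checks independently, then revise."""
    # Step 1: generate an initial (possibly hallucinated) response.
    draft = llm(f"Answer the question.\nQuestion: {question}")

    # Step 2: plan verification questions that probe the draft's facts.
    plan = llm(
        "List verification questions, one per line, that would check "
        f"the facts in this answer.\nQuestion: {question}\nAnswer: {draft}"
    )
    checks = [line.strip() for line in plan.splitlines() if line.strip()]

    # Step 3: answer each check on its own. The draft is deliberately left
    # out of these prompts so its errors cannot leak into the answers.
    findings = [(q, llm(f"Answer concisely.\nQuestion: {q}")) for q in checks]

    # Step 4: produce the final response, revised against the findings.
    evidence = "\n".join(f"- {q} -> {a}" for q, a in findings)
    return llm(
        "Revise the draft so it agrees with the verified facts.\n"
        f"Question: {question}\nDraft: {draft}\nVerified facts:\n{evidence}"
    )
```

Keeping the model behind a plain callable means the same function works with any provider, and it can be exercised with a stub during testing.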

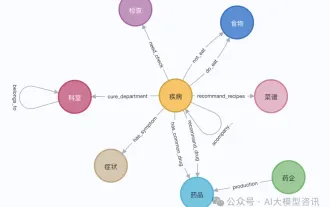

3. Knowledge Graph

RAG (Retrieval-Augmented Generation) is no longer limited to vector database matching. Many advanced RAG techniques have been introduced that significantly improve retrieval quality.

One example is integrating knowledge graphs (KGs) into RAG. By leveraging the structured, interconnected data in a knowledge graph, the reasoning capabilities of current RAG systems can be greatly enhanced.
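To make the idea concrete, here is a minimal sketch of graph-based retrieval feeding a prompt. The `TinyKnowledgeGraph` class, the triple format, and the prompt wording are all assumptions for illustration; a production system would more likely use a graph database and an entity linker.

```python
from collections import defaultdict


class TinyKnowledgeGraph:
    """In-memory store of (subject, relation, object) triples."""

    def __init__(self):
        self._edges = defaultdict(list)

    def add(self, subject, relation, obj):
        self._edges[subject].append((relation, obj))

    def neighborhood(self, entity, hops=2):
        """Collect the triples reachable from `entity` within `hops` steps."""
        triples, frontier, seen = [], [entity], {entity}
        for _ in range(hops):
            next_frontier = []
            for node in frontier:
                for relation, obj in self._edges.get(node, []):
                    triples.append((node, relation, obj))
                    if obj not in seen:
                        seen.add(obj)
                        next_frontier.append(obj)
            frontier = next_frontier
        return triples


def build_grounded_prompt(question, triples):
    """Put the retrieved triples in front of the question as plain facts."""
    facts = "\n".join(f"{s} {r} {o}." for s, r, o in triples)
    return f"Use only these facts:\n{facts}\n\nQuestion: {question}\nAnswer:"


kg = TinyKnowledgeGraph()
kg.add("Marie Curie", "was born in", "Warsaw")
kg.add("Warsaw", "is the capital of", "Poland")
triples = kg.neighborhood("Marie Curie", hops=2)
prompt = build_grounded_prompt("In which country was Marie Curie born?", triples)
```

The second hop is what lets the prompt carry a fact the question never mentions directly (Warsaw is in Poland), which is the kind of multi-step connection that plain vector matching can miss.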

4. Raptor

Another technique is Raptor, which handles questions that span multiple documents by creating a higher level of abstraction. It is particularly useful for answering queries that involve concepts from multiple documents.

Approaches like Raptor fit well with long-context large language models (LLMs) because you can embed entire documents directly, without chunking.

This method reduces the hallucination phenomenon by integrating an external retrieval mechanism with the transformer model. When a query is received, Raptor first retrieves relevant and verified information from external knowledge bases.

These retrieved data are then embedded into the context of the model along with the original query. By basing the model's responses on facts and relevant information, Raptor ensures that the content generated is both accurate and contextual.
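One way to picture the "higher level of abstraction" is a tree in which each level summarizes the level below, with retrieval searching all levels at once. The sketch below is a simplified illustration under stated assumptions, not the Raptor implementation: `summarize` is a hypothetical caller-supplied function (in practice an LLM call), neighbouring texts are grouped in order instead of being clustered, and word overlap stands in for embedding similarity.

```python
def build_summary_tree(chunks, summarize, group_size=2):
    """Return a list of levels: level 0 is the raw chunks, and each higher
    level holds summaries of groups of texts from the level below."""
    if group_size < 2:
        raise ValueError("group_size must be at least 2")
    levels = [list(chunks)]
    while len(levels[-1]) > 1:
        current = levels[-1]
        groups = [current[i:i + group_size]
                  for i in range(0, len(current), group_size)]
        levels.append([summarize(" ".join(group)) for group in groups])
    return levels


def retrieve(levels, query, top_k=2):
    """Rank nodes from every level by word overlap with the query."""
    query_words = set(query.lower().split())
    nodes = [text for level in levels for text in level]
    ranked = sorted(
        nodes,
        key=lambda text: len(query_words & set(text.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]
```

Because summaries sit alongside raw chunks in the candidate pool, a broad question can match a high-level summary while a narrow one still matches a specific chunk.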

5. Conformal Abstention

The paper "Mitigating LLM Hallucinations via Conformal Abstention" introduces a method that reduces hallucinations in large language models (LLMs) by applying conformal prediction to determine when the model should abstain from responding.

By using self-consistency to evaluate the similarity of sampled responses and leveraging conformal prediction for rigorous guarantees, this method ensures that the model responds only when it is confident in the accuracy of its answer.

This method effectively bounds the hallucination rate while maintaining a balanced abstention rate, which is especially beneficial for tasks that require long answers. It significantly improves the reliability of model output by avoiding erroneous or illogical responses.
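The general shape of abstention with a calibrated threshold can be sketched as follows. This is a simplified illustration and not the paper's procedure: the agreement score uses exact string matching where the paper evaluates response similarity, the `(errors + 1) / (n + 1)` bound is an assumed conservative estimate, and all function names are hypothetical.

```python
from collections import Counter


def agreement_score(samples):
    """Self-consistency: the share of sampled responses that agree with
    the most common one (after trivial normalization)."""
    normalized = [s.strip().lower() for s in samples]
    top_answer, count = Counter(normalized).most_common(1)[0]
    return top_answer, count / len(normalized)


def calibrate_threshold(calibration, alpha):
    """Pick the lowest score threshold whose conservatively estimated
    hallucination rate on the calibration set stays at or below `alpha`.

    `calibration` is a list of (score, is_correct) pairs from held-out
    questions with known answers.
    """
    n = len(calibration)
    for threshold in sorted({score for score, _ in calibration}):
        errors = sum(1 for score, ok in calibration
                     if score >= threshold and not ok)
        if (errors + 1) / (n + 1) <= alpha:
            return threshold
    return float("inf")  # no threshold is safe enough: always abstain


def answer_or_abstain(samples, threshold):
    """Return the consensus answer, or None to signal abstention."""
    answer, score = agreement_score(samples)
    return answer if score >= threshold else None
```

Raising `alpha` lowers the threshold and makes the model answer more often, so the trade-off between hallucination rate and abstention rate is controlled by a single number.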

6. RAG reduces hallucinations in structured output

Recently, ServiceNow used RAG to reduce hallucinations in structured output, improving the performance of large language models (LLMs) and achieving out-of-domain generalization while minimizing resource usage.

The technology involves a RAG system that retrieves relevant JSON objects from an external knowledge base before generating the text. This ensures that the generation process is based on accurate and relevant data.

By incorporating this pre-retrieval step, the model is less likely to produce false or fabricated information, thereby reducing the phenomenon of hallucinations. Furthermore, this approach allows the use of smaller models without sacrificing performance, making it both efficient and effective.
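The pre-retrieval step can be sketched as below. This is an illustrative sketch, not ServiceNow's system: the knowledge base entries, the `name` and `description` fields, the word-overlap ranking (a stand-in for a trained retriever), and the prompt wording are all assumptions.

```python
import json


def retrieve_json_objects(query, knowledge_base, top_k=2):
    """Rank JSON objects by how many query words appear in their
    description, and drop objects with no overlap at all."""
    query_words = set(query.lower().split())

    def score(obj):
        return len(query_words & set(obj["description"].lower().split()))

    ranked = sorted(knowledge_base, key=score, reverse=True)
    return [obj for obj in ranked[:top_k] if score(obj) > 0]


def build_structured_prompt(query, retrieved):
    """Constrain generation to the retrieved objects."""
    allowed = json.dumps(retrieved, indent=2)
    return (
        "Generate a JSON answer for the request below.\n"
        "Use only the objects listed here; do not invent names.\n"
        f"{allowed}\n\nRequest: {query}\nJSON:"
    )


# Hypothetical knowledge base of JSON objects.
knowledge_base = [
    {"name": "send_email", "description": "send an email to a user"},
    {"name": "create_ticket", "description": "create a support ticket"},
    {"name": "lookup_user", "description": "look up a user record"},
]
query = "create a ticket and email the user"
retrieved = retrieve_json_objects(query, knowledge_base)
prompt = build_structured_prompt(query, retrieved)
```

Since the model only sees names that actually exist in the knowledge base, it has less room to fabricate identifiers, which is also why a smaller model can be sufficient for the generation step.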

To learn more about AIGC, please visit:

https://www.51cto.com/aigc/

The above is the detailed content of In addition to RAG, there are five ways to eliminate the illusion of large models. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Big model app Tencent Yuanbao is online! Hunyuan is upgraded to create an all-round AI assistant that can be carried anywhere

Jun 09, 2024 pm 10:38 PM

Big model app Tencent Yuanbao is online! Hunyuan is upgraded to create an all-round AI assistant that can be carried anywhere

Jun 09, 2024 pm 10:38 PM

On May 30, Tencent announced a comprehensive upgrade of its Hunyuan model. The App "Tencent Yuanbao" based on the Hunyuan model was officially launched and can be downloaded from Apple and Android app stores. Compared with the Hunyuan applet version in the previous testing stage, Tencent Yuanbao provides core capabilities such as AI search, AI summary, and AI writing for work efficiency scenarios; for daily life scenarios, Yuanbao's gameplay is also richer and provides multiple features. AI application, and new gameplay methods such as creating personal agents are added. "Tencent does not strive to be the first to make large models." Liu Yuhong, vice president of Tencent Cloud and head of Tencent Hunyuan large model, said: "In the past year, we continued to promote the capabilities of Tencent Hunyuan large model. In the rich and massive Polish technology in business scenarios while gaining insights into users’ real needs

Bytedance Beanbao large model released, Volcano Engine full-stack AI service helps enterprises intelligently transform

Jun 05, 2024 pm 07:59 PM

Bytedance Beanbao large model released, Volcano Engine full-stack AI service helps enterprises intelligently transform

Jun 05, 2024 pm 07:59 PM

Tan Dai, President of Volcano Engine, said that companies that want to implement large models well face three key challenges: model effectiveness, inference costs, and implementation difficulty: they must have good basic large models as support to solve complex problems, and they must also have low-cost inference. Services allow large models to be widely used, and more tools, platforms and applications are needed to help companies implement scenarios. ——Tan Dai, President of Huoshan Engine 01. The large bean bag model makes its debut and is heavily used. Polishing the model effect is the most critical challenge for the implementation of AI. Tan Dai pointed out that only through extensive use can a good model be polished. Currently, the Doubao model processes 120 billion tokens of text and generates 30 million images every day. In order to help enterprises implement large-scale model scenarios, the beanbao large-scale model independently developed by ByteDance will be launched through the volcano

Context-augmented AI coding assistant using Rag and Sem-Rag

Jun 10, 2024 am 11:08 AM

Context-augmented AI coding assistant using Rag and Sem-Rag

Jun 10, 2024 am 11:08 AM

Improve developer productivity, efficiency, and accuracy by incorporating retrieval-enhanced generation and semantic memory into AI coding assistants. Translated from EnhancingAICodingAssistantswithContextUsingRAGandSEM-RAG, author JanakiramMSV. While basic AI programming assistants are naturally helpful, they often fail to provide the most relevant and correct code suggestions because they rely on a general understanding of the software language and the most common patterns of writing software. The code generated by these coding assistants is suitable for solving the problems they are responsible for solving, but often does not conform to the coding standards, conventions and styles of the individual teams. This often results in suggestions that need to be modified or refined in order for the code to be accepted into the application

GraphRAG enhanced for knowledge graph retrieval (implemented based on Neo4j code)

Jun 12, 2024 am 10:32 AM

GraphRAG enhanced for knowledge graph retrieval (implemented based on Neo4j code)

Jun 12, 2024 am 10:32 AM

Graph Retrieval Enhanced Generation (GraphRAG) is gradually becoming popular and has become a powerful complement to traditional vector search methods. This method takes advantage of the structural characteristics of graph databases to organize data in the form of nodes and relationships, thereby enhancing the depth and contextual relevance of retrieved information. Graphs have natural advantages in representing and storing diverse and interrelated information, and can easily capture complex relationships and properties between different data types. Vector databases are unable to handle this type of structured information, and they focus more on processing unstructured data represented by high-dimensional vectors. In RAG applications, combining structured graph data and unstructured text vector search allows us to enjoy the advantages of both at the same time, which is what this article will discuss. structure

Using Shengteng AI technology, the Qinling·Qinchuan transportation model helps Xi'an build a smart transportation innovation center

Oct 15, 2023 am 08:17 AM

Using Shengteng AI technology, the Qinling·Qinchuan transportation model helps Xi'an build a smart transportation innovation center

Oct 15, 2023 am 08:17 AM

"High complexity, high fragmentation, and cross-domain" have always been the primary pain points on the road to digital and intelligent upgrading of the transportation industry. Recently, the "Qinling·Qinchuan Traffic Model" with a parameter scale of 100 billion, jointly built by China Vision, Xi'an Yanta District Government, and Xi'an Future Artificial Intelligence Computing Center, is oriented to the field of smart transportation and provides services to Xi'an and its surrounding areas. The region will create a fulcrum for smart transportation innovation. The "Qinling·Qinchuan Traffic Model" combines Xi'an's massive local traffic ecological data in open scenarios, the original advanced algorithm self-developed by China Science Vision, and the powerful computing power of Shengteng AI of Xi'an Future Artificial Intelligence Computing Center to provide road network monitoring, Smart transportation scenarios such as emergency command, maintenance management, and public travel bring about digital and intelligent changes. Traffic management has different characteristics in different cities, and the traffic on different roads

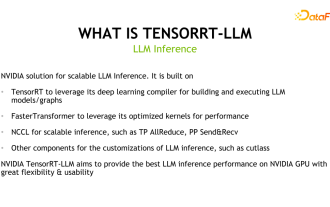

Uncovering the NVIDIA large model inference framework: TensorRT-LLM

Feb 01, 2024 pm 05:24 PM

Uncovering the NVIDIA large model inference framework: TensorRT-LLM

Feb 01, 2024 pm 05:24 PM

1. Product positioning of TensorRT-LLM TensorRT-LLM is a scalable inference solution developed by NVIDIA for large language models (LLM). It builds, compiles and executes calculation graphs based on the TensorRT deep learning compilation framework, and draws on the efficient Kernels implementation in FastTransformer. In addition, it utilizes NCCL for communication between devices. Developers can customize operators to meet specific needs based on technology development and demand differences, such as developing customized GEMM based on cutlass. TensorRT-LLM is NVIDIA's official inference solution, committed to providing high performance and continuously improving its practicality. TensorRT-LL

Benchmark GPT-4! China Mobile's Jiutian large model passed dual registration

Apr 04, 2024 am 09:31 AM

Benchmark GPT-4! China Mobile's Jiutian large model passed dual registration

Apr 04, 2024 am 09:31 AM

According to news on April 4, the Cyberspace Administration of China recently released a list of registered large models, and China Mobile’s “Jiutian Natural Language Interaction Large Model” was included in it, marking that China Mobile’s Jiutian AI large model can officially provide generative artificial intelligence services to the outside world. . China Mobile stated that this is the first large-scale model developed by a central enterprise to have passed both the national "Generative Artificial Intelligence Service Registration" and the "Domestic Deep Synthetic Service Algorithm Registration" dual registrations. According to reports, Jiutian’s natural language interaction large model has the characteristics of enhanced industry capabilities, security and credibility, and supports full-stack localization. It has formed various parameter versions such as 9 billion, 13.9 billion, 57 billion, and 100 billion, and can be flexibly deployed in Cloud, edge and end are different situations

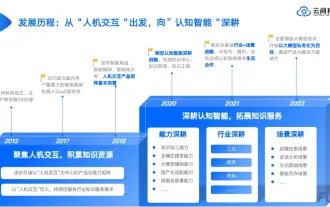

Advanced practice of industrial knowledge graph

Jun 13, 2024 am 11:59 AM

Advanced practice of industrial knowledge graph

Jun 13, 2024 am 11:59 AM

1. Background Introduction First, let’s introduce the development history of Yunwen Technology. Yunwen Technology Company...2023 is the period when large models are prevalent. Many companies believe that the importance of graphs has been greatly reduced after large models, and the preset information systems studied previously are no longer important. However, with the promotion of RAG and the prevalence of data governance, we have found that more efficient data governance and high-quality data are important prerequisites for improving the effectiveness of privatized large models. Therefore, more and more companies are beginning to pay attention to knowledge construction related content. This also promotes the construction and processing of knowledge to a higher level, where there are many techniques and methods that can be explored. It can be seen that the emergence of a new technology does not necessarily defeat all old technologies. It is also possible that the new technology and the old technology will be integrated with each other.