The AIxiv column is where this site publishes academic and technical content. Over the past few years, this site's AIxiv column has received more than 2,000 reports covering top laboratories at major universities and companies around the world, effectively promoting academic exchange and dissemination. If you have excellent work you would like to share, feel free to submit it or contact us for coverage. Submission email: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com

In today's digital era, 3D assets play an important role in building the metaverse, realizing digital twins, and powering virtual reality and augmented reality applications, driving technological innovation and better user experiences.

Existing 3D asset generation methods typically use generative models based on the spatially varying bidirectional reflectance distribution function (SVBRDF) to infer the material properties at surface locations under predefined lighting conditions. However, these methods rarely exploit the strong, rich prior knowledge people have about the surface materials of common objects around them (for example, a car tire consists of a metal rim wrapped in rubber tread on its outer edge), and they ignore that an object's material should be decoupled from its own RGB color.

How to effectively integrate humans' prior knowledge of object surface materials into the material generation process, and thereby improve the overall quality of existing 3D assets, has therefore become an important research topic.

To address this problem, research teams from Beijing and Hong Kong, including the Institute of Automation of the Chinese Academy of Sciences, Beijing University of Posts and Telecommunications, and the Hong Kong Polytechnic University, recently released the paper "MaterialSeg3D: Segmenting Dense Materials from 2D Priors for 3D Assets". The paper constructs MIO, the first 2D material segmentation dataset for objects from multiple categories with complex materials, which provides pixel-level material labels for single objects across multiple semantic categories and camera angles. It also proposes MaterialSeg3D, a material generation scheme that leverages 2D semantic priors to infer the surface materials of 3D assets in UV space.

Paper: https://arxiv.org/pdf/2404.13923

Code address: https://github.com/PROPHETE-pro/MaterialSeg3D_

The work focuses on how to bring material priors from 2D images into the task of defining material information for 3D assets.

## MIO Dataset

The authors first tried to extract material-classification priors from existing 3D asset datasets. However, these datasets contain too few samples and have a homogeneous style, making it difficult for a segmentation model to learn correct priors from them.

Compared with 3D assets, 2D images are far more widely available from public websites and datasets. However, existing annotated 2D image datasets differ substantially in distribution from 3D asset renderings, so they cannot directly supply sufficient material priors.

The authors therefore built a customized dataset, MIO (Materialized Individual Objects). It is currently the largest 2D material segmentation dataset of single, multi-category assets with complex materials; its images are sampled from a variety of camera angles and accurately annotated by a professional team.

Visual example of material class annotation and PBR material sphere mapping.

When constructing this data set, this article follows the following rules:

Each sampled image contains only one prominent foreground object

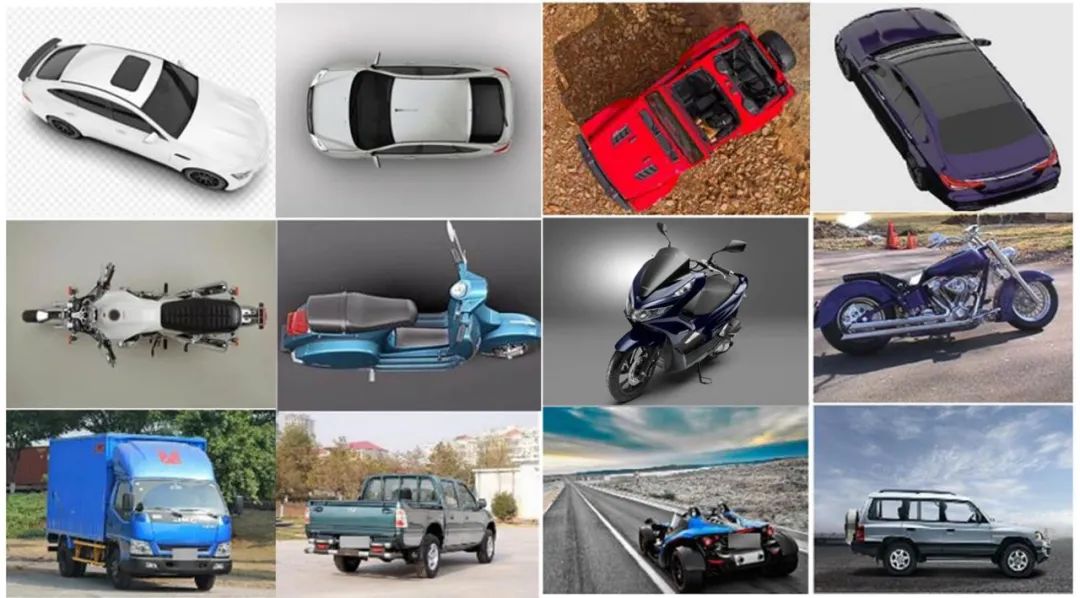

Collect a similar number of real scene 2D pictures and 3D asset renderings

Collect image samples from various camera angles, including top and bottom views, etc. Special perspective

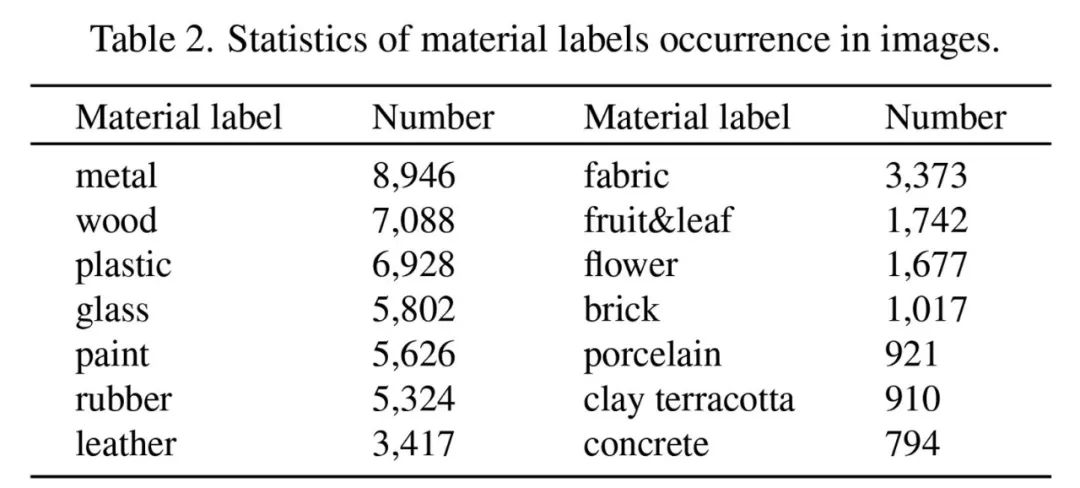

What makes MIO unique is that it provides not only pixel-level labels for each material category but also a one-to-one mapping between each material category and a PBR material value. These mappings were settled after discussion among nine professional 3D modelers. The authors collected more than 1,000 real PBR material balls from public material libraries as candidates and, drawing on the modelers' expertise, screened and assigned them. The final label space of the dataset consists of 14 material categories together with their mappings to PBR materials.
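A one-to-one mapping from material categories to PBR values is essentially a lookup table. The minimal Python sketch below illustrates that idea and uses it to turn a per-pixel label map into metallic and roughness maps. The article does not list MIO's 14 categories or their PBR values, so the category names, ids, and numbers, as well as the names `PBRMaterial`, `LABEL_SPACE`, and `labels_to_pbr_maps`, are hypothetical. Base color is deliberately left out, in keeping with the point that material should be decoupled from an object's RGB color.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PBRMaterial:
    """Simplified metallic-roughness PBR parameters, each in [0, 1]."""
    metallic: float
    roughness: float


# Hypothetical subset of a label space: label id -> (category name, PBR values).
# The real MIO categories and values are not given in this article.
LABEL_SPACE = {
    0: ("metal", PBRMaterial(metallic=1.0, roughness=0.3)),
    1: ("rubber", PBRMaterial(metallic=0.0, roughness=0.9)),
    2: ("wood", PBRMaterial(metallic=0.0, roughness=0.7)),
    3: ("glass", PBRMaterial(metallic=0.0, roughness=0.05)),
}


def labels_to_pbr_maps(label_map):
    """Convert a 2D grid of material label ids into metallic and roughness maps."""
    metallic = [[LABEL_SPACE[label][1].metallic for label in row] for row in label_map]
    roughness = [[LABEL_SPACE[label][1].roughness for label in row] for row in label_map]
    return metallic, roughness


# Usage: a toy 3x3 "tire" patch, rubber (1) surrounding a metal (0) centre.
tire = [[1, 1, 1], [1, 0, 1], [1, 1, 1]]
metallic_map, roughness_map = labels_to_pbr_maps(tire)
print(metallic_map[1][1], roughness_map[0][0])  # 1.0 0.9
```

Keeping the PBR values in a single table means a category's appearance can be retuned in one place without relabeling any images.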

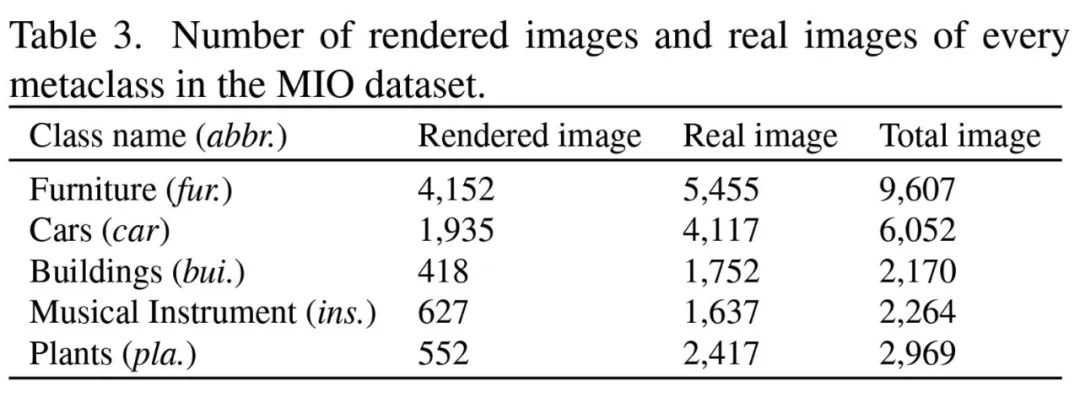

The MIO dataset contains a total of 23,062 multi-view images of single complex objects, divided into 5 meta-categories (furniture, cars, buildings, musical instruments, and plants) and further into 20 specific categories. Notably, it includes approximately 4,000 top-view images, a perspective that rarely appears in existing 2D datasets.

## MaterialSeg3D

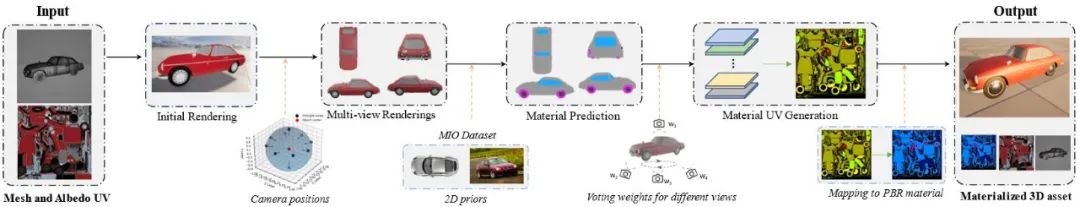

With the MIO dataset as a reliable source of material priors, the paper proposes MaterialSeg3D, a new paradigm for predicting the surface materials of 3D assets. It generates plausible PBR materials for a given asset's surface, so that the asset can realistically reproduce physical effects such as lighting, shadows, and reflections. This lets 3D objects look highly realistic and consistent across environments, and offers an effective solution to the missing material information in existing 3D assets.

The MaterialSeg3D pipeline consists of three stages: multi-view rendering of the 3D asset, multi-view material prediction, and 3D material UV generation. In the rendering stage, camera poses are set for a top view, a side view, and 12 surrounding angles, together with random pitch angles, to produce 2D renderings. In the prediction stage, a material segmentation model trained on MIO predicts pixel-level material labels for each rendering. In the UV generation stage, the predictions are mapped onto temporary UV maps, and a weighted voting mechanism produces the final material-label UV map, which is then converted into PBR material maps.
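The rendering and UV-voting stages can be sketched roughly as follows. This is an illustrative Python sketch under stated assumptions, not the authors' implementation. `camera_positions` places one top view plus 12 evenly spaced surrounding views, each with a random pitch, on a sphere; the radius and pitch range are hypothetical, and the separate side view is folded into the surrounding ring for brevity. `vote_uv_labels` fuses per-view predictions by summing a per-texel weight for each label and keeping the label with the largest total. The article does not say how the voting weights are computed, so the weight here is a placeholder (for example, a confidence score or the cosine between the view direction and the surface normal).

```python
import math
import random
from collections import defaultdict


def camera_positions(radius=2.0, n_surround=12, pitch_range=(-15.0, 30.0), seed=0):
    """Return (azimuth_deg, elevation_deg, (x, y, z)) for a top view plus
    n_surround views evenly spaced in azimuth, each with a random pitch.
    Radius and pitch range are illustrative assumptions (z-up convention)."""
    rng = random.Random(seed)
    angles = [(0.0, 90.0)]  # top view: camera straight above the object
    for i in range(n_surround):
        azimuth = i * 360.0 / n_surround
        angles.append((azimuth, rng.uniform(*pitch_range)))
    poses = []
    for az, el in angles:
        a, e = math.radians(az), math.radians(el)
        xyz = (radius * math.cos(e) * math.cos(a),
               radius * math.cos(e) * math.sin(a),
               radius * math.sin(e))
        poses.append((az, el, xyz))
    return poses


def vote_uv_labels(view_predictions):
    """Fuse per-view label predictions into one label per UV texel.

    view_predictions: list of dicts, one per rendered view, mapping a texel
    (u, v) -> (label_id, weight). The weight is a hypothetical placeholder.
    """
    scores = defaultdict(lambda: defaultdict(float))
    for view in view_predictions:
        for texel, (label, weight) in view.items():
            scores[texel][label] += weight
    # Keep the label with the largest accumulated weight for each texel.
    return {texel: max(votes, key=votes.get) for texel, votes in scores.items()}


# Usage
print(len(camera_positions()))  # 13
views = [
    {(0, 0): (1, 0.9), (0, 1): (0, 0.2)},  # view A
    {(0, 0): (1, 0.5), (0, 1): (2, 0.8)},  # view B
    {(0, 1): (0, 0.3)},                    # view C
]
print(vote_uv_labels(views))  # {(0, 0): 1, (0, 1): 2}
```

Summing weights rather than counting raw votes lets one confident, head-on view outweigh several grazing-angle ones (texel `(0, 1)` above goes to label 2 even though label 0 appears in two views). Whether MaterialSeg3D weights views this way is not stated in the article.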

## Visual Results and Experiments

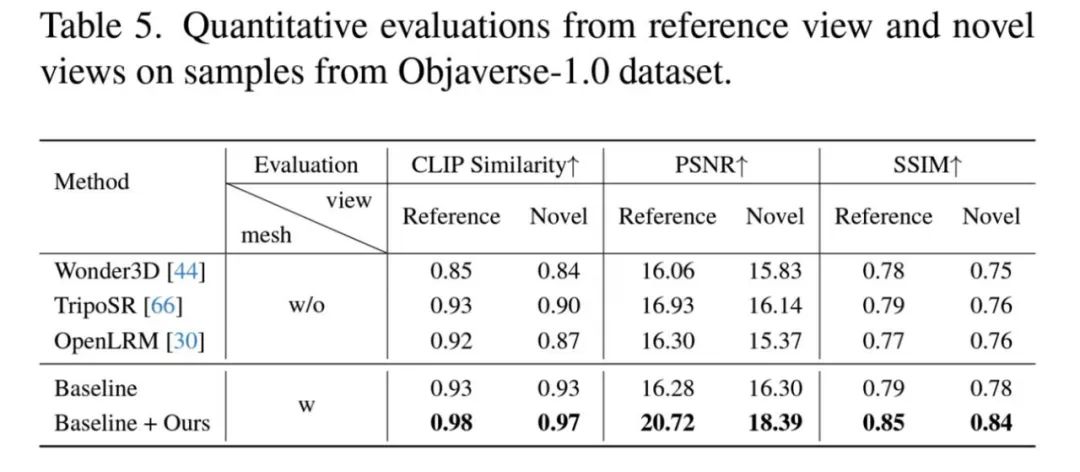

To evaluate MaterialSeg3D, the paper follows recent work with quantitative and qualitative analyses on three fronts: single-image-to-3D generation methods, texture generation methods, and public 3D assets. For single-image-to-3D generation, it compares against Wonder3D, TripoSR, and OpenLRM, which take one reference view of an asset as input and directly generate a textured 3D object. Visual comparisons show that assets processed by MaterialSeg3D render with markedly greater realism than the results of these earlier methods. The paper also compares against existing texture generation methods, such as Fantasia3D, Text2Tex, and the online service on the Meshy website, which generate textures from text prompts.

Building on these outputs, MaterialSeg3D can generate accurate PBR material information, making renderings under different lighting conditions more realistic.

The quantitative experiments use CLIP similarity, PSNR, and SSIM as metrics, take assets from the Objaverse-1.0 dataset as test samples, and use three randomly selected camera angles as novel views.
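Of the three metrics, PSNR is the simplest to write down: PSNR = 10 · log10(MAX² / MSE), where MSE is the mean squared pixel error between a rendered view and its reference and MAX is the largest possible pixel value. The minimal sketch below computes it in pure Python over flat lists of pixel values in [0, 1]; an actual evaluation would typically run on full image arrays with an array library. SSIM (built from windowed luminance, contrast, and structure statistics) and CLIP similarity (cosine similarity between embeddings from a pretrained CLIP model) need more machinery and are not shown.

```python
import math


def psnr(reference, rendered, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized images,
    given as flat sequences of pixel values in [0, max_value]."""
    if len(reference) != len(rendered):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(reference, rendered)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Usage: one of four pixels is off by 0.1, so MSE = 0.0025.
print(round(psnr([0.0, 0.5, 1.0, 0.5], [0.0, 0.5, 1.0, 0.6]), 2))  # 26.02
```

Higher PSNR means the novel view is closer to the reference pixel by pixel, which is why it is usually reported alongside perceptual or semantic measures such as SSIM and CLIP similarity.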

These experiments demonstrate the effectiveness of MaterialSeg3D: it can generate the PBR material information missing from public 3D assets, giving modelers and follow-up research more high-quality assets to work with.

## Summary and Outlook

The paper explores surface material generation for 3D assets and builds MIO, a customized 2D material segmentation dataset. With the support of this reliable dataset, it proposes MaterialSeg3D, a new paradigm for 3D asset surface material generation that can produce decoupled, independent PBR material information for a single 3D asset, making renderings of existing 3D assets under different lighting conditions significantly more realistic and plausible.

The authors note that future work will focus on adding more object meta-categories to the dataset, enlarging it with generated pseudo-labels, and self-training the material segmentation model, so that this generation paradigm can be applied directly to the vast majority of 3D assets.
