In February of this year, Google launched the multimodal large model Gemini 1.5, which greatly improved performance and speed through engineering and infrastructure optimization, an MoE architecture and other strategies. The result was longer context, stronger reasoning capabilities, and better handling of cross-modal content.

This Friday, Google DeepMind officially released the technical report of Gemini 1.5, which covers the Flash version and other recent upgrades. The document is 153 pages long.

Technical report link: https://storage.googleapis.com/deepmind-media/gemini/gemini_v1_5_report.pdf

In this report, Google introduces the Gemini 1.5 series of models. They represent the next generation of highly compute-efficient multimodal large models, capable of recalling fine-grained information and reasoning over contexts of millions of tokens, including multiple long documents and hours of video. The Gemini 1.5 models combine language and visual reasoning capabilities, which makes them broadly applicable to natural language processing and computer vision tasks. They can extract key information from text and reason over it, analyze several long documents together, and process large volumes of visual data, including hours of video.

The series includes two new models: an updated Gemini 1.5 Pro and the lighter-weight Gemini 1.5 Flash.

The Flash version was announced at this week's Google I/O conference. According to the report, Gemini 1.5 Flash is a Transformer decoder model with the same 2M+ context and multimodal capabilities as Gemini 1.5 Pro, designed to use tensor processing units (TPUs) efficiently and to serve with lower latency. For example, Gemini 1.5 Flash computes the attention and feed-forward components in parallel, and it is online distilled from the much larger Gemini 1.5 Pro model. It is trained with higher-order preconditioned methods to improve quality.
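To make the "attention and feed-forward in parallel" idea concrete, the two layouts can be contrasted in equations. The article does not give the exact formulation used in Gemini 1.5 Flash, so what follows is the commonly used parallel-block form from earlier Transformer work, stated here as an assumption. Here $x$ is the block input, $\mathrm{LN}$ is layer normalization, $\mathrm{Attn}$ is self-attention and $\mathrm{FFN}$ is the feed-forward network.

$$
\begin{aligned}
\text{Sequential:}\quad & h = x + \mathrm{Attn}(\mathrm{LN}(x)), \qquad y = h + \mathrm{FFN}(\mathrm{LN}(h)) \\
\text{Parallel:}\quad & y = x + \mathrm{Attn}(\mathrm{LN}(x)) + \mathrm{FFN}(\mathrm{LN}(x))
\end{aligned}
$$

In the parallel form both sublayers read the same normalized input, so neither has to wait for the other and their computations can be scheduled together on the accelerator.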

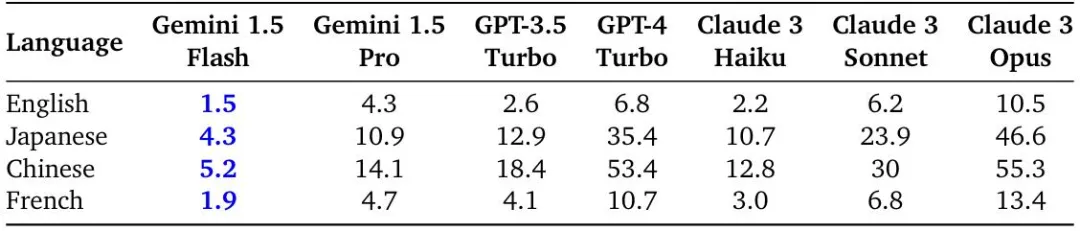

The report measures the average time per output character for English, Chinese, Japanese, and French queries sent to the Gemini 1.5 models through the Vertex AI streaming API.

Time per output character, in milliseconds, for English, Chinese, Japanese, and French responses given a 10,000-character input. Gemini 1.5 Flash achieved the fastest generation speed in all languages tested.
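As a rough illustration of the metric, the sketch below computes mean milliseconds per output character from any stream of text chunks. It is not the report's measurement harness: `fake_stream` is a hypothetical stand-in for a streaming API client, and the choice to include the wait for the first chunk in the elapsed time is an assumption.

```python
import time
from typing import Callable, Iterable, Iterator


def time_per_output_char_ms(
    chunks: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> float:
    """Consume a stream of text chunks and return mean milliseconds per character.

    The clock starts before the first chunk is requested, so the wait for the
    first chunk is included (an assumption of this sketch).
    """
    start = clock()
    total_chars = 0
    for chunk in chunks:
        total_chars += len(chunk)
    elapsed_s = clock() - start
    if total_chars == 0:
        raise ValueError("stream produced no characters")
    return elapsed_s * 1000.0 / total_chars


def fake_stream(text: str, chunk_size: int = 4, delay_s: float = 0.001) -> Iterator[str]:
    """Hypothetical stand-in for a streaming API: yields fixed-size chunks with a delay."""
    for i in range(0, len(text), chunk_size):
        time.sleep(delay_s)
        yield text[i:i + chunk_size]


if __name__ == "__main__":
    ms = time_per_output_char_ms(fake_stream("hello, streaming world"))
    print(f"{ms:.3f} ms per output character")
```

Normalizing by characters rather than tokens makes the number comparable across languages and across models with different tokenizers, which is presumably why the comparison is reported this way.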

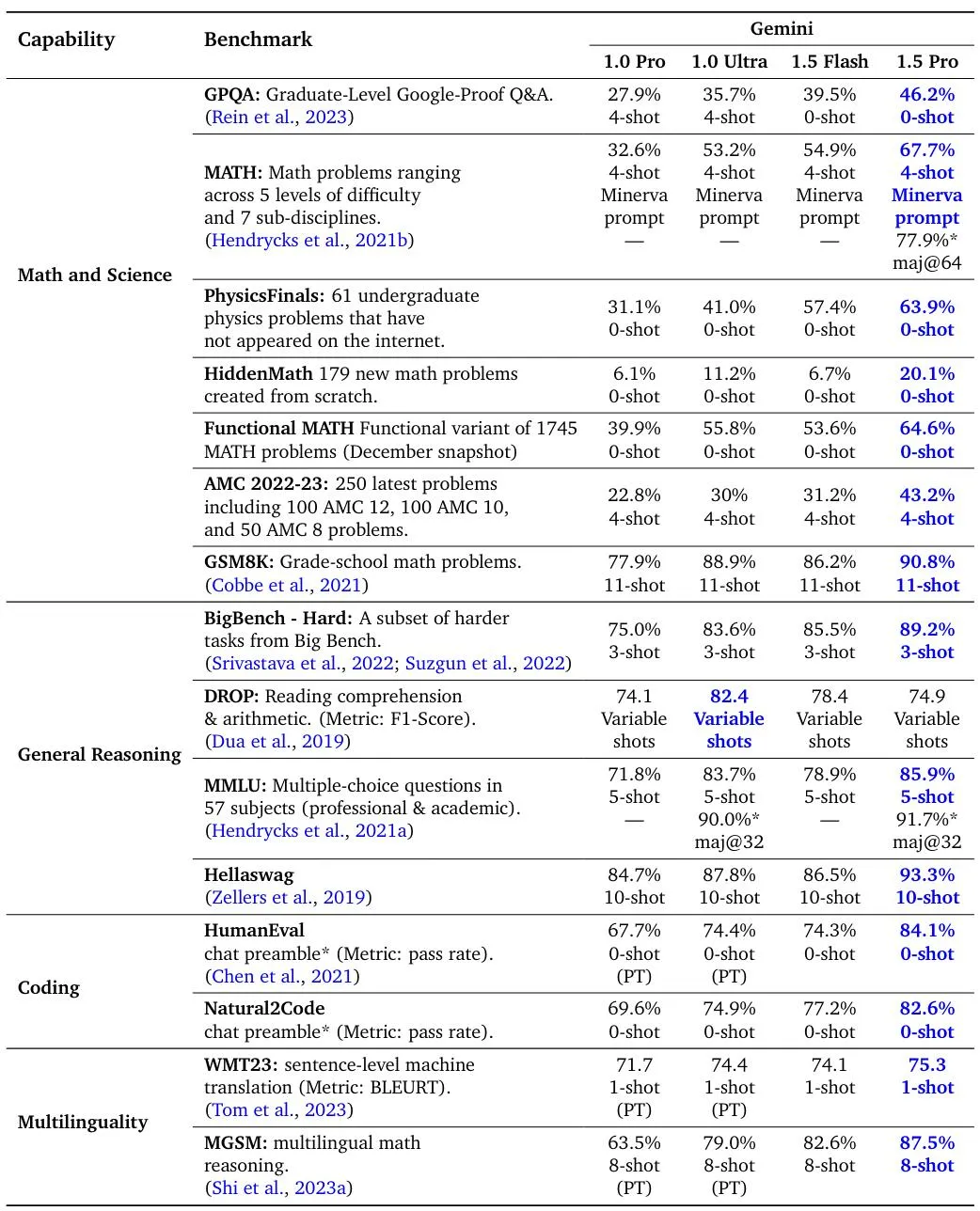

Evaluation results of Gemini 1.5 Pro, 1.5 Flash, and Gemini 1.0 models on standard coding, multilingual, and math, science, and reasoning benchmarks. All numbers for 1.5 Pro and 1.5 Flash are obtained after instruction tuning.

Gemini 1.5 Pro compared to Gemini 1.0 Pro and Ultra on video understanding benchmarks.

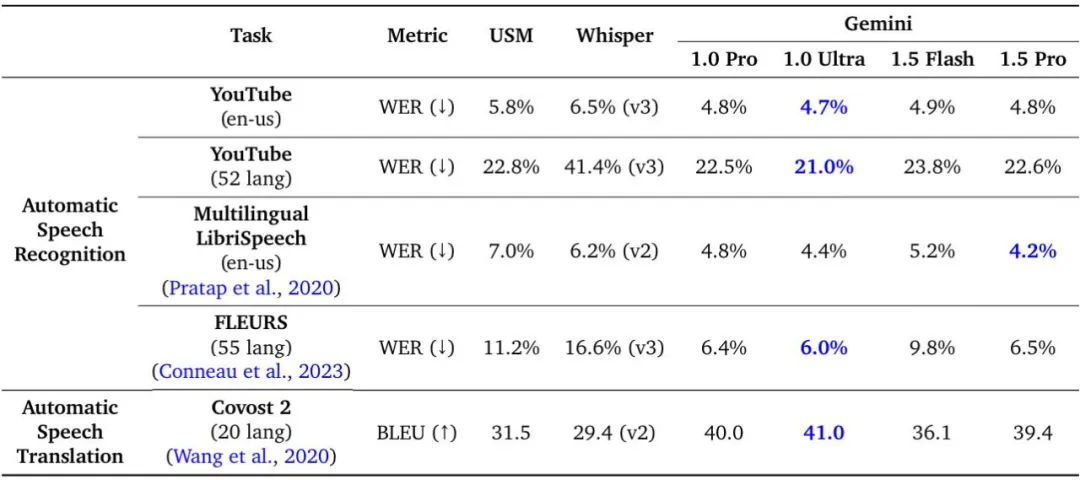

Comparison of Gemini 1.5 Pro with USM, Whisper, Gemini 1.0 Pro, and Gemini 1.0 Ultra on audio understanding tasks.

The Gemini 1.5 models achieve near-perfect recall on long-context retrieval tasks across modalities, advance the state of the art in long-document QA, long-video QA and other long-context tasks, and perform strongly across a wide range of benchmarks. Google also stated that, as of May this year, the performance of Gemini 1.5 has improved significantly compared with February.

Gemini 1.5 Pro (May) versus the initial release (February) on multiple benchmarks. The latest Gemini 1.5 Pro delivers improvements across all reasoning, coding, vision and video benchmarks, while audio and translation performance remains unchanged. Note that for FLEURS, lower scores are better.

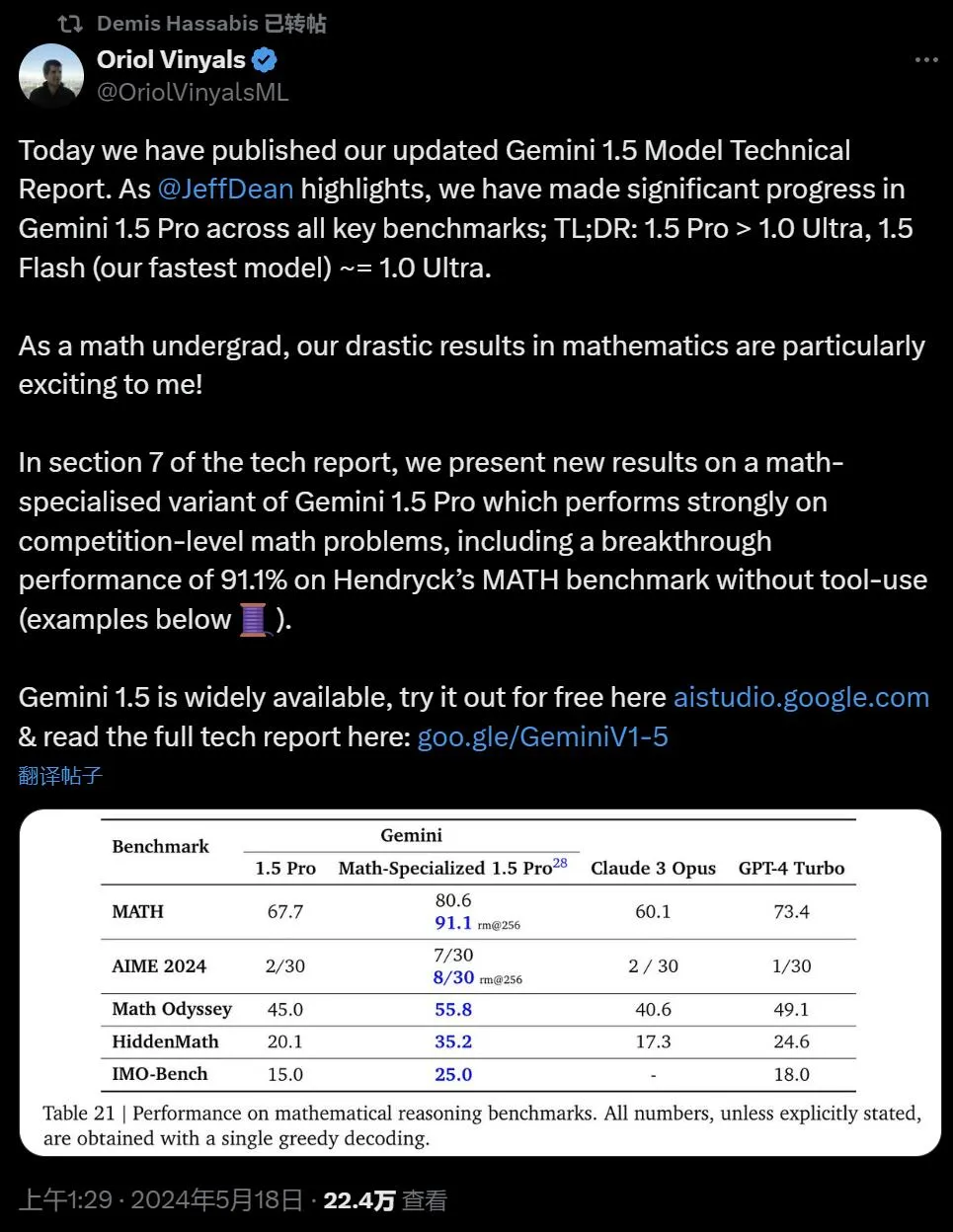
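The note that lower FLEURS scores are better makes sense if the score is an error rate. Assuming it is a word error rate (WER) for speech recognition, which the article itself does not state, a minimal sketch of the metric looks like this. The whitespace tokenization is a simplification and would not suit languages written without spaces.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance between hypothesis and reference, divided by reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(
                min(
                    prev[j] + 1,         # reference word missing from hypothesis (deletion)
                    curr[j - 1] + 1,     # extra word in hypothesis (insertion)
                    prev[j - 1] + cost,  # match or substitution
                )
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    print(word_error_rate("the cat sat on mat", "the cat on the mat"))
```

A perfect transcript scores 0, and every wrong, missing, or extra word pushes the score up, so a drop in the score is an improvement.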

Oriol Vinyals, vice president of Google DeepMind and co-lead of the Gemini project, summed up the results as Gemini 1.5 Pro > 1.0 Ultra, and 1.5 Flash (currently the fastest model) ~= 1.0 Ultra.

Studying the limits of Gemini 1.5's long-context capabilities, the report finds that next-token prediction continues to improve and retrieval stays near-perfect (>99%) up to at least 10M tokens, a generational leap over existing models such as Claude 3.0 (200k) and GPT-4 Turbo (128k).
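Retrieval figures like this usually come from "needle in a haystack" style tests: a short fact is hidden at varying depths in a long filler context and the model is asked to recall it. The sketch below shows the general shape of such a test, not the report's actual harness. `toy_model`, the filler sentence, and the needle wording are all hypothetical; a real evaluation would call a model API in place of `toy_model`.

```python
import re
from typing import Callable, List


def build_haystack(filler: str, needle: str, n_filler: int, depth: float) -> str:
    """Place `needle` among `n_filler` copies of `filler` at a relative depth in [0, 1]."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    sentences = [filler] * n_filler
    sentences.insert(round(depth * n_filler), needle)
    return " ".join(sentences)


def retrieval_recall(
    answer_fn: Callable[[str, str], str],
    secret: str,
    context_sizes: List[int],
    depths: List[float],
) -> float:
    """Fraction of (context size, depth) trials in which the answer contains the secret."""
    if not context_sizes or not depths:
        raise ValueError("need at least one context size and one depth")
    filler = "The grass is green and the sky is blue."
    needle = f"The secret number is {secret}."
    question = "What is the secret number?"
    hits = 0
    trials = 0
    for n_filler in context_sizes:
        for depth in depths:
            context = build_haystack(filler, needle, n_filler, depth)
            if secret in answer_fn(context, question):
                hits += 1
            trials += 1
    return hits / trials


def toy_model(context: str, question: str) -> str:
    """Hypothetical stand-in for a model call: finds the needle with a regex."""
    match = re.search(r"secret number is (\d+)", context)
    return match.group(1) if match else ""


if __name__ == "__main__":
    print(retrieval_recall(toy_model, "7481", [10, 100, 1000], [0.0, 0.25, 0.5, 0.75, 1.0]))
```

Sweeping both context size and needle depth matters because a model may find facts near the start or end of its context but miss those buried in the middle.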

In Section 7 of the report, Google presents benchmark results for a math-specialized version of Gemini 1.5 Pro, which performs well on competition-level math problems without using any tools. It achieves a breakthrough score of 91.1% on the Hendrycks MATH benchmark.

The report also includes examples of the model solving Asia Pacific Mathematical Olympiad (APMO) problems that previous models could not solve. Oriol Vinyals called one such answer great because it is a proof (rather than a calculation), the solution is to the point, and it is "beautiful."

Finally, Google highlighted real-world use cases for large models: when Gemini 1.5 works with professionals to complete their tasks, it achieves time savings of 26-75% across 10 different job categories.

This cutting-edge large language model also demonstrates some surprising new capabilities. When given a grammar manual for Kalamang, a language spoken by fewer than 200 people in western New Guinea, the model learned to translate English into Kalamang at a level similar to a human learning from the same material.
