How to Use Nightshade to Protect Your Artwork From Generative AI

AI tools are revolutionary and can now hold conversations, generate human-like text, and create images based on a single word. However, the training data these AI tools use often comes from copyrighted sources, especially in the case of text-to-image generators like DALL-E and Midjourney.

Stopping generative AI tools from training on copyrighted images is difficult, and artists from all walks of life have struggled to keep their work out of AI training datasets. But now, that's all changing with the advent of Nightshade, a free AI tool built to poison the training data of generative AI tools and finally let artists take some power back.

What Is AI Poisoning?

AI poisoning is the act of "poisoning" the training dataset of an AI algorithm. This is similar to providing wrong information to the AI on purpose, resulting in the trained AI malfunctioning or failing to detect an image. Tools like Nightshade alter the pixels in a digital image in such a manner that it appears to be completely different to the AI training on it, but largely unchanged from the original to the human eye.

For example, if you upload a poisoned image of a car to the internet, it will look the same to us humans, but an AI attempting to train itself to identify cars by looking at images of cars on the internet will see something completely different.
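To make the idea of "small pixel changes" concrete, here is a toy sketch in Python. It is not Nightshade's actual algorithm: Nightshade computes its changes so that they mislead a model, whereas this example uses random noise as a stand-in. The function names `perturb` and `max_abs_diff`, the sample grayscale values, and the limit `epsilon` are all assumptions made for illustration. The only point it shows is that every pixel can be altered while no pixel moves by more than a few brightness levels out of 255.

```python
import random

def perturb(image, epsilon, seed=0):
    """Return a copy of `image` with each pixel shifted by at most `epsilon`.

    `image` is a list of rows of grayscale values (0-255). Random noise is
    used here purely as a stand-in for a computed poisoning perturbation.
    """
    rng = random.Random(seed)
    out = []
    for row in image:
        new_row = []
        for px in row:
            delta = rng.randint(-epsilon, epsilon)
            # Clamp to the valid 0-255 range; clamping can only shrink the change.
            new_row.append(min(255, max(0, px + delta)))
        out.append(new_row)
    return out

def max_abs_diff(a, b):
    """Largest per-pixel difference between two images of equal size."""
    return max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))

# Hypothetical 3x4 grayscale patch.
original = [[120, 121, 119, 200],
            [118, 122, 120, 201],
            [121, 119, 123, 199]]

poisoned = perturb(original, epsilon=4)
print(max_abs_diff(original, poisoned) <= 4)
```

A change of a few levels per pixel is hard for a person to notice, which is why a poisoned image can look largely unchanged while still carrying altered data.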

A large enough sample size of these fake or poisoned images in an AI's training data can damage its ability to generate accurate images from a given prompt as the AI's understanding of the object is compromised.
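The effect of sample size can be illustrated with a deliberately simplified model. The sketch below assumes a "model" that learns a concept as the average of a single made-up feature value across every training sample carrying that label. Real image generators do not work this way, and the values `CAR` and `CAT`, along with the functions `learned_concept` and `poisoned_set`, are hypothetical. It only shows the trend: the more poisoned samples are labeled "car" while looking like something else, the further the learned idea of "car" drifts.

```python
def learned_concept(samples, label):
    """Mean feature value of all training samples carrying `label`."""
    values = [feature for feature, lbl in samples if lbl == label]
    return sum(values) / len(values)

# Made-up one-dimensional features: what a "car" and a "cat" look like to the model.
CAR, CAT = 1.0, 5.0

# 100 honest training samples of cars.
clean = [(CAR, "car")] * 100

def poisoned_set(n_poison):
    """Clean data plus `n_poison` samples labeled "car" that look like a cat."""
    return clean + [(CAT, "car")] * n_poison

for n in (0, 10, 100, 400):
    print(n, round(learned_concept(poisoned_set(n), "car"), 2))
# 0 1.0
# 10 1.36
# 100 3.0
# 400 4.2
```

With only 10 poisoned samples the learned value barely moves, but once poisoned samples outnumber the clean ones, the model's notion of "car" sits much closer to "cat" than to the real thing.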

Poisoned training data can even damage future iterations of the model, as the data upon which the model's foundation is built is no longer fully correct. There are still open questions about what the future holds for generative AI, but protecting original digital work is a definite priority.

Using this technique, digital creators who do not consent to their images being used in AI datasets can protect them from being fed to generative AI without permission. Some platforms give creators the option to opt out of having their artwork included in AI training datasets. However, such opt-out lists have been disregarded by AI model trainers in the past and continue to be disregarded with little to no consequence.

Compared to other digital artwork protection tools like Glaze, Nightshade is an offensive tool. Glaze prevents AI algorithms from mimicking the style of a particular image, while Nightshade changes the image's appearance to the AI. Both tools were built by Ben Zhao, Professor of Computer Science at the University of Chicago.

How to Use Nightshade

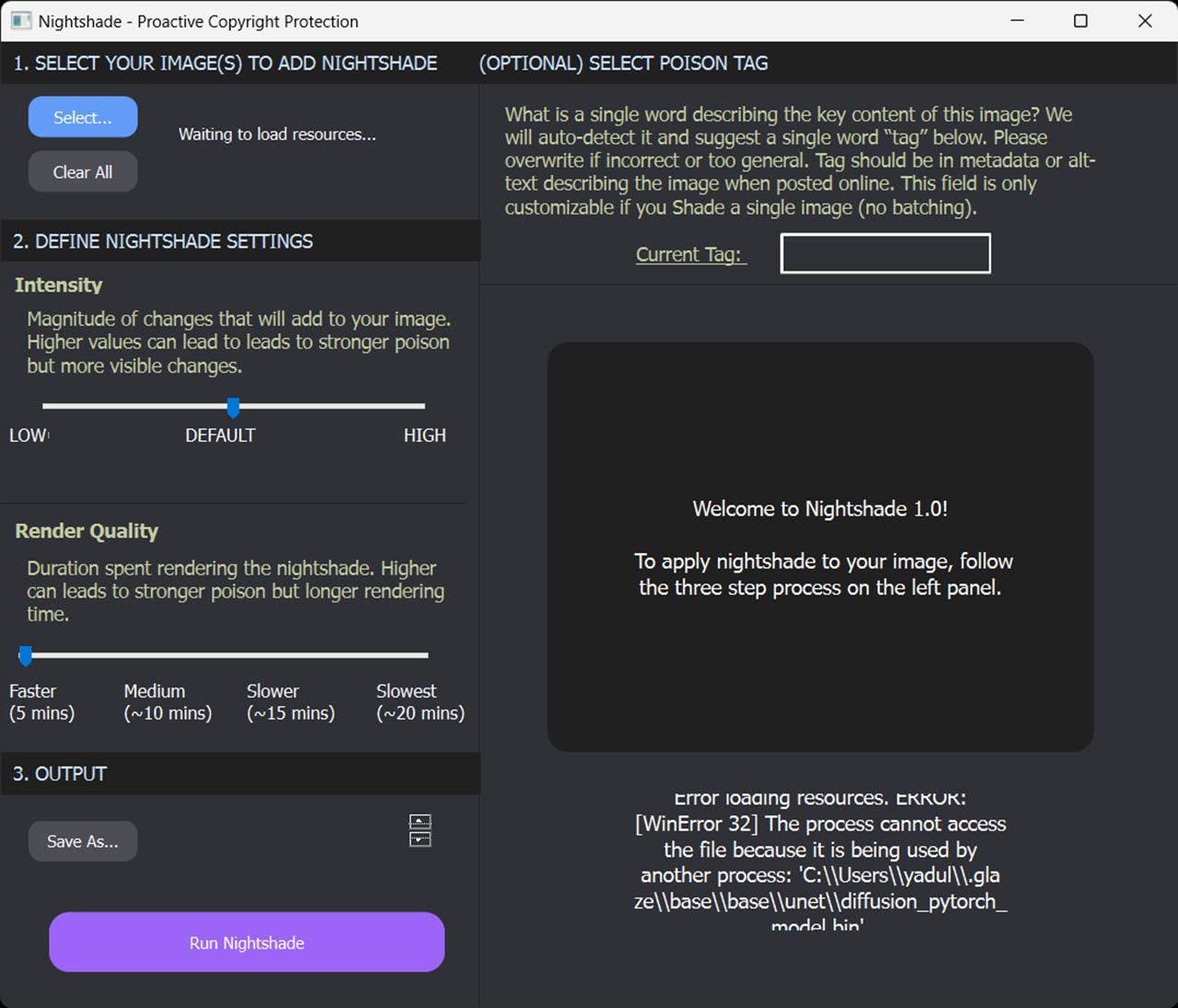

While the creator of the tool recommends Nightshade be used alongside Glaze, it can also be used as a standalone tool to protect your artwork. Using the tool is also fairly easy, considering there are only three steps to protecting your images with Nightshade.

However, there are a few things you need to keep in mind before getting started.

- Nightshade is only available for Windows and macOS, with limited GPU support and a minimum of 4GB of VRAM required. Non-Nvidia GPUs and Intel Macs aren't supported at the moment. The Nightshade team points to a list of supported Nvidia GPUs (the GTX and RTX GPUs are found in the "CUDA-Enabled GeForce and TITAN Products" section). Alternatively, you can run Nightshade on your CPU, but performance will be slower.
- If you have a GTX 1660, 1650, or 1550, a bug in the PyTorch library can prevent you from launching or using Nightshade properly. The team behind Nightshade may fix it in the future by moving from PyTorch to TensorFlow, but there are no workarounds at the moment. The issue also extends to the Ti variants of these cards. I launched the program by providing administrator access on my Windows 11 PC and waiting a few minutes for it to open. Your mileage may vary.
- If your artwork has lots of solid shapes or backgrounds, you may experience some artifacts. This can be countered by using a lower intensity of "poisoning."

As far as protecting your images with Nightshade goes, here's what you need to do. Keep in mind that this guide uses the Windows version, but these steps also apply to the macOS version.

1. Download the Windows or macOS version from the Nightshade download page. Nightshade downloads as an archived folder with no installation required. Once the download is complete, extract the ZIP folder and double-click Nightshade.exe to run the program.
2. Select the image you want to protect by clicking the Select button in the top-left. You can also select multiple images at once for batch processing.
3. Adjust the Intensity and Render Quality dials according to your preferences. Higher values add stronger poisoning but can also introduce artifacts in the output image. Next, click the Save As button under the Output section to select a destination for the output file. Click the Run Nightshade button at the bottom to run the program and poison your images.

Optionally, you can also select a poison tag. Nightshade will automatically detect and suggest a single-word tag if you don't, but you can change it if it's incorrect or too general. Keep in mind that this setting is only available when you process a single image in Nightshade.

If all goes well, you should get an image that looks identical to the original one to the human eye but completely different to an AI algorithm—protecting your artwork from generative AI.
