It outperforms Llama-3 and is intended mainly for generating synthetic data.

NVIDIA has open-sourced the latest version of its general-purpose large model Nemotron, with 340 billion parameters.

This Friday, NVIDIA announced the release of Nemotron-4 340B, a family of open models that developers can use to generate synthetic data for training large language models (LLMs) for commercial applications across industries such as healthcare, finance, manufacturing, and retail.

High-quality training data plays a vital role in the responsiveness, accuracy, and quality of custom LLMs, but robust datasets are often expensive and difficult to access. Through a unique open model license, Nemotron-4 340B gives developers a free, scalable way to generate synthetic data for building powerful LLMs.

The Nemotron-4 340B family includes Base, Instruct, and Reward models, which together form a pipeline for generating the synthetic data used to train and refine LLMs. The models are optimized to work with NVIDIA NeMo, an open-source framework for end-to-end model training that covers data curation, customization, and evaluation. They are also optimized for inference with the open-source NVIDIA TensorRT-LLM library.

NVIDIA says Nemotron-4 340B is now available for download from Hugging Face. Developers will soon be able to access the models at ai.nvidia.com, where they will be packaged as NVIDIA NIM microservices with a standard application programming interface that can be deployed anywhere.

Hugging Face download: https://huggingface.co/collections/nvidia/nemotron-4-340b-666b7ebaf1b3867caf2f1911

Navigating Nemotron to generate synthetic data

Large language models can help developers generate synthetic training data when they lack access to large, diverse labeled datasets.

The Nemotron-4 340B Instruct model creates diverse synthetic data that mimics the characteristics of real-world data, helping to improve data quality and thereby the performance and robustness of custom LLMs across domains.

To improve the quality of AI-generated data, developers can use the Nemotron-4 340B Reward model to filter for high-quality responses. Nemotron-4 340B Reward scores responses on five attributes: helpfulness, correctness, coherence, complexity, and verbosity. It currently ranks first on the Hugging Face RewardBench leaderboard, created by AI2, which evaluates the capabilities, safety, and pitfalls of reward models.

In this synthetic data pipeline, (1) the Nemotron-4 340B Instruct model first generates text-based synthetic output. Then (2) the evaluator model, Nemotron-4 340B Reward, assesses the generated text and provides feedback that guides iterative improvement and helps ensure the accuracy of the synthetic data.

Researchers can also customize the Nemotron-4 340B Base model with their own proprietary data, combined with the included HelpSteer2 dataset, to create their own instruct or reward models.

Paper address: https://d1qx31qr3h6wln.cloudfront.net/publications/nemotron_4_340b_8t_8t_0.pdf

Method introduction

The Nemotron-4-340B-Base architecture is a standard decoder-only Transformer with causal attention masks, Rotary Position Embeddings (RoPE), a SentencePiece tokenizer, and so on. Its hyperparameters are shown in Table 1. The model has 9.4 billion embedding parameters and 331.6 billion non-embedding parameters.

The following table shows some training details of the Nemotron-4-340B-Base model. It summarizes the three stages of the batch-size ramp, including the per-iteration time and the model FLOP/s utilization at each stage.

To develop a powerful reward model, NVIDIA collected a dataset of 10k human preference samples called HelpSteer2 and released it publicly.

Dataset address: https://huggingface.co/datasets/nvidia/HelpSteer2

The regression reward model Nemotron-4-340B-Reward builds on the Nemotron-4-340B-Base model and replaces the final softmax layer with a new reward head.
This head is a linear projection that maps the hidden state of the last layer to a five-dimensional vector of HelpSteer attributes (helpfulness, correctness, coherence, complexity, verbosity). At inference time, these attribute values can be aggregated into an overall reward through a weighted sum. This reward model provides a solid foundation for training Nemotron-4-340B-Instruct.

The study found that this model performs very well on RewardBench.

Fine-tune with NeMo, optimize inference with TensorRT-LLM

Using the open-source NVIDIA NeMo and NVIDIA TensorRT-LLM, developers can optimize the efficiency of their instruct and reward models to generate synthetic data and score responses.

All Nemotron-4 340B models are optimized with TensorRT-LLM to take advantage of tensor parallelism, a type of model parallelism in which individual weight matrices are split across multiple GPUs and servers, enabling efficient inference at scale.

Nemotron-4 340B Base, trained on 9 trillion tokens, can be customized with the NeMo framework to fit specific use cases or domains. This fine-tuning process benefits from the extensive pretraining data and yields more accurate outputs for specific downstream tasks.

The NeMo framework provides a variety of customization methods, including supervised fine-tuning and parameter-efficient fine-tuning methods such as low-rank adaptation (LoRA).

To improve model quality, developers can align their models using NeMo Aligner and datasets annotated by Nemotron-4 340B Reward. Alignment is a critical step in training LLMs, in which model behavior is fine-tuned with algorithms such as reinforcement learning from human feedback (RLHF) to ensure that outputs are safe, accurate, contextually appropriate, and consistent with the intended goals.

Enterprises seeking enterprise-grade support and security for production environments can also access NeMo and TensorRT-LLM through the cloud-native NVIDIA AI Enterprise software platform.
The platform provides an accelerated and efficient runtime for generative AI foundation models.

Figure 1 highlights the accuracy of the Nemotron-4 340B model family on selected tasks. Specifically:

Nemotron-4-340B-Base is comparable to open-access base models such as Llama-3 70B, Mixtral 8x22B, and Qwen-2 72B.

Nemotron-4-340B-Instruct surpasses the corresponding instruct models in instruction following and chat capabilities.

Nemotron-4-340B-Reward achieves the highest accuracy on RewardBench, surpassing even proprietary models such as GPT-4o-0513 and Gemini 1.5 Pro-0514.

After the launch of Nemotron-4-340B, the evaluation platform immediately released its benchmark results. On hard benchmarks such as Arena-Hard-Auto, its performance exceeded that of Llama-3-70b.

Does this mean that a new most powerful model in the industry has emerged?

Reference links:

https://blogs.nvidia.com/blog/nemotron-4-synthetic-data-generation-llm-training/

https://x.com/lmsysorg/status/1801682893988892716
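
To make the reward-scoring step described earlier more concrete, here is a minimal Python sketch of how five attribute scores might be combined into one reward by a weighted sum and then used to filter synthetic responses. It is only an illustration under stated assumptions: the weights, the threshold, and `toy_score_fn` (a stand-in for a call to the Reward model) are hypothetical and do not come from NVIDIA's paper or libraries.

```python
from typing import Callable, Dict, List, Tuple

# The five attributes named in the article.
ATTRIBUTES = ("helpfulness", "correctness", "coherence", "complexity", "verbosity")

# Hypothetical aggregation weights, chosen only for illustration.
EXAMPLE_WEIGHTS: Dict[str, float] = {
    "helpfulness": 0.5,
    "correctness": 0.3,
    "coherence": 0.2,
    "complexity": 0.0,
    "verbosity": 0.0,
}


def aggregate_reward(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Collapse per-attribute scores into one scalar via a weighted sum."""
    return sum(weights[name] * scores[name] for name in ATTRIBUTES)


def filter_responses(
    candidates: List[str],
    score_fn: Callable[[str], Dict[str, float]],
    weights: Dict[str, float],
    threshold: float,
) -> List[Tuple[str, float]]:
    """Keep candidates whose aggregated reward meets the threshold, best first."""
    kept = []
    for text in candidates:
        reward = aggregate_reward(score_fn(text), weights)
        if reward >= threshold:
            kept.append((text, reward))
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return kept


def toy_score_fn(text: str) -> Dict[str, float]:
    """Hypothetical scorer: longer answers score higher, capped at 4.0.

    A real pipeline would query the reward model here instead.
    """
    base = min(len(text.split()), 10) / 10 * 4.0
    return {name: base for name in ATTRIBUTES}


if __name__ == "__main__":
    drafts = ["short", "a much longer answer with many more words in it"]
    for text, reward in filter_responses(drafts, toy_score_fn, EXAMPLE_WEIGHTS, 2.0):
        print(f"{reward:.2f}  {text}")
```

In practice the candidates would come from the Instruct model and the scores from the Reward model; swapping `toy_score_fn` for such a call leaves the filtering logic unchanged.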

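The idea behind tensor parallelism mentioned above can also be illustrated in a few lines. The sketch below splits a weight matrix column-wise into shards, multiplies the input by each shard separately (as separate devices would), and concatenates the partial outputs. Column-wise splitting is only one common scheme; the article does not say which layout TensorRT-LLM uses, and all function names here are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, w: Matrix) -> Matrix:
    """Plain matrix product of x (n x k) and w (k x m)."""
    columns = list(zip(*w))
    return [[sum(a * b for a, b in zip(row, col)) for col in columns] for row in x]


def split_columns(w: Matrix, num_shards: int) -> List[Matrix]:
    """Split w into equally wide column blocks, one per simulated device."""
    n_cols = len(w[0])
    if n_cols % num_shards != 0:
        raise ValueError("number of columns must be divisible by num_shards")
    width = n_cols // num_shards
    return [[row[i * width:(i + 1) * width] for row in w] for i in range(num_shards)]


def tensor_parallel_matmul(x: Matrix, w: Matrix, num_shards: int) -> Matrix:
    """Compute x @ w shard by shard, then concatenate the partial outputs."""
    # Each partial product would run on its own device in a real system.
    partials = [matmul(x, shard) for shard in split_columns(w, num_shards)]
    return [sum((p[r] for p in partials), []) for r in range(len(x))]


if __name__ == "__main__":
    x = [[1, 2], [3, 4]]
    w = [[1, 0, 2, 1], [0, 1, 1, 3]]
    # The sharded result should match the unsharded product.
    print(tensor_parallel_matmul(x, w, 2) == matmul(x, w))
```

Because each shard only needs its own slice of the weights, no single device has to hold the full matrix, which is what makes the approach attractive for a 340-billion-parameter model.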

#ision

#ision

DeepMind robot plays table tennis, and its forehand and backhand slip into the air, completely defeating human beginners

Aug 09, 2024 pm 04:01 PM

DeepMind robot plays table tennis, and its forehand and backhand slip into the air, completely defeating human beginners

Aug 09, 2024 pm 04:01 PM

Claude has become lazy too! Netizen: Learn to give yourself a holiday

Sep 02, 2024 pm 01:56 PM

Claude has become lazy too! Netizen: Learn to give yourself a holiday

Sep 02, 2024 pm 01:56 PM

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

Aug 21, 2024 pm 07:33 PM

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

Aug 21, 2024 pm 07:33 PM

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Aug 22, 2024 pm 10:35 PM

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Aug 22, 2024 pm 10:35 PM

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

Sep 03, 2024 pm 05:18 PM

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

Sep 03, 2024 pm 05:18 PM

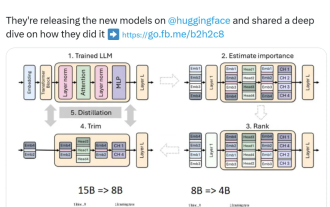

Nvidia plays with pruning and distillation: halving the parameters of Llama 3.1 8B to achieve better performance with the same size

Aug 16, 2024 pm 04:42 PM

Nvidia plays with pruning and distillation: halving the parameters of Llama 3.1 8B to achieve better performance with the same size

Aug 16, 2024 pm 04:42 PM

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Aug 08, 2024 am 07:02 AM

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Aug 08, 2024 am 07:02 AM