All CVPR 2024 awards announced! Nearly 10,000 people attended the conference offline, and a Chinese researcher from Google won the best paper award

In the early morning of June 20th, Beijing time, CVPR 2024, the top international computer vision conference held in Seattle, officially announced the best paper and other awards.

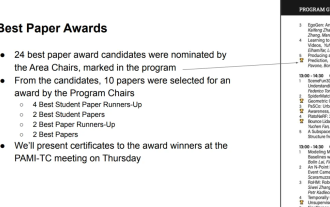

A total of 10 papers received awards this year: 2 best papers and 2 best student papers, plus 2 best paper runners-up and 4 best student paper runners-up.

CVPR is the top conference in computer vision (CV) and attracts a large number of research institutions and universities every year. According to the statistics, 11,532 papers were submitted this year and 2,719 were accepted, an acceptance rate of 23.6%.
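As a quick check of the quoted acceptance rate, the two figures above can be divided directly. This is only an illustrative calculation; the variable names are chosen here and are not from any official source.

```python
# Submission and acceptance counts as quoted in the article.
submitted = 11_532
accepted = 2_719

# Acceptance rate is simply accepted / submitted.
acceptance_rate = accepted / submitted

# Format as a percentage with one decimal place.
print(f"Acceptance rate: {acceptance_rate:.1%}")
```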

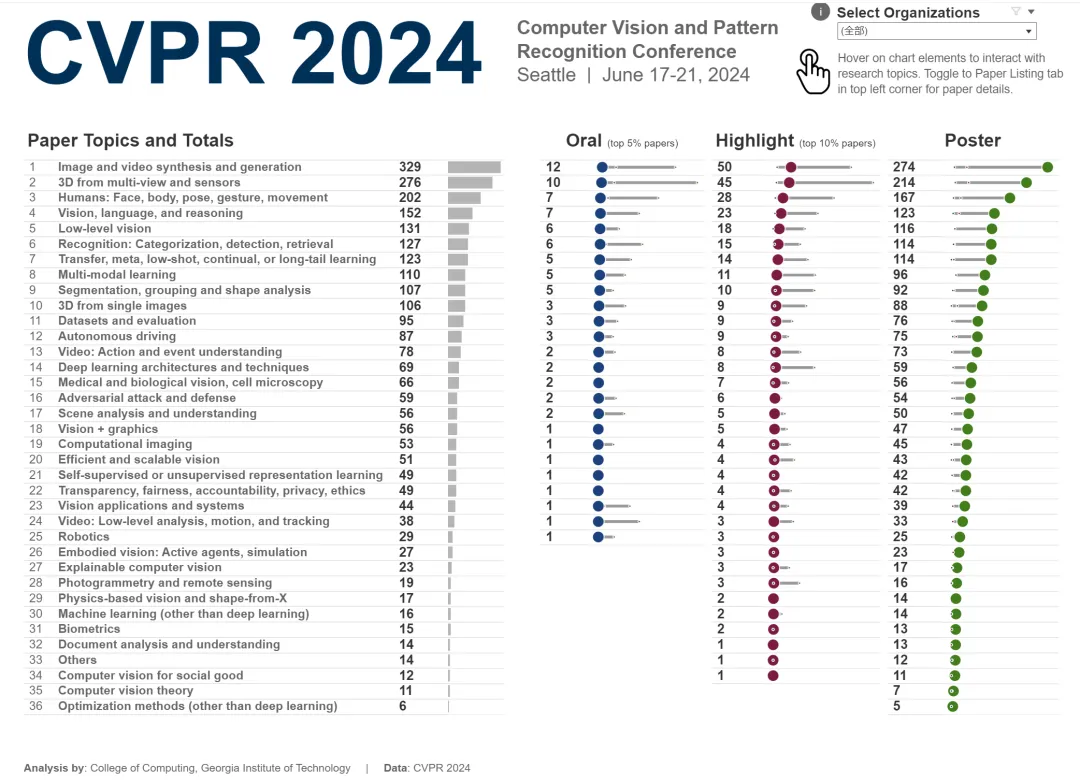

According to Georgia Institute of Technology’s statistical analysis of CVPR 2024 data, the most popular research topic is image and video synthesis and generation, with 329 papers.

The total number of attendees this year is higher than in previous years, and more and more people are choosing to attend in person.

Best Paper

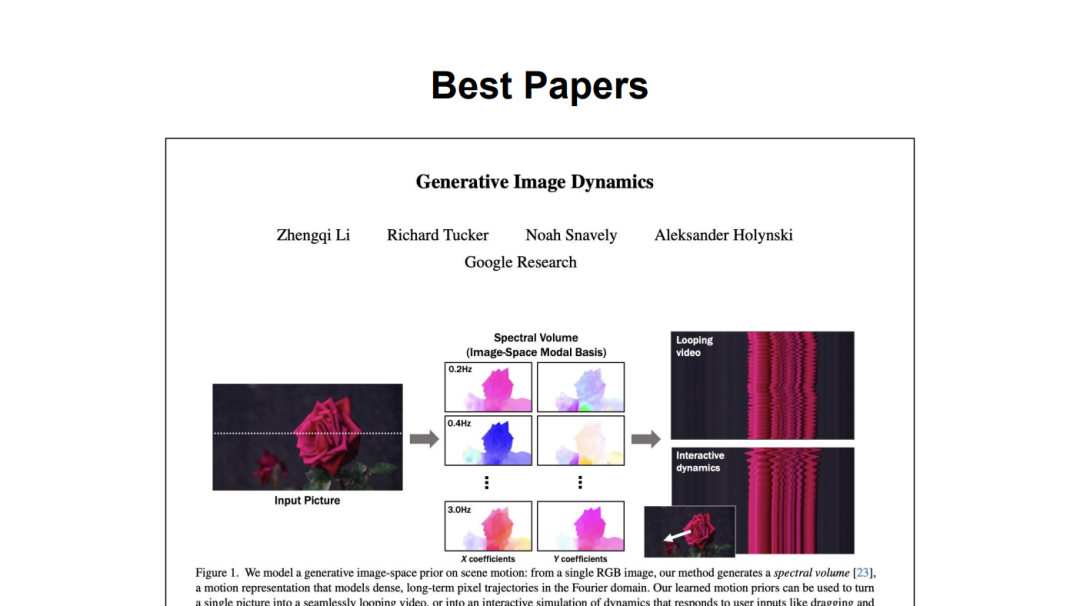

Paper 1: Generative Image Dynamics

Authors: Zhengqi Li, Richard Tucker, Noah Snavely, Aleksander Holynski

Institution: Google Research

Paper address: https://arxiv.org/pdf/2309.07906

Zhengqi Li is a research scientist at Google DeepMind. He received his PhD in Computer Science from Cornell University, where he was advised by Professor Noah Snavely. His research has received several awards, including a 2020 Google PhD Fellowship, a 2020 Adobe Research Fellowship, Best Paper Honorable Mentions at CVPR 2019 and CVPR 2023, and the ICCV 2023 Best Student Paper Award.

Abstract: This study proposes an approach to modeling an image-space prior on scene motion. The prior is learned from a collection of motion trajectories extracted from real video sequences that depict the natural oscillatory dynamics of objects such as trees and clothing swaying in the wind. The study models dense, long-term motion in the Fourier domain as a spectral volume, which the team found is well suited to prediction with diffusion models.

Given a single image, the trained model uses a frequency-coordinated diffusion sampling process to predict the spectral volume, which can then be converted into a motion texture that spans the entire video. The animated motion can be attenuated or magnified by adjusting the amplitude of the motion texture.
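To make the Fourier-domain idea concrete, here is a minimal sketch of how a handful of per-pixel Fourier coefficients could be turned into a displacement over time, and how scaling their amplitude attenuates or magnifies the motion. It is not the authors' implementation: the function name, the coefficient values, the single-pixel 1D displacement, and the frame count are all assumptions made for illustration.

```python
import cmath
import math


def trajectory_from_spectrum(coeffs, num_frames, amplitude_scale=1.0):
    """Convert hypothetical Fourier coefficients for one pixel into displacements.

    coeffs[k - 1] is the complex coefficient for frequency index k. The constant
    term is left out, so the pixel oscillates around its rest position.
    """
    displacements = []
    for t in range(num_frames):
        value = 0.0
        for k, c in enumerate(coeffs, start=1):
            # Each frequency contributes one sinusoid: Re(c * exp(2*pi*i*k*t/T)).
            phase = 2.0 * math.pi * k * t / num_frames
            value += (amplitude_scale * c * cmath.exp(1j * phase)).real
        displacements.append(value)
    return displacements


# Illustrative coefficients for two low frequencies (made-up values).
coeffs = [2.0 + 0.0j, 0.0 - 0.5j]

base = trajectory_from_spectrum(coeffs, num_frames=8)
magnified = trajectory_from_spectrum(coeffs, num_frames=8, amplitude_scale=2.0)
attenuated = trajectory_from_spectrum(coeffs, num_frames=8, amplitude_scale=0.5)
```

Because the conversion is linear in the coefficients, doubling `amplitude_scale` doubles every displacement while leaving the timing of the oscillation unchanged.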

Paper 2: Rich Human Feedback for Text-to-Image Generation

Institutions: University of California, San Diego, Google Research, University of Southern California, University of Cambridge, Brandeis University

Paper address: https://arxiv.org/pdf/2312.10240

The paper's author list shows that many Chinese researchers took part in this work. Among them, Youwei Liang is a doctoral student in the Department of Electrical and Computer Engineering at the University of California, San Diego, and was previously an undergraduate majoring in information and computer science at South China Agricultural University. Junfeng He works at Google and previously earned a master's degree from Tsinghua University.

Abstract: Recently, text-to-image (T2I) generative models have made significant progress and can generate high-resolution images from text descriptions. However, many generated images still suffer from artifacts or implausible content, inconsistency with the text description, and poor aesthetics.

Inspired by the successful use of reinforcement learning with human feedback (RLHF) for large language models, this research enriches the feedback signal by:

marking image regions that are implausible or misaligned with the text;

annotating which words in the text prompt are misrepresented or missing in the image.

The study collected this rich human feedback on 18K generated images to form the RichHF-18K dataset, and trained a multimodal transformer to predict the feedback automatically. It demonstrates that the predicted feedback can be used to improve image generation, for example by selecting high-quality training data to fine-tune generative models, or by creating masks to inpaint problematic image regions.
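The data-selection use case mentioned above can be pictured as a simple filter over predicted scores. The sketch below is an assumption-laden illustration, not the paper's pipeline: the `ScoredSample` fields, the score range of 0 to 1, the file names, and the 0.8 threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScoredSample:
    prompt: str
    image_path: str
    predicted_plausibility: float  # hypothetical predicted score in [0, 1]
    predicted_alignment: float     # hypothetical predicted score in [0, 1]


def select_for_finetuning(samples, min_score=0.8):
    """Keep samples whose weakest predicted score still clears the threshold."""
    return [
        s for s in samples
        if min(s.predicted_plausibility, s.predicted_alignment) >= min_score
    ]


# Made-up examples standing in for generated images with predicted feedback.
samples = [
    ScoredSample("a red bicycle", "img_001.png", 0.93, 0.90),
    ScoredSample("a cat reading a book", "img_002.png", 0.55, 0.88),
    ScoredSample("two dogs on a beach", "img_003.png", 0.85, 0.60),
]

selected = select_for_finetuning(samples)
print([s.image_path for s in selected])
```

Taking the minimum of the two scores is one possible design choice: it rejects an image that is plausible but ignores the prompt, as well as one that follows the prompt but contains artifacts.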

Best Paper Runner-up

Paper 1: EventPS: Real-Time Photometric Stereo Using an Event Camera

Authors: Bohan Yu, Jieji Ren, Jin Han, Feishi Wang, Jinxiu Liang, Boxin Shi

Institutions: Peking University, Shanghai Jiao Tong University, etc.

Paper address: https://openaccess.thecvf.com/content/CVPR2024/papers/Yu_EventPS_Real-Time_Photometric_Stereo_Using_an_Event_Camera_CVPR_2024_paper.pdf

Paper 2: pixelSplat: 3D Gaussian Splats from Image Pairs for Scalable Generalizable 3D Reconstruction

Authors: David Charatan, Sizhe Lester Li, Andrea Tagliasacchi, Vincent Sitzmann

Institutions: MIT, Simon Fraser University, University of Toronto

Paper address: https://openaccess.thecvf.com/content/CVPR2024/papers/Charatan_pixelSplat_3D_Gaussian_Splats_from_Image_Pairs_for_Scalable_Generalizable_CVPR_2024_paper.pdf

Best Student Paper

Paper 1: BioCLIP: A Vision Foundation Model for the Tree of Life

Authors: Samuel Stevens, Jiaman Wu, Matthew J Thompson, Elizabeth G Campolongo, Chan Hee Song, David Edward Carlyn, Li Dong, Wasila M Dahdul, Charles Stewart, Tanya Berger-Wolf, Wei-Lun Chao, Yu Su

Institution: Ohio State University, Microsoft Research, University of California, Irvine, Rensselaer Polytechnic Institute

Paper address: https://arxiv.org/pdf/2311.18803

Abstract: Images of the natural world, collected by cameras ranging from drones to personal cell phones, are increasingly becoming a rich source of biological information. There has been an explosion of computational methods and tools, especially in computer vision, for extracting biologically relevant information from scientific and conservation images. However, most of them are bespoke methods designed for specific tasks and are not easily adapted or extended to new problems, contexts, and datasets. Researchers urgently need a vision model that can handle general organismal biology questions on images.

To achieve this goal, the researchers curated and released TREEOFLIFE-10M, the largest and most diverse ML-ready biological image dataset. They then developed BIOCLIP, a foundation model for the tree of life, which exploits the unique properties of biology captured by TREEOFLIFE-10M: the abundance and diversity of images of plants, animals and fungi, together with rich structured biological knowledge.

Figure caption: Treemap of the 108 phyla in TREEOFLIFE-10M.

The researchers rigorously benchmarked their method on a range of fine-grained biological classification tasks and found that BIOCLIP consistently and significantly outperformed existing baselines (by 16% to 17% absolute).

Intrinsic evaluation shows that BIOCLIP has learned a hierarchical representation consistent with the tree of life, which helps explain its strong generalizability.

Paper 2: Mip-Splatting: Alias-free 3D Gaussian Splatting

Authors: Zehao Yu, Anpei Chen, Binbin Huang, Torsten Sattler, Andreas Geiger

Institutions: University of Tübingen, Tübingen Artificial Intelligence Center, ShanghaiTech University, Czech Technical University in Prague

Paper address: https://arxiv.org/abs/2311.16493

Abstract: Recently, 3D Gaussian Splatting has demonstrated impressive results in novel view synthesis, reaching high levels of fidelity and efficiency. However, when the sampling rate changes (for example, by changing the focal length or camera distance), strong artifacts may appear.

As the figure in the paper illustrates, 3D Gaussian Splatting renders images by representing 3D objects as 3D Gaussians that are projected onto the image plane, followed by a 2D dilation in screen space (a). The method's inherent shrinkage bias leads to degenerate 3D Gaussians that exceed the sampling limit, illustrated by the δ function in (b), while they still render similarly to 2D because of the dilation operation. However, when the sampling rate changes (via the focal length or camera distance), strong dilation effects (c) and high-frequency artifacts (d) are observed.

The research team found that the reason for this phenomenon can be attributed to the lack of 3D frequency constraints and the use of a 2D dilation filter. To solve this problem, they introduced a 3D smoothing filter that constrains the size of 3D Gaussian primitives according to the maximum sampling frequency induced by the input view, thereby eliminating high-frequency artifacts when zooming in.

In addition, the authors replaced the 2D dilation filter with a 2D Mip filter, which approximates a 2D box filter and effectively mitigates aliasing and dilation artifacts. Evaluations, including training on single-scale images and testing at multiple scales, confirm the effectiveness of the method.
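The intuition behind the 3D smoothing filter can be sketched with a standard fact: convolving a Gaussian with a Gaussian low-pass filter adds their variances, so a primitive can never become narrower than the filter. The snippet below is a simplified, isotropic illustration under stated assumptions, not the authors' formulation: the function name, the `filter_scale` parameter, and the choice of a filter width proportional to the sampling interval `1 / max_sampling_rate` are all hypothetical.

```python
import math


def smoothed_variance(variance, max_sampling_rate, filter_scale=0.5):
    """Variance of an isotropic Gaussian after low-pass filtering.

    Assumption: the low-pass filter is a Gaussian whose standard deviation is
    filter_scale times the sampling interval (1 / max_sampling_rate).
    Convolving two Gaussians adds their variances.
    """
    filter_std = filter_scale / max_sampling_rate
    return variance + filter_std ** 2


# A degenerate (zero-width) primitive is widened to the size of the filter.
degenerate = smoothed_variance(0.0, max_sampling_rate=10.0)

# A primitive much larger than the sampling interval is barely changed.
large = smoothed_variance(4.0, max_sampling_rate=10.0)

print(math.sqrt(degenerate), math.sqrt(large))
```

A higher sampling rate gives a narrower filter, so finer detail is allowed only where the input views actually sample the scene densely enough to support it.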

Best Student Paper Runner-up

Paper: SpiderMatch: 3D Shape Matching with Global Optimality and Geometric Consistency

Authors: Paul Roetzer, Florian Bernard

Institution: University of Bonn

Link: https://openaccess.thecvf.com/content/CVPR2024/papers/Roetzer_SpiderMatch_3D_Shape_Matching_with_Global_Optimality_and_Geometric_Consistency_CVPR_2024_paper.pdf

Paper: Image Processing GNN: Breaking Rigidity in Super-Resolution

Authors: Yuchuan Tian, Hanting Chen, Chao Xu, Yunhe Wang

Institutions: Peking University, Huawei Noah's Ark Laboratory

Link: https://openaccess.thecvf.com/content/CVPR2024/papers/Tian_Image_Processing_GNN_Breaking_Rigidity_in_Super-Resolution_CVPR_2024_paper.pdf

Paper: Objects as volumes: A stochastic geometry view of opaque solids

Authors: Bailey Miller, Hanyu Chen, Alice Lai, Ioannis Gkioulekas

Institution: Carnegie Mellon University

Link: https://arxiv.org/pdf/2312.15406v2

Paper: Comparing the Decision-Making Mechanisms by Transformers and CNNs via Explanation Methods

Authors: Mingqi Jiang, Saeed Khorram, Li Fuxin

Institution: Oregon State University

Link: https://openaccess.thecvf.com/content/CVPR2024/papers/Jiang_Comparing_the_Decision-Making_Mechanisms_by_Transformers_and_CNNs_via_Explanation_CVPR_2024_paper.pdf

Other Awards

The conference also announced the PAMI TC awards, including the Longuet-Higgins Award, the Young Researcher Award, and the Thomas S. Huang Memorial Award.

Longuet-Higgins Award

The Longuet-Higgins Award is the "Computer Vision Fundamental Contribution Award" presented by the IEEE Computer Society Pattern Analysis and Machine Intelligence (PAMI) Technical Committee at each year's CVPR. It recognizes CVPR papers from ten years earlier that have had a significant impact on computer vision research. The award is named after theoretical chemist and cognitive scientist H. Christopher Longuet-Higgins.

This year’s award-winning paper is "Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation".

Authors: Ross Girshick, Jeff Donahue, Trevor Darrell and Jitendra Malik

Institution: UC Berkeley

Paper link: https://arxiv.org/abs/1311.2524

Young Researcher Awards

The Young Researcher Awards recognize young scientists and encourage them to continue doing groundbreaking work. The selection criterion is that recipients received their PhD less than 7 years ago.

This year’s winners are Angjoo Kanazawa (UC Berkeley) and Carl Vondrick (Columbia University).

In addition, Katie Bouman (Caltech) received an honorable mention for the Young Researcher Award.

Thomas S. Huang Memorial Award

At CVPR 2020, in memory of Professor Thomas S. Huang (Huang Xutao), the PAMI TC Awards Committee approved the establishment of the Thomas S. Huang Memorial Award to recognize researchers who are regarded as role models in CV research, education and service. The award has been presented since 2021. Recipients must have held their PhD for at least 7 years and are preferably mid-career (no more than 25 years).

This year’s winner is Oxford University professor Andrea Vedaldi.

For more information, please refer to: https://media.eventhosts.cc/Conferences/CVPR2024/OpeningRemarkSlides.pdf

Reference link:

https://public.tableau.com/views/CVPR2024/CVPRtrends?%3AshowVizHome=no&continueFlag=6a947f6367e90acd982f7ee49a495fe2

The above is the detailed content of All CVPR 2024 awards announced! Nearly 10,000 people attended the conference offline, and a Chinese researcher from Google won the best paper award. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1664

1664

14

14

1421

1421

52

52

1315

1315

25

25

1266

1266

29

29

1239

1239

24

24

A Diffusion Model Tutorial Worth Your Time, from Purdue University

Apr 07, 2024 am 09:01 AM

A Diffusion Model Tutorial Worth Your Time, from Purdue University

Apr 07, 2024 am 09:01 AM

Diffusion can not only imitate better, but also "create". The diffusion model (DiffusionModel) is an image generation model. Compared with the well-known algorithms such as GAN and VAE in the field of AI, the diffusion model takes a different approach. Its main idea is a process of first adding noise to the image and then gradually denoising it. How to denoise and restore the original image is the core part of the algorithm. The final algorithm is able to generate an image from a random noisy image. In recent years, the phenomenal growth of generative AI has enabled many exciting applications in text-to-image generation, video generation, and more. The basic principle behind these generative tools is the concept of diffusion, a special sampling mechanism that overcomes the limitations of previous methods.

Generate PPT with one click! Kimi: Let the 'PPT migrant workers' become popular first

Aug 01, 2024 pm 03:28 PM

Generate PPT with one click! Kimi: Let the 'PPT migrant workers' become popular first

Aug 01, 2024 pm 03:28 PM

Kimi: In just one sentence, in just ten seconds, a PPT will be ready. PPT is so annoying! To hold a meeting, you need to have a PPT; to write a weekly report, you need to have a PPT; to make an investment, you need to show a PPT; even when you accuse someone of cheating, you have to send a PPT. College is more like studying a PPT major. You watch PPT in class and do PPT after class. Perhaps, when Dennis Austin invented PPT 37 years ago, he did not expect that one day PPT would become so widespread. Talking about our hard experience of making PPT brings tears to our eyes. "It took three months to make a PPT of more than 20 pages, and I revised it dozens of times. I felt like vomiting when I saw the PPT." "At my peak, I did five PPTs a day, and even my breathing was PPT." If you have an impromptu meeting, you should do it

All CVPR 2024 awards announced! Nearly 10,000 people attended the conference offline, and a Chinese researcher from Google won the best paper award

Jun 20, 2024 pm 05:43 PM

All CVPR 2024 awards announced! Nearly 10,000 people attended the conference offline, and a Chinese researcher from Google won the best paper award

Jun 20, 2024 pm 05:43 PM

In the early morning of June 20th, Beijing time, CVPR2024, the top international computer vision conference held in Seattle, officially announced the best paper and other awards. This year, a total of 10 papers won awards, including 2 best papers and 2 best student papers. In addition, there were 2 best paper nominations and 4 best student paper nominations. The top conference in the field of computer vision (CV) is CVPR, which attracts a large number of research institutions and universities every year. According to statistics, a total of 11,532 papers were submitted this year, and 2,719 were accepted, with an acceptance rate of 23.6%. According to Georgia Institute of Technology’s statistical analysis of CVPR2024 data, from the perspective of research topics, the largest number of papers is image and video synthesis and generation (Imageandvideosyn

PyCharm Community Edition Installation Guide: Quickly master all the steps

Jan 27, 2024 am 09:10 AM

PyCharm Community Edition Installation Guide: Quickly master all the steps

Jan 27, 2024 am 09:10 AM

Quick Start with PyCharm Community Edition: Detailed Installation Tutorial Full Analysis Introduction: PyCharm is a powerful Python integrated development environment (IDE) that provides a comprehensive set of tools to help developers write Python code more efficiently. This article will introduce in detail how to install PyCharm Community Edition and provide specific code examples to help beginners get started quickly. Step 1: Download and install PyCharm Community Edition To use PyCharm, you first need to download it from its official website

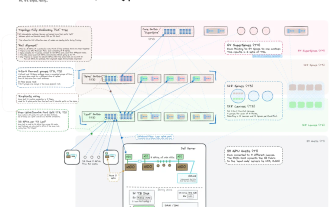

From bare metal to a large model with 70 billion parameters, here is a tutorial and ready-to-use scripts

Jul 24, 2024 pm 08:13 PM

From bare metal to a large model with 70 billion parameters, here is a tutorial and ready-to-use scripts

Jul 24, 2024 pm 08:13 PM

We know that LLM is trained on large-scale computer clusters using massive data. This site has introduced many methods and technologies used to assist and improve the LLM training process. Today, what we want to share is an article that goes deep into the underlying technology and introduces how to turn a bunch of "bare metals" without even an operating system into a computer cluster for training LLM. This article comes from Imbue, an AI startup that strives to achieve general intelligence by understanding how machines think. Of course, turning a bunch of "bare metal" without an operating system into a computer cluster for training LLM is not an easy process, full of exploration and trial and error, but Imbue finally successfully trained an LLM with 70 billion parameters. and in the process accumulate

AI in use | AI created a life vlog of a girl living alone, which received tens of thousands of likes in 3 days

Aug 07, 2024 pm 10:53 PM

AI in use | AI created a life vlog of a girl living alone, which received tens of thousands of likes in 3 days

Aug 07, 2024 pm 10:53 PM

Editor of the Machine Power Report: Yang Wen The wave of artificial intelligence represented by large models and AIGC has been quietly changing the way we live and work, but most people still don’t know how to use it. Therefore, we have launched the "AI in Use" column to introduce in detail how to use AI through intuitive, interesting and concise artificial intelligence use cases and stimulate everyone's thinking. We also welcome readers to submit innovative, hands-on use cases. Video link: https://mp.weixin.qq.com/s/2hX_i7li3RqdE4u016yGhQ Recently, the life vlog of a girl living alone became popular on Xiaohongshu. An illustration-style animation, coupled with a few healing words, can be easily picked up in just a few days.

A must-read for technical beginners: Analysis of the difficulty levels of C language and Python

Mar 22, 2024 am 10:21 AM

A must-read for technical beginners: Analysis of the difficulty levels of C language and Python

Mar 22, 2024 am 10:21 AM

Title: A must-read for technical beginners: Difficulty analysis of C language and Python, requiring specific code examples In today's digital age, programming technology has become an increasingly important ability. Whether you want to work in fields such as software development, data analysis, artificial intelligence, or just learn programming out of interest, choosing a suitable programming language is the first step. Among many programming languages, C language and Python are two widely used programming languages, each with its own characteristics. This article will analyze the difficulty levels of C language and Python

Five programming software for getting started with learning C language

Feb 19, 2024 pm 04:51 PM

Five programming software for getting started with learning C language

Feb 19, 2024 pm 04:51 PM

As a widely used programming language, C language is one of the basic languages that must be learned for those who want to engage in computer programming. However, for beginners, learning a new programming language can be difficult, especially due to the lack of relevant learning tools and teaching materials. In this article, I will introduce five programming software to help beginners get started with C language and help you get started quickly. The first programming software was Code::Blocks. Code::Blocks is a free, open source integrated development environment (IDE) for