Runway and Luma are at it again! Yann LeCun fires back: No matter how good you are, you are not a 'world model'

Machine Power Report

Editor: Yang Wen

The wave of artificial intelligence represented by large models and AIGC has quietly changed the way we live and work, but most people still don't know how to use it.

Therefore, we have launched the "AI in Use" column to introduce the use of AI in detail through intuitive, interesting and concise artificial intelligence use cases and stimulate everyone's thinking.

We also welcome readers to submit innovative use cases that they have personally practiced.

The AI video industry is "fighting" again!

On June 29, the well-known generative AI platform Runway announced that its latest model, Gen-3 Alpha, had opened for testing to some users.

On the same day, Luma launched a new keyframe function and opened it to all users for free.

As the saying goes, "you have a clever plan, I have a ladder over the wall": the two keep trying to outdo each other.

This made netizens extremely happy, "June, what a wonderful month!"

"Crazy May, crazy June, so crazy that I can't stop!"

-1-

Runway kills Hollywood

Two weeks ago, when the AI video "king" Runway launched its new video generation model Gen-3 Alpha, it announced that the model would first be available to paying users "within a few days", and that a free version would also open to all users at some point in the future.

On June 29, Runway fulfilled its promise and announced that its latest Gen-3 Alpha has started testing for some users.

Gen-3 Alpha is highly sought after because, compared with the previous generation, it shows significant improvements in lighting, image quality, composition, fidelity to the text prompt, physical simulation, and motion consistency. Even its slogan is "For artists, by artists".

How well does Gen-3 Alpha perform? Creative netizens always have the most say. Take a look:

Movie footage of a terrifying monster rising from the Thames River in London:

A sad teddy bear is crying so hard that he blows his nose with a tissue:

A British girl in a gorgeous dress is walking on the street where the castle stands, with speeding vehicles and slow horses beside her:

A huge lizard, studded with gorgeous jewelry and pearls, walks through dense vegetation. The lizard sparkles in the light, and the footage is as realistic as a documentary.

There is also a diamond-encrusted toad covered in rubies and sapphires:

In the city streets at night, the rain creates the reflection of neon lights.

The camera starts from the light reflected in the puddle, slowly rises to show the glowing neon billboard, and then continues to zoom back to show the entire street soaked by rain.

The camera movement: it first focuses on the reflection in the puddle, then lifts and pulls back in one continuous motion to reveal the city on this rainy night.

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

Yellow mold growing in a petri dish, under dim and mysterious light, takes on cool tones and is full of movement.

In the autumn forest, the ground is covered with orange, yellow and red fallen leaves.

A gentle breeze blows by, and the camera moves forward close to the ground. A whirlwind begins to form, picking up the fallen leaves into a spiral. The camera rises with the leaves and circles the rotating column.

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

Starting from a low angle in a graffiti-covered tunnel, the camera advances steadily along the road through a short, dark section. As it emerges on the other side, the camera rises quickly to reveal a large field of colorful wildflowers surrounded by snow-capped mountains.

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

A close-up shot of hands playing the piano: fingers dance over the keys with no hand distortion and smooth movements. The only flaw is that there is no ring on the ring finger, yet a ring's shadow appears out of nowhere.

The buzz even drew out Runway co-founder Cristóbal Valenzuela, who generated videos with his homemade "bee camera".

Put the camera on the back of the bee, and the scene captured is like this:

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

Put it on the bee's face, and it looks like this:

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

So, what does this pocket camera look like?

If AI continues to evolve like this, Hollywood actors will go on strike again.

-2-

Luma’s new keyframe function, smooth picture transition

On June 29, Luma AI launched its keyframe function and, in a generous move, opened it to all users for free.

Users only need to upload the starting and ending images and add text descriptions, and Luma can generate Hollywood-level special effects videos.

For example, X netizen @hungrydonke uploaded two keyframe photos:

He then entered the prompt "A bunch of black confetti suddenly falls". The effect is as follows:

Netizen @JonathanSolder3 first used Midjourney to generate two pictures:

He then used the Luma keyframe function to generate an animation of a Super Saiyan transformation. According to the author, Luma does not need a power-up prompt; entering "Super Saiyan" is enough.

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

Some netizens use this function to handle the transition between shots, mixing and matching classic fairy tales to generate an animation called "The Wolf, the Warrior, and the Wardrobe".

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

Devil turns into angel:

Orange turns into chick:

Starbucks logo transformation:

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

The AI video industry is in such a frenzy. God knows how Sora can keep its composure and still not show up.

-3-

Yann LeCun fires back: They don't understand physics at all

When Sora was released at the beginning of the year, "world model" suddenly became a hot concept.

Later, Google’s Genie also flew the banner of the “world model”. When Runway launched Gen-3 Alpha this time, the company said it had “taken an important step towards building a universal world model.”

What exactly is a world model?

In fact, there is no standard definition, but AI scientists believe that humans and animals implicitly grasp the rules by which the world operates, so that they can "predict" what will happen next and act accordingly. World model research aims to give AI this ability.

Many people believe that the videos generated by applications such as Sora, Luma, and Runway are quite realistic and can also generate new video content in chronological order. They seem to have learned the ability to "predict" the development of things. This coincides with the goal pursued by world model research.

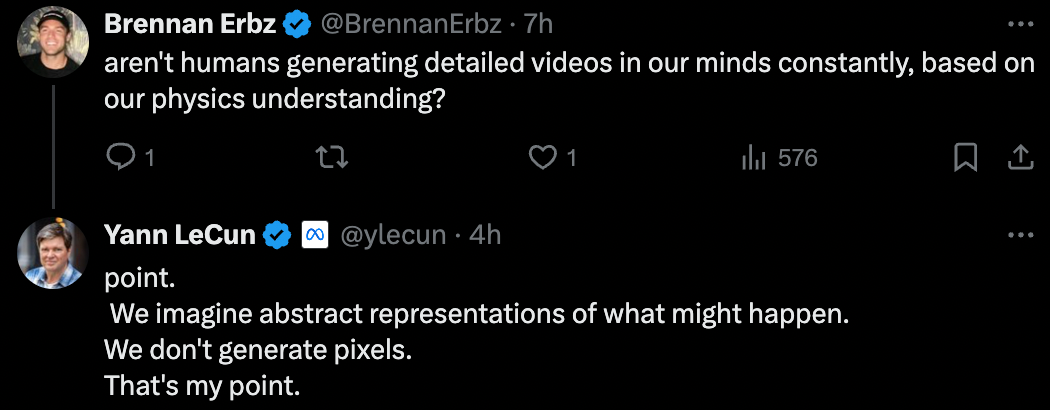

However, Turing Award winner Yann LeCun has been "pouring cold water".

He believes that "producing the most realistic-looking videos based on prompts does not mean that the system understands the physical world; generation is very different from causal prediction from a world model."

On July 1, Yann LeCun published six posts in a row attacking video generation models.

He reposted an AI-generated gymnastics video in which figures' heads vanish into thin air or four legs suddenly appear, with bizarre frames throughout.

Video link: https://www.php.cn/link/dbf138511ed1d9278bde43cc0000e49a

Yann LeCun said that the video generation model does not understand basic physical principles, let alone the structure of the human body.

"Sora and other video generative models have similar problems. There is no doubt that video generation technology will become more advanced over time, but a good world model that truly understands physics will not be generative. All birds and mammals understand physics better than any video generation model, yet none of them can generate detailed videos," said Yann LeCun.

Some netizens questioned: Don’t humans constantly generate detailed “videos” in their minds based on their understanding of physics?

Yann LeCun answered questions online, "We envision abstract scenarios that may occur, rather than generating pixel images. This is the point I want to express."

Yann LeCun retorts: No, they don’t. They just generate abstract scenarios of what might happen, which is very different from generating detailed videos.

In the future, we will bring more AIGC case demonstrations through new columns, and everyone is welcome to join the group for communication.

The above is the detailed content of Runway and Luma are fighting again! Yann LeCun bombards: No matter how good you are, you are not a 'world model'. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1664

1664

14

14

1423

1423

52

52

1317

1317

25

25

1268

1268

29

29

1246

1246

24

24

DeepMind robot plays table tennis, and its forehand and backhand slip into the air, completely defeating human beginners

Aug 09, 2024 pm 04:01 PM

DeepMind robot plays table tennis, and its forehand and backhand slip into the air, completely defeating human beginners

Aug 09, 2024 pm 04:01 PM

But maybe he can’t defeat the old man in the park? The Paris Olympic Games are in full swing, and table tennis has attracted much attention. At the same time, robots have also made new breakthroughs in playing table tennis. Just now, DeepMind proposed the first learning robot agent that can reach the level of human amateur players in competitive table tennis. Paper address: https://arxiv.org/pdf/2408.03906 How good is the DeepMind robot at playing table tennis? Probably on par with human amateur players: both forehand and backhand: the opponent uses a variety of playing styles, and the robot can also withstand: receiving serves with different spins: However, the intensity of the game does not seem to be as intense as the old man in the park. For robots, table tennis

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

Aug 21, 2024 pm 07:33 PM

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

Aug 21, 2024 pm 07:33 PM

On August 21, the 2024 World Robot Conference was grandly held in Beijing. SenseTime's home robot brand "Yuanluobot SenseRobot" has unveiled its entire family of products, and recently released the Yuanluobot AI chess-playing robot - Chess Professional Edition (hereinafter referred to as "Yuanluobot SenseRobot"), becoming the world's first A chess robot for the home. As the third chess-playing robot product of Yuanluobo, the new Guoxiang robot has undergone a large number of special technical upgrades and innovations in AI and engineering machinery. For the first time, it has realized the ability to pick up three-dimensional chess pieces through mechanical claws on a home robot, and perform human-machine Functions such as chess playing, everyone playing chess, notation review, etc.

Claude has become lazy too! Netizen: Learn to give yourself a holiday

Sep 02, 2024 pm 01:56 PM

Claude has become lazy too! Netizen: Learn to give yourself a holiday

Sep 02, 2024 pm 01:56 PM

The start of school is about to begin, and it’s not just the students who are about to start the new semester who should take care of themselves, but also the large AI models. Some time ago, Reddit was filled with netizens complaining that Claude was getting lazy. "Its level has dropped a lot, it often pauses, and even the output becomes very short. In the first week of release, it could translate a full 4-page document at once, but now it can't even output half a page!" https:// www.reddit.com/r/ClaudeAI/comments/1by8rw8/something_just_feels_wrong_with_claude_in_the/ in a post titled "Totally disappointed with Claude", full of

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Aug 22, 2024 pm 10:35 PM

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Aug 22, 2024 pm 10:35 PM

At the World Robot Conference being held in Beijing, the display of humanoid robots has become the absolute focus of the scene. At the Stardust Intelligent booth, the AI robot assistant S1 performed three major performances of dulcimer, martial arts, and calligraphy in one exhibition area, capable of both literary and martial arts. , attracted a large number of professional audiences and media. The elegant playing on the elastic strings allows the S1 to demonstrate fine operation and absolute control with speed, strength and precision. CCTV News conducted a special report on the imitation learning and intelligent control behind "Calligraphy". Company founder Lai Jie explained that behind the silky movements, the hardware side pursues the best force control and the most human-like body indicators (speed, load) etc.), but on the AI side, the real movement data of people is collected, allowing the robot to become stronger when it encounters a strong situation and learn to evolve quickly. And agile

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

At this ACL conference, contributors have gained a lot. The six-day ACL2024 is being held in Bangkok, Thailand. ACL is the top international conference in the field of computational linguistics and natural language processing. It is organized by the International Association for Computational Linguistics and is held annually. ACL has always ranked first in academic influence in the field of NLP, and it is also a CCF-A recommended conference. This year's ACL conference is the 62nd and has received more than 400 cutting-edge works in the field of NLP. Yesterday afternoon, the conference announced the best paper and other awards. This time, there are 7 Best Paper Awards (two unpublished), 1 Best Theme Paper Award, and 35 Outstanding Paper Awards. The conference also awarded 3 Resource Paper Awards (ResourceAward) and Social Impact Award (

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

Sep 03, 2024 pm 05:18 PM

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

Sep 03, 2024 pm 05:18 PM

Deep integration of vision and robot learning. When two robot hands work together smoothly to fold clothes, pour tea, and pack shoes, coupled with the 1X humanoid robot NEO that has been making headlines recently, you may have a feeling: we seem to be entering the age of robots. In fact, these silky movements are the product of advanced robotic technology + exquisite frame design + multi-modal large models. We know that useful robots often require complex and exquisite interactions with the environment, and the environment can be represented as constraints in the spatial and temporal domains. For example, if you want a robot to pour tea, the robot first needs to grasp the handle of the teapot and keep it upright without spilling the tea, then move it smoothly until the mouth of the pot is aligned with the mouth of the cup, and then tilt the teapot at a certain angle. . this

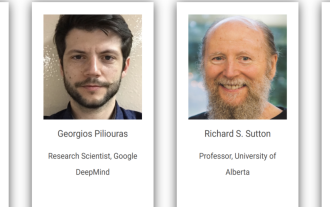

Distributed Artificial Intelligence Conference DAI 2024 Call for Papers: Agent Day, Richard Sutton, the father of reinforcement learning, will attend! Yan Shuicheng, Sergey Levine and DeepMind scientists will give keynote speeches

Aug 22, 2024 pm 08:02 PM

Distributed Artificial Intelligence Conference DAI 2024 Call for Papers: Agent Day, Richard Sutton, the father of reinforcement learning, will attend! Yan Shuicheng, Sergey Levine and DeepMind scientists will give keynote speeches

Aug 22, 2024 pm 08:02 PM

Conference Introduction With the rapid development of science and technology, artificial intelligence has become an important force in promoting social progress. In this era, we are fortunate to witness and participate in the innovation and application of Distributed Artificial Intelligence (DAI). Distributed artificial intelligence is an important branch of the field of artificial intelligence, which has attracted more and more attention in recent years. Agents based on large language models (LLM) have suddenly emerged. By combining the powerful language understanding and generation capabilities of large models, they have shown great potential in natural language interaction, knowledge reasoning, task planning, etc. AIAgent is taking over the big language model and has become a hot topic in the current AI circle. Au

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Aug 08, 2024 am 07:02 AM

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Aug 08, 2024 am 07:02 AM

This afternoon, Hongmeng Zhixing officially welcomed new brands and new cars. On August 6, Huawei held the Hongmeng Smart Xingxing S9 and Huawei full-scenario new product launch conference, bringing the panoramic smart flagship sedan Xiangjie S9, the new M7Pro and Huawei novaFlip, MatePad Pro 12.2 inches, the new MatePad Air, Huawei Bisheng With many new all-scenario smart products including the laser printer X1 series, FreeBuds6i, WATCHFIT3 and smart screen S5Pro, from smart travel, smart office to smart wear, Huawei continues to build a full-scenario smart ecosystem to bring consumers a smart experience of the Internet of Everything. Hongmeng Zhixing: In-depth empowerment to promote the upgrading of the smart car industry Huawei joins hands with Chinese automotive industry partners to provide