The AIxiv column is a column where this site publishes academic and technical content. In the past few years, the AIxiv column of this site has received more than 2,000 reports, covering top laboratories from major universities and companies around the world, effectively promoting academic exchanges and dissemination. If you have excellent work that you want to share, please feel free to contribute or contact us for reporting. Submission email: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com

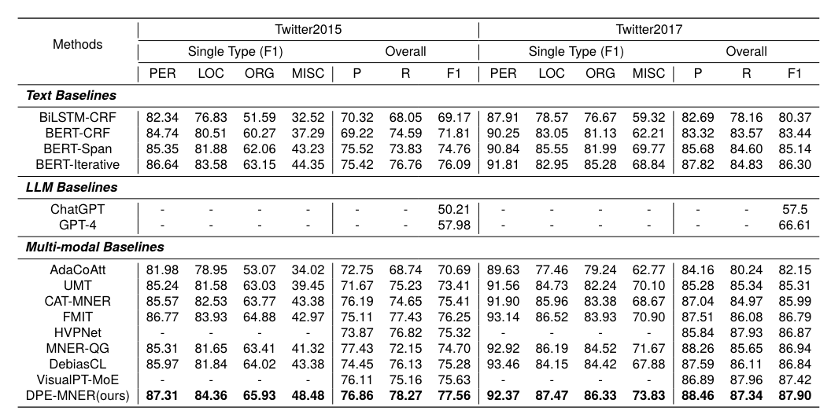

The author team of this article comes from the Social Computing and Information Retrieval Research Center of Harbin Institute of Technology. The author team consists of: Zheng Zihao, Zhang Zihan, Wang Zexin, Fu Rui Ji, Liu Ming, Wang Zhongyuan, Qin Bing. Multimodal representationMultimodal named entity recognition, as a basic and key task in building multimodal knowledge graphs, requires researchers to integrate multiple modal information to accurately Extract named entities from text. Although previous research has explored integration methods of multi-modal representations at different levels, they are still insufficient in fusing these multi-modal representations to provide rich contextual information and thereby improve the performance of multi-modal named entity recognition. . In this paper, the research team proposes DPE-MNER, an innovative iterative reasoning framework that follows the "decompose, prioritize, eliminate" strategy and dynamically integrates diverse multi-modal representations. This framework cleverly decomposes the fusion of multimodal representations into hierarchical and interconnected fusion layers, greatly simplifying the processing process. When integrating multimodal information, the team placed special emphasis on progressive transitions from "simple to complex" and "macro to micro." In addition, by explicitly modeling cross-modal correlations, the research team effectively excludes irrelevant information that may mislead MNER predictions. Through extensive experiments on two public datasets, the research team's method has been proven to be significantly effective in improving the accuracy and efficiency of multi-modal named entity recognition. This article is one of the ten best paper candidates among 1558 accepted papers for LREC-COLING 2024.

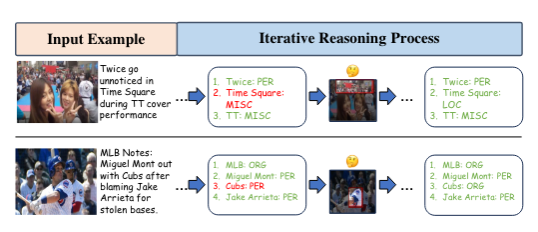

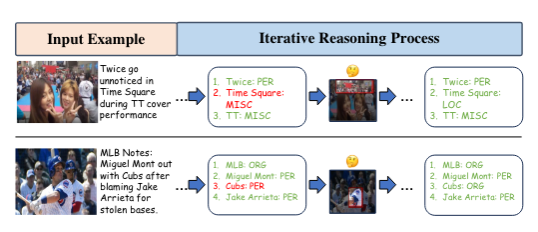

An example of multi-modal named entity recognition. The research team demonstrated a variety of multimodal representations that may be useful for named entity recognition decisions. Humans typically process this information mentally in an iterative manner. To address this problem, the research team drew inspiration from the field of Complex Problem Solving (Sternberg and Frensch, 1992). This field focuses on the study of methods and strategies used by humans and computers to solve problems involving multiple variables, uncertainty, and high complexity. First, they believe that when faced with complex problems, humans generally adopt an iterative approach. As shown in the figure, the research team actually uses an iterative process when dealing with MNER. Second, humans use specific strategies to simplify these problems, such as decomposing, prioritizing, and eliminating irrelevant factors. The research team believes that treating multi-modal named entity recognition (MNER) as an iterative process of integrating multi-modal information and using these strategies is very suitable for MNER tasks. Compared with single-step methods, multi-step methods can more comprehensively exploit diverse multi-modal representations in the process of iteratively optimizing named entity recognition (NER) results. In addition, these three strategies are very suitable for the integration of multiple representations in multi-modal NER:

- The decomposition strategy encourages us to split the fusion of multi-modal representations into smaller, more Easily tractable units capable of exploring multimodal interactions at different levels of granularity.

- The prioritization strategy recommends integrating multi-modal information according to the order of "easy to difficult" and "coarse to fine"; this progressive integration contributes to the step-by-step optimization of MNER predictions. This enables the model to gradually shift attention from simple but coarse information to complex but precise details.

- The irrelevance elimination strategy inspires us to explicitly screen and exclude irrelevant information in different multi-modal representations; this can eliminate irrelevant information that may affect MNER performance.

The research team designed an iterative multi-modal entity extraction framework that dynamically fuses multiple multi-modal features, which includes an iterative process and a prediction network.

The research team followed the diffusion model to model object recognition, visual alignment and text entity extraction as an iterative denoising process, and also used the diffusion model to combine multi-modal entities Extraction is modeled as an iterative process. The model first randomly initializes a series of entity intervals  , and uses a prediction network to encode multi-modal features to iteratively denoise during the denoising process to obtain the correct entity intervals

, and uses a prediction network to encode multi-modal features to iteratively denoise during the denoising process to obtain the correct entity intervals  in the text. As shown in the figure, the research team obtained a total of three granular representations in the text

in the text. As shown in the figure, the research team obtained a total of three granular representations in the text  , two granularities and two difficulties in the picture (they believe that aligned Representations are simple representations, misaligned representations are difficult representations)

, two granularities and two difficulties in the picture (they believe that aligned Representations are simple representations, misaligned representations are difficult representations)  . The team's prediction network AMRN includes an encoding network (DMMF) and a decoding network (MER). The design of the prediction network is based on the three strategies mentioned earlier. As shown in the figure, the encoding network is a hierarchical fusion network that fuses and decomposes multiple multi-modal features into a hierarchical process. The bottom-up process is to first integrate the image features of the same granularity and different difficulty into the text features $x_i$ of each granularity, then integrate the image features $Y$ of different granularities into the text features of each granularity, and finally integrate the different granularity features $Y$ into the text features

. The team's prediction network AMRN includes an encoding network (DMMF) and a decoding network (MER). The design of the prediction network is based on the three strategies mentioned earlier. As shown in the figure, the encoding network is a hierarchical fusion network that fuses and decomposes multiple multi-modal features into a hierarchical process. The bottom-up process is to first integrate the image features of the same granularity and different difficulty into the text features $x_i$ of each granularity, then integrate the image features $Y$ of different granularities into the text features of each granularity, and finally integrate the different granularity features $Y$ into the text features  of each granularity. The image features

of each granularity. The image features  Y and text features X are fused to obtain the final multi-modal representation. Input to the decoding network for decoding, and the decoding network obtains new intervals and the entity type of each interval.

Y and text features X are fused to obtain the final multi-modal representation. Input to the decoding network for decoding, and the decoding network obtains new intervals and the entity type of each interval. Underlying fusion. The research team at this level integrates image features of a certain granularity into text features of a certain granularity. According to the diffusion process, the research team can obtain a scheduler that can reflect the status of the current iteration, which is also the key to introducing

priority. Based on this scheduler, the research team fused image features of different difficulties together to obtain and  correlation

correlation  rel, which is used to eliminate irrelevant information. Finally, a bottleneck transformer is used based on this correlation to fuse and

rel, which is used to eliminate irrelevant information. Finally, a bottleneck transformer is used based on this correlation to fuse and  , and a multi-modal image and text fusion representation

, and a multi-modal image and text fusion representation  of a certain granularity is obtained.

of a certain granularity is obtained.

Mid layer fusion.The research team at this layer fuses image features of different granularities into text features of a certain granularity, that is, fusion

. At this layer, we use a scheduler to dynamically fuse image features of different granularities to obtain a multi-modal text representation of a certain granularity

.

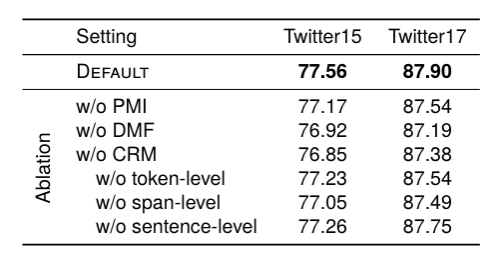

Top fusion. The research team at this layer fuses multi-modal text representation  of different granularities into the interval representation to obtain a total multi-modal text representation

of different granularities into the interval representation to obtain a total multi-modal text representation  , which is input into the decoding network for prediction. The author team compared some typical methods of MNER. Experimental results show that this method achieves the best performance on two commonly used data sets. The researchers removed the prioritization, hierarchical, and elimination designs in our paper to observe the model performance. The results show that removing each design brings performance degradation. Comparison with static feature fusion methodsThey compared some typical static multi-modal fusion methods, such as max pooling, average pooling, MLP-based and MoE-based methods, The results show that their proposed dynamic fusion framework can achieve the best performance.

, which is input into the decoding network for prediction. The author team compared some typical methods of MNER. Experimental results show that this method achieves the best performance on two commonly used data sets. The researchers removed the prioritization, hierarchical, and elimination designs in our paper to observe the model performance. The results show that removing each design brings performance degradation. Comparison with static feature fusion methodsThey compared some typical static multi-modal fusion methods, such as max pooling, average pooling, MLP-based and MoE-based methods, The results show that their proposed dynamic fusion framework can achieve the best performance.

The research team selected two representative samples to illustrate the iterative process. It can be seen that in the first iteration step, the types of time square and cubes were incorrectly predicted; however, based on the important feature clues in the picture, it was iteratively corrected to the correct entity type.  This paper aims to fully utilize the potential of various multi-modal representations in the field of multi-modal named entity recognition (MNER), in order to obtain excellent recognition results. To this end, the authors designed and proposed an innovative iterative reasoning framework—DPE-MNER. DPE-MNER cleverly simplifies the integration process of these rich and diverse multi-modal representations by decomposing the MNER task into multiple stages. In this iterative process, multimodal representations achieve dynamic fusion and integration based on the strategy of “decomposition, prioritization, and elimination.” Through a series of rigorous experimental verifications, the research team fully demonstrated the remarkable effects and superior performance of the DPE-MNER framework. [1] Knowledge Graphs Meet Multi-Modal Learning: Comprehensive Survey, arxiv[2] Decompose, Prioritize, and Eliminate: Dynamically In tegrating Diverse Representations for Multi-modal Named Entity Recognition,2024,Joint International Conference on Computational Linguistics, Language Resources and Evaluation[3] Complex problem solving : Principles and mechanisms,1992, American Journal of Psycholog [4] DiffusionNER: Boundary Diffusion for Named Entity Recognition, ACL23 [5] DiffusionDet: Diffusion Model for Object Detection, ICCV23[6] Language-Guided Diffusion Model for Visual Grounding , arxiv23

This paper aims to fully utilize the potential of various multi-modal representations in the field of multi-modal named entity recognition (MNER), in order to obtain excellent recognition results. To this end, the authors designed and proposed an innovative iterative reasoning framework—DPE-MNER. DPE-MNER cleverly simplifies the integration process of these rich and diverse multi-modal representations by decomposing the MNER task into multiple stages. In this iterative process, multimodal representations achieve dynamic fusion and integration based on the strategy of “decomposition, prioritization, and elimination.” Through a series of rigorous experimental verifications, the research team fully demonstrated the remarkable effects and superior performance of the DPE-MNER framework. [1] Knowledge Graphs Meet Multi-Modal Learning: Comprehensive Survey, arxiv[2] Decompose, Prioritize, and Eliminate: Dynamically In tegrating Diverse Representations for Multi-modal Named Entity Recognition,2024,Joint International Conference on Computational Linguistics, Language Resources and Evaluation[3] Complex problem solving : Principles and mechanisms,1992, American Journal of Psycholog [4] DiffusionNER: Boundary Diffusion for Named Entity Recognition, ACL23 [5] DiffusionDet: Diffusion Model for Object Detection, ICCV23[6] Language-Guided Diffusion Model for Visual Grounding , arxiv23The above is the detailed content of Harbin Institute of Technology proposes an innovative iterative reasoning framework DPE-MNER: giving full play to the potential of multi-modal representation. For more information, please follow other related articles on the PHP Chinese website!

, and uses a prediction network to encode multi-modal features to iteratively denoise during the denoising process to obtain the correct entity intervals

, and uses a prediction network to encode multi-modal features to iteratively denoise during the denoising process to obtain the correct entity intervals  in the text.

in the text.  , two granularities and two difficulties in the picture (they believe that aligned Representations are simple representations, misaligned representations are difficult representations)

, two granularities and two difficulties in the picture (they believe that aligned Representations are simple representations, misaligned representations are difficult representations)  . The team's prediction network AMRN includes an encoding network (DMMF) and a decoding network (MER). The design of the prediction network is based on the three strategies mentioned earlier.

. The team's prediction network AMRN includes an encoding network (DMMF) and a decoding network (MER). The design of the prediction network is based on the three strategies mentioned earlier.  of each granularity. The image features

of each granularity. The image features  Y and text features X are fused to obtain the final multi-modal representation. Input to the decoding network for decoding, and the decoding network obtains new intervals and the entity type of each interval.

Y and text features X are fused to obtain the final multi-modal representation. Input to the decoding network for decoding, and the decoding network obtains new intervals and the entity type of each interval.  correlation

correlation  rel, which is used to eliminate irrelevant information. Finally, a bottleneck transformer is used based on this correlation to fuse and

rel, which is used to eliminate irrelevant information. Finally, a bottleneck transformer is used based on this correlation to fuse and  , and a multi-modal image and text fusion representation

, and a multi-modal image and text fusion representation  of a certain granularity is obtained.

of a certain granularity is obtained.

. At this layer, we use a scheduler to dynamically fuse image features of different granularities to obtain a multi-modal text representation of a certain granularity

. At this layer, we use a scheduler to dynamically fuse image features of different granularities to obtain a multi-modal text representation of a certain granularity .

.  of different granularities into the interval representation to obtain a total multi-modal text representation

of different granularities into the interval representation to obtain a total multi-modal text representation  , which is input into the decoding network for prediction.

, which is input into the decoding network for prediction.