Peking University launches new multi-modal robot model! Efficient reasoning and operations for general and robotic scenarios

The AIxiv column is where this site publishes academic and technical content. Over the past few years, the AIxiv column has published more than 2,000 reports covering top laboratories at major universities and companies around the world, helping to promote academic exchange and dissemination. If you have excellent work to share, you are welcome to submit it or contact us for coverage. Submission email: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com

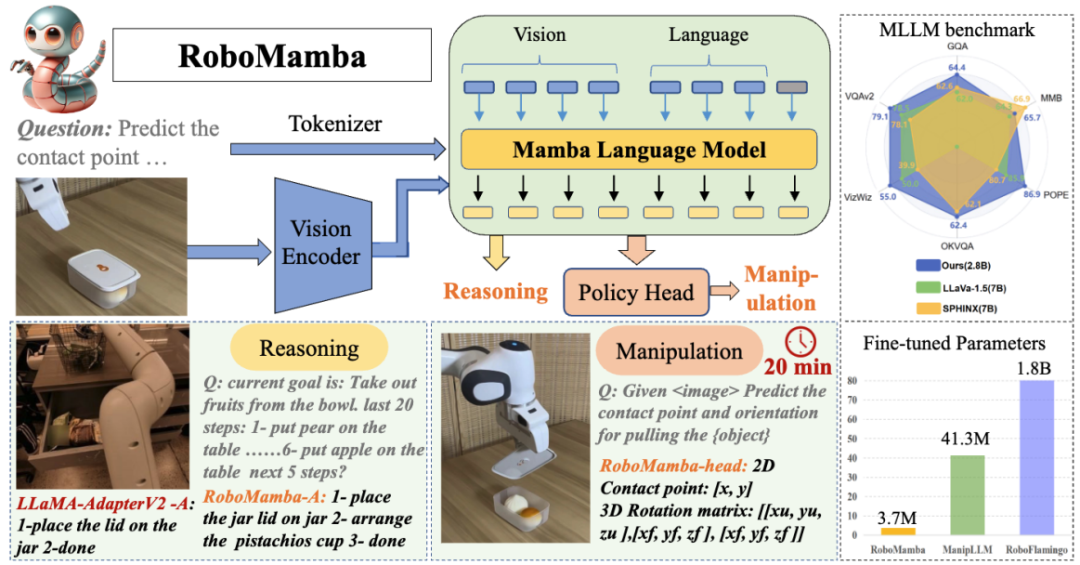

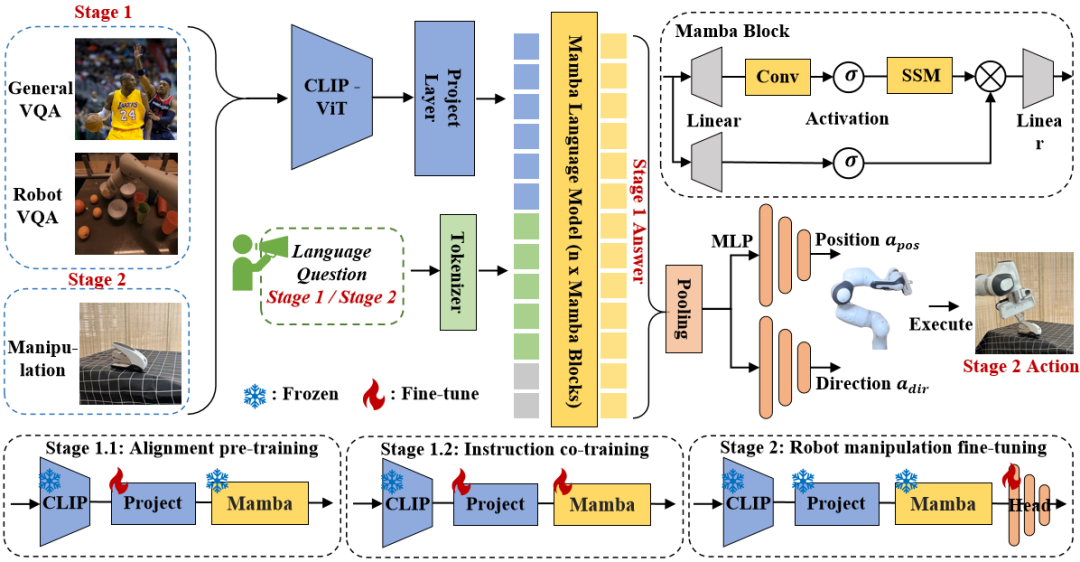

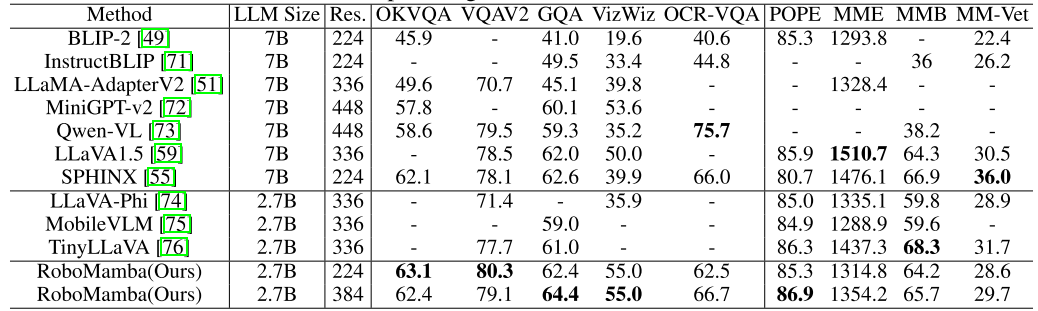

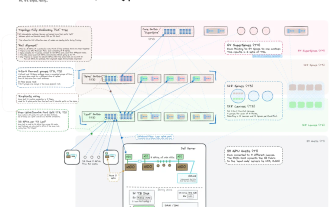

To give robots end-to-end reasoning and manipulation capabilities, this work integrates a visual encoder with an efficient state space language model to build RoboMamba, a new multimodal large model capable of visual common-sense reasoning and robot-related reasoning, and achieves advanced performance on these tasks. The work also finds that once RoboMamba has strong reasoning capabilities, it can acquire multiple manipulation pose prediction skills at extremely low training cost.

Paper: RoboMamba: Multimodal State Space Model for Efficient Robot Reasoning and Manipulation

Paper link: https://arxiv.org/abs/2406.04339

Project homepage: https://sites.google.com/view/robomamba-web

Github: https://github.com/lmzpai/roboMamba

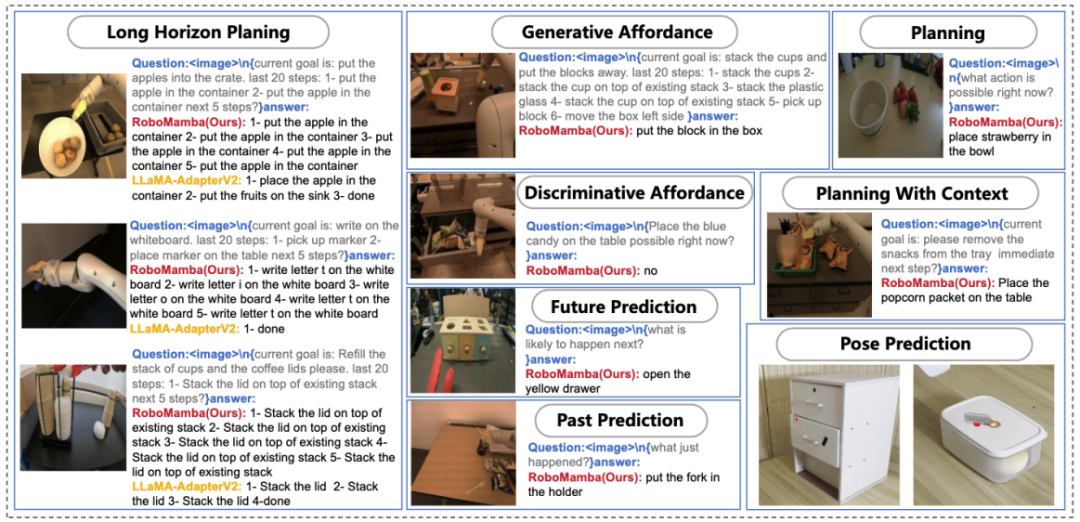

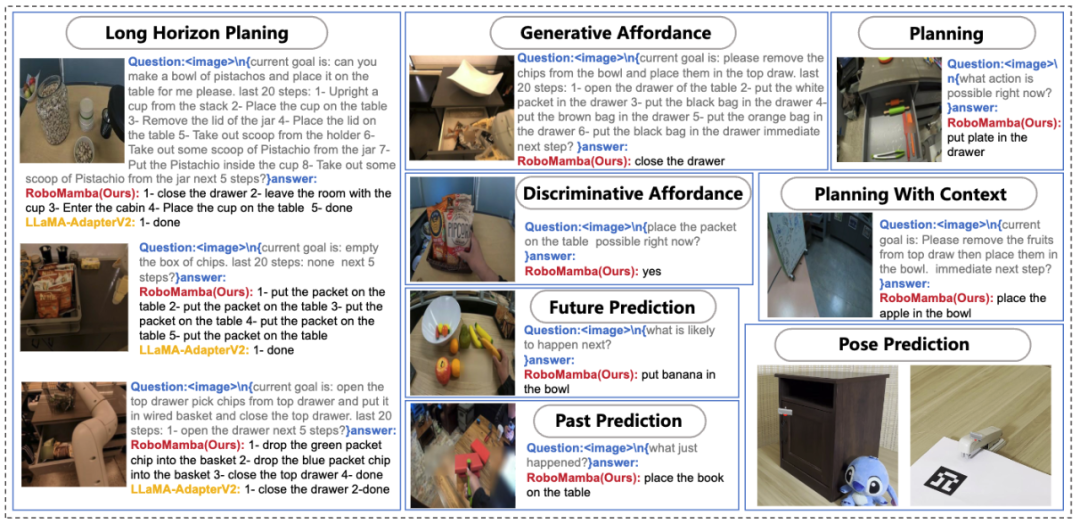

We integrate a visual encoder with the efficient Mamba language model to build RoboMamba, a new end-to-end robotic multimodal large model with visual common sense and comprehensive robot-related reasoning capabilities. To equip RoboMamba with end-effector manipulation pose prediction, we explore an efficient fine-tuning strategy that uses a simple policy head. We find that once RoboMamba has sufficient reasoning capability, it can master manipulation pose prediction at very low cost. In extensive experiments, RoboMamba performs well on general and robotic reasoning benchmarks and achieves impressive pose prediction results in both simulation and real-world experiments.

Problem statement

and language questions

and language questions  The answer is

The answer is  , expressed as

, expressed as  .Reasoning answers often contain separate subtasks

.Reasoning answers often contain separate subtasks  for a question

for a question  . For example, when faced with a planning problem such as "How to clear the table?" responses typically include steps such as "Step 1: Pick up the object" and "Step 2: Put the object into the box." For action prediction, we utilize an efficient and simple policy head π to predict actions

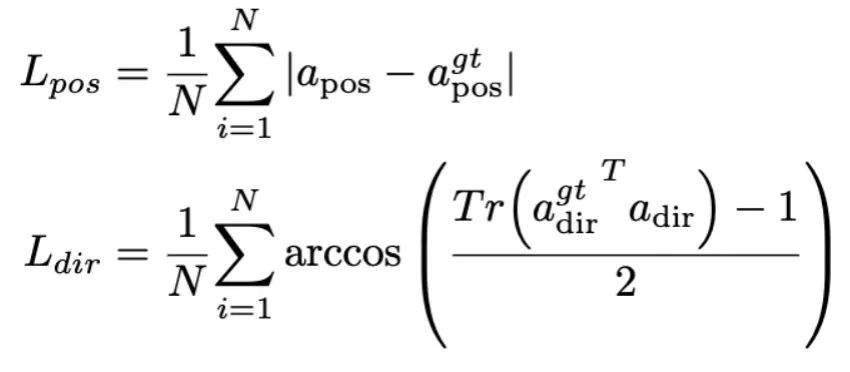

. For example, when faced with a planning problem such as "How to clear the table?" responses typically include steps such as "Step 1: Pick up the object" and "Step 2: Put the object into the box." For action prediction, we utilize an efficient and simple policy head π to predict actions  . Following previous work, we use 6-DoF to express the end-effector pose of the Franka Emika Panda robotic arm. The 6 degrees of freedom include the end effector position

. Following previous work, we use 6-DoF to express the end-effector pose of the Franka Emika Panda robotic arm. The 6 degrees of freedom include the end effector position  representing the three-dimensional coordinates and the direction

representing the three-dimensional coordinates and the direction  representing the rotation matrix. If training on a grasping task, we add the gripper state to the pose prediction, enabling 7-DoF control.
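As a minimal sketch of the action target described above, the hypothetical `EndEffectorPose` container below stores the position $a_{pos}$, the rotation matrix $a_{dir}$, and an optional gripper state, and checks that the rotation matrix is a proper rotation (orthonormal with determinant +1). The class name, fields, gripper encoding, and tolerance are illustrative assumptions, not RoboMamba's code.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class EndEffectorPose:
    """Hypothetical container for the end-effector pose target."""
    position: Sequence[float]               # a_pos: 3D coordinate (x, y, z)
    rotation: Sequence[Sequence[float]]     # a_dir: 3x3 rotation matrix
    gripper: Optional[float] = None         # gripper state (encoding assumed), grasping only

    @property
    def dof(self) -> int:
        # 3 translational + 3 rotational DoF, plus 1 when a gripper state is present
        return 6 if self.gripper is None else 7

    def is_valid(self, tol: float = 1e-6) -> bool:
        """Check shapes and that `rotation` is a proper rotation matrix."""
        r = self.rotation
        if len(self.position) != 3 or len(r) != 3 or any(len(row) != 3 for row in r):
            return False
        # R^T R must equal the identity (orthonormal columns)
        for i in range(3):
            for j in range(3):
                dot = sum(r[k][i] * r[k][j] for k in range(3))
                if abs(dot - (1.0 if i == j else 0.0)) > tol:
                    return False
        # det(R) must be +1 (a rotation, not a reflection)
        det = (r[0][0] * (r[1][1] * r[2][2] - r[1][2] * r[2][1])
               - r[0][1] * (r[1][0] * r[2][2] - r[1][2] * r[2][0])
               + r[0][2] * (r[1][0] * r[2][1] - r[1][1] * r[2][0]))
        return abs(det - 1.0) <= tol


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
pose = EndEffectorPose(position=[0.4, 0.0, 0.3], rotation=identity)                # 6-DoF
grasp = EndEffectorPose(position=[0.4, 0.0, 0.3], rotation=identity, gripper=1.0)  # 7-DoF
print(pose.dof, grasp.dof, pose.is_valid())  # 6 7 True
```

Storing the direction as a full 3×3 matrix keeps the representation unambiguous, but a predicted matrix is not guaranteed to be orthonormal, which is why a validity check of this kind is useful when consuming policy-head outputs.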

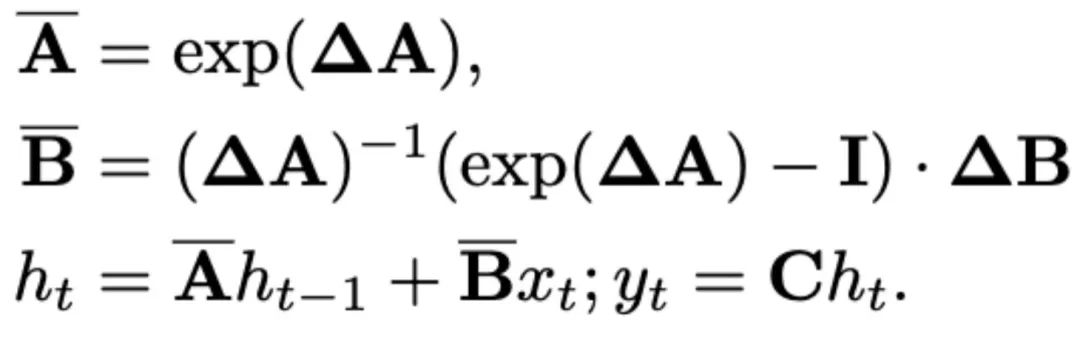

State Space Model (SSM)

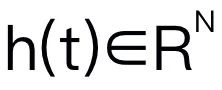

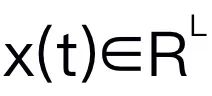

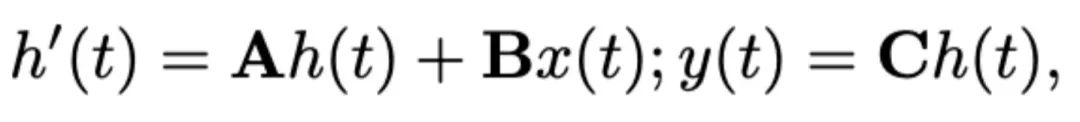

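The SSM maps a 1D input sequence $x(t) \in \mathbb{R}$ to a 1D output sequence $y(t) \in \mathbb{R}$ through hidden states $h(t) \in \mathbb{R}^N$. An SSM consists of three key parameters: the state matrix $A \in \mathbb{R}^{N \times N}$, the input matrix $B \in \mathbb{R}^{N \times 1}$, and the output matrix $C \in \mathbb{R}^{1 \times N}$. The SSM can be expressed as:

$$h'(t) = A h(t) + B x(t), \qquad y(t) = C h(t).$$

To apply the SSM to discrete sequences, a timescale parameter $\Delta$ converts the continuous parameters $A$ and $B$ into discrete parameters $\bar{A}$ and $\bar{B}$. The discretization adopts the zero-order hold (ZOH) method, which is defined as follows:

$$\bar{A} = \exp(\Delta A), \qquad \bar{B} = (\Delta A)^{-1}\left(\exp(\Delta A) - I\right) \cdot \Delta B,$$

$$h_t = \bar{A} h_{t-1} + \bar{B} x_t, \qquad y_t = C h_t.$$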
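The discretized recurrence can be sketched in plain Python. This sketch assumes a diagonal state matrix $A$ (as in S4D-style and Mamba SSMs), so $\exp(\Delta A)$ reduces to an elementwise exponential and $\bar{B}$ to $(e^{\Delta a_i} - 1)/a_i \cdot b_i$ for each state entry. The function names are hypothetical, and optimized implementations use a hardware-aware parallel scan rather than a Python loop.

```python
import math
from typing import List, Sequence, Tuple


def zoh_discretize(a: float, b: float, delta: float) -> Tuple[float, float]:
    """Zero-order hold for one diagonal state entry (requires a != 0).

    A_bar = exp(delta * a)
    B_bar = (delta * a)^-1 * (exp(delta * a) - 1) * delta * b = (exp(delta * a) - 1) / a * b
    """
    a_bar = math.exp(delta * a)
    b_bar = (a_bar - 1.0) / a * b
    return a_bar, b_bar


def ssm_scan(x: Sequence[float], a: Sequence[float], b: Sequence[float],
             c: Sequence[float], delta: float) -> List[float]:
    """Run h_t = A_bar h_{t-1} + B_bar x_t and y_t = C h_t over a 1D sequence."""
    disc = [zoh_discretize(a_i, b_i, delta) for a_i, b_i in zip(a, b)]
    h = [0.0] * len(a)  # initial hidden state h_0 = 0
    ys = []
    for x_t in x:
        h = [a_bar * h_i + b_bar * x_t for (a_bar, b_bar), h_i in zip(disc, h)]
        ys.append(sum(c_i * h_i for c_i, h_i in zip(c, h)))
    return ys


# Step response: with a = [-1, -2] and b = c = [1, 1], the output settles at
# sum(-c_i * b_i / a_i) = 1 + 0.5 = 1.5, the continuous-time steady state.
y = ssm_scan([1.0] * 200, a=[-1.0, -2.0], b=[1.0, 1.0], c=[1.0, 1.0], delta=0.1)
print(round(y[-1], 4))  # 1.5
```

The step response illustrates one property of ZOH: the discrete fixed point $\bar{B}x / (1 - \bar{A})$ equals the continuous steady state $-b\,x/a$ for every state entry, independent of $\Delta$.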

Mamba further introduces a selective scan mechanism (S6), in which $B$, $C$, and $\Delta$ are computed from the input, for better content-aware reasoning. The details of the Mamba block are shown in Figure 3 below.
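To illustrate what "selective" means, the sketch below makes $\Delta_t$, $B_t$, and $C_t$ functions of the current input $x_t$, so the state update changes from token to token. It is a simplified single-channel toy under stated assumptions: the weights `w_delta`, `b_delta`, `w_b`, and `w_c` are hypothetical scalar projections, $A$ is diagonal, and the softplus on $\Delta_t$ follows the Mamba paper's parameterization.

```python
import math
from typing import List, Sequence


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z)); keeps the step size positive."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_scan(x: Sequence[float], a: Sequence[float], w_delta: float, b_delta: float,
                   w_b: Sequence[float], w_c: Sequence[float]) -> List[float]:
    """Toy single-channel selective SSM: delta_t, B_t and C_t depend on x_t."""
    h = [0.0] * len(a)
    ys = []
    for x_t in x:
        delta_t = softplus(w_delta * x_t + b_delta)  # input-dependent timescale
        b_t = [wb * x_t for wb in w_b]               # input-dependent B_t
        c_t = [wc * x_t for wc in w_c]               # input-dependent C_t
        new_h = []
        for a_i, h_i, b_i in zip(a, h, b_t):
            a_bar = math.exp(delta_t * a_i)          # ZOH: A_bar = exp(delta_t * a_i)
            b_bar = (a_bar - 1.0) / a_i * b_i        # ZOH B_bar for a diagonal entry
            new_h.append(a_bar * h_i + b_bar * x_t)
        h = new_h
        ys.append(sum(c_i * h_i for c_i, h_i in zip(c_t, h)))
    return ys


y = selective_scan([0.5, -1.0, 2.0, 0.0], a=[-1.0, -0.5], w_delta=1.0, b_delta=0.0,
                   w_b=[1.0, 0.5], w_c=[1.0, -1.0])
print(len(y), y[-1] == 0.0)  # 4 True
```

Because $C_t$ is proportional to $x_t$ in this toy, a zero input token produces a zero output at that step regardless of what the state holds; in the full model the projections act on the whole multi-channel input vector.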

from the input image I, where B and N represent the batch size and the number of tokens respectively. Unlike recent MLLMs, we do not employ visual encoder ensemble techniques, which use multiple backbone networks (i.e., DINOv2, CLIP-ConvNeXt, CLIP-ViT) for image feature extraction. Integration introduces additional computational costs, severely affecting the practicality of robotic MLLM in the real world. Therefore, we demonstrate that simple and straightforward model design can also achieve powerful inference capabilities when high-quality data and appropriate training strategies are combined. To make the LLM understand visual features, we use a multilayer perceptron (MLP) to connect the visual encoder to the LLM. With this simple cross-modal connector, RoboMamba can transform visual information into a language embedding space

from the input image I, where B and N represent the batch size and the number of tokens respectively. Unlike recent MLLMs, we do not employ visual encoder ensemble techniques, which use multiple backbone networks (i.e., DINOv2, CLIP-ConvNeXt, CLIP-ViT) for image feature extraction. Integration introduces additional computational costs, severely affecting the practicality of robotic MLLM in the real world. Therefore, we demonstrate that simple and straightforward model design can also achieve powerful inference capabilities when high-quality data and appropriate training strategies are combined. To make the LLM understand visual features, we use a multilayer perceptron (MLP) to connect the visual encoder to the LLM. With this simple cross-modal connector, RoboMamba can transform visual information into a language embedding space  .

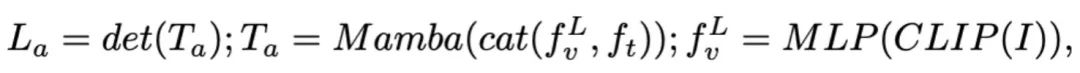

.  using a pretrained tokenizer, then concatenated (cat) with visual tokens and fed into Mamba. We leverage Mamba's powerful sequence modeling to understand multimodal information and use effective training strategies to develop visual reasoning capabilities (as described in the next section). The output token (

using a pretrained tokenizer, then concatenated (cat) with visual tokens and fed into Mamba. We leverage Mamba's powerful sequence modeling to understand multimodal information and use effective training strategies to develop visual reasoning capabilities (as described in the next section). The output token ( ) is then decoded (det) to generate a natural language response

) is then decoded (det) to generate a natural language response  . The forward process of the model can be expressed as follows:

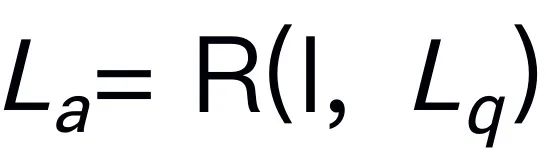

. The forward process of the model can be expressed as follows:
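The data flow of the forward process can be sketched with toy dimensions. In this hypothetical example, random lists stand in for the visual-encoder features and the embedded question tokens, the connector is assumed to be a bias-free two-layer MLP with GELU (the text only says "MLP"), the batch dimension is dropped, and the Mamba backbone and detokenizer are left out. The point is only that projected visual tokens and text tokens share one embedding width and are concatenated along the token axis.

```python
import math
import random
from typing import List

Tokens = List[List[float]]  # one batch element: a list of token feature vectors


def linear(tokens: Tokens, weight: List[List[float]]) -> Tokens:
    """Bias-free linear layer; weight has shape (in_dim, out_dim)."""
    return [[sum(t[i] * weight[i][j] for i in range(len(t))) for j in range(len(weight[0]))]
            for t in tokens]


def gelu(v: float) -> float:
    """Exact GELU activation."""
    return 0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0)))


def mlp_connector(f_v: Tokens, w1: List[List[float]], w2: List[List[float]]) -> Tokens:
    """Assumed two-layer MLP mapping visual features into the LLM embedding width."""
    hidden = [[gelu(v) for v in t] for t in linear(f_v, w1)]
    return linear(hidden, w2)


def backbone_input(f_v: Tokens, f_t: Tokens,
                   w1: List[List[float]], w2: List[List[float]]) -> Tokens:
    """cat(MLP(f_v), f_t): the sequence that would be fed into the Mamba backbone."""
    return mlp_connector(f_v, w1, w2) + f_t  # concatenate along the token axis


def rand_matrix(rows: int, cols: int) -> List[List[float]]:
    return [[random.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
VIS_DIM, MODEL_DIM = 8, 4        # toy stand-ins for the real feature widths
f_v = rand_matrix(3, VIS_DIM)    # 3 visual tokens from a (hypothetical) encoder
f_t = rand_matrix(2, MODEL_DIM)  # 2 embedded question tokens
w1, w2 = rand_matrix(VIS_DIM, MODEL_DIM), rand_matrix(MODEL_DIM, MODEL_DIM)
seq = backbone_input(f_v, f_t, w1, w2)
print(len(seq), len(seq[0]))  # 5 4
```

Keeping a single encoder and a small connector means the only cross-modal machinery is this projection, which is consistent with the text's argument that avoiding encoder ensembles reduces computational cost.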

Stage 1.1: Alignment pre-training.

Stage 1.2: Instruction co-training.

3. Robot Manipulation Ability Evaluation (SAPIEN)

3. Robot Manipulation Ability Evaluation (SAPIEN)

The above is the detailed content of Peking University launches new multi-modal robot model! Efficient reasoning and operations for general and robotic scenarios. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1664

1664

14

14

1423

1423

52

52

1317

1317

25

25

1268

1268

29

29

1242

1242

24

24

A Diffusion Model Tutorial Worth Your Time, from Purdue University

Apr 07, 2024 am 09:01 AM

A Diffusion Model Tutorial Worth Your Time, from Purdue University

Apr 07, 2024 am 09:01 AM

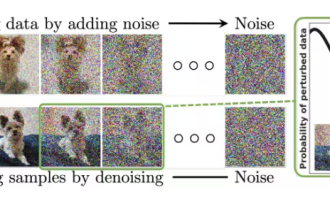

Diffusion can not only imitate better, but also "create". The diffusion model (DiffusionModel) is an image generation model. Compared with the well-known algorithms such as GAN and VAE in the field of AI, the diffusion model takes a different approach. Its main idea is a process of first adding noise to the image and then gradually denoising it. How to denoise and restore the original image is the core part of the algorithm. The final algorithm is able to generate an image from a random noisy image. In recent years, the phenomenal growth of generative AI has enabled many exciting applications in text-to-image generation, video generation, and more. The basic principle behind these generative tools is the concept of diffusion, a special sampling mechanism that overcomes the limitations of previous methods.

Generate PPT with one click! Kimi: Let the 'PPT migrant workers' become popular first

Aug 01, 2024 pm 03:28 PM

Generate PPT with one click! Kimi: Let the 'PPT migrant workers' become popular first

Aug 01, 2024 pm 03:28 PM

Kimi: In just one sentence, in just ten seconds, a PPT will be ready. PPT is so annoying! To hold a meeting, you need to have a PPT; to write a weekly report, you need to have a PPT; to make an investment, you need to show a PPT; even when you accuse someone of cheating, you have to send a PPT. College is more like studying a PPT major. You watch PPT in class and do PPT after class. Perhaps, when Dennis Austin invented PPT 37 years ago, he did not expect that one day PPT would become so widespread. Talking about our hard experience of making PPT brings tears to our eyes. "It took three months to make a PPT of more than 20 pages, and I revised it dozens of times. I felt like vomiting when I saw the PPT." "At my peak, I did five PPTs a day, and even my breathing was PPT." If you have an impromptu meeting, you should do it

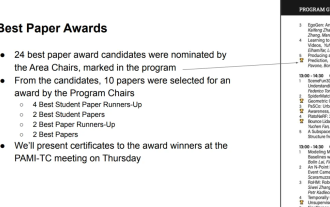

All CVPR 2024 awards announced! Nearly 10,000 people attended the conference offline, and a Chinese researcher from Google won the best paper award

Jun 20, 2024 pm 05:43 PM

All CVPR 2024 awards announced! Nearly 10,000 people attended the conference offline, and a Chinese researcher from Google won the best paper award

Jun 20, 2024 pm 05:43 PM

In the early morning of June 20th, Beijing time, CVPR2024, the top international computer vision conference held in Seattle, officially announced the best paper and other awards. This year, a total of 10 papers won awards, including 2 best papers and 2 best student papers. In addition, there were 2 best paper nominations and 4 best student paper nominations. The top conference in the field of computer vision (CV) is CVPR, which attracts a large number of research institutions and universities every year. According to statistics, a total of 11,532 papers were submitted this year, and 2,719 were accepted, with an acceptance rate of 23.6%. According to Georgia Institute of Technology’s statistical analysis of CVPR2024 data, from the perspective of research topics, the largest number of papers is image and video synthesis and generation (Imageandvideosyn

From bare metal to a large model with 70 billion parameters, here is a tutorial and ready-to-use scripts

Jul 24, 2024 pm 08:13 PM

From bare metal to a large model with 70 billion parameters, here is a tutorial and ready-to-use scripts

Jul 24, 2024 pm 08:13 PM

We know that LLM is trained on large-scale computer clusters using massive data. This site has introduced many methods and technologies used to assist and improve the LLM training process. Today, what we want to share is an article that goes deep into the underlying technology and introduces how to turn a bunch of "bare metals" without even an operating system into a computer cluster for training LLM. This article comes from Imbue, an AI startup that strives to achieve general intelligence by understanding how machines think. Of course, turning a bunch of "bare metal" without an operating system into a computer cluster for training LLM is not an easy process, full of exploration and trial and error, but Imbue finally successfully trained an LLM with 70 billion parameters. and in the process accumulate

PyCharm Community Edition Installation Guide: Quickly master all the steps

Jan 27, 2024 am 09:10 AM

PyCharm Community Edition Installation Guide: Quickly master all the steps

Jan 27, 2024 am 09:10 AM

Quick Start with PyCharm Community Edition: Detailed Installation Tutorial Full Analysis Introduction: PyCharm is a powerful Python integrated development environment (IDE) that provides a comprehensive set of tools to help developers write Python code more efficiently. This article will introduce in detail how to install PyCharm Community Edition and provide specific code examples to help beginners get started quickly. Step 1: Download and install PyCharm Community Edition To use PyCharm, you first need to download it from its official website

AI in use | AI created a life vlog of a girl living alone, which received tens of thousands of likes in 3 days

Aug 07, 2024 pm 10:53 PM

AI in use | AI created a life vlog of a girl living alone, which received tens of thousands of likes in 3 days

Aug 07, 2024 pm 10:53 PM

Editor of the Machine Power Report: Yang Wen The wave of artificial intelligence represented by large models and AIGC has been quietly changing the way we live and work, but most people still don’t know how to use it. Therefore, we have launched the "AI in Use" column to introduce in detail how to use AI through intuitive, interesting and concise artificial intelligence use cases and stimulate everyone's thinking. We also welcome readers to submit innovative, hands-on use cases. Video link: https://mp.weixin.qq.com/s/2hX_i7li3RqdE4u016yGhQ Recently, the life vlog of a girl living alone became popular on Xiaohongshu. An illustration-style animation, coupled with a few healing words, can be easily picked up in just a few days.

A must-read for technical beginners: Analysis of the difficulty levels of C language and Python

Mar 22, 2024 am 10:21 AM

A must-read for technical beginners: Analysis of the difficulty levels of C language and Python

Mar 22, 2024 am 10:21 AM

Title: A must-read for technical beginners: Difficulty analysis of C language and Python, requiring specific code examples In today's digital age, programming technology has become an increasingly important ability. Whether you want to work in fields such as software development, data analysis, artificial intelligence, or just learn programming out of interest, choosing a suitable programming language is the first step. Among many programming languages, C language and Python are two widely used programming languages, each with its own characteristics. This article will analyze the difficulty levels of C language and Python

Five programming software for getting started with learning C language

Feb 19, 2024 pm 04:51 PM

Five programming software for getting started with learning C language

Feb 19, 2024 pm 04:51 PM

As a widely used programming language, C language is one of the basic languages that must be learned for those who want to engage in computer programming. However, for beginners, learning a new programming language can be difficult, especially due to the lack of relevant learning tools and teaching materials. In this article, I will introduce five programming software to help beginners get started with C language and help you get started quickly. The first programming software was Code::Blocks. Code::Blocks is a free, open source integrated development environment (IDE) for