Technology peripherals

Technology peripherals

It Industry

It Industry

Moore Thread Kua'e Intelligent Computing Center has expanded to the scale of 10,000 cards, with 10,000 P-level floating point computing capabilities

Moore Thread Kua'e Intelligent Computing Center has expanded to the scale of 10,000 cards, with 10,000 P-level floating point computing capabilities

Moore Thread Kua'e Intelligent Computing Center has expanded to the scale of 10,000 cards, with 10,000 P-level floating point computing capabilities

According to news from this website on July 3, Moore Thread announced today that its AI flagship product KUAE intelligent computing cluster solution has been expanded from the current kilo-card level to the 10,000-card scale. Moore Thread Kua'e Wanka intelligent computing cluster uses a full-featured GPU as the base to create a domestic general-purpose accelerated computing platform capable of carrying Wanka scale and 10,000 P-level floating point computing capabilities. It is specially designed for training complex large models with trillions of parameters. And design.

Wanka WanP: Kuae Intelligent Computing Cluster achieves a single cluster scale of over 10,000 cards, with floating point computing power reaching 10Exa-Flops, reaching PB level. Total video memory capacity, total PB-level ultra-high-speed interconnection bandwidth between cards per second, and total PB-level ultra-high-speed node interconnection bandwidth per second.

Long-term and stable training: Moore Thread boasts that the average trouble-free running time of the Wanka cluster exceeds 15 days, and can achieve stable training of large models for up to 30 days. The average weekly training efficiency is above 99%, far exceeding the industry average.

High MFU: Kua'e Wanka cluster has undergone a series of optimizations at the system software, framework, algorithm and other levels to achieve high-efficiency training of large models. MFU (a common indicator for evaluating the training efficiency of large models) can reach up to 60%.

Eco-friendly: Can accelerate large models of different architectures and modes such as LLM, MoE, multi-modal, Mamba, etc. Based on the MUSA programming language, fully compatible with CUDA capabilities and the automated migration tool Musify, it accelerates the "Day0" migration of new models.

This site has learned that Moore Thread will carry out three Wanka cluster projects, namely:

- Qinghai Zero Carbon Industrial Park Wanka Cluster Project

- Qinghai Plateau Kua'e Wanka Cluster Project

- Guangxi ASEAN Wanka Cluster Project

The above is the detailed content of Moore Thread Kua'e Intelligent Computing Center has expanded to the scale of 10,000 cards, with 10,000 P-level floating point computing capabilities. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1664

1664

14

14

1423

1423

52

52

1317

1317

25

25

1268

1268

29

29

1243

1243

24

24

Moore Threads signed a strategic cooperation with Baidu Maps to create a new generation of digital twin maps

Jul 25, 2024 am 12:31 AM

Moore Threads signed a strategic cooperation with Baidu Maps to create a new generation of digital twin maps

Jul 25, 2024 am 12:31 AM

This website reported on July 24 that recently, Moore Thread and Baidu Maps signed a strategic cooperation agreement. Both parties will leverage their respective advantages in technology and products to jointly promote technological innovation in digital twin maps. According to the cooperation agreement, the two parties will focus on the construction of the digital twin map project, taking advantage of Baidu Map's map engine advantages, digital twin technology advantages, map big data application advantages, and the 3D graphics rendering and AI computing technology advantages of Moore's thread full-featured GPU to actively carry out In-depth and extensive continuous cooperation will jointly promote the application and large-scale implementation of digital twin map solutions. According to the official introduction of Moore Thread, map data is a key asset of the country, and digital twin maps especially highlight its importance in high-load rendering scenarios, which has a significant impact on the rendering performance and performance of GPUs.

The real light of domestic graphics cards! An in-depth interpretation of Moore Thread's domestic GPU, AI and Metaverse developments

Jun 05, 2023 am 11:10 AM

The real light of domestic graphics cards! An in-depth interpretation of Moore Thread's domestic GPU, AI and Metaverse developments

Jun 05, 2023 am 11:10 AM

1. A brief history of Moore's threads: Lightspeed Entrepreneurship takes full advantage. Today, we already have relatively mature independent CPU processors, NAND flash memory, DRAM memory, and OS operating systems. As a very critical part of the computing platform, GPU graphics cards have There have always been serious shortcomings, mainly because it is not only extremely difficult in hardware design, but also ecological cultivation is even more difficult, and it cannot be achieved overnight. There are actually quite a few domestic GPU companies, but many of them are limited to specific industry fields or are oriented to high-performance computing. Those who truly dare to make a comprehensive layout and enter the consumer market cannot but mention MooreThread. On May 31, Moore Line sent an invitation to Kuai Technology for its 2023 summer press conference.

In the main battlefield of AI, Wanka is the standard configuration: the domestic GPU Wanka WanP cluster is here!

Jul 10, 2024 pm 03:07 PM

In the main battlefield of AI, Wanka is the standard configuration: the domestic GPU Wanka WanP cluster is here!

Jul 10, 2024 pm 03:07 PM

ScalingLaw continues to show results, and the computing power can hardly keep up with the expansion of large models. "The larger the scale, the higher the computing power, and the better the effect" has become an industry standard. It only took one year for mainstream large models to jump from tens of billions to 1.8 trillion parameters. Giants such as META, Google, and Microsoft have also been building ultra-large clusters with more than 15,000 cards starting in 2022. "Wanka has become the standard for the main battlefield of AI." However, in China, there are only a handful of nationally produced GPU Wanka clusters. There is a super large-scale Wanka cluster with super versatility, which is a gap in the industry. When the domestic GPU Wanka WanP cluster made its debut, it naturally attracted widespread attention in the industry. On July 3, Moore Thread announced its AI flagship product in Shanghai

Supporting the game 'Zero', Moore Thread releases graphics card driver v260.70.2 version update

Jul 22, 2024 pm 08:44 PM

Supporting the game 'Zero', Moore Thread releases graphics card driver v260.70.2 version update

Jul 22, 2024 pm 08:44 PM

According to news from this website on July 22, Moore Thread today released the graphics card driver with version number v260.70.2. This update supports the game "Zero Zero" and fixes the missing probabilistic rendering of the game launch lobby and in-game scenes and other issues. At the same time, the new driver focuses on improving the performance of many popular games including "LEGO Legends", "Survival Island: Legend of the Fountain of Youth" and "Hymn of Babel". In addition, the new driver has also specially optimized the performance of some DirectX11 games. For example, the average frame rate of "Expedition: Mud Run Game" has increased by more than 150%, and the average frame rate of "Project Zero 2: Apocalypse Party" has increased by about 150%. Graphics card users can download the latest version v2 by visiting the Moore Thread official website

Moore Thread's 'Intelligent Entertainment Mofang' desktop computer host configuration is new: i5-14400F + MTT S80 domestic graphics card sold for 7,099 yuan

Jul 28, 2024 pm 05:14 PM

Moore Thread's 'Intelligent Entertainment Mofang' desktop computer host configuration is new: i5-14400F + MTT S80 domestic graphics card sold for 7,099 yuan

Jul 28, 2024 pm 05:14 PM

According to news from this site on July 28, Moore Thread launched a desktop computer host called "Intelligent Entertainment Mofang" on June 30 last year, claiming to "focus on the gaming field". Today the official launch of the "i5-14400F" for the machine +32GBRAM+1TB+MTTS80 graphics card” configuration, the product page shows that the price is 7,099 yuan. It is reported that the gaming computer uses a 10-core 16-thread Core i5-14400F processor, a B760I landing ship motherboard, a running memory of 32GBDDR43200Mhz, and a built-in PCIe4.01TBSSD. For specific specifications, please refer to JD.com parameters as follows: In terms of GPU, "Intelligent Entertainment Mofang" "The gaming computer uses Moore Thread's own MTTS80 graphics card, which is equipped with

Moore Thread MTT S30 domestic graphics card is on sale: 4GB memory, 40W power consumption, priced at 399 yuan

Feb 22, 2024 am 08:00 AM

Moore Thread MTT S30 domestic graphics card is on sale: 4GB memory, 40W power consumption, priced at 399 yuan

Feb 22, 2024 am 08:00 AM

According to news from this website on February 21, Moore Thread MTTS30 domestic graphics card is currently on JD.com, with an initial price of 399 yuan, and a deposit of 50 yuan is required. This website noticed that the Moore Thread MTTS30 graphics card is based on the MUSA unified system architecture and is compatible with multiple CPU architectures such as x86, Arm, LoongArch, etc. It supports various graphics APIs such as OpenGL, OpenGLES and Vulkan, and supports 4K picture output and AV1 codec. Fits in a compact chassis and requires no additional power supply. In terms of parameters, this graphics card has a 1024MUSA core, a core frequency of 1.3Ghz, a 4GB graphics card, a power consumption of 40W, a card length of 144mm, a card width of 67mm, and is equipped with 1 HDMI2.

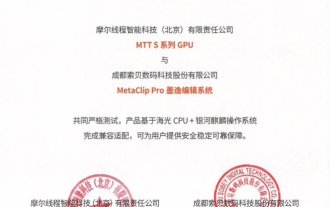

Moore Threads and Sobey implement a localized global ultra-clear solution for the entire chain of CPU, GPU, software, and system

Sep 03, 2024 am 12:00 AM

Moore Threads and Sobey implement a localized global ultra-clear solution for the entire chain of CPU, GPU, software, and system

Sep 03, 2024 am 12:00 AM

According to news from this site on September 2, Moore Thread cooperated with Sobey to demonstrate the "domesticated global ultra-clear solution" at the 31st Beijing International Radio, Film and Television Exhibition. Based on the full-featured Moore thread GPU, Sobey's MetaClipPro non-linear editing system and graphic packaging system can run stably in the domestic environment. ▲Picture source Moore Thread public account, the same as below. This site learned that through joint development, the two parties have successfully realized the localization of the entire chain from software, CPU, operating system to GPU. Sobey has also launched a number of domestic solutions equipped with Haiguang 3330 series and Haiguang 7000 series CPUs, Galaxy Kirin operating system and Moore thread full-featured GPUs.

Moore Thread releases graphics card driver v270.80: supports OpenGL 4.1 and AV1 encoding, solving 'Black Myth: Wukong ' Benchmark crash under DX11

Aug 20, 2024 pm 07:52 PM

Moore Thread releases graphics card driver v270.80: supports OpenGL 4.1 and AV1 encoding, solving 'Black Myth: Wukong ' Benchmark crash under DX11

Aug 20, 2024 pm 07:52 PM

According to news from this site on August 20, Moore Thread today released the graphics card driver version number v270.80. The new version of the driver can fully support OpenGL4.1 under the Windows 10 and Windows 11 operating system environments, and supports AV1 format encoding and VC1 format decoding. At the same time, the new driver solves the crash problem of running "Black Myth: Wukong" Benchmark in DirectX11 mode. The Moore Threading team is currently optimizing the game. The new driver has optimized the performance of more DirectX11 games. For example, the average frame rate of "Chain Together" has been increased by more than 150%, the average frame rate of "Payday 3" has been increased by more than 100%, and the average frame rate of DOTA2 has been improved by more than 150%.