A circuit breaker detects failures and encapsulates the logic for handling them in a way that prevents the same failure from recurring constantly. Circuit breakers are useful, for example, when dealing with network calls to external services, databases, or really any part of your system that might fail temporarily. By using a circuit breaker, you can prevent cascading failures, manage temporary errors, and keep your system stable and responsive while one of its parts is failing.

Cascading failures occur when a failure in one part of a system triggers failures in other parts, leading to widespread disruption. For example, when a microservice in a distributed system becomes unresponsive, the services that depend on it time out and eventually fail as well. Depending on the scale of the application, the impact of these failures can be catastrophic, degrading performance and hurting the user experience.

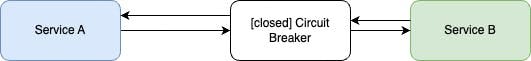

A circuit breaker is a technique (or pattern) that operates in three different states, which we will now go through:

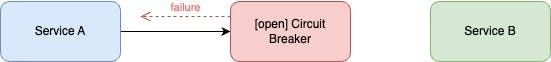

1. Closed State: In the closed state, requests pass through to the target service as usual while the circuit breaker counts failures. Once the failures reach a configured threshold, the circuit breaker trips and moves to the open state.

2. Open State: In the open state, the circuit breaker immediately fails all incoming requests without attempting to contact the target service. This state prevents further overload of the failing service and gives it time to recover. After a predefined timeout, the circuit breaker moves to the half-open state. For example, imagine an online store where every purchase attempt suddenly starts failing. To avoid overwhelming the system, the store temporarily stops accepting new purchase requests.

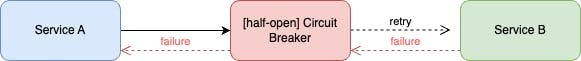

3. Half-Open State: In the half-open state, the circuit breaker allows a limited (configurable) number of test requests through to the target service. If these requests succeed, the circuit transitions back to the closed state; if they fail, it returns to the open state. In the online store example above, this is when the store starts allowing a few purchase attempts to see whether the issue has been fixed. If those attempts succeed, the store fully reopens and accepts new purchase requests again.

This diagram shows the circuit breaker sending test requests to Service B; the requests fail, so the circuit breaks (opens) again:

The follow-up diagram shows the test requests to Service B succeeding: the circuit closes, and all further calls are routed to Service B again:

Note: The key settings for a circuit breaker are the failure threshold (the number of failures needed to open the circuit), the timeout for the open state, and the number of test requests allowed in the half-open state.

This article assumes prior knowledge of Go.

As with any software engineering pattern, circuit breakers can be implemented in many languages, but this article focuses on implementing them in Golang. Several libraries are available for this purpose, such as goresilience, go-resiliency, and gobreaker; we will concentrate on the gobreaker library.

Pro Tip: To see how the gobreaker package is implemented internally, check here.

Let’s consider a simple Golang application where a circuit breaker is implemented to handle calls to an external API. This basic example demonstrates how to wrap an external API call with the circuit breaker technique:

Let’s touch on a few important things:

Let’s write some unit tests to verify our circuit breaker implementation. I will only explain the most critical tests; you can check here for the full code.

```go
// Excerpt: these subtests run inside a parent test function that already defines
// settings (gobreaker.Settings), cb (*gobreaker.CircuitBreaker), server (an
// httptest.Server), and the callExternalAPI variable.

t.Run("FailedRequests", func(t *testing.T) {
	// Override callExternalAPI to simulate failure
	callExternalAPI = func() (int, error) {
		return 0, errors.New("simulated failure")
	}

	for i := 0; i < 4; i++ {
		_, err := cb.Execute(func() (interface{}, error) {
			return callExternalAPI()
		})
		if err == nil {
			t.Fatalf("expected error, got none")
		}
	}

	if cb.State() != gobreaker.StateOpen {
		t.Fatalf("expected circuit breaker to be open, got %v", cb.State())
	}
})

// Simulate the circuit breaker being open, wait for the configured timeout,
// then check that it closes again after successful requests.
t.Run("RetryAfterTimeout", func(t *testing.T) {
	// Simulate the circuit breaker opening
	callExternalAPI = func() (int, error) {
		return 0, errors.New("simulated failure")
	}

	for i := 0; i < 4; i++ {
		_, err := cb.Execute(func() (interface{}, error) {
			return callExternalAPI()
		})
		if err == nil {
			t.Fatalf("expected error, got none")
		}
	}

	if cb.State() != gobreaker.StateOpen {
		t.Fatalf("expected circuit breaker to be open, got %v", cb.State())
	}

	// Wait for the timeout duration
	time.Sleep(settings.Timeout + 1*time.Second)

	// After the timeout period, the circuit breaker should transition to the
	// half-open state. Point callExternalAPI at the test server to simulate success.
	callExternalAPI = func() (int, error) {
		resp, err := http.Get(server.URL)
		if err != nil {
			return 0, err
		}
		defer resp.Body.Close()
		return resp.StatusCode, nil
	}

	_, err := cb.Execute(func() (interface{}, error) {
		return callExternalAPI()
	})
	if err != nil {
		t.Fatalf("expected no error, got %v", err)
	}

	// This check assumes settings.MaxRequests > 1: gobreaker closes the circuit once
	// ConsecutiveSuccesses reaches MaxRequests, so with 1 the first success closes it.
	if cb.State() != gobreaker.StateHalfOpen {
		t.Fatalf("expected circuit breaker to be half-open, got %v", cb.State())
	}

	// After verifying the half-open state, send more successful requests to ensure
	// the circuit breaker transitions back to the closed state.
	for i := 0; i < int(settings.MaxRequests); i++ {
		_, err = cb.Execute(func() (interface{}, error) {
			return callExternalAPI()
		})
		if err != nil {
			t.Fatalf("expected no error, got %v", err)
		}
	}

	if cb.State() != gobreaker.StateClosed {
		t.Fatalf("expected circuit breaker to be closed, got %v", cb.State())
	}
})

t.Run("ReadyToTrip", func(t *testing.T) {
	failures := 0
	settings.ReadyToTrip = func(counts gobreaker.Counts) bool {
		failures = int(counts.ConsecutiveFailures)
		return counts.ConsecutiveFailures > 2 // trip on the 3rd consecutive failure
	}
	cb = gobreaker.NewCircuitBreaker(settings)

	// Simulate failures
	callExternalAPI = func() (int, error) {
		return 0, errors.New("simulated failure")
	}

	for i := 0; i < 3; i++ {
		_, err := cb.Execute(func() (interface{}, error) {
			return callExternalAPI()
		})
		if err == nil {
			t.Fatalf("expected error, got none")
		}
	}

	if failures != 3 {
		t.Fatalf("expected 3 consecutive failures, got %d", failures)
	}

	if cb.State() != gobreaker.StateOpen {
		t.Fatalf("expected circuit breaker to be open, got %v", cb.State())
	}
})
```

We can take this a step further by adding an exponential backoff strategy to our circuit breaker implementation. To keep this article simple and concise, we will only demonstrate exponential backoff. However, other advanced strategies for circuit breakers are worth mentioning, such as load shedding, bulkheading, fallback mechanisms, and context-based cancellation. These strategies further enhance the robustness and functionality of circuit breakers. Here’s an example of the exponential backoff strategy:

Exponential Backoff

Circuit breaker with exponential backoff

Let’s clarify a few things:

Custom Backoff Function: The exponentialBackoff function implements an exponential backoff strategy with jitter (a random amount added to each delay). It calculates the backoff time from the number of attempts, so the delay grows exponentially with each retry.

Handling Retries: As you can see in the /api handler, the logic now includes a loop that calls the external API up to a specified number of times (attempts := 5). After each failed attempt, we wait for a duration determined by the exponentialBackoff function before retrying.

Circuit Breaker Execution: The circuit breaker is used within the loop. If the external API call succeeds (err == nil), the loop breaks and the successful result is returned. If all attempts fail, an HTTP 503 (Service Unavailable) error is returned.

Integrating a custom backoff strategy into a circuit breaker implementation helps handle transient errors more gracefully. The increasing delays between retries reduce the load on failing services, giving them time to recover. As our code above shows, the exponentialBackoff function adds delays between retries when calling the external API.

We can also integrate metrics and logging to track circuit breaker state changes, using tools like Prometheus for real-time monitoring and alerting. Here’s a simple example:

Implementing a circuit breaker pattern with advanced strategies in Go

As you’ll see, we have now done the following:

Pro Tip: The init function in Go initializes a package’s state; it runs automatically after the package’s variables are initialized and before main. In this case, the init function registers the requestCount metric with Prometheus, which ensures that Prometheus knows about this metric and can start collecting data as soon as the application starts running.

We create the circuit breaker with custom settings, including a ReadyToTrip function that increments the failure counter and determines when to trip the circuit.

We use OnStateChange to log state changes and increment the corresponding Prometheus metric.

We expose the Prometheus metrics at the /metrics endpoint.

To wrap up, I hope this article has shown how circuit breakers help build resilient and reliable systems. By failing fast instead of letting failures cascade, they make microservices and distributed systems more reliable and keep the user experience smooth even when a dependency fails.

Keep in mind that any system designed for scalability must incorporate strategies to handle failures gracefully and recover swiftly. (Oluwafemi, 2024)

Originally published at https://oluwafemiakinde.dev on June 7, 2024.

The above is the detailed content of Circuit Breakers in Go: Stop Cascading Failures.