Exploration and Practice of Multimodal Remote Sensing Large Models: An In-Depth Interpretation by Wang Jian, Head of Remote Sensing Large Models at Ant Group

On July 5, the 2024 WAIC Yunfan Award and Intelligent Youth Forum was successfully held. It was organized under the guidance of the World Artificial Intelligence Conference Organizing Committee Office and the People's Government of Xuhui District, Shanghai. The hosts were the Shanghai Artificial Intelligence Laboratory, this site, and the Global University Artificial Intelligence Academic Alliance.

The forum brought together more than 30 past and newly named Yunfan Award winners from universities, research institutions, and enterprises in China and abroad, all attending in person. Their institutions included Stanford University, Oxford University, UCLA, University of California, ETH Zurich, the University of Hong Kong, Tsinghua University, Peking University, and Shanghai Jiao Tong University. The forum gathered the insights of young international AI scientists, explored the boundaries of AI capabilities, and contributed new energy to China's AI development.

Wang Jian, head of remote sensing large models at Ant Group, spoke as a representative of the 2024 WAIC Yunfan Award winners. He delivered a keynote titled "Exploration and Practice of Multimodal Remote Sensing Large Models".

Wang Jian summarized the opportunities for developing large remote sensing models and the industry's current progress. He then presented SkySense, a 2-billion-parameter multimodal remote sensing model that Ant Group built on its Bailing large model platform, along with SkySense's open-source plan.

Through innovations in data, model architecture, and unsupervised pre-training algorithms, SkySense ranked first on 17 evaluations. These cover seven common remote sensing perception tasks, including land use monitoring and surface change detection. Wang Jian also introduced SkySense applications in rural finance, Ant Forest woodland protection, and other scenarios.

The following is the transcript of Wang Jian’s speech:

Good afternoon, everyone! I am Wang Jian from Ant Group. I am very happy to share Ant Group's exploration and practice in multimodal remote sensing large models at the Yunfan Award Forum. My talk has three parts: first, the research background; second, SkySense, the multimodal remote sensing large model developed by Ant Group; and third, applications built on SkySense.

The emergence of large models has driven the rapid development of generative AI, but industry is still far from applying them at scale. Large models have opened the door to a new world of AI. Even so, we believe the intrinsic value of the new AI paradigm can only be truly released by deeply integrating large-model applications into thousands of industries and transforming productivity. With this in mind, Ant Group is actively investing in large model technology and applications.

In terms of foundational capabilities, we have built a ten-thousand-GPU ("Wanka") computing cluster and focused on large model security and knowledge. On security, Ant Group developed its own Ant Tianjian platform, which provides an integrated security solution for large models and ensures that Ant Group's large models are safe and trustworthy.

On top of these foundational capabilities, we built the Bailing large language model and the Bailing multimodal large model. Building on these two foundation models and on the characteristics of Ant Group's business, we apply large models in finance, healthcare, people's livelihood, security, remote sensing, coding, and other industries. These applications serve consumers and enterprise customers and promote trusted intelligence and the development of the service industry.

The overall system is very large. I will therefore use remote sensing as an entry point to share some of our thinking and practice across the field of large models.

The development of large language and vision models offers many important lessons for large remote sensing models. For example, when large language models are extended to multimodal inputs, they perform well on tasks that previously belonged to vision, such as OCR and VQA. Among pure vision models, algorithms like SAM show strong performance on classification, detection, and segmentation tasks. The main tasks in remote sensing are also classification, detection, and segmentation. A natural idea, then, is to bring the successful experience of large vision models into remote sensing.

On the other hand, remote sensing technology is developing rapidly, and the field continuously produces massive amounts of multi-temporal data. These include visible-light images that resemble natural images, multispectral data carrying richer spectral information, and radar (SAR) images. Because they come from different satellites and different sensors, we can treat them as different modalities.

These data are unlabeled. Labeling them is time-consuming and labor-intensive, and in many cases it also requires expert knowledge. Only unsupervised algorithms can fully exploit the value of these data.

In recent years, many channels for obtaining remote sensing imagery have emerged. Examples include the European Space Agency's Copernicus platform, Google Earth Engine (GEE), and the data platform of the China Centre for Resources Satellite Data and Application. All of them make remote sensing data easy to obtain.

In summary, remote sensing offers abundant, readily available data. Combined with the successful experience of large vision models, this gives large remote sensing models both a good opportunity and strong motivation.

This chart shows the large remote sensing models released in recent years. Starting in 2021, the industry began using unsupervised pre-training for remote sensing image recognition, with models such as SeCo. Since then, more and more companies and institutions have joined and produced representative works. These include the RingMo model released by the Aerospace Information Research Institute of the Chinese Academy of Sciences in 2022, the Satlas model in 2023, and the GRAFT model released not long ago by Fudan University.

Several clear trends emerge from the chart. Data and parameter scales keep growing, and performance keeps improving. Models have moved:

- from single-modal data to fused multimodal data;
- from images from a single data source to fused images from multiple sources;
- from interpreting a single static image to fusing information across an entire image time series.

These trends mirror those of large language and vision models. We can expect large remote sensing models with more parameters and stronger performance to appear in the future.

Back to Ant: why does Ant build large remote sensing models? Ant has many financial businesses, and one of them is rural finance. If you ask people in finance what the hardest area is, I believe 99% will say rural finance.

The main customers of rural finance are farmers. Unlike corporate white-collar workers, farmers lack good credit data. Unlike small and micro business owners, they lack collateral that banks recognize. In addition, banks have very few rural branches and cannot conduct large-scale on-site surveys to assess farmers' assets. The core pain point is that the value of land, farmers' main asset, cannot be digitized at scale.

To address this pain point, Ant's online bank, MYbank, developed an asset evaluation system in 2019 based on satellite remote sensing and AI image recognition. The system combines satellite imagery with AI algorithms to identify which crops are planted in a farmer's fields, how large the planted area is, and how well the crops are growing. It uses this information to analyze the farmer's planting situation, determine the value of the asset, and provide credit services. In the early days, it focused on staple food crops such as rice, corn, and wheat, and served millions of farmers.
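
Estimating how much land a farmer has planted reduces to simple arithmetic once a model has labeled each pixel. Count the pixels of each crop class, then multiply by the ground area of one pixel. The sketch below assumes a per-pixel classification map and square pixels of known size (for example 10 m). The class codes and the function name `crop_areas_hectares` are hypothetical and not part of Ant's system.

```python
from collections import Counter

# Hypothetical class codes for a per-pixel crop map (illustration only).
CLASS_NAMES = {0: "background", 1: "rice", 2: "corn", 3: "wheat"}

def crop_areas_hectares(class_map, pixel_size_m):
    """Return planted area per crop class in hectares.

    class_map: 2-D list of integer class codes, one per pixel.
    pixel_size_m: side length of one square pixel on the ground, in metres.
    """
    counts = Counter(code for row in class_map for code in row)
    pixel_area_ha = (pixel_size_m ** 2) / 10_000  # 1 ha = 10,000 m^2
    return {
        CLASS_NAMES.get(code, f"class_{code}"): n * pixel_area_ha
        for code, n in counts.items()
        if code != 0  # background pixels are not planted area
    }

field = [[1, 1, 2, 2],
         [1, 1, 2, 2],
         [0, 0, 2, 2],
         [0, 0, 0, 0]]
print(crop_areas_hectares(field, pixel_size_m=10))
```

With 10 m pixels, each pixel covers 0.01 ha, so four rice pixels correspond to 0.04 ha.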

When we extended this system to cash crops such as apples and citrus, we ran into recognition problems. Compared with staple crops, cash crops are planted more sparsely and in more diverse ways. Their categories are also highly long-tailed: there are only a few types of staple crops, but dozens of types of cash crops. Identifying so many crop types across the whole country is a hard problem in remote sensing.

Technically, model performance can be improved through few-shot learning, multimodal time-series algorithms, and general-purpose representations that improve generalization. These are exactly the characteristics of foundation models, so we decided to develop a large remote sensing model.
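
Few-shot learning on top of a pretrained backbone can take many forms. One common and simple variant, shown here only as an illustration, is nearest-prototype classification in the style of prototypical networks. First, average the embeddings of the few labeled examples of each crop. Then assign a new field to the closest average. The embeddings are assumed to come from a frozen foundation model. The vectors, labels, and function names are hypothetical, and the talk does not say which few-shot method Ant uses.

```python
import math

def mean_vector(vectors):
    """Element-wise mean of equal-length feature vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def build_prototypes(support):
    """support: dict mapping crop label -> list of embeddings (the few labeled shots)."""
    return {label: mean_vector(vecs) for label, vecs in support.items()}

def classify(embedding, prototypes):
    """Assign the label whose prototype is nearest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(embedding, prototypes[label]))

# Toy 2-D embeddings standing in for foundation-model features.
support = {
    "apple": [[1.0, 0.0], [0.9, 0.1]],
    "citrus": [[0.0, 1.0], [0.1, 0.9]],
}
prototypes = build_prototypes(support)
print(classify([0.8, 0.2], prototypes))
```

Adding a new crop only requires a handful of labeled examples and a new prototype, with no retraining of the backbone. This is why the approach pairs naturally with general-purpose representations.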

The following summarizes the opportunities and motivations for Ant Group to build large-scale remote sensing models.

On the technical level, foundation model technology is developing rapidly and now has commercial potential. On the data level, the field has massive amounts of remote sensing data, which lays the foundation for large remote sensing models. On the business level, such a model can meet Ant's needs for multimodal, multi-temporal, and multi-task scenarios. Driven by these factors, Ant Group and the School of Remote Sensing of Wuhan University jointly developed the multimodal remote sensing large model SkySense.

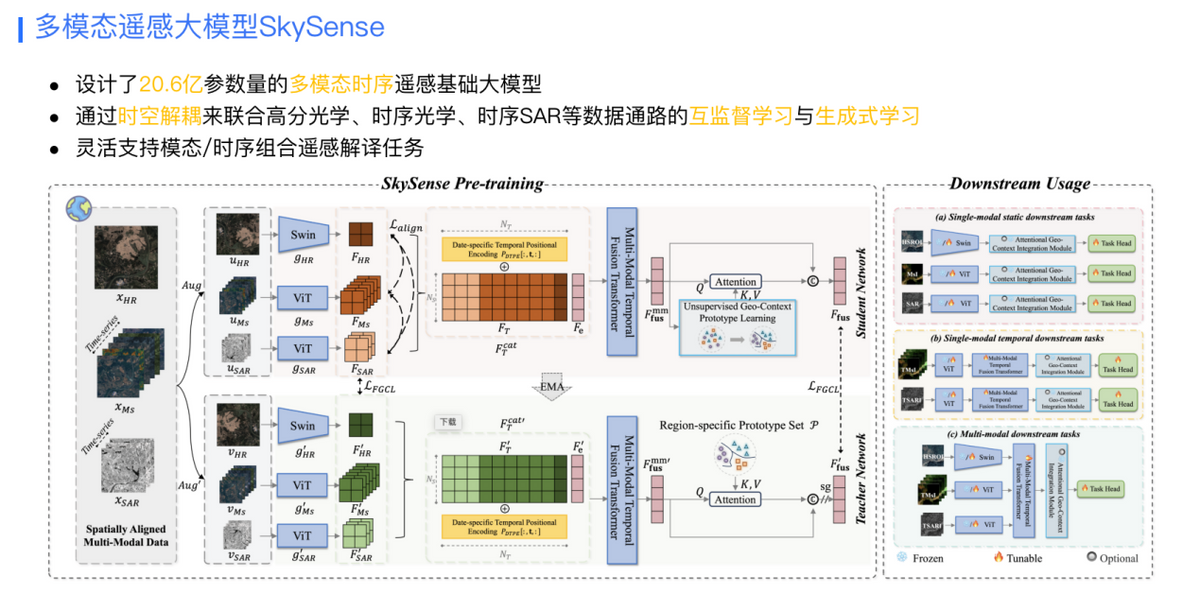

To train this model, we collected 21.5 million sample groups distributed around the world. Each group contains high-resolution optical, time-series optical, and radar (SAR) images. The data cover more than 40 countries and regions and 8.78 million square kilometers of land, totaling 300 TB.
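
For a sense of scale, dividing the quoted total storage by the number of sample groups gives the average size of one group, with all its image types together. This assumes decimal units (1 TB = 10^12 bytes) and ignores any indexing overhead. The covered land area is not divided here, because groups from different dates can overlap on the ground.

```python
SAMPLE_GROUPS = 21_500_000   # sample groups quoted in the talk
TOTAL_BYTES = 300 * 10**12   # 300 TB, assuming decimal units

avg_mb = TOTAL_BYTES / SAMPLE_GROUPS / 10**6
print(f"Average storage per sample group: {avg_mb:.1f} MB")  # prints 14.0 MB
```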

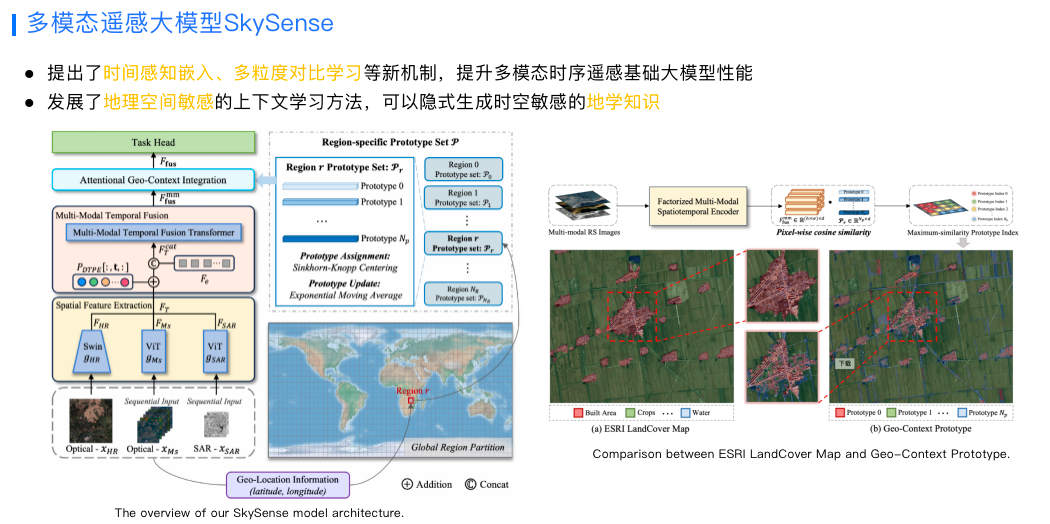

For the model architecture, we designed a multi-granularity contrastive learning method to better integrate information from different modalities. We also proposed a spatio-temporal-aware embedding algorithm based on the characteristics of remote sensing images. Both help improve the performance of the remote sensing foundation model.
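
The talk does not spell out the multi-granularity contrastive loss. The sketch below therefore shows only the standard single-level InfoNCE objective as a stand-in. Embeddings of the same location from two modalities (say, optical and SAR) form a positive pair, and every other location in the batch serves as a negative. The temperature of 0.1 and the toy vectors are assumptions. One plausible reading of "multi-granularity" is applying a loss like this at several levels of detail, but that is not confirmed by the source.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def info_nce(anchors, positives, temperature=0.1):
    """Mean InfoNCE loss over a batch.

    anchors[i] and positives[i] embed the same location in two modalities
    (e.g. optical and SAR); positives[j] for j != i act as negatives.
    """
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [cosine(anchor, p) / temperature for p in positives]
        peak = max(logits)  # subtract the max before exp for numerical stability
        log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
        total += log_sum_exp - logits[i]  # equals -log softmax(logits)[i]
    return total / len(anchors)

optical = [[1.0, 0.0], [0.0, 1.0]]
sar_matched = [[0.9, 0.1], [0.1, 0.9]]
sar_mismatched = [[0.1, 0.9], [0.9, 0.1]]
print(info_nce(optical, sar_matched), info_nce(optical, sar_mismatched))
```

The loss is small when each optical embedding is closest to the SAR embedding of the same location. Minimizing it pulls the two modalities into a shared representation without any labels.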

Remote sensing has another characteristic: a full remote sensing image is usually very large and cannot be loaded onto the GPU for training all at once. The common industry practice is therefore to cut the image into small patches that fit in GPU memory. The obvious problem is that each patch loses its surrounding context during training. To address this, we developed a geospatially aware context learning algorithm that implicitly learns spatiotemporally aware geoscience knowledge.
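
The tiling practice can be sketched as follows. The function computes windows that cover a large scene with tiles small enough for GPU memory. The optional `overlap` parameter is a common partial remedy for lost context, since neighboring tiles share a border. Tile size, overlap, and the function name are illustrative assumptions. This is not the geospatial context learning algorithm itself.

```python
def tile_windows(height, width, tile, overlap=0):
    """Yield (row, col, h, w) windows that cover a height x width image.

    tile: side length of each square tile in pixels (chosen to fit GPU memory).
    overlap: pixels shared by neighbouring tiles, so each tile keeps some context.
    The last row/column of tiles is shifted inward so no window runs off the image.
    """
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be in [0, tile)")
    stride = tile - overlap

    def starts(size):
        if size <= tile:
            return [0]
        positions = list(range(0, size - tile, stride))
        positions.append(size - tile)  # final tile flush with the image edge
        return positions

    for r in starts(height):
        for c in starts(width):
            yield r, c, min(tile, height - r), min(tile, width - c)

for window in tile_windows(1000, 1000, tile=512):
    print(window)
```

Shifting the last tile inward, rather than padding, keeps every tile full-sized. The cost is that some pixels near the edges are seen twice.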

SkySense currently has 2.06 billion parameters. For training, we go beyond the commonly used unsupervised contrastive pre-training. Based on the characteristics of remote sensing images, we also proposed spatio-temporally decoupled, mutually supervised, and generative learning methods. These combine data channels such as high-resolution optical, time-series optical, and time-series SAR imagery. As a result, the model flexibly supports downstream interpretation tasks with different combinations of modalities and time steps.

SkySense has achieved strong results on 17 evaluation datasets, covering tasks such as land use monitoring and object detection. The related paper was accepted at CVPR 2024 (the IEEE/CVF Conference on Computer Vision and Pattern Recognition).

Training this model required a large investment in storage, computing power, and human resources. We very much hope to share SkySense with the industry to unleash its value and advance the entire field of remote sensing interpretation. On June 15 this year, we began offering trial access to the model to some research institutions, and users have given us a lot of feedback.

For example, some users said that 2 billion parameters is too large, because many scenarios do not need such a big model. In response, we developed a set of algorithms that generate small models of multiple sizes from a single pre-training run. Each of these small models performs better than a model of the same size trained directly.

In real industrial applications, model weights alone are not enough. A matching data system and product system are needed to truly realize the value of a large model. This is the overall picture of Ant Group's remote sensing technology.

At the data level, we developed a spatio-temporal database that manages data of different modalities and sources, supporting efficient training and inference of large remote sensing models. We also worked with the School of Remote Sensing of Wuhan University on a preprocessing system for Chinese remote sensing data. It uses integrated photogrammetry and remote sensing techniques to greatly improve the quality of domestic data.

On the product side, we developed the mEarth intelligent remote sensing workbench. It provides one-stop data asset management, data production and processing, model training, and business application capabilities, so it can efficiently and flexibly support access from various downstream application scenarios.
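
To illustrate the kind of query a spatio-temporal database must answer, the sketch below filters a catalogue of scenes by bounding box, acquisition date range, and modality. The `Scene` record, its fields, and `query` are hypothetical. A production system would use a spatial index (such as an R-tree) instead of this linear scan, and the talk does not describe Ant's actual schema.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class Scene:
    """Metadata for one image in a hypothetical catalogue."""
    scene_id: str
    modality: str   # e.g. "hr_optical", "multispectral", "sar"
    acquired: date
    bbox: tuple     # (min_lon, min_lat, max_lon, max_lat)

def intersects(a, b):
    """True if two (min_lon, min_lat, max_lon, max_lat) boxes overlap."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]

def query(scenes, bbox, start, end, modalities=None):
    """Return scenes overlapping bbox, acquired in [start, end], optionally of given modalities."""
    return [
        s for s in scenes
        if intersects(s.bbox, bbox)
        and start <= s.acquired <= end
        and (modalities is None or s.modality in modalities)
    ]

catalogue = [
    Scene("a", "sar", date(2024, 5, 1), (120.0, 30.0, 121.0, 31.0)),
    Scene("b", "hr_optical", date(2024, 6, 1), (120.5, 30.5, 121.5, 31.5)),
    Scene("c", "sar", date(2023, 1, 1), (120.0, 30.0, 121.0, 31.0)),
    Scene("d", "sar", date(2024, 5, 2), (100.0, 20.0, 101.0, 21.0)),
]
area = (120.2, 30.2, 120.8, 30.8)
print([s.scene_id for s in query(catalogue, area, date(2024, 1, 1), date(2024, 12, 31))])
```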

Next, I will share how SkySense is applied in practice. In the rural finance scenario mentioned earlier, satellite remote sensing combined with large-model recognition lets us do three things. We can accurately identify crop types at different periods, detect whether crops are affected by diseases and pests, and determine which growth stage they are in. Diversified financial services can then be matched to each growth stage, giving farmers better credit support.

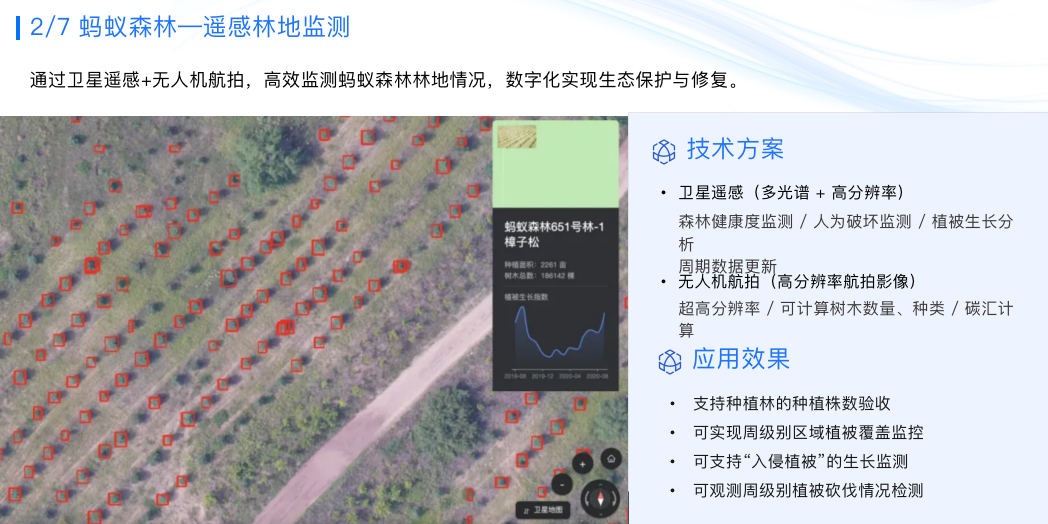

Ant Forest has planted 475 million trees and protects 4,800 square kilometers of public-welfare conservation areas. Protecting this much forest land requires technology. We combine satellite remote sensing and drone aerial photography with large-model recognition to efficiently monitor the state of Ant Forest and achieve digital ecological protection and restoration.

Calculating and measuring carbon sinks is a very important topic in ESG. Current carbon sink accounting relies heavily on manual labor, which hinders the development of carbon sink trading. We are experimenting with satellite remote sensing and large-model technology to build a carbon sink accounting system that requires no manual intervention, or much less of it. We are also developing a system for monitoring forest area change and estimating biomass increment.

This is a forest protection project. We use the large remote sensing model to detect both natural changes and changes caused by human damage, enabling regular monitoring and protection of large areas of forest land.
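
One simple way to detect forest loss is post-classification comparison, shown here as a sketch. Classify two co-registered images from different dates, then flag pixels that changed from forest to something else. The class code, pixel size, and function name are assumptions. The talk does not say how SkySense performs change detection or how it separates natural from human-caused change, and this sketch does not attempt that distinction.

```python
FOREST = 1  # hypothetical class code for forest in both land-cover maps

def forest_loss(before, after, pixel_size_m):
    """Compare two co-registered land-cover maps of the same area.

    Returns (changed_pixels, lost_area_hectares) for pixels that were forest
    in `before` and are no longer forest in `after`.
    """
    changed = [
        (r, c)
        for r, (row_b, row_a) in enumerate(zip(before, after))
        for c, (b, a) in enumerate(zip(row_b, row_a))
        if b == FOREST and a != FOREST
    ]
    area_ha = len(changed) * pixel_size_m ** 2 / 10_000  # 1 ha = 10,000 m^2
    return changed, area_ha

before = [[1, 1, 1],
          [1, 1, 0],
          [0, 0, 0]]
after = [[1, 0, 1],
         [1, 2, 0],
         [0, 1, 0]]
print(forest_loss(before, after, pixel_size_m=10))
```

Pixel (2, 1) turns from non-forest into forest, so it counts as gain and is not reported. Only forest-to-non-forest transitions are flagged.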

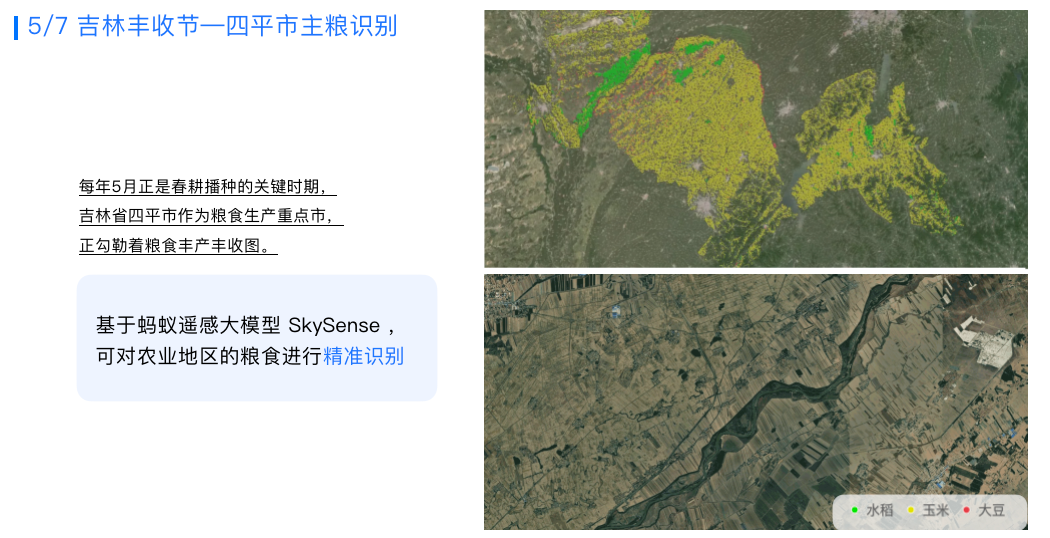

This is the result of identifying staple food crops in Siping City, Jilin, with the large remote sensing model. As you can see, even in this area with relatively complex planting conditions, the model makes accurate pixel-level identifications.

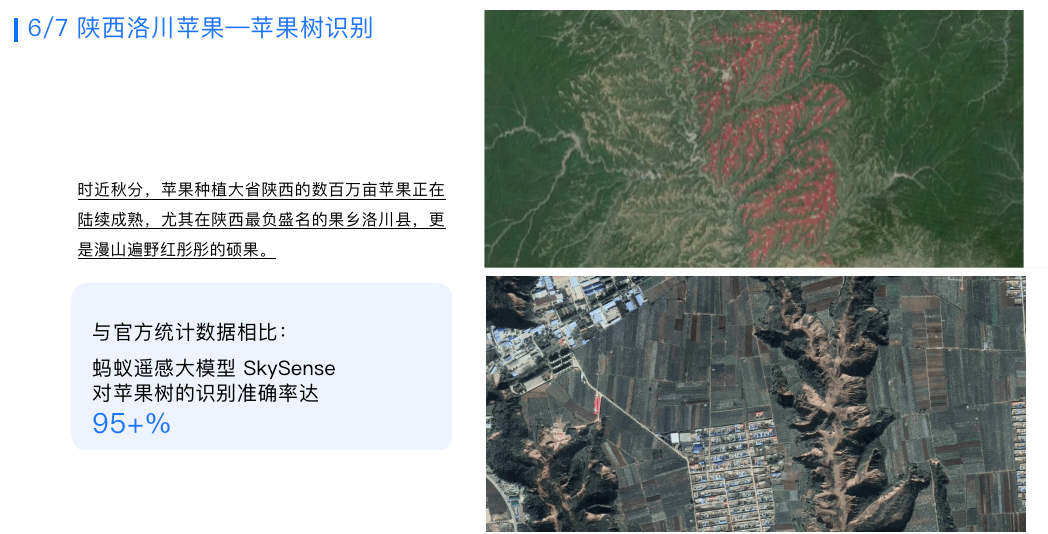

This is Luochuan, Shaanxi. Even in this region with complex terrain, SkySense identifies apple plantings with an accuracy above 95%.
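
The talk does not say which metric the 95% figure refers to. Two common choices for pixel-level maps are overall accuracy (the share of all pixels labeled correctly) and per-class recall (the share of true apple pixels the model finds). Both are sketched below on hypothetical label grids.

```python
def overall_accuracy(predicted, reference):
    """Fraction of pixels whose predicted label matches the reference label."""
    pairs = [(p, r) for row_p, row_r in zip(predicted, reference)
             for p, r in zip(row_p, row_r)]
    if not pairs:
        raise ValueError("empty maps")
    return sum(p == r for p, r in pairs) / len(pairs)

def class_recall(predicted, reference, label):
    """Share of reference pixels of `label` that the model also labelled `label`."""
    hits = total = 0
    for row_p, row_r in zip(predicted, reference):
        for p, r in zip(row_p, row_r):
            if r == label:
                total += 1
                hits += int(p == label)
    return hits / total if total else float("nan")

reference = [["apple", "apple"], ["other", "apple"]]
predicted = [["apple", "other"], ["other", "apple"]]
print(overall_accuracy(predicted, reference), class_recall(predicted, reference, "apple"))
```

The two numbers can differ substantially when apple orchards cover only a small share of a scene. Reporting which one is meant makes an accuracy figure easier to compare.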

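We also used SkySense to analyze global nighttime light data, showing economic activity in different regions. The Shanghai area clearly stands out as highly economically active.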

That concludes my talk. Thank you, everyone!
