MotionClone: No training required, one-click cloning of video movements

The AIxiv column is where this site publishes academic and technical content. Over the past few years, it has carried more than 2,000 reports covering top laboratories at major universities and companies around the world, promoting academic exchange and dissemination. If you have excellent work to share, please feel free to contribute or contact us for coverage. Submission email: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com

No training or fine-tuning is required. MotionClone clones the motion of a reference video into a new scene specified by a text prompt, whether that motion is global camera movement or local body movement, all in one click.

Paper: https://arxiv.org/abs/2406.05338

Homepage: https://bujiazi.github.io/motionclone.github.io/

Code: https://github.com/Bujiazi/MotionClone

This article introduces a new framework called MotionClone. Given any reference video, it extracts the corresponding motion information without model training or fine-tuning. This motion information, together with a text prompt, then directly guides the generation of a new video, yielding text-to-video (text2video) generation with customized motion.

Compared with previous research, MotionClone has the following advantages:

No training or fine-tuning required: Previous methods usually either train a model to encode motion cues or fine-tune a video diffusion model to fit specific motion patterns. Models trained to encode motion cues generalize poorly to motion outside the training domain, and fine-tuning an existing video generation model may damage the generation quality of the base model. MotionClone requires no additional training or fine-tuning, which improves motion generalization while retaining as much of the base model's generation quality as possible.

Higher motion quality: Existing open-source text-to-video models struggle to generate large yet plausible motion. MotionClone introduces principal component temporal-attention motion guidance, which greatly increases the motion amplitude of the generated video while keeping the motion plausible.

Better spatial relationships: To avoid the spatial semantic mismatch that direct motion cloning can cause, MotionClone proposes spatial semantic guidance based on cross-attention masks, which helps couple the correct spatial semantic information with the spatiotemporal motion information.

Motion information in the temporal attention module

In text-to-video work, the temporal attention module (Temporal Attention) is widely used to model the inter-frame correlation of videos. Since the attention scores (the attention map) in this module characterize the correlation between frames, an intuitive idea is to replicate the inter-frame relationships, and thereby clone the motion, by constraining the attention scores of the generated video to match those of the reference video exactly.

However, experiments show that directly copying the complete attention map (plain control) achieves only very rough motion transfer. Most of the weights in the attention map correspond to noise or very subtle motion. On the one hand, these are hard to reconcile with the new scene specified by the text; on the other hand, they obscure the motion guidance that is actually effective.

To solve this problem, MotionClone introduces the principal component temporal-attention guidance mechanism (Primary temporal-attention guidance), which uses only the principal components of the temporal attention to guide video generation sparsely. This filters out the negative impact of noise and subtle motion information and enables effective motion cloning in the new scene specified by the text.
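The sparse guidance described above can be illustrated with a minimal sketch. It assumes the temporal attention is available as an array whose last axis runs over frames, and that "principal components" means the k largest entries along that axis; the function names, the parameter `k`, and the squared-error energy are illustrative assumptions, not taken from the MotionClone code.

```python
import numpy as np

def topk_temporal_mask(attn_ref: np.ndarray, k: int = 1) -> np.ndarray:
    """Boolean mask keeping the k largest entries along the last (frame) axis.

    Assumption: attn_ref has shape (..., frames, frames) and k is a
    hypothetical sparsity parameter.
    """
    # Indices of the k largest values in every attention row.
    idx = np.argpartition(attn_ref, -k, axis=-1)[..., -k:]
    mask = np.zeros_like(attn_ref, dtype=bool)
    np.put_along_axis(mask, idx, True, axis=-1)
    return mask

def motion_energy(attn_ref: np.ndarray, attn_gen: np.ndarray, k: int = 1) -> float:
    """Squared difference between reference and generated attention,
    counted only at the masked (dominant) positions."""
    mask = topk_temporal_mask(attn_ref, k)
    diff = (attn_ref - attn_gen) * mask
    return float(np.sum(diff ** 2))

# Toy example: one spatial location, two query frames, three key frames.
ref = np.array([[[0.7, 0.2, 0.1],
                 [0.1, 0.6, 0.3]]])
gen = ref.copy()
gen[0, 0, 1] += 0.05   # change a weak entry: ignored by the sparse energy
print(motion_energy(ref, gen))
```

Plain control corresponds to using an all-ones mask, so every weak entry would also be constrained; the sparse mask leaves those entries free to adapt to the new scene.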

Spatial semantic correction

Principal component temporal-attention motion guidance can clone the motion of the reference video, but it cannot ensure that the moving subject matches the user's intent. This lowers the quality of the generated video and, in some cases, even misplaces the moving subject.

To solve this problem, MotionClone introduces a spatial semantic guidance mechanism (Location-aware semantic guidance). It divides the video into foreground and background regions with a cross-attention mask and constrains the semantic information of each region separately. This ensures a sensible layout of spatial semantics and promotes the correct coupling of temporal motion and spatial semantics.
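As a rough illustration of the foreground/background split, the sketch below thresholds a single cross-attention map and scores how well a generated attention map respects the resulting regions. The normalization, the `threshold` value, and the energy definition are assumptions made for illustration; the article does not specify how MotionClone formulates these constraints.

```python
import numpy as np

def split_foreground(cross_attn: np.ndarray, threshold: float = 0.5):
    """Split an (H, W) cross-attention map into foreground/background masks.

    Assumption: the map belongs to the prompt token naming the moving
    subject, and `threshold` is a hypothetical cut-off on the
    min-max-normalized map.
    """
    lo, hi = cross_attn.min(), cross_attn.max()
    norm = (cross_attn - lo) / (hi - lo + 1e-8)
    fg = norm > threshold
    return fg, ~fg

def semantic_energy(gen_attn: np.ndarray, fg: np.ndarray) -> float:
    """Illustrative energy: lower when the generated subject attention is
    concentrated inside the foreground region rather than outside it."""
    bg = ~fg
    inside = gen_attn[fg].mean() if fg.any() else 0.0
    outside = gen_attn[bg].mean() if bg.any() else 0.0
    return float(outside - inside)

ref_attn = np.array([[0.9, 0.1],
                     [0.8, 0.2]])
fg, bg = split_foreground(ref_attn)
aligned = np.array([[0.8, 0.1], [0.7, 0.1]])     # subject stays on the left
misplaced = np.array([[0.1, 0.8], [0.1, 0.7]])   # subject drifts to the right
print(semantic_energy(aligned, fg) < semantic_energy(misplaced, fg))
```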

MotionClone implementation details

DDIM Inversion: MotionClone uses DDIM inversion to invert the input reference video into latent space, from which the principal components of the reference video's temporal attention are extracted.

Guidance stage: At each denoising step, MotionClone applies principal component temporal-attention motion guidance and spatial semantic guidance simultaneously. The two work together to provide comprehensive motion and semantic guidance for controllable video generation.

Gaussian Mask: In the spatial semantic guidance mechanism, a Gaussian kernel is used to blur the cross-attention mask, eliminating the influence of potential structural information.
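For readers unfamiliar with DDIM inversion, here is a minimal sketch of the standard deterministic update run in the noising direction. The noise predictor `eps_fn` and the schedule `alphas_cumprod` are placeholders standing in for a real video diffusion model; nothing here is taken from the MotionClone implementation.

```python
import numpy as np

def ddim_step(x, eps, alpha_from, alpha_to):
    """Deterministic DDIM update between two cumulative-alpha levels.

    Works in both directions: alpha_to < alpha_from adds noise (inversion),
    alpha_to > alpha_from removes it (sampling).
    """
    # Estimate of the clean latent implied by the current noise prediction.
    x0 = (x - np.sqrt(1.0 - alpha_from) * eps) / np.sqrt(alpha_from)
    return np.sqrt(alpha_to) * x0 + np.sqrt(1.0 - alpha_to) * eps

def ddim_invert(latent, eps_fn, alphas_cumprod):
    """Walk a clean latent toward noise, returning every intermediate latent.

    Assumptions: `eps_fn(x, t)` is a hypothetical noise predictor and
    `alphas_cumprod` is ordered from least noisy to most noisy.
    """
    x = latent
    trajectory = [x]
    for t in range(len(alphas_cumprod) - 1):
        eps = eps_fn(x, t)
        x = ddim_step(x, eps, alphas_cumprod[t], alphas_cumprod[t + 1])
        trajectory.append(x)
    return trajectory

rng = np.random.default_rng(0)
latent = rng.normal(size=(2, 4))
fixed_eps = rng.normal(size=(2, 4))          # toy stand-in for a model
alphas = np.array([0.99, 0.8, 0.5, 0.2])
trajectory = ddim_invert(latent, lambda x, t: fixed_eps, alphas)
print(len(trajectory))
```

In a real pipeline the temporal attention maps would be recorded while the model processes these inverted latents; with a learned noise predictor the inversion is only approximately reversible.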
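One common way to apply several guidance signals at a denoising step is to add the weighted gradients of their energies to the predicted noise, as in classifier-style guidance. The sketch below assumes that form; the weights `w_motion` and `w_semantic`, the function name, and the exact scaling are illustrative assumptions rather than the update rule used by MotionClone.

```python
import numpy as np

def guided_noise(eps, grad_motion, grad_semantic, alpha_bar,
                 w_motion=1.0, w_semantic=1.0):
    """Combine two energy gradients with the model's noise prediction.

    Assumptions: grad_motion and grad_semantic are gradients of the motion
    and semantic energies with respect to the current latent, and alpha_bar
    is the cumulative alpha at this step. Adding the energy gradient to the
    noise pushes the next latent toward lower energy.
    """
    grad = w_motion * grad_motion + w_semantic * grad_semantic
    return eps + np.sqrt(1.0 - alpha_bar) * grad

eps = np.zeros(3)
grad_motion = np.ones(3)
grad_semantic = 2.0 * np.ones(3)
print(guided_noise(eps, grad_motion, grad_semantic, alpha_bar=0.75,
                   w_motion=0.5, w_semantic=0.25))
```

In practice the gradients would come from automatic differentiation through the attention layers of the diffusion model.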
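Blurring a binary mask with a Gaussian kernel can be sketched as follows. The separable NumPy implementation, the `sigma` default, and the edge padding are illustrative choices, not details from the MotionClone code.

```python
import numpy as np

def gaussian_kernel1d(sigma: float, radius: int) -> np.ndarray:
    """Normalized 1-D Gaussian kernel of length 2 * radius + 1."""
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def blur_mask(mask: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Soften a 2-D binary mask with a separable Gaussian blur.

    Assumption: `sigma` is a hypothetical blur strength. Edge padding keeps
    values near the border from being pulled toward zero.
    """
    radius = max(1, int(3 * sigma))
    k = gaussian_kernel1d(sigma, radius)
    padded = np.pad(mask.astype(float), radius, mode="edge")
    # Blur along rows, then along columns.
    padded = np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, padded)
    padded = np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, padded)
    # Crop the padding away to restore the original shape.
    return padded[radius:-radius, radius:-radius]

mask = np.zeros((7, 7), dtype=bool)
mask[2:5, 2:5] = True
soft = blur_mask(mask, sigma=1.0)
print(soft.shape)
```

The soft mask no longer has a hard silhouette, so the guidance constrains where the subject is without copying the exact outline of the reference object.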

Thirty videos from the DAVIS dataset were used for testing. The experimental results show that MotionClone achieves significant improvements in text alignment, temporal consistency, and multiple user-study metrics, surpassing previous motion transfer methods. The specific results are shown in the table below.

A comparison of the results generated by MotionClone and existing motion transfer methods is shown in the figure below, where MotionClone shows leading performance.

To sum up, MotionClone is a new motion transfer framework that can effectively clone the motion in a reference video into a new scene specified by a user-given text prompt, without training or fine-tuning. It provides a plug-and-play motion customization solution for existing text-to-video models.

While retaining the generation quality of the existing base model, MotionClone introduces efficient principal component motion guidance and spatial semantic guidance. These significantly improve motion consistency with the reference video while preserving semantic alignment with the text, achieving high-quality and controllable video generation.

In addition, MotionClone can be applied directly to a wide range of community models for diversified video generation, and is highly extensible.

The above is the detailed content of MotionClone: No training required, one-click cloning of video movements. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

DeepMind robot plays table tennis, and its forehand and backhand slip into the air, completely defeating human beginners

Aug 09, 2024 pm 04:01 PM

DeepMind robot plays table tennis, and its forehand and backhand slip into the air, completely defeating human beginners

Aug 09, 2024 pm 04:01 PM

DeepMind robot plays table tennis, and its forehand and backhand slip into the air, completely defeating human beginners

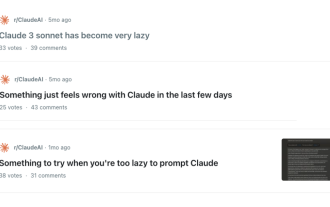

Claude has become lazy too! Netizen: Learn to give yourself a holiday

Sep 02, 2024 pm 01:56 PM

Claude has become lazy too! Netizen: Learn to give yourself a holiday

Sep 02, 2024 pm 01:56 PM

Claude has become lazy too! Netizen: Learn to give yourself a holiday

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

Aug 21, 2024 pm 07:33 PM

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

Aug 21, 2024 pm 07:33 PM

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Aug 22, 2024 pm 10:35 PM

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Aug 22, 2024 pm 10:35 PM

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

Sep 03, 2024 pm 05:18 PM

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

Sep 03, 2024 pm 05:18 PM

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Aug 08, 2024 am 07:02 AM

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Aug 08, 2024 am 07:02 AM

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

The first large UI model in China is released! Motiff's large model creates the best assistant for designers and optimizes UI design workflow

Aug 19, 2024 pm 04:48 PM

The first large UI model in China is released! Motiff's large model creates the best assistant for designers and optimizes UI design workflow

Aug 19, 2024 pm 04:48 PM

The first large UI model in China is released! Motiff's large model creates the best assistant for designers and optimizes UI design workflow