Japan's fastest AI supercomputer to launch this year: built on NVIDIA H200 GPUs, with up to 6 exaflops of AI computing power

On July 18, according to NVIDIA Japan's official blog, HPE will build ABCI 3.0, Japan's fastest AI supercomputer, for Japan's National Institute of Advanced Industrial Science and Technology (AIST). ABCI 3.0 will be installed at a facility in Kashiwa, Chiba Prefecture, a suburb of Tokyo, and is scheduled to enter service by the end of this year.

▲ Installation site diagram As the name suggests, ABCI 3.0 will become the third generation AI Bridging Cloud Infrastructure (ABCI) supercomputer of the Industry and Research Institute, for Japanese industry, government and academia The industry provides AI cloud services to accelerate Japan’s research, development, innovation and social practice in the field of AI.

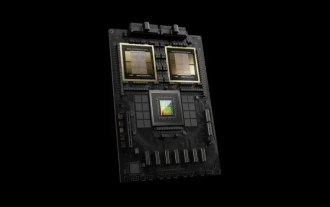

ABCI 3.0 is built on HPE Cray XD systems, with eight NVIDIA H200 GPUs in each node. The nodes are linked by an NVIDIA Quantum-2 InfiniBand network to meet the high-speed communication demands of intensive AI workloads and massive datasets.

In total, ABCI 3.0 will contain thousands of NVIDIA H200 Tensor Core GPUs, delivering 6 exaflops of 16-bit floating-point AI computing power.

In double-precision (FP64) floating-point performance, the traditional yardstick for supercomputers, ABCI 3.0 is rated at 410 petaflops. This is a theoretical peak: the Top500 list ranks systems by measured Linpack performance, which is normally lower than peak. If the theoretical figure were fully realized, however, it would be enough for ABCI 3.0 to surpass LUMI, which ranked fifth on the most recent Top500 list.
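As a rough consistency check on the two headline figures, the sketch below divides each aggregate by an assumed per-GPU peak to estimate how many H200 GPUs they imply. The per-GPU values (67 TFLOPS FP64 Tensor Core, about 989.5 TFLOPS dense FP16 Tensor Core) are assumptions based on NVIDIA's public H200 SXM specifications, not figures from the article, and the resulting GPU and node counts are estimates rather than a published configuration.

```python
# Back-of-the-envelope estimate: how many H200 GPUs do the quoted totals imply?
# ASSUMPTION: per-GPU peaks come from NVIDIA's public H200 SXM spec sheet,
# not from the article itself.
H200_FP64_TENSOR_TFLOPS = 67.0   # FP64 Tensor Core peak per GPU (assumed)
H200_FP16_DENSE_TFLOPS = 989.5   # FP16 Tensor Core peak per GPU, no sparsity (assumed)
GPUS_PER_NODE = 8                # stated in the article for each Cray XD node


def implied_gpus(total_flops: float, per_gpu_tflops: float) -> float:
    """Return how many GPUs at per_gpu_tflops are needed to reach total_flops."""
    return total_flops / (per_gpu_tflops * 1e12)


fp64_total = 410e15  # 410 petaflops double precision (article)
fp16_total = 6e18    # 6 exaflops of 16-bit AI performance (article)

gpus_from_fp64 = implied_gpus(fp64_total, H200_FP64_TENSOR_TFLOPS)
gpus_from_fp16 = implied_gpus(fp16_total, H200_FP16_DENSE_TFLOPS)
nodes_from_fp64 = gpus_from_fp64 / GPUS_PER_NODE

print(f"GPUs implied by the FP64 figure: {gpus_from_fp64:,.0f}")
print(f"GPUs implied by the FP16 figure: {gpus_from_fp16:,.0f}")
print(f"8-GPU nodes implied (FP64 basis): {nodes_from_fp64:,.0f}")
```

Both figures point to roughly 6,100 GPUs (about 765 eight-GPU nodes), which is consistent with the article's "thousands of GPUs". The FP16 estimate uses dense throughput; if the 6-exaflop figure instead assumed structured sparsity, the implied GPU count would be about half as large and would no longer agree with the FP64 estimate.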

NVIDIA CEO Jensen Huang said:

We will work with HPE and AIST to help Japan leverage its unique capabilities and data to increase productivity, revitalize its economy and advance scientific discovery.

The above is the detailed content of Japan's fastest AI supercomputer will be launched within the year: Based on NVIDIA H200 GPU, the AI computing power can reach 6 Exaflops. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Remember the Japanese otaku who married Hatsune Miku? The two have actually been divorced for almost 4 years

Mar 17, 2024 am 09:37 AM

Remember the Japanese otaku who married Hatsune Miku? The two have actually been divorced for almost 4 years

Mar 17, 2024 am 09:37 AM

On March 31, 2020, Hatsune Miku officially "divorced" the Japanese otaku who once spent millions to marry her. It has been almost 4 years since then. In fact, when the two got married, many people were not optimistic about the couple. After all, it was very outrageous for a person living in the third dimension to marry a paper person from the second dimension. However, in the face of the criticism from netizens, the Japanese otaku Kondo Akihiko did not back down. In the end, he held a wedding with Hatsune Miku. Judging from the photos Kondo Akihiko posted from time to time after his marriage, his life with Hatsune Miku It was quite good, but unfortunately their marriage did not last too long. As the Gatebox copyright of the first-generation Hatsune model expired, Kondo Akihiko's wife Hatsune Miku also

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

The open LLM community is an era when a hundred flowers bloom and compete. You can see Llama-3-70B-Instruct, QWen2-72B-Instruct, Nemotron-4-340B-Instruct, Mixtral-8x22BInstruct-v0.1 and many other excellent performers. Model. However, compared with proprietary large models represented by GPT-4-Turbo, open models still have significant gaps in many fields. In addition to general models, some open models that specialize in key areas have been developed, such as DeepSeek-Coder-V2 for programming and mathematics, and InternVL for visual-language tasks.

'AI Factory” will promote the reshaping of the entire software stack, and NVIDIA provides Llama3 NIM containers for users to deploy

Jun 08, 2024 pm 07:25 PM

'AI Factory” will promote the reshaping of the entire software stack, and NVIDIA provides Llama3 NIM containers for users to deploy

Jun 08, 2024 pm 07:25 PM

According to news from this site on June 2, at the ongoing Huang Renxun 2024 Taipei Computex keynote speech, Huang Renxun introduced that generative artificial intelligence will promote the reshaping of the full stack of software and demonstrated its NIM (Nvidia Inference Microservices) cloud-native microservices. Nvidia believes that the "AI factory" will set off a new industrial revolution: taking the software industry pioneered by Microsoft as an example, Huang Renxun believes that generative artificial intelligence will promote its full-stack reshaping. To facilitate the deployment of AI services by enterprises of all sizes, NVIDIA launched NIM (Nvidia Inference Microservices) cloud-native microservices in March this year. NIM+ is a suite of cloud-native microservices optimized to reduce time to market

After multiple transformations and cooperation with AI giant Nvidia, why did Vanar Chain surge 4.6 times in 30 days?

Mar 14, 2024 pm 05:31 PM

After multiple transformations and cooperation with AI giant Nvidia, why did Vanar Chain surge 4.6 times in 30 days?

Mar 14, 2024 pm 05:31 PM

Recently, Layer1 blockchain VanarChain has attracted market attention due to its high growth rate and cooperation with AI giant NVIDIA. Behind VanarChain's popularity, in addition to undergoing multiple brand transformations, popular concepts such as main games, metaverse and AI have also earned the project plenty of popularity and topics. Prior to its transformation, Vanar, formerly TerraVirtua, was founded in 2018 as a platform that supported paid subscriptions, provided virtual reality (VR) and augmented reality (AR) content, and accepted cryptocurrency payments. The platform was created by co-founders Gary Bracey and Jawad Ashraf, with Gary Bracey having extensive experience involved in video game production and development.

TrendForce: Nvidia's Blackwell platform products drive TSMC's CoWoS production capacity to increase by 150% this year

Apr 17, 2024 pm 08:00 PM

TrendForce: Nvidia's Blackwell platform products drive TSMC's CoWoS production capacity to increase by 150% this year

Apr 17, 2024 pm 08:00 PM

According to news from this site on April 17, TrendForce recently released a report, believing that demand for Nvidia's new Blackwell platform products is bullish, and is expected to drive TSMC's total CoWoS packaging production capacity to increase by more than 150% in 2024. NVIDIA Blackwell's new platform products include B-series GPUs and GB200 accelerator cards integrating NVIDIA's own GraceArm CPU. TrendForce confirms that the supply chain is currently very optimistic about GB200. It is estimated that shipments in 2025 are expected to exceed one million units, accounting for 40-50% of Nvidia's high-end GPUs. Nvidia plans to deliver products such as GB200 and B100 in the second half of the year, but upstream wafer packaging must further adopt more complex products.

The computer I spent 300 yuan to assemble successfully ran through the local large model

Apr 12, 2024 am 08:07 AM

The computer I spent 300 yuan to assemble successfully ran through the local large model

Apr 12, 2024 am 08:07 AM

If 2023 is recognized as the first year of AI, then 2024 is likely to be a key year for the popularization of large AI models. In the past year, a large number of large AI models and a large number of AI applications have emerged. Manufacturers such as Meta and Google have also begun to launch their own online/local large models to the public, similar to "AI artificial intelligence" that is out of reach. The concept suddenly came to people. Nowadays, people are increasingly exposed to artificial intelligence in their lives. If you look carefully, you will find that almost all of the various AI applications you have access to are deployed on the "cloud". If you want to build a device that can run large models locally, then the hardware is a brand-new AIPC priced at more than 5,000 yuan. For ordinary people,

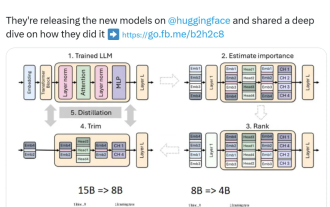

Nvidia plays with pruning and distillation: halving the parameters of Llama 3.1 8B to achieve better performance with the same size

Aug 16, 2024 pm 04:42 PM

Nvidia plays with pruning and distillation: halving the parameters of Llama 3.1 8B to achieve better performance with the same size

Aug 16, 2024 pm 04:42 PM

The rise of small models. Last month, Meta released the Llama3.1 series of models, which includes Meta’s largest model to date, the 405B model, and two smaller models with 70 billion and 8 billion parameters respectively. Llama3.1 is considered to usher in a new era of open source. However, although the new generation models are powerful in performance, they still require a large amount of computing resources when deployed. Therefore, another trend has emerged in the industry, which is to develop small language models (SLM) that perform well enough in many language tasks and are also very cheap to deploy. Recently, NVIDIA research has shown that structured weight pruning combined with knowledge distillation can gradually obtain smaller language models from an initially larger model. Turing Award Winner, Meta Chief A

Compliant with NVIDIA SFF-Ready specification, ASUS launches Prime GeForce RTX 40 series graphics cards

Jun 15, 2024 pm 04:38 PM

Compliant with NVIDIA SFF-Ready specification, ASUS launches Prime GeForce RTX 40 series graphics cards

Jun 15, 2024 pm 04:38 PM

According to news from this site on June 15, Asus has recently launched the Prime series GeForce RTX40 series "Ada" graphics card. Its size complies with Nvidia's latest SFF-Ready specification. This specification requires that the size of the graphics card does not exceed 304 mm x 151 mm x 50 mm (length x height x thickness). ). The Prime series GeForceRTX40 series launched by ASUS this time includes RTX4060Ti, RTX4070 and RTX4070SUPER, but it currently does not include RTX4070TiSUPER or RTX4080SUPER. This series of RTX40 graphics cards adopts a common circuit board design with dimensions of 269 mm x 120 mm x 50 mm. The main differences between the three graphics cards are