Aiming to completely replace closed-source drivers, NVIDIA announced a full shift to open-source GPU kernel modules

According to news from this site on July 18, NVIDIA issued a press release yesterday (July 17) announcing a full transition to open-source GPU kernel modules. Upcoming driver releases will offer more robust and complete support for GeForce and workstation GPUs on Linux, and NVIDIA plans to eventually replace the closed-source driver entirely.

NVIDIA announced in May 2022 that it would release open-source GPU kernel modules under a dual GPL/MIT license, starting with the R515 driver.

With the source available, developers can trace code paths and see how kernel event dispatch interacts with their workloads, which speeds up root-cause debugging.

Additionally, enterprise software developers can now seamlessly integrate drivers into custom Linux kernels configured for their projects.

NVIDIA stated that after more than two years of development, the open-source GPU kernel modules now deliver performance equal to or better than the closed-source driver and have gained a number of new features:

Support for heterogeneous memory management (HMM)

Confidential computing

Coherent memory architecture of the Grace platform

Supported GPUs

Note from this site: Not all GPUs are compatible with open source GPU kernel modules.

NVIDIA says that cutting-edge platforms such as Grace Hopper and Blackwell require the open-source GPU kernel modules; the proprietary driver is not supported on these platforms.

For newer GPUs based on the Turing, Ampere, Ada Lovelace or Hopper architectures, NVIDIA recommends switching to the open-source GPU kernel modules.

Older GPUs based on the Maxwell, Pascal or Volta architectures are not compatible with the open-source GPU kernel modules. Users of these GPUs should continue to use the NVIDIA proprietary driver.
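The three compatibility tiers above can be summarized as a small lookup table. The following is only an illustrative sketch in Python: the names `KernelModule` and `recommended_module` are hypothetical and are not part of any NVIDIA tool, and the mapping covers only the architectures named in this article.

```python
from enum import Enum


class KernelModule(Enum):
    """Driver flavor per GPU architecture (hypothetical helper type)."""
    OPEN_REQUIRED = "open-source kernel modules required"
    OPEN_RECOMMENDED = "open-source kernel modules recommended"
    PROPRIETARY_ONLY = "proprietary driver only"


# Mapping taken from the article text only; architectures not named there are omitted.
_SUPPORT = {
    "grace hopper": KernelModule.OPEN_REQUIRED,
    "blackwell": KernelModule.OPEN_REQUIRED,
    "turing": KernelModule.OPEN_RECOMMENDED,
    "ampere": KernelModule.OPEN_RECOMMENDED,
    "ada lovelace": KernelModule.OPEN_RECOMMENDED,
    "hopper": KernelModule.OPEN_RECOMMENDED,
    "maxwell": KernelModule.PROPRIETARY_ONLY,
    "pascal": KernelModule.PROPRIETARY_ONLY,
    "volta": KernelModule.PROPRIETARY_ONLY,
}


def recommended_module(architecture: str) -> KernelModule:
    """Return the driver flavor for an architecture name (case-insensitive)."""
    key = architecture.strip().lower()
    if key not in _SUPPORT:
        # Unknown architectures are rejected rather than guessed.
        raise ValueError(f"architecture not covered by the article: {architecture!r}")
    return _SUPPORT[key]


if __name__ == "__main__":
    for arch in ("Blackwell", "Ampere", "Pascal"):
        print(f"{arch}: {recommended_module(arch).value}")
```

Raising an error for unlisted architectures is a deliberate choice here, since the article does not say how GPUs outside these three groups are handled.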



Ten recommended open source free text annotation tools

Mar 26, 2024 pm 08:20 PM

Ten recommended open source free text annotation tools

Mar 26, 2024 pm 08:20 PM

Text annotation is the work of corresponding labels or tags to specific content in text. Its main purpose is to provide additional information to the text for deeper analysis and processing, especially in the field of artificial intelligence. Text annotation is crucial for supervised machine learning tasks in artificial intelligence applications. It is used to train AI models to help more accurately understand natural language text information and improve the performance of tasks such as text classification, sentiment analysis, and language translation. Through text annotation, we can teach AI models to recognize entities in text, understand context, and make accurate predictions when new similar data appears. This article mainly recommends some better open source text annotation tools. 1.LabelStudiohttps://github.com/Hu

15 recommended open source free image annotation tools

Mar 28, 2024 pm 01:21 PM

15 recommended open source free image annotation tools

Mar 28, 2024 pm 01:21 PM

Image annotation is the process of associating labels or descriptive information with images to give deeper meaning and explanation to the image content. This process is critical to machine learning, which helps train vision models to more accurately identify individual elements in images. By adding annotations to images, the computer can understand the semantics and context behind the images, thereby improving the ability to understand and analyze the image content. Image annotation has a wide range of applications, covering many fields, such as computer vision, natural language processing, and graph vision models. It has a wide range of applications, such as assisting vehicles in identifying obstacles on the road, and helping in the detection and diagnosis of diseases through medical image recognition. . This article mainly recommends some better open source and free image annotation tools. 1.Makesens

Recommended: Excellent JS open source face detection and recognition project

Apr 03, 2024 am 11:55 AM

Recommended: Excellent JS open source face detection and recognition project

Apr 03, 2024 am 11:55 AM

Face detection and recognition technology is already a relatively mature and widely used technology. Currently, the most widely used Internet application language is JS. Implementing face detection and recognition on the Web front-end has advantages and disadvantages compared to back-end face recognition. Advantages include reducing network interaction and real-time recognition, which greatly shortens user waiting time and improves user experience; disadvantages include: being limited by model size, the accuracy is also limited. How to use js to implement face detection on the web? In order to implement face recognition on the Web, you need to be familiar with related programming languages and technologies, such as JavaScript, HTML, CSS, WebRTC, etc. At the same time, you also need to master relevant computer vision and artificial intelligence technologies. It is worth noting that due to the design of the Web side

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

The open LLM community is an era when a hundred flowers bloom and compete. You can see Llama-3-70B-Instruct, QWen2-72B-Instruct, Nemotron-4-340B-Instruct, Mixtral-8x22BInstruct-v0.1 and many other excellent performers. Model. However, compared with proprietary large models represented by GPT-4-Turbo, open models still have significant gaps in many fields. In addition to general models, some open models that specialize in key areas have been developed, such as DeepSeek-Coder-V2 for programming and mathematics, and InternVL for visual-language tasks.

'AI Factory” will promote the reshaping of the entire software stack, and NVIDIA provides Llama3 NIM containers for users to deploy

Jun 08, 2024 pm 07:25 PM

'AI Factory” will promote the reshaping of the entire software stack, and NVIDIA provides Llama3 NIM containers for users to deploy

Jun 08, 2024 pm 07:25 PM

According to news from this site on June 2, at the ongoing Huang Renxun 2024 Taipei Computex keynote speech, Huang Renxun introduced that generative artificial intelligence will promote the reshaping of the full stack of software and demonstrated its NIM (Nvidia Inference Microservices) cloud-native microservices. Nvidia believes that the "AI factory" will set off a new industrial revolution: taking the software industry pioneered by Microsoft as an example, Huang Renxun believes that generative artificial intelligence will promote its full-stack reshaping. To facilitate the deployment of AI services by enterprises of all sizes, NVIDIA launched NIM (Nvidia Inference Microservices) cloud-native microservices in March this year. NIM+ is a suite of cloud-native microservices optimized to reduce time to market

Alibaba 7B multi-modal document understanding large model wins new SOTA

Apr 02, 2024 am 11:31 AM

Alibaba 7B multi-modal document understanding large model wins new SOTA

Apr 02, 2024 am 11:31 AM

New SOTA for multimodal document understanding capabilities! Alibaba's mPLUG team released the latest open source work mPLUG-DocOwl1.5, which proposed a series of solutions to address the four major challenges of high-resolution image text recognition, general document structure understanding, instruction following, and introduction of external knowledge. Without further ado, let’s look at the effects first. One-click recognition and conversion of charts with complex structures into Markdown format: Charts of different styles are available: More detailed text recognition and positioning can also be easily handled: Detailed explanations of document understanding can also be given: You know, "Document Understanding" is currently An important scenario for the implementation of large language models. There are many products on the market to assist document reading. Some of them mainly use OCR systems for text recognition and cooperate with LLM for text processing.

Single card running Llama 70B is faster than dual card, Microsoft forced FP6 into A100 | Open source

Apr 29, 2024 pm 04:55 PM

Single card running Llama 70B is faster than dual card, Microsoft forced FP6 into A100 | Open source

Apr 29, 2024 pm 04:55 PM

FP8 and lower floating point quantification precision are no longer the "patent" of H100! Lao Huang wanted everyone to use INT8/INT4, and the Microsoft DeepSpeed team started running FP6 on A100 without official support from NVIDIA. Test results show that the new method TC-FPx's FP6 quantization on A100 is close to or occasionally faster than INT4, and has higher accuracy than the latter. On top of this, there is also end-to-end large model support, which has been open sourced and integrated into deep learning inference frameworks such as DeepSpeed. This result also has an immediate effect on accelerating large models - under this framework, using a single card to run Llama, the throughput is 2.65 times higher than that of dual cards. one

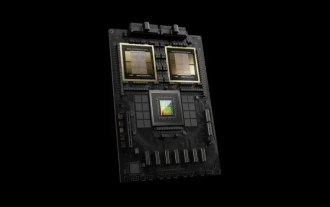

TrendForce: Nvidia's Blackwell platform products drive TSMC's CoWoS production capacity to increase by 150% this year

Apr 17, 2024 pm 08:00 PM

TrendForce: Nvidia's Blackwell platform products drive TSMC's CoWoS production capacity to increase by 150% this year

Apr 17, 2024 pm 08:00 PM

According to news from this site on April 17, TrendForce recently released a report, believing that demand for Nvidia's new Blackwell platform products is bullish, and is expected to drive TSMC's total CoWoS packaging production capacity to increase by more than 150% in 2024. NVIDIA Blackwell's new platform products include B-series GPUs and GB200 accelerator cards integrating NVIDIA's own GraceArm CPU. TrendForce confirms that the supply chain is currently very optimistic about GB200. It is estimated that shipments in 2025 are expected to exceed one million units, accounting for 40-50% of Nvidia's high-end GPUs. Nvidia plans to deliver products such as GB200 and B100 in the second half of the year, but upstream wafer packaging must further adopt more complex products.