At the top robotics conference RSS 2024, China's humanoid robot research won the best paper award

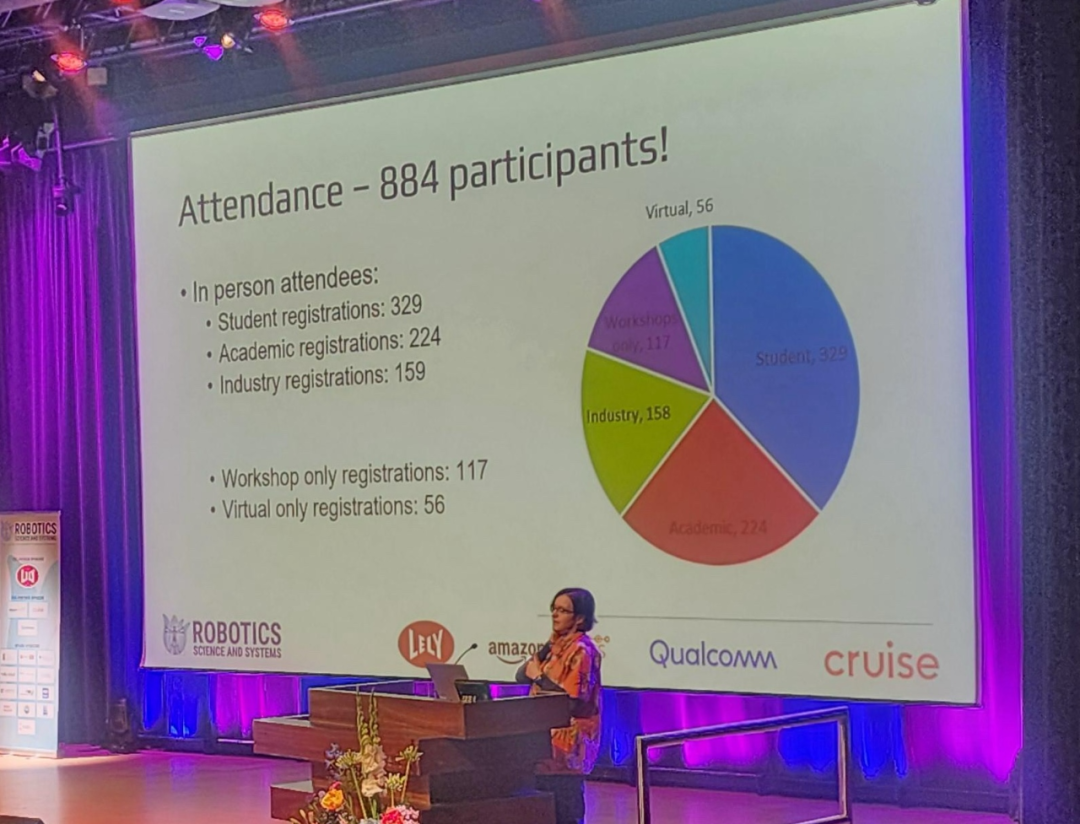

Recently, RSS (Robotics: Science and Systems) 2024, a well-known conference in the field of robotics, concluded at Delft University of Technology in the Netherlands.

Although RSS is smaller than top AI conferences such as NeurIPS and CVPR, it has grown considerably over the past few years, drawing nearly 900 attendees this year.

On the last day of the conference, the Best Paper, Best Student Paper, Best System Paper, and Best Demo Paper awards were announced. The conference also presented the Early Career Spotlight Award and the Test of Time Award.

Notably, humanoid robot research from Tsinghua University and Beijing Xingdong Era Technology Co., Ltd. won a Best Paper Award, and Chinese scholar Ji Zhang received this year's Test of Time Award.

Details of the winning papers are as follows:

Best Demo Paper Award

Paper title: Demonstrating CropFollow++: Robust Under-Canopy Navigation with Keypoints

Authors: Arun Narenthiran Sivakumar, Mateus Valverde Gasparino, Michael McGuire, Vitor Akihiro Hisano Higuti, M. Ugur Akcal, Girish Chowdhary

Institutions: UIUC, EarthSense

Paper link: https://enriquecoronadozu.github.io/rssproceedings2024/rss20/p023.pdf

In this paper, the researchers present an empirical study of a robust visual navigation system for under-canopy agricultural robots that uses semantic keypoints.

Autonomous navigation under crop canopies is challenging because of the narrow crop row spacing (~0.75 m), the reduced accuracy of RTK-GPS due to multipath errors, and noisy lidar measurements caused by heavy clutter. An earlier system, CropFollow, addressed these challenges with a learning-based visual navigation system that uses end-to-end perception. However, that approach has two limitations: it lacks an interpretable representation, and, because it has no confidence measure, it is sensitive to outlier predictions during occlusion.

CropFollow++ introduces a modular perception architecture with a learned semantic keypoint representation. Compared with CropFollow, this representation is more modular and more interpretable, and it provides a confidence measure for detecting occlusions. In challenging late-season field tests spanning about 1.9 km each, CropFollow++ significantly outperformed CropFollow, requiring 13 collisions versus 33. The authors also discuss key lessons learned from a large-scale deployment of CropFollow++ on multiple under-canopy cover-crop planting robots (25 km in total) under varying field conditions.
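To make the idea of a confidence-aware keypoint representation concrete, here is a minimal Python sketch. It is not CropFollow++'s implementation: the keypoint layout (a vanishing point plus the two row crossings at the bottom of the image), the pinhole geometry, the class and function names, and the 0.5 confidence threshold are all illustrative assumptions. The point is only that an explicit confidence lets the controller reject predictions during occlusion instead of acting on outliers.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class KeypointPrediction:
    # Hypothetical keypoint layout, in pixel x-coordinates:
    vanishing_x: float   # vanishing point of the crop rows
    left_row_x: float    # where the left row crosses the bottom image row
    right_row_x: float   # where the right row crosses the bottom image row
    confidence: float    # assumed to lie in [0, 1]


def heading_and_offset(kp: KeypointPrediction, image_width: float,
                       focal_px: float) -> Tuple[float, float]:
    """Return (heading_rad, lateral_offset) under a simple pinhole model.

    heading_rad: angle between the camera axis and the row direction.
    lateral_offset: position of the image center between the rows,
    0 when centered, -0.5 at the left row, +0.5 at the right row.
    """
    cx = image_width / 2.0
    heading = math.atan2(kp.vanishing_x - cx, focal_px)
    midpoint = (kp.left_row_x + kp.right_row_x) / 2.0
    lateral = (cx - midpoint) / (kp.right_row_x - kp.left_row_x)
    return heading, lateral


class ConfidenceGatedEstimator:
    """Holds the last trusted estimate and ignores low-confidence frames."""

    def __init__(self, threshold: float = 0.5):
        self.threshold = threshold  # assumed value, would need tuning
        self.last: Optional[Tuple[float, float]] = None

    def update(self, kp: KeypointPrediction, image_width: float, focal_px: float):
        """Return (estimate, occluded); estimate is None until a trusted frame arrives."""
        if kp.confidence < self.threshold:
            # Likely occlusion: keep the previous estimate instead of an outlier.
            return self.last, True
        self.last = heading_and_offset(kp, image_width, focal_px)
        return self.last, False
```

A downstream controller would steer on the returned heading and offset, and could slow down or stop when several consecutive frames are flagged as occluded.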

Paper title: Demonstrating Agile Flight from Pixels without State Estimation

Authors: Ismail Geles, Leonard Bauersfeld, Angel Romero, Jiaxu Xing, Davide Scaramuzza

Paper link: https://enriquecoronadozu.github.io/rssproceedings2024/rss20/p082.pdf

Quadrotors are among the most agile flying robots. Despite recent advances in learning-based control and computer vision, autonomous drones still rely on explicit state estimation. Human pilots, by contrast, rely only on the first-person video stream from the drone's onboard camera to push the platform to its limits and fly robustly in unseen environments.

This paper demonstrates the first vision-based quadrotor system that autonomously navigates through a sequence of gates at high speed while mapping pixels directly to control commands. Like professional drone racing pilots, the system does not use explicit state estimation; instead, it uses the same control commands as humans (collective thrust and body rates). The researchers demonstrate agile flight at speeds of up to 40 km/h with accelerations of up to 2 g. This is achieved by training vision-based policies with reinforcement learning (RL). Training is facilitated by an asymmetric actor-critic with access to privileged information. To overcome the computational cost of image-based RL training, the researchers use the inner edges of the gates as a sensor abstraction. This simple yet powerful task-relevant representation can be simulated during training without rendering images. At deployment, the researchers use a gate detector based on a Swin Transformer.
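One way to see why gate edges can be simulated without rendering images is that, given a known gate pose, the edges in the image follow from plain camera projection. The sketch below assumes an ideal pinhole camera, a square gate opening parallel to the image plane, camera-frame coordinates (x right, y down, z forward), and hypothetical function names; the paper's actual abstraction and camera model may differ.

```python
from typing import List, Optional, Tuple

Point3 = Tuple[float, float, float]
Pixel = Tuple[float, float]


def project(p: Point3, fx: float, fy: float, cx: float, cy: float) -> Optional[Pixel]:
    """Ideal pinhole projection of a camera-frame point; None if behind the camera."""
    x, y, z = p
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def gate_inner_corners(center: Point3, half_size: float) -> List[Point3]:
    """Corners of a square gate opening parallel to the image plane (simplifying assumption)."""
    x, y, z = center
    h = half_size
    return [(x - h, y - h, z), (x + h, y - h, z), (x + h, y + h, z), (x - h, y + h, z)]


def gate_edge_observation(center: Point3, half_size: float, fx: float, fy: float,
                          cx: float, cy: float) -> Optional[List[Tuple[Pixel, Pixel]]]:
    """Return the four inner edges as pixel segments, or None if a corner is behind the camera."""
    corners = [project(c, fx, fy, cx, cy) for c in gate_inner_corners(center, half_size)]
    if any(c is None for c in corners):
        return None
    # Consecutive corners form the edges of the opening.
    return [(corners[i], corners[(i + 1) % 4]) for i in range(4)]
```

In a training loop, these pixel segments (or a mask drawn from them) would stand in for a rendered image as the policy's observation, which is far cheaper to compute.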

The method achieves autonomous agile flight with standard, off-the-shelf hardware. Although the demonstration focuses on drone racing, the approach has implications beyond racing and can serve as a foundation for future research on real-world applications in structured environments.

Best System Paper Award

Paper title: Universal Manipulation Interface: In-The-Wild Robot Teaching Without In-The-Wild Robots

Authors: Cheng Chi, Zhenjia Xu, Chuer Pan, Eric Cousineau, Benjamin Burchfiel, Siyuan Feng, Russ Tedrake, Shuran Song

Institutions: Stanford University, Columbia University, Toyota Research Institute

Paper link: https://arxiv.org/pdf/2402.10329

This paper introduces the Universal Manipulation Interface (UMI), a data collection and policy learning framework that transfers skills demonstrated by humans in the wild directly into deployable robot policies. UMI uses hand-held grippers and careful interface design to enable portable, low-cost, and information-rich data collection for challenging bimanual and dynamic manipulation demonstrations. To facilitate deployable policy learning, UMI incorporates a carefully designed policy interface with inference-time latency matching and a relative-trajectory action representation. The learned policies are hardware-agnostic and can be deployed across multiple robot platforms. With these features, the UMI framework unlocks new robot manipulation capabilities, enabling zero-shot generalizable dynamic, bimanual, precise, and long-horizon behaviors by changing only the training data for each task. The researchers demonstrate UMI's versatility and efficacy through comprehensive real-world experiments, in which policies learned with UMI generalize zero-shot to novel environments and objects when trained on diverse human demonstrations.
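The relative-trajectory idea can be illustrated as follows: future end-effector poses are expressed in the frame of the current pose, so the policy never needs a global coordinate frame, and actions that would already be stale after the system's latency are skipped. This is a hedged sketch, not UMI's code: it uses planar (x, y, θ) poses as a stand-in for full 6-DoF poses, and all names, including the `latency_matched` helper, are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Pose2D = Tuple[float, float, float]  # (x, y, theta): planar stand-in for SE(3)


def wrap(theta: float) -> float:
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(theta), math.cos(theta))


def compose(a: Pose2D, b: Pose2D) -> Pose2D:
    """Pose composition a * b (apply b expressed in a's frame)."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    return (ax + c * bx - s * by, ay + s * bx + c * by, wrap(at + bt))


def inverse(a: Pose2D) -> Pose2D:
    """Inverse transform: rotation R^T and translation -R^T t."""
    ax, ay, at = a
    c, s = math.cos(at), math.sin(at)
    return (-(c * ax + s * ay), -(-s * ax + c * ay), wrap(-at))


def to_relative(current: Pose2D, future: Sequence[Pose2D]) -> List[Pose2D]:
    """Express future poses in the frame of the current pose."""
    inv = inverse(current)
    return [compose(inv, p) for p in future]


def to_absolute(current: Pose2D, relative: Sequence[Pose2D]) -> List[Pose2D]:
    """Map relative actions back to absolute poses for execution."""
    return [compose(current, r) for r in relative]


def latency_matched(actions: Sequence, timestamps: Sequence[float],
                    now: float, latency: float) -> list:
    """Keep only actions whose scheduled time is still reachable after the latency."""
    return [a for a, t in zip(actions, timestamps) if t >= now + latency]
```

For example, with the gripper at (1, 2) facing +y, a target at (1, 3) becomes the relative action (1, 0, 0), "one unit straight ahead", regardless of where the robot's base frame is.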

Paper title: Khronos: A Unified Approach for Spatio-Temporal Metric-Semantic SLAM in Dynamic Environments

Authors: Lukas Schmid, Marcus Abate, Yun Chang, Luca Carlone

Paper link: https://arxiv.org/pdf/2402.13817

Perceiving and understanding highly dynamic and changing environments is a key capability for robot autonomy. While considerable progress has been made in dynamic SLAM methods that accurately estimate robot poses, building dense spatio-temporal representations of the robot's environment has received far less attention. A detailed understanding of the scene and its evolution over time is crucial for a robot's long-term autonomy, and also for tasks that require long-term reasoning, such as operating effectively in environments shared with humans and other agents, which are therefore subject to both short- and long-term dynamic changes.

To address this challenge, the study defines the spatio-temporal metric-semantic SLAM (SMS) problem and proposes a framework for effectively factorizing and solving it. The researchers show that the proposed factorization suggests a natural organization of spatio-temporal perception systems, in which a fast process tracks short-term dynamics within an active temporal window, while a slow process reasons over long-term changes in the environment using a factor graph formulation. The researchers present Khronos, an efficient spatio-temporal perception method, and show that it unifies existing interpretations of short- and long-term dynamics and can construct dense spatio-temporal maps in real time.

The simulated and real-world results in the paper show that the spatio-temporal maps built by Khronos accurately reflect how 3D scenes change over time, and that Khronos outperforms baselines on multiple metrics.

Best Student Paper Award

Paper title: Dynamic On-Palm Manipulation via Controlled Sliding

Authors: William Yang, Michael Posa

Institution: University of Pennsylvania

Paper link: https://arxiv.org/pdf/2405.08731

Current research on non-prehensile robot manipulation mostly focuses on static contact, to avoid the problems that sliding can cause. However, if sliding during contact could be controlled rather than avoided, it would open up new kinds of manipulation that robots can perform.

In this paper, the researchers propose a challenging dynamic non-prehensile manipulation task that requires reasoning over a variety of hybrid contact modes. They use recent implicit-contact model predictive control (MPC) techniques to help the robot plan multi-modally and complete a variety of tasks. The paper examines in detail how to integrate simplified models for MPC with low-level tracking controllers, and how to adapt implicit-contact MPC to the demands of dynamic tasks.

Impressively, although frictional rigid-contact models are known to be inaccurate, the approach is able to react to these inaccuracies while still completing the task quickly. Moreover, the researchers did not rely on common aids such as reference trajectories or motion primitives, which further highlights the generality of the method. This is the first application of implicit-contact MPC to a dynamic manipulation task in three dimensions.

Paper title: Agile But Safe: Learning Collision-Free High-Speed Legged Locomotion

Authors: Tairan He, Chong Zhang, Wenli Xiao, Guanqi He, Changliu Liu, Guanya Shi

Institutions: CMU, ETH Zurich

Paper link: https://arxiv.org/pdf/2401.17583

When quadruped robots move through cluttered environments, they need to be both agile and safe: they must complete tasks quickly while avoiding collisions with people and obstacles. However, existing work usually focuses on only one of these aspects: either conservative controllers limited to speeds of at most 1.0 m/s for safety, or agile controllers that ignore potentially fatal collisions.

This paper proposes a control framework called Agile But Safe (ABS), which lets quadruped robots avoid obstacles and people while remaining agile, achieving collision-free locomotion.

ABS consists of two policies: an agile policy that teaches the robot to move nimbly around obstacles, and a recovery policy that teaches it to react quickly when a collision is imminent, so that it neither falls nor hits anything. The two policies complement each other.

In ABS, switching between the policies is governed by a learned control-theoretic reach-avoid value network. This network not only decides when to switch, but also provides the objective function for the recovery policy, keeping the robot safe in closed loop. This allows the robot to respond flexibly to a wide range of situations in complex environments.
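The switching rule described above can be sketched as follows. This is a simplified illustration, not the ABS implementation: `value_fn` stands in for the learned reach-avoid value network, the sign convention (a higher value means closer to an unavoidable collision) and the threshold are assumptions, and the recovery step simply picks, from a hypothetical set of candidate (speed, yaw-rate) commands, the one with the lowest value, echoing the idea that the value network supplies the recovery policy's objective.

```python
from typing import Callable, Sequence, Tuple

State = Tuple[float, ...]
Command = Tuple[float, float]  # hypothetical (forward speed m/s, yaw rate rad/s)


def choose_command(state: State,
                   agile_policy: Callable[[State], Command],
                   recovery_candidates: Sequence[Command],
                   value_fn: Callable[[State, Command], float],
                   threshold: float) -> Tuple[Command, str]:
    """Return (command, mode), where mode is "agile" or "recovery"."""
    agile_cmd = agile_policy(state)
    if value_fn(state, agile_cmd) < threshold:
        # Predicted safe enough: keep running the agile policy.
        return agile_cmd, "agile"
    # Otherwise switch to recovery: pick the command the value network rates safest.
    best = min(recovery_candidates, key=lambda cmd: value_fn(state, cmd))
    return best, "recovery"
```

With a toy value such as "commanded speed minus obstacle distance", a robot 5 m from an obstacle keeps its 3 m/s agile command, while at 1 m it switches to the candidate that stops it.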

To train these components, the researchers relied on extensive training in simulation, covering the agile policy, the reach-avoid value network, the recovery policy, and the exteroception representation network. The trained modules can be deployed directly in the real world. Using only onboard perception and computation, the robot can move quickly and safely within the ABS framework, whether indoors or in confined outdoor spaces, and whether the obstacles are static or dynamic.

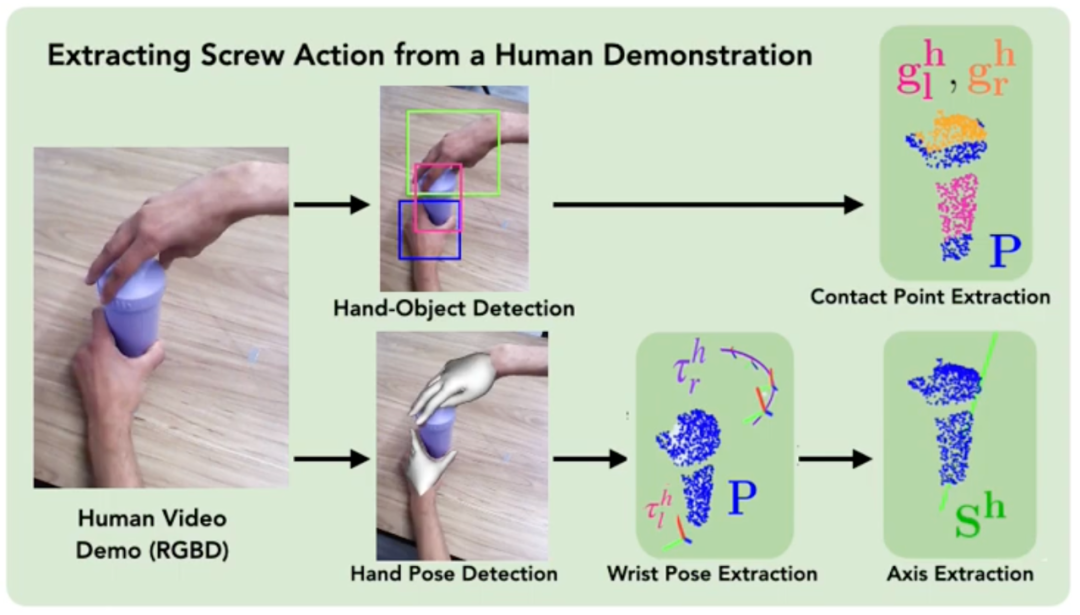

Paper title: ScrewMimic: Bimanual Imitation from Human Videos with Screw Space Projection

Authors: Arpit Bahety, Priyanka Mandikal, Ben Abbatematteo, Roberto Martín-Martín

Institution: The University of Texas at Austin

Paper link: https://arxiv.org/pdf/2405.03666

Teaching a robot to perform tasks with both hands at once, such as opening a box, is very difficult: the robot has to control many joints simultaneously while keeping the movements of the two hands coordinated. Humans, by contrast, learn new actions by observing others, trying them themselves, and improving continuously. In this paper, the researchers draw on this way of learning so that robots can acquire new skills by watching videos and then refine them through practice.

Taking inspiration from studies in psychology and biomechanics, the researchers model the interaction between the two hands as a serial kinematic chain, specifically a screw motion, and use it to define a new action space for bimanual manipulation: screw actions. Based on this, they developed ScrewMimic, a system that helps robots better interpret human demonstrations and improve their actions through self-supervision. Experiments show that ScrewMimic enables a robot to learn complex bimanual manipulation skills from a single human video demonstration, outperforming systems that interpret demonstrations and improve directly in the original action space.
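To illustrate what a screw action captures, the sketch below converts a relative rigid transform (for example, of one hand with respect to the other) into screw parameters using standard screw theory: an axis direction, a rotation angle, a displacement along the axis, and a point on the axis. It is a textbook construction, not ScrewMimic's code; the function names and the nested-list matrix format are assumptions, and the axis extraction is not reliable for rotations close to π.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def cross(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def screw_from_transform(R: Sequence[Sequence[float]], t: Sequence[float],
                         eps: float = 1e-9) -> Tuple[Vec3, float, float, Optional[Vec3]]:
    """Return (axis, angle, displacement_along_axis, point_on_axis).

    R is a 3x3 rotation matrix (nested lists), t a translation vector.
    Not reliable for angles close to pi, where sin(angle) -> 0.
    """
    trace = R[0][0] + R[1][1] + R[2][2]
    angle = math.acos(max(-1.0, min(1.0, (trace - 1.0) / 2.0)))
    if angle < eps:
        # Pure translation: no rotation axis point exists (infinite pitch).
        norm = math.sqrt(sum(x * x for x in t))
        axis = tuple(x / norm for x in t) if norm > eps else (0.0, 0.0, 1.0)
        return axis, 0.0, norm, None
    s = 2.0 * math.sin(angle)
    axis = ((R[2][1] - R[1][2]) / s, (R[0][2] - R[2][0]) / s, (R[1][0] - R[0][1]) / s)
    d = sum(a * b for a, b in zip(axis, t))            # translation along the axis
    u = tuple(ti - d * ai for ti, ai in zip(t, axis))  # component perpendicular to the axis
    w = cross(axis, u)
    k = 1.0 / math.tan(angle / 2.0)
    # Solves (I - R) q = u for the point q on the axis closest to the origin.
    point = tuple(0.5 * (ui + k * wi) for ui, wi in zip(u, w))
    return axis, angle, d, point
```

For a 90° rotation about z combined with the translation (1, 1, 0.5), this yields the axis (0, 0, 1) through the point (0, 1, 0) with 0.5 of travel along it: a compact, low-dimensional description of a motion such as twisting a lid.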

Best Paper Award

Paper title: Advancing Humanoid Locomotion: Mastering Challenging Terrains with Denoising World Model Learning

Authors: Xinyang Gu, Yen-Jen Wang, Xiang Zhu, Chengming Shi, Yanjiang Guo, Yichen Liu, Jianyu Chen

Institutions: Beijing Xingdong Era Technology Co., Ltd., Tsinghua University

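Paper link: https://enriquecoronadozu.github.io/rssproceedings2024/rss20/p058.pdf

Current techniques only allow humanoid robots to walk on simple terrain such as flat ground. Getting them to move freely in complex environments, such as real outdoor scenes, remains difficult. In this paper, the researchers propose a new method called Denoising World Model Learning (DWL).

DWL is an end-to-end reinforcement learning framework for humanoid locomotion control. It enables robots to adapt to a variety of uneven and challenging terrains, such as snow, slopes, and stairs. Notably, the robots need only a single learning process, with no additional case-specific training, to handle diverse terrain in the real world.

The research was carried out jointly by Beijing Xingdong Era Technology Co., Ltd. and Tsinghua University. Founded in 2023, Xingdong Era is a technology company incubated by the Institute for Interdisciplinary Information Sciences (IIIS) at Tsinghua University that develops embodied AI and general-purpose humanoid robot technologies and products. Its founder, Jianyu Chen, is an assistant professor and doctoral supervisor at Tsinghua IIIS. The company focuses on frontier applications of artificial general intelligence (AGI) and aims to develop highly intelligent general-purpose humanoid robots that adapt to a wide range of domains and scenarios.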

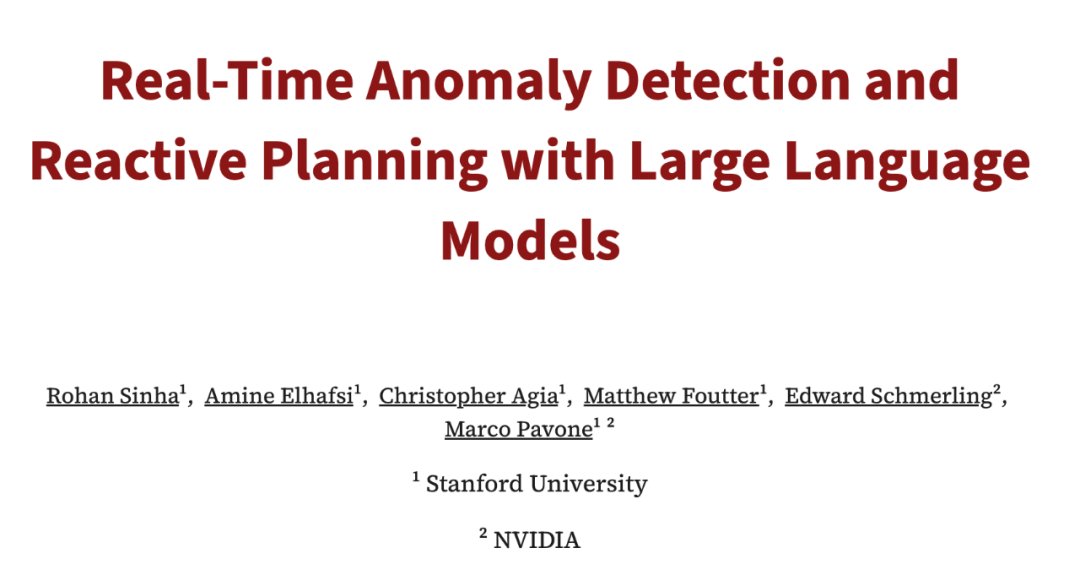

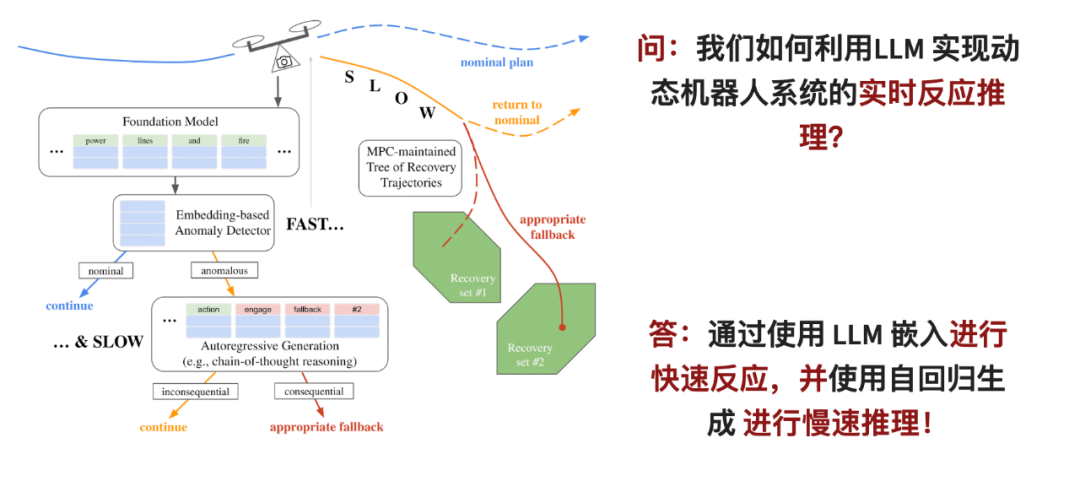

Paper title: Real-Time Anomaly Detection and Reactive Planning with Large Language Models

Authors: Rohan Sinha, Amine Elhafsi, Christopher Agia, et al.

Institution: Stanford University

Paper link: https://arxiv.org/pdf/2407.08735

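Large language models (LLMs) have zero-shot generalization capabilities, which makes them a promising technology for detecting and mitigating out-of-distribution failures in robotic systems. However, two challenges must be solved for LLMs to be truly useful here: first, LLMs require substantial computational resources to run online; second, their judgments must be integrated into the robot's safety control system.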

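In this paper, the researchers propose a two-stage reasoning framework. In the first stage, a fast anomaly detector quickly analyzes the robot's observations in an LLM embedding space. If it detects a problem, the system moves to a second, fallback-selection stage, which uses the reasoning capabilities of an LLM for deeper analysis.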
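The fast first stage can be pictured as a similarity check in an embedding space, with the expensive LLM reasoner invoked only when the check fails. The sketch below is an assumption-laden illustration rather than the authors' method: it scores an observation embedding by its maximum cosine similarity to a hypothetical cache of embeddings from nominal operation, and `slow_reasoner` is a placeholder for an LLM call.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class TwoStageMonitor:
    """Fast embedding check first; slow reasoner only on suspected anomalies."""

    def __init__(self, nominal_embeddings: List[Sequence[float]], threshold: float,
                 slow_reasoner: Callable[[str], str]):
        self.nominal = nominal_embeddings   # embeddings of previously seen nominal observations
        self.threshold = threshold          # anomaly-score threshold (assumed; would be calibrated)
        self.slow_reasoner = slow_reasoner  # placeholder for an LLM query

    def anomaly_score(self, embedding: Sequence[float]) -> float:
        # 1 - (best similarity to any nominal example): higher means more unusual.
        return 1.0 - max(cosine_similarity(embedding, n) for n in self.nominal)

    def step(self, embedding: Sequence[float], description: str) -> Tuple[str, Optional[str]]:
        if self.anomaly_score(embedding) <= self.threshold:
            return "nominal", None
        # Escalate: the slow stage decides, e.g., which fallback plan to commit to.
        return "anomaly", self.slow_reasoner(description)
```

Because the first stage is only a handful of dot products per step, it can run at control rate, while the slow reasoner's latency is absorbed by keeping fallback plans available in the planner.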

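The transition between these stages corresponds to a branch point in a model predictive control (MPC) strategy that simultaneously tracks and evaluates multiple fallback plans, which accounts for the latency of the slow reasoner. As soon as the system detects an anomaly, this strategy takes effect to keep the robot's actions safe.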

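The paper's fast anomaly classifier outperforms autoregressive reasoning with state-of-the-art GPT models, even when it uses relatively small language models. This allows the proposed real-time monitor to improve the reliability of dynamic robotic systems, such as quadrotors and autonomous vehicles, under limited resources and time.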

Paper title: Configuration Space Distance Fields for Manipulation Planning

Authors: Yiming Li et al.

Institutions: Idiap Research Institute (Switzerland), EPFL (Switzerland), Zhejiang University

Paper link: https://arxiv.org/pdf/2406.01137

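Signed distance fields (SDFs) are a popular implicit shape representation in robotics. They provide geometric information about objects and obstacles and can easily be combined with control, optimization, and learning techniques. SDFs are usually used to represent distances in task space, which corresponds to the familiar notion of distance that humans perceive in the 3D world.

However, SDFs can also be defined in other spaces, including a robot's configuration space, which for a manipulator corresponds to the angles of its joints. Researchers usually know which regions of the joint-angle space are safe, that is, which configurations the joints can reach without a collision, but they rarely express these safe regions in the form of a distance field.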

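In this paper, the researchers demonstrate the potential of using SDFs in the robot's configuration space for optimization, which they call the configuration space distance field (CDF). Like an SDF in task space, a CDF provides efficient joint-angle distance queries and direct access to derivatives (joint angular velocities). Robot planning is usually split into two steps: first evaluate distances in task space, then compute the joint motions with inverse kinematics. CDF merges these steps and solves the problem directly in the robot's joint space, which is simpler and more efficient. The researchers also propose an efficient algorithm for computing and fusing CDFs that generalizes to arbitrary scenes.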
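A brute-force way to see what a configuration space distance field is: for a planar two-link arm and a point obstacle, collect the joint configurations in which the arm touches the obstacle, then define the CDF at any configuration as the joint-space distance to the nearest of those contact configurations. This is only a sketch under stated assumptions (unit link lengths, a grid of joint angles, a contact tolerance, Euclidean joint distance without angle wrap-around, hypothetical names); the paper's efficient computation and fusion algorithm and its neural MLP representation are not reproduced here.

```python
import math
from typing import List, Tuple

Config = Tuple[float, float]  # (q1, q2) joint angles in radians


def point_segment_distance(p, a, b) -> float:
    """Euclidean distance from point p to segment ab (2D)."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length_sq = dx * dx + dy * dy
    t = 0.0 if length_sq == 0.0 else ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def task_space_distance(q: Config, obstacle, l1: float = 1.0, l2: float = 1.0) -> float:
    """Distance from the obstacle point to the closest point on either link."""
    q1, q2 = q
    elbow = (l1 * math.cos(q1), l1 * math.sin(q1))
    tip = (elbow[0] + l2 * math.cos(q1 + q2), elbow[1] + l2 * math.sin(q1 + q2))
    return min(point_segment_distance(obstacle, (0.0, 0.0), elbow),
               point_segment_distance(obstacle, elbow, tip))


def contact_set(obstacle, resolution: int = 120, tol: float = 0.03) -> List[Config]:
    """Grid configurations whose arm passes within tol of the obstacle (the CDF's zero level set)."""
    step = 2.0 * math.pi / resolution
    grid = [-math.pi + i * step for i in range(resolution)]
    return [(q1, q2) for q1 in grid for q2 in grid
            if task_space_distance((q1, q2), obstacle) < tol]


def cdf(q: Config, zero_set: List[Config]) -> float:
    """Joint-space distance from q to the nearest contact configuration."""
    return min(math.dist(q, z) for z in zero_set)
```

Unlike a task-space SDF, the value returned by `cdf` is measured directly in radians of joint motion, which is what makes it usable for planning in joint space without an inverse kinematics step.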

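They also present a corresponding neural CDF representation based on multilayer perceptrons (MLPs), which yields a compact, continuous representation and improves computational efficiency. The paper demonstrates CDF with concrete examples, such as obstacle avoidance in the plane and motion-planning tasks with a 7-axis Franka robot, all of which illustrate its effectiveness.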

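In addition, the conference presented the Early Career Spotlight Award. This year's recipient is Stefan Leutenegger, whose research focuses on robot navigation in potentially unknown environments.

Stefan Leutenegger is an assistant professor (tenure track) at the School of Computation, Information and Technology (CIT) of the Technical University of Munich (TUM), and is affiliated with the Munich Institute of Robotics and Machine Intelligence (MIRMI), the Munich Data Science Institute (MDSI), and the Munich Center for Machine Learning (MCML). He was previously a member of the Dyson Robotics Lab. He leads the Smart Robotics Lab (SRL), which works at the intersection of perception, mobile robotics, drones, and machine learning. Stefan is also a visiting lecturer in the Department of Computing at Imperial College London.

He co-founded SLAMcore, a spin-off company aiming to commercialize localization and mapping solutions for robots and drones. Stefan received his bachelor's and master's degrees in mechanical engineering from ETH Zurich, and earned his PhD in 2014 with a dissertation titled "Unmanned Solar Airplanes: Design and Algorithms for Efficient and Robust Autonomous Operation".

Test of Time Award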

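The RSS Test of Time Award honors the most influential papers (and possibly their journal versions) published at RSS at least ten years earlier. Influence can be understood in several ways: for example, changing how people think about a problem or about robot design, bringing new problems to the community's attention, or pioneering new approaches to robot design or problem solving.

With this award, RSS hopes to encourage discussion of the field's long-term development. This year's Test of Time Award went to Ji Zhang and Sanjiv Singh for "LOAM: Lidar Odometry and Mapping in Real-time".

Paper link: https://www.ri.cmu.edu/pub_files/2014/7/Ji_LidarMapping_RSS2014_v8.pdf

This paper, published ten years ago, proposes a real-time method for odometry and mapping using range measurements from a 2-axis lidar moving in 6-DOF. The problem is hard because the range measurements are received at different times, and errors in motion estimation cause misregistration of the resulting point cloud. Coherent 3D maps can be built by offline batch methods, often using loop closure to correct drift over time. The proposed method, in contrast, achieves both low drift and low computational complexity without requiring high-accuracy ranging or inertial measurements. The key to this level of performance is dividing the complex problem of simultaneous localization and mapping, which seeks to optimize a large number of variables simultaneously, into two algorithms. One algorithm performs odometry at a high frequency but low fidelity to estimate the velocity of the lidar; the other runs at a frequency an order of magnitude lower for fine matching and registration of the point cloud. Combining the two algorithms allows the method to map in real time. The researchers evaluated the method through extensive experiments and on the KITTI odometry benchmark, and the results show that it achieves accuracy at the level of state-of-the-art offline batch methods.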
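The two-rate structure can be illustrated with a toy scheduler: a fast odometry stage integrates small, slightly biased motion increments every scan, and a slow mapping stage, run ten times less often, replaces the accumulated estimate with a refined pose. This is a hedged sketch, not LOAM: it uses 2D translations only, the 10:1 rate ratio is an assumption standing in for "an order of magnitude", and the refined pose is simply handed in rather than computed by registering the point cloud against a map.

```python
from dataclasses import dataclass
from typing import Tuple

Vec2 = Tuple[float, float]


@dataclass
class TwoRateEstimator:
    mapping_every: int = 10             # slow stage runs once per this many fast steps (assumed)
    map_pose: Vec2 = (0.0, 0.0)         # last refined pose from the slow mapping stage
    odom_since_map: Vec2 = (0.0, 0.0)   # motion accumulated by fast odometry since then
    steps: int = 0

    def odometry_update(self, delta: Vec2) -> None:
        """Fast, low-fidelity stage: integrate one scan-to-scan motion estimate."""
        self.odom_since_map = (self.odom_since_map[0] + delta[0],
                               self.odom_since_map[1] + delta[1])
        self.steps += 1

    def mapping_due(self) -> bool:
        return self.steps % self.mapping_every == 0

    def mapping_update(self, refined_pose: Vec2) -> None:
        """Slow, high-fidelity stage: discard accumulated drift using a refined pose."""
        self.map_pose = refined_pose
        self.odom_since_map = (0.0, 0.0)

    def pose(self) -> Vec2:
        """Real-time output: last refined pose plus fast odometry since then."""
        return (self.map_pose[0] + self.odom_since_map[0],
                self.map_pose[1] + self.odom_since_map[1])
```

If odometry overestimates each 1 m step as 1.1 m, the output after 15 steps is 15.5 m instead of 16.5 m, because the drift from the first 10 steps was removed by the mapping update; the pose stays available at the fast rate throughout.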

For more information about the conference and awards, see the official website: https://roboticsconference.org/
