NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length raised to 128K

Jul 26, 2024 08:40 AM


The open LLM community is in an era where a hundred flowers bloom and contend: Llama-3-70B-Instruct, Qwen2-72B-Instruct, Nemotron-4-340B-Instruct, Mixtral-8x22B-Instruct-v0.1 and many other high-performing models are available. However, compared with proprietary large models represented by GPT-4-Turbo, open models still lag significantly in many areas.

Beyond general-purpose models, some open models specializing in key areas have also been developed, such as DeepSeek-Coder-V2 for programming and mathematics and InternVL 1.5 for vision-language tasks (which is comparable to GPT-4-Turbo-2024-04-09 in some areas).

As the "shovel king" of the AI gold rush, NVIDIA is also contributing to open models, for example with its ChatQA series. See this site's earlier report "NVIDIA's new dialogue QA model is more accurate than GPT-4, but was criticized: without weights and code, it means little". Earlier this year, NVIDIA released ChatQA 1.5, which integrates retrieval-augmented generation (RAG) and outperforms GPT-4 on conversational question answering.

Now, ChatQA has evolved to version 2.0. The main direction of improvement this time is to expand the context window.

Paper title: ChatQA 2: Bridging the Gap to Proprietary LLMs in Long Context and RAG Capabilities

Paper address: https://arxiv.org/pdf/2407.14482

Recently, extending the context window of LLMs has become a major research and development hotspot. For example, this site once reported "Directly expand to infinite length, Google Infini-Transformer ends the context length debate".

All leading proprietary LLMs support very large context windows: you can feed them hundreds of pages of text in a single prompt. For example, GPT-4 Turbo and Claude 3.5 Sonnet have context windows of 128K and 200K tokens, respectively. Gemini 1.5 Pro can even support a context of up to 10M tokens, which is remarkable.

Open-source large models are also catching up. For example, Qwen2-72B-Instruct and Yi-34B support 128K and 200K context windows, respectively. However, the training data and technical details of these models are not public, which makes them difficult to reproduce. In addition, these models are mostly evaluated on synthetic tasks, which do not accurately represent performance on real downstream tasks. Indeed, multiple studies have shown that open LLMs still lag significantly behind leading proprietary models on real-world long context understanding tasks.

The NVIDIA team has now brought the open Llama-3 up to the level of the proprietary GPT-4 Turbo on real-world long context understanding tasks.

In the LLM community, long context capability is sometimes viewed as a technology that competes with RAG. In practice, however, the two can complement each other.

For an LLM with a long context window, depending on the downstream task and the trade-off between accuracy and efficiency, one can either place a large amount of text directly in the prompt or use retrieval to efficiently extract the relevant information from a large corpus. RAG has a clear efficiency advantage: for query-based tasks it can easily retrieve relevant information from billions of tokens, which a long context model cannot do. Long context models, on the other hand, excel at tasks such as document summarization, where RAG may fall short.

An advanced LLM therefore needs both capabilities, so that the appropriate one can be chosen according to the downstream task and its accuracy and efficiency requirements.

NVIDIA's previously open-sourced ChatQA 1.5 model could already outperform GPT-4-Turbo on RAG tasks. But the team didn't stop there: they have now open-sourced ChatQA 2, which adds long context understanding comparable to GPT-4-Turbo.

Specifically, starting from Llama-3, they extended the context window to 128K (on par with GPT-4-Turbo) and paired the model with the best long context retriever currently available.

Expand the context window to 128K

So how did NVIDIA extend Llama-3's context window from 8K to 128K? First, they prepared a long context pre-training corpus from SlimPajama, following the method in "Data engineering for scaling language models to 128k context" by Fu et al. (2024).

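They also made an interesting discovery during training: separating different documents with special characters such as "<s>" works better than using the original beginning and ending tokens <BOS> and <EOS>. They speculate that the reason is that the <BOS> and <EOS> tokens in Llama-3 signal the model to ignore previous chunks of text after pre-training, which does not help the LLM adapt to longer context inputs.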
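The document-separation detail above can be illustrated with a minimal packing sketch. It assumes documents are already tokenized (toy string tokens here), uses "<s>" as the separator string, and drops leftover tokens that do not fill a whole sequence; these are illustrative choices, not the team's actual pipeline.

```python
from typing import Iterable, List

# Illustrative separator string; an assumption, not a token ID from the paper.
SEPARATOR = "<s>"


def pack_documents(docs: Iterable[List[str]], seq_len: int,
                   separator: str = SEPARATOR) -> List[List[str]]:
    """Join tokenized documents with a separator token between them, then
    cut the combined stream into fixed-length training sequences."""
    if seq_len <= 0:
        raise ValueError("seq_len must be positive")
    stream: List[str] = []
    for i, doc in enumerate(docs):
        if i > 0:
            stream.append(separator)  # mark the boundary between documents
        stream.extend(doc)
    # Keep only full sequences; the trailing remainder is dropped.
    n_full = len(stream) // seq_len
    return [stream[k * seq_len:(k + 1) * seq_len] for k in range(n_full)]


docs = [["a", "b", "c"], ["d", "e"], ["f", "g", "h", "i"]]
print(pack_documents(docs, seq_len=4))
# [['a', 'b', 'c', '<s>'], ['d', 'e', '<s>', 'f']]
```

Because a sequence can span a document boundary, the separator is what tells the model where one document ends and the next begins.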

Using long context data for instruction fine-tuning

The team also designed an instruction fine-tuning method that can simultaneously improve the model's long context understanding capabilities and RAG performance.

Specifically, this instruction fine-tuning method has three stages. The first two are the same as in ChatQA 1.5: the model is first trained on a high-quality instruction-following dataset of 128K samples, and then on a mixture of conversational QA data with provided context. However, the contexts in both stages are relatively short, with a maximum sequence length of 4K tokens. To extend the model's context window to 128K tokens, the team collected a long supervised fine-tuning (SFT) dataset.

The long SFT data was collected in two ways:

1. For SFT sequences shorter than 32K: they used existing long context datasets, namely LongAlpaca12k, GPT-4 samples from Open Orca, and Long Data Collections.

2. For sequences between 32K and 128K: because such SFT samples are hard to collect, they built a synthetic dataset from NarrativeQA, which contains both ground-truth summaries and semantically related paragraphs. They assembled all the related paragraphs and randomly inserted the ground-truth summary among them, simulating a real long document for each set of question-answer pairs.
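The synthetic construction in item 2 can be sketched as follows. This is a hypothetical helper (`build_synthetic_long_doc` is not from the paper); it assumes paragraphs keep their given order and are joined with blank lines, and it skips the 32K to 128K length filtering a real pipeline would need.

```python
import random
from typing import Dict, List


def build_synthetic_long_doc(related_paragraphs: List[str], summary: str,
                             qa_pairs: List[Dict[str, str]],
                             rng: random.Random) -> Dict[str, object]:
    """Concatenate a story's related paragraphs into one long document and
    insert the ground-truth summary at a random paragraph boundary."""
    paragraphs = list(related_paragraphs)      # copy so the input is untouched
    pos = rng.randint(0, len(paragraphs))      # inclusive: start through end
    paragraphs.insert(pos, summary)
    return {
        "context": "\n\n".join(paragraphs),
        "qa_pairs": qa_pairs,                  # QA pairs answerable from the summary
        "summary_position": pos,
    }


sample = build_synthetic_long_doc(
    ["Paragraph one.", "Paragraph two.", "Paragraph three."],
    "SUMMARY: the hero returns home.",
    [{"question": "Where does the hero go?", "answer": "Home."}],
    random.Random(0),
)
print(sample["summary_position"], len(sample["context"].split("\n\n")))
```

Randomizing the summary's position keeps the model from learning that the answer always sits at the start or end of the context.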

Finally, in the third stage, the full long SFT dataset is combined with the short SFT data from the first two stages for training, with a learning rate of 3e-5 and a batch size of 32.
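A minimal sketch of the third-stage data mixing, assuming the two sources are simply concatenated and uniformly shuffled (the article gives no mixing ratio). Only the learning rate and batch size come from the text; `Stage3Config` and `make_stage3_batches` are hypothetical names, and no actual training step is shown.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence, TypeVar

T = TypeVar("T")


@dataclass(frozen=True)
class Stage3Config:
    learning_rate: float = 3e-5  # reported in the article
    batch_size: int = 32         # reported in the article


def make_stage3_batches(long_sft: Sequence[T], short_sft: Sequence[T],
                        cfg: Stage3Config, seed: int = 0) -> List[List[T]]:
    """Merge the long SFT set with the short SFT data from stages 1 and 2,
    shuffle with a fixed seed, and split into batches of cfg.batch_size.
    Uniform mixing is an assumption; the final batch may be smaller."""
    mixed = list(long_sft) + list(short_sft)
    random.Random(seed).shuffle(mixed)
    return [mixed[i:i + cfg.batch_size]
            for i in range(0, len(mixed), cfg.batch_size)]


batches = make_stage3_batches(range(40), range(40, 70), Stage3Config())
print([len(b) for b in batches])  # [32, 32, 6]
```

Mixing the short data back in is what lets the model keep its short-context skills while it learns from long sequences.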

Long context retriever meets long context LLM

The RAG pipelines currently used with LLMs have some problems:

1. Top-k chunk-wise retrieval introduces non-negligible fragmentation of context, which hurts the generation of accurate answers. For example, previous state-of-the-art dense embedding-based retrievers supported only 512 tokens.

2. A small top-k (such as 5 or 10) leads to relatively low recall, while a large top-k (such as 100) leads to poor generation because earlier LLMs could not make good use of so many chunked contexts.

To address these problems, the team proposes using a recent long context retriever that supports thousands of tokens. Specifically, they chose the E5-mistral embedding model as the retriever.

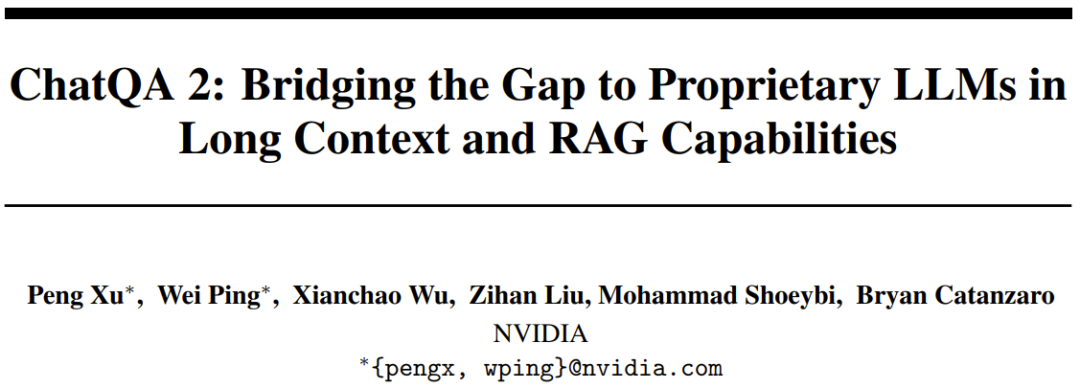

Table 1 compares top-k retrieval with different chunk sizes and different total numbers of tokens in the context window.

Varying the total number of tokens from 3000 to 12000, the team found that more tokens gave better results, confirming the new model's strong long context capability. They also found that a total of 6000 tokens offers a good trade-off between cost and performance. With the total fixed at 6000 tokens, larger chunks gave better results. Their default setting is therefore a chunk size of 1200 tokens with the top-5 chunks (1200 × 5 = 6000 tokens).
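The chunk-size and top-k trade-off can be sketched as a toy retriever. The total retrieved context is chunk size × k, so the default 1200-token chunks with top-5 give 6000 tokens. The bag-of-words cosine score below is an assumption standing in for E5-mistral embeddings, and tokens are plain strings rather than real tokenizer output.

```python
import math
from collections import Counter
from typing import List, Tuple


def chunk_tokens(tokens: List[str], chunk_size: int) -> List[List[str]]:
    """Split a token list into consecutive chunks of at most chunk_size tokens."""
    return [tokens[i:i + chunk_size] for i in range(0, len(tokens), chunk_size)]


def embed(tokens: List[str]) -> Counter:
    """Toy bag-of-words vector; a stand-in for a dense embedding model
    such as E5-mistral, which this sketch does not call."""
    return Counter(tokens)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: List[str], doc_tokens: List[str],
             chunk_size: int = 1200, top_k: int = 5) -> List[Tuple[int, float]]:
    """Score every chunk against the query and return (chunk_index, score)
    for the top_k chunks, best first. Defaults give a 1200 * 5 = 6000-token budget."""
    q = embed(query)
    chunks = chunk_tokens(doc_tokens, chunk_size)
    scored = [(i, cosine(q, embed(c))) for i, c in enumerate(chunks)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


doc = ("apple " * 3 + "banana " * 3 + "cherry " * 3).split()
print(retrieve(["banana"], doc, chunk_size=3, top_k=1))  # [(1, 1.0)]
```

Raising `chunk_size` while lowering `top_k` keeps the token budget fixed but hands the generator fewer, less fragmented passages, which matches the direction the team found helpful at 6000 tokens.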

Experiments

Evaluation benchmarks

In order to conduct a comprehensive evaluation and analyze different context lengths, the team used three types of evaluation benchmarks:

1. Long context benchmarks: more than 100K tokens;

2. Medium-long context benchmarks: within 32K tokens;

3. Short context benchmarks: within 4K tokens.

Wherever a downstream task can be run with RAG, the RAG setting is evaluated as well.

Results

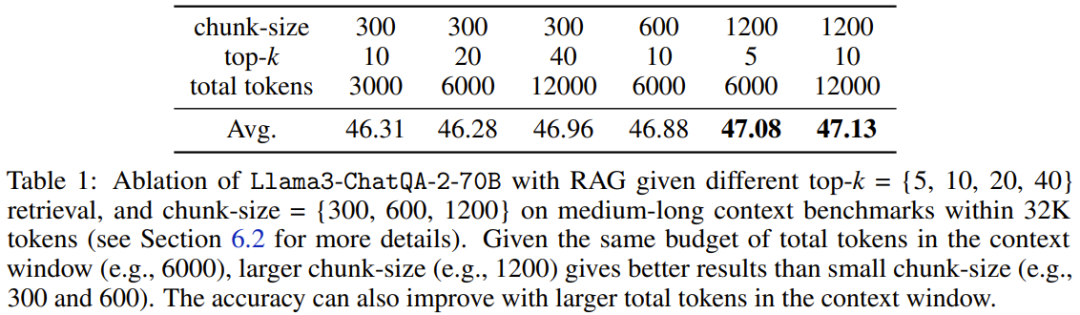

The team first conducted a Needle in a Haystack test based on synthetic data, and then tested the model’s real-world long context understanding and RAG capabilities.

1. Needle in a haystack test

Can Llama3-ChatQA-2-70B find the target needle in a sea of text? This synthetic task is commonly used to test an LLM's long context ability and can be regarded as a threshold test that a long context LLM should pass. Figure 1 shows the new model's performance with up to 128K tokens: its accuracy reaches 100%, confirming that it has perfect long context retrieval capability.
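For readers unfamiliar with the test, here is a minimal sketch of how a needle-in-a-haystack grid is typically built and scored. The filler text, needle, substring-match scoring and the `toy_model` stand-in are all hypothetical; lengths are counted in sentences rather than tokens.

```python
from typing import Callable, List


def insert_needle(filler: List[str], needle: str, depth: float) -> List[str]:
    """Place the needle sentence at a relative depth: 0.0 = start, 1.0 = end."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be in [0, 1]")
    pos = round(depth * len(filler))
    return filler[:pos] + [needle] + filler[pos:]


def needle_accuracy(model: Callable[[str], str], filler_sentence: str,
                    needle: str, question: str, expected: str,
                    lengths: List[int], depths: List[float]) -> float:
    """Fraction of (length, depth) cells whose answer contains `expected`."""
    hits = total = 0
    for n in lengths:
        for d in depths:
            haystack = insert_needle([filler_sentence] * n, needle, d)
            prompt = " ".join(haystack) + "\nQuestion: " + question
            hits += int(expected.lower() in model(prompt).lower())
            total += 1
    return hits / total if total else 0.0


# Hypothetical stand-in "model" that simply searches the prompt.
def toy_model(prompt: str) -> str:
    return "7" if "The magic number is 7." in prompt else "unknown"


acc = needle_accuracy(toy_model, "The sky is blue.", "The magic number is 7.",
                      "What is the magic number?", "7",
                      lengths=[10, 100, 1000], depths=[0.0, 0.25, 0.5, 0.75, 1.0])
print(acc)  # 1.0
```

A real evaluation swaps `toy_model` for an LLM call and sweeps context lengths up to 128K tokens; 100% accuracy means every cell in the grid was answered correctly.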

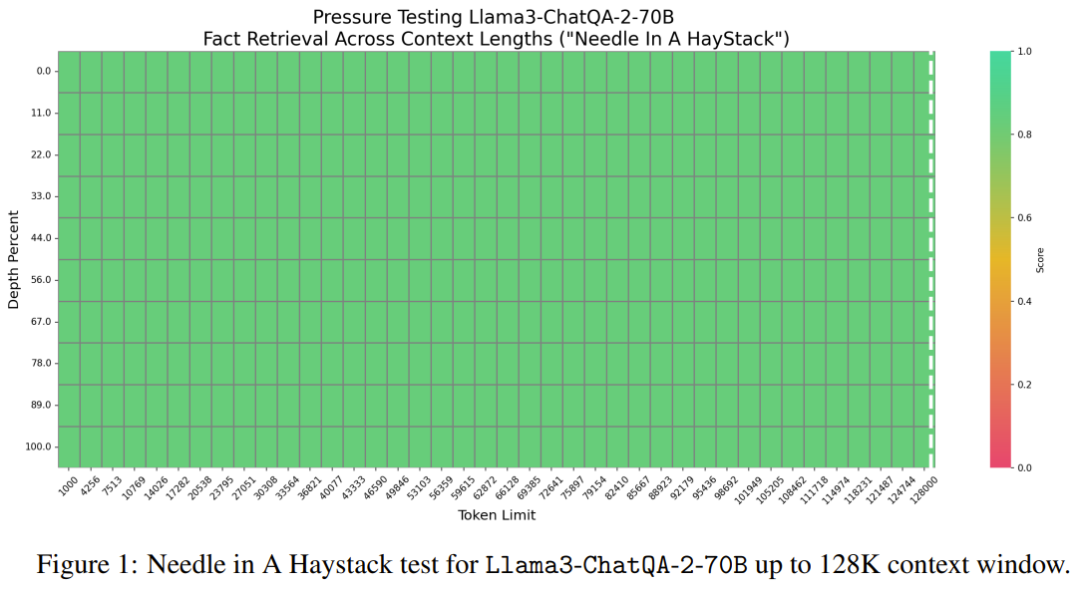

2. Long context evaluation over 100K tokens

On real-world tasks from InfiniteBench, the team evaluated the model’s performance when the context length exceeded 100K tokens. The results are shown in Table 2.

The new model outperforms many current top models, such as GPT-4-Turbo-2024-04-09 (33.16), GPT-4-1106-preview (28.23), Llama-3-70B-Instruct-Gradient-262k (32.57) and Claude 2 (33.96). Its score is also very close to the highest score, 34.88, obtained by Qwen2-72B-Instruct. Overall, NVIDIA's new model is quite competitive.

3. Medium-long context evaluation within 32K tokens

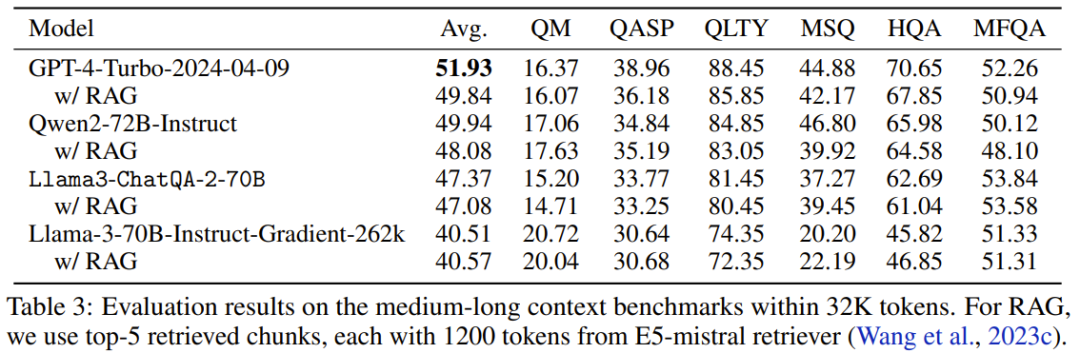

Table 3 shows the performance of each model when the number of tokens in the context is within 32K.

GPT-4-Turbo-2024-04-09 has the highest score, 51.93. The new model scores 47.37, higher than Llama-3-70B-Instruct-Gradient-262k but lower than Qwen2-72B-Instruct. The reason may be that Qwen2-72B-Instruct's pre-training makes heavy use of 32K-token sequences, while the team's continued pre-training corpus is much smaller. Furthermore, all RAG solutions performed worse than the long context solutions, indicating that all of these state-of-the-art long context LLMs can handle 32K tokens within their context windows.

4. ChatRAG Bench: Short context evaluation with the number of tokens less than 4K

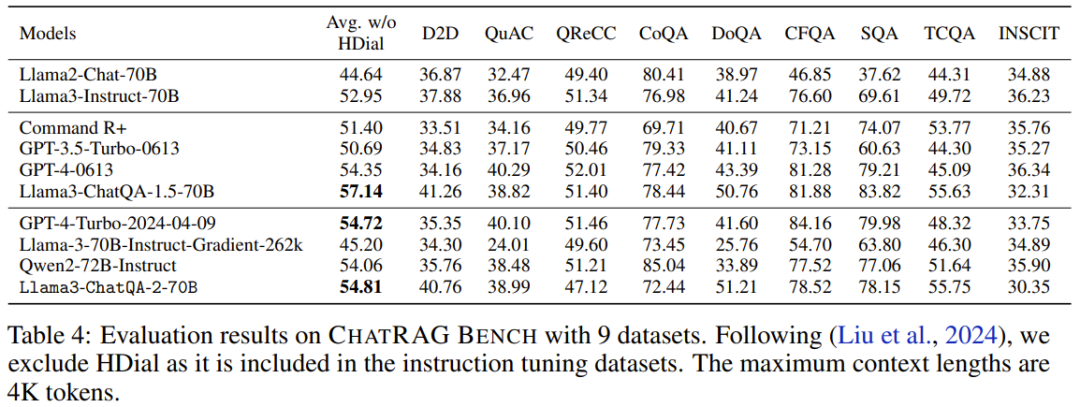

On ChatRAG Bench, the team evaluated the performance of the model when the context length is less than 4K tokens, see Table 4.

The new model's average score is 54.81. Although this is lower than Llama3-ChatQA-1.5-70B, it is still better than GPT-4-Turbo-2024-04-09 and Qwen2-72B-Instruct. This illustrates that extending a short context model to long contexts comes at a cost, and it points to a research direction worth exploring: how can the context window be extended further without hurting performance on short context tasks?

5. Comparing RAG with long context

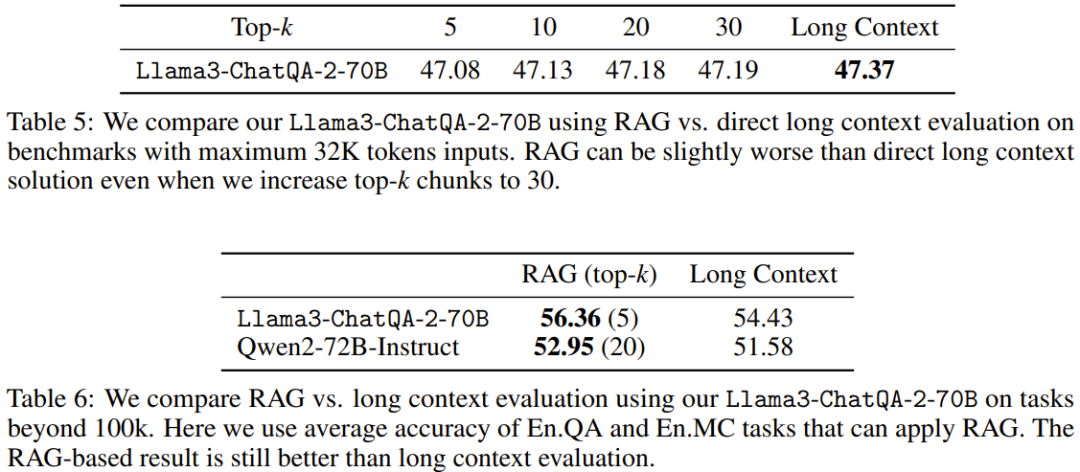

Tables 5 and 6 compare RAG with the long context solution at different context lengths. For sequence lengths above 100K, only the average scores on En.QA and En.MC are reported, because a RAG setting is not directly applicable to En.Sum and En.Dia.

When the downstream task's sequence length is below 32K, the new long context solution outperforms RAG. In other words, RAG saves cost but sacrifices accuracy.

On the other hand, when the context length exceeds 100K, RAG (top-5 for Llama3-ChatQA-2-70B and top-20 for Qwen2-72B-Instruct) outperforms the long context solution. This suggests that beyond 128K tokens, even the best current long context LLMs may struggle to understand and reason effectively. In this scenario, the team recommends using RAG whenever possible, since it delivers both higher accuracy and lower inference cost.
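The recommendation above can be condensed into a rule of thumb. This sketch assumes "K" means 1,024 tokens and only encodes the two regimes the article reports on; the 32K to 100K band is left undecided because no result is given for it.

```python
K = 1024  # assumption: "32K" / "100K" read as multiples of 1,024 tokens


def choose_strategy(n_tokens: int) -> str:
    """Rule of thumb distilled from the reported comparison:
    long context wins below 32K tokens, RAG wins above 100K tokens."""
    if n_tokens < 32 * K:
        return "long_context"   # input fits comfortably and beats RAG on accuracy
    if n_tokens > 100 * K:
        return "rag"            # retrieval is both more accurate and cheaper here
    return "undetermined"       # the article reports no verdict for this band


for n in (16_000, 60_000, 300_000):
    print(n, choose_strategy(n))
```

A production router would also weigh the task type, since the article notes that summarization-style tasks suit long context models better than retrieval.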

The above is the detailed content of NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Breaking through the boundaries of traditional defect detection, 'Defect Spectrum' achieves ultra-high-precision and rich semantic industrial defect detection for the first time.

Jul 26, 2024 pm 05:38 PM

Breaking through the boundaries of traditional defect detection, 'Defect Spectrum' achieves ultra-high-precision and rich semantic industrial defect detection for the first time.

Jul 26, 2024 pm 05:38 PM

In modern manufacturing, accurate defect detection is not only the key to ensuring product quality, but also the core of improving production efficiency. However, existing defect detection datasets often lack the accuracy and semantic richness required for practical applications, resulting in models unable to identify specific defect categories or locations. In order to solve this problem, a top research team composed of Hong Kong University of Science and Technology Guangzhou and Simou Technology innovatively developed the "DefectSpectrum" data set, which provides detailed and semantically rich large-scale annotation of industrial defects. As shown in Table 1, compared with other industrial data sets, the "DefectSpectrum" data set provides the most defect annotations (5438 defect samples) and the most detailed defect classification (125 defect categories

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

The open LLM community is an era when a hundred flowers bloom and compete. You can see Llama-3-70B-Instruct, QWen2-72B-Instruct, Nemotron-4-340B-Instruct, Mixtral-8x22BInstruct-v0.1 and many other excellent performers. Model. However, compared with proprietary large models represented by GPT-4-Turbo, open models still have significant gaps in many fields. In addition to general models, some open models that specialize in key areas have been developed, such as DeepSeek-Coder-V2 for programming and mathematics, and InternVL for visual-language tasks.

Training with millions of crystal data to solve the crystallographic phase problem, the deep learning method PhAI is published in Science

Aug 08, 2024 pm 09:22 PM

Training with millions of crystal data to solve the crystallographic phase problem, the deep learning method PhAI is published in Science

Aug 08, 2024 pm 09:22 PM

Editor |KX To this day, the structural detail and precision determined by crystallography, from simple metals to large membrane proteins, are unmatched by any other method. However, the biggest challenge, the so-called phase problem, remains retrieving phase information from experimentally determined amplitudes. Researchers at the University of Copenhagen in Denmark have developed a deep learning method called PhAI to solve crystal phase problems. A deep learning neural network trained using millions of artificial crystal structures and their corresponding synthetic diffraction data can generate accurate electron density maps. The study shows that this deep learning-based ab initio structural solution method can solve the phase problem at a resolution of only 2 Angstroms, which is equivalent to only 10% to 20% of the data available at atomic resolution, while traditional ab initio Calculation

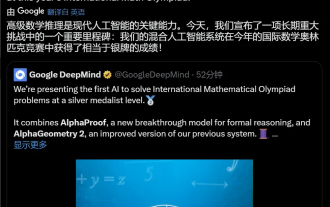

Google AI won the IMO Mathematical Olympiad silver medal, the mathematical reasoning model AlphaProof was launched, and reinforcement learning is so back

Jul 26, 2024 pm 02:40 PM

Google AI won the IMO Mathematical Olympiad silver medal, the mathematical reasoning model AlphaProof was launched, and reinforcement learning is so back

Jul 26, 2024 pm 02:40 PM

For AI, Mathematical Olympiad is no longer a problem. On Thursday, Google DeepMind's artificial intelligence completed a feat: using AI to solve the real question of this year's International Mathematical Olympiad IMO, and it was just one step away from winning the gold medal. The IMO competition that just ended last week had six questions involving algebra, combinatorics, geometry and number theory. The hybrid AI system proposed by Google got four questions right and scored 28 points, reaching the silver medal level. Earlier this month, UCLA tenured professor Terence Tao had just promoted the AI Mathematical Olympiad (AIMO Progress Award) with a million-dollar prize. Unexpectedly, the level of AI problem solving had improved to this level before July. Do the questions simultaneously on IMO. The most difficult thing to do correctly is IMO, which has the longest history, the largest scale, and the most negative

Nature's point of view: The testing of artificial intelligence in medicine is in chaos. What should be done?

Aug 22, 2024 pm 04:37 PM

Nature's point of view: The testing of artificial intelligence in medicine is in chaos. What should be done?

Aug 22, 2024 pm 04:37 PM

Editor | ScienceAI Based on limited clinical data, hundreds of medical algorithms have been approved. Scientists are debating who should test the tools and how best to do so. Devin Singh witnessed a pediatric patient in the emergency room suffer cardiac arrest while waiting for treatment for a long time, which prompted him to explore the application of AI to shorten wait times. Using triage data from SickKids emergency rooms, Singh and colleagues built a series of AI models that provide potential diagnoses and recommend tests. One study showed that these models can speed up doctor visits by 22.3%, speeding up the processing of results by nearly 3 hours per patient requiring a medical test. However, the success of artificial intelligence algorithms in research only verifies this

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

Editor |ScienceAI Question Answering (QA) data set plays a vital role in promoting natural language processing (NLP) research. High-quality QA data sets can not only be used to fine-tune models, but also effectively evaluate the capabilities of large language models (LLM), especially the ability to understand and reason about scientific knowledge. Although there are currently many scientific QA data sets covering medicine, chemistry, biology and other fields, these data sets still have some shortcomings. First, the data form is relatively simple, most of which are multiple-choice questions. They are easy to evaluate, but limit the model's answer selection range and cannot fully test the model's ability to answer scientific questions. In contrast, open-ended Q&A

PRO | Why are large models based on MoE more worthy of attention?

Aug 07, 2024 pm 07:08 PM

PRO | Why are large models based on MoE more worthy of attention?

Aug 07, 2024 pm 07:08 PM

In 2023, almost every field of AI is evolving at an unprecedented speed. At the same time, AI is constantly pushing the technological boundaries of key tracks such as embodied intelligence and autonomous driving. Under the multi-modal trend, will the situation of Transformer as the mainstream architecture of AI large models be shaken? Why has exploring large models based on MoE (Mixed of Experts) architecture become a new trend in the industry? Can Large Vision Models (LVM) become a new breakthrough in general vision? ...From the 2023 PRO member newsletter of this site released in the past six months, we have selected 10 special interpretations that provide in-depth analysis of technological trends and industrial changes in the above fields to help you achieve your goals in the new year. be prepared. This interpretation comes from Week50 2023

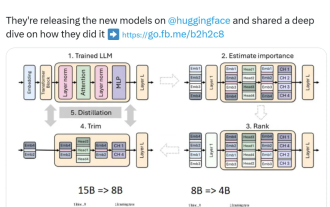

Nvidia plays with pruning and distillation: halving the parameters of Llama 3.1 8B to achieve better performance with the same size

Aug 16, 2024 pm 04:42 PM

Nvidia plays with pruning and distillation: halving the parameters of Llama 3.1 8B to achieve better performance with the same size

Aug 16, 2024 pm 04:42 PM

The rise of small models. Last month, Meta released the Llama3.1 series of models, which includes Meta’s largest model to date, the 405B model, and two smaller models with 70 billion and 8 billion parameters respectively. Llama3.1 is considered to usher in a new era of open source. However, although the new generation models are powerful in performance, they still require a large amount of computing resources when deployed. Therefore, another trend has emerged in the industry, which is to develop small language models (SLM) that perform well enough in many language tasks and are also very cheap to deploy. Recently, NVIDIA research has shown that structured weight pruning combined with knowledge distillation can gradually obtain smaller language models from an initially larger model. Turing Award Winner, Meta Chief A