Google AI reaches IMO silver-medal level, math reasoning model AlphaProof launched: reinforcement learning is so back

For AI, Mathematical Olympiad is no longer a problem.

On Thursday, Google DeepMind announced a feat: its AI systems solved real problems from this year's International Mathematical Olympiad (IMO), falling just one point short of a gold medal.

The IMO, which ended last week, set six problems covering algebra, combinatorics, geometry and number theory. Google's hybrid AI system solved four of them and scored 28 points, reaching silver-medal level.

Earlier this month, UCLA professor Terence Tao had just helped promote the AI Mathematical Olympiad (AIMO) Progress Prize, with a million-dollar prize. Few expected AI problem solving to reach this level before July was even over.

Tackling this year's IMO problems, including the hardest one

The IMO is the oldest, largest and most prestigious competition for young mathematicians, held annually since 1959. In recent years it has also come to be widely recognized as a grand challenge in machine learning and an ideal benchmark for measuring the advanced mathematical reasoning capabilities of AI systems.

At this year’s IMO competition, AlphaProof and AlphaGeometry 2 developed by the DeepMind team jointly achieved a milestone breakthrough.

AlphaProof is a reinforcement learning system for formal mathematical reasoning, while AlphaGeometry 2 is an improved version of DeepMind's geometry-solving system AlphaGeometry.

According to DeepMind, the result shows the potential of artificial general intelligence (AGI) with advanced mathematical reasoning to open up new areas of science and technology.

So, how does DeepMind’s AI system participate in the IMO competition?

Simply put, the problems were first manually translated into a formal mathematical language that the AI system could work with. In the official competition, human contestants submit answers in two sessions (on two days), with a time limit of 4.5 hours per session. The AlphaProof + AlphaGeometry 2 system solved one problem within minutes but took up to three days to solve the others. Judged strictly by the rules, DeepMind's system therefore exceeded the time limit, and some observers speculate that a large amount of brute-force search was involved.

Google said AlphaProof solved two algebra problems and one number theory problem by determining the answers and proving them correct. These included the hardest problem of the competition, which only five contestants solved at this year's IMO. AlphaGeometry 2 proved the geometry problem.

The AI's solutions: https://storage.googleapis.com/deepmind-media/DeepMind.com/Blog/imo-2024-solutions/index.html

Timothy Gowers, an IMO gold medalist and Fields Medal winner, and Dr. Joseph Myers, a two-time IMO gold medalist and chair of the IMO 2024 Problem Selection Committee, scored the combined system's solutions according to the official IMO scoring rules.

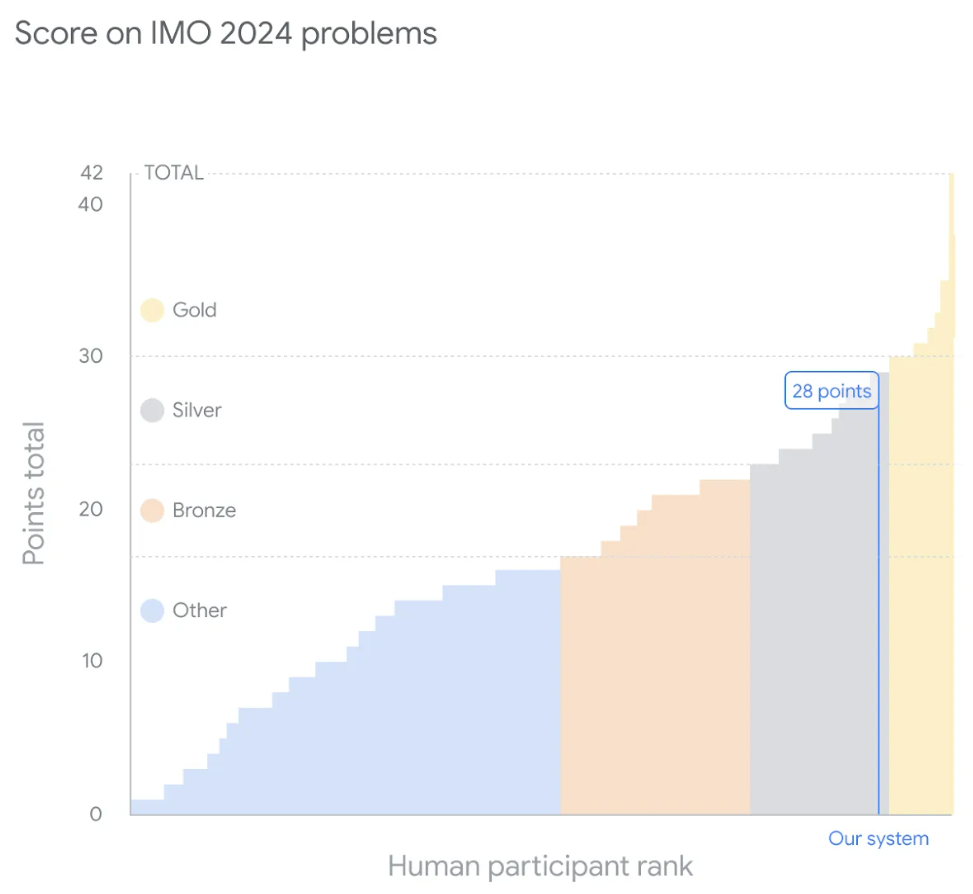

Each of the six problems is worth 7 points, for a maximum total of 42. DeepMind's system scored 28 points, meaning each of the four problems it solved received full marks, equal to the top score in the silver-medal range. This year's gold-medal threshold was 29 points, and 58 of the 609 official contestants earned gold medals.

The graph shows the performance of Google DeepMind's AI system relative to human competitors at IMO 2024. The system scored 28 out of 42 points, on par with the competition's top silver medalists; 29 points was enough for gold this year.
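The scoring arithmetic can be checked with a few lines of Python. This is a minimal sketch: the constant and function names are illustrative, and the per-problem list simply encodes "four full solutions, two unsolved" in an arbitrary order rather than claiming which problems were solved.

```python
POINTS_PER_PROBLEM = 7  # each IMO problem is marked out of 7
NUM_PROBLEMS = 6
GOLD_CUTOFF_2024 = 29  # gold threshold quoted in the article


def imo_total(problem_scores):
    """Sum per-problem marks after checking they form a valid IMO score sheet."""
    if len(problem_scores) != NUM_PROBLEMS:
        raise ValueError("an IMO paper has exactly six problems")
    if any(not 0 <= s <= POINTS_PER_PROBLEM for s in problem_scores):
        raise ValueError("each problem is scored from 0 to 7")
    return sum(problem_scores)


# Four full solutions and two problems with no marks (order is arbitrary).
system_scores = [7, 7, 7, 7, 0, 0]
total = imo_total(system_scores)
max_total = NUM_PROBLEMS * POINTS_PER_PROBLEM
print(total, max_total, total >= GOLD_CUTOFF_2024)  # 28 42 False
print(f"{58 / 609:.1%} of official contestants earned gold")  # 9.5% of official contestants earned gold
```

The gap to gold is `GOLD_CUTOFF_2024 - total`, a single point.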

AlphaProof: a formal reasoning method

In Google's hybrid AI system, AlphaProof is a self-training system that proves mathematical statements in the formal language Lean. It couples a pre-trained language model with the AlphaZero reinforcement learning algorithm.

Formal languages offer a key advantage: proofs written in them can be formally verified for correctness. Until now, however, their use in machine learning has been limited because very little human-written formal data exists.

Natural-language approaches, by contrast, have access to far more data, but they can produce intermediate reasoning steps and solutions that look plausible yet are wrong.

Google DeepMind bridged these two complementary areas by fine-tuning a Gemini model to automatically translate natural-language problem statements into formal statements, creating a large library of formal problems of varying difficulty.

Given a problem, AlphaProof generates candidate solutions and then proves them by searching over possible proof steps in Lean. Each proof it finds and verifies is used to reinforce AlphaProof's language model, improving its ability to solve subsequent, more challenging problems.

To train AlphaProof, Google DeepMind had it prove or disprove millions of problems covering a wide range of difficulties and topics in the weeks leading up to the IMO. The training loop was also applied during the competition, reinforcing proofs of self-generated variants of the competition problems until a complete solution was found.
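To make "formal statement" concrete, here is a toy, hypothetical example of the kind of translation described above: an informal sentence and one possible formalization in Lean 4. It is not taken from AlphaProof's data. It uses only core Lean (no Mathlib) and writes evenness as `n % 2 = 0`. The `omega` tactic, a decision procedure for linear arithmetic over naturals and integers that also handles `%` by numeric literals, closes the goal. Once Lean accepts the proof, its correctness is checked mechanically, which is the verification advantage of formal languages.

```lean
-- Informal: "The sum of two even natural numbers is even."
-- Hypothetical formalization (core Lean 4, no Mathlib): evenness is written as `% 2 = 0`.
theorem toy_even_add (m n : Nat) (hm : m % 2 = 0) (hn : n % 2 = 0) :
    (m + n) % 2 = 0 := by
  omega  -- linear arithmetic decision procedure, including `%` by a literal
```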
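The generate-and-verify loop can be caricatured in a few lines of Python. This is a toy sketch under loud assumptions. The "problems" are reachability puzzles: reach a target number from 1 using the hypothetical steps `inc` and `dbl`. A depth-bounded breadth-first search stands in for AlphaProof's learned policy and AlphaZero-style search. Adding each verified target to a `known` set stands in for retraining the language model on verified proofs. None of these names come from DeepMind. The sketch only mirrors the shape of the loop: search, verify, keep what verifies, and build on it to reach harder targets.

```python
from collections import deque

# Hypothetical "proof steps" (stand-ins for Lean tactics): each maps a number to a new one.
OPS = {"inc": lambda x: x + 1, "dbl": lambda x: x * 2}


def verify(start, steps, target):
    """Toy proof checker: replay the steps from start and accept only an exact hit on target."""
    x = start
    for name in steps:
        if name not in OPS:
            return False
        x = OPS[name](x)
    return x == target


def search(target, known, max_depth):
    """Depth-bounded breadth-first search from any already-proven value to target."""
    frontier = deque((k, k, []) for k in sorted(known))
    seen = set(known)
    while frontier:
        start, x, path = frontier.popleft()
        if x == target:
            return start, path
        if len(path) == max_depth:
            continue
        for name, op in OPS.items():
            y = op(x)
            if y <= target and y not in seen:  # both steps only increase x
                seen.add(y)
                frontier.append((start, y, path + [name]))
    return None


def train(problems, max_depth, rounds=5):
    """Repeatedly attack the problem set; each verified result becomes reusable knowledge."""
    known = {1}  # the only "axiom": 1 is reachable for free
    for _ in range(rounds):
        progress = False
        for target in problems:
            if target in known:
                continue
            result = search(target, known, max_depth)
            # Only candidates that pass the checker are kept (stand-in for Lean verification).
            if result is not None and verify(result[0], result[1], target):
                known.add(target)  # stand-in for "train the model on the new proof"
                progress = True
        if not progress:
            break
    return known
```

With `max_depth=3`, `search(64, {1}, 3)` finds nothing on its own, yet `train([2, 4, 8, 16, 32, 64, 100], max_depth=3)` reaches 64 by reusing each verified result as a stepping stone. 100 stays out of reach because no known value is within three steps of it.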

Infographic of AlphaProof's reinforcement learning training loop: about one million informal math problems are translated into a formal math language by a formalizer network. A solver network then searches for proofs or disproofs of the problems, progressively training itself via the AlphaZero algorithm to solve more challenging problems.

More competitive AlphaGeometry 2

AlphaGeometry 2 is a significantly improved version of AlphaGeometry, the geometry AI published in Nature earlier this year. It is a neuro-symbolic hybrid system whose language model is based on Gemini and trained from scratch on an order of magnitude more synthetic data than its predecessor. This helps the model tackle more challenging geometry problems, including problems involving the movement of objects and equations of angles, ratios or distances.

AlphaGeometry 2 uses a symbolic engine that is two orders of magnitude faster than its predecessor's. When faced with new problems, a novel knowledge-sharing mechanism allows advanced combinations of different search trees to tackle more complex problems.
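In the original AlphaGeometry design described in the Nature paper, a language model proposes auxiliary constructions and a symbolic engine derives everything that follows from the current facts. The toy below shows only that control loop: forward chaining to a fixpoint, then asking a proposer for one more construction when the goal is still missing. The fact names, the rules and the list-based `make_proposer` are hypothetical placeholders for real geometry rules and the Gemini-based model. Nothing here reflects AlphaGeometry 2's actual engine or its knowledge-sharing mechanism.

```python
def deductive_closure(facts, rules):
    """Forward chaining: apply (premises, conclusion) rules until no new fact appears."""
    closed = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in closed and premises <= closed:
                closed.add(conclusion)
                changed = True
    return closed


def solve(premises, goal, rules, propose, max_constructions=3):
    """Alternate symbolic deduction with proposed auxiliary constructions."""
    facts = set(premises)
    added = []
    while True:
        facts = deductive_closure(facts, rules)
        if goal in facts:
            return added  # the constructions that were needed
        if len(added) == max_constructions:
            return None
        construction = propose(facts, goal)
        if construction is None or construction in facts:
            return None
        facts.add(construction)
        added.append(construction)


def make_proposer(candidates):
    """Hypothetical stand-in for the language model: suggest the next unused candidate."""
    def propose(facts, goal):  # goal is unused in this toy
        for c in candidates:
            if c not in facts:
                return c
        return None
    return propose


# Toy rules: GOAL only follows once the auxiliary fact AUX has been constructed.
RULES = [
    (frozenset({"P1", "P2"}), "F1"),
    (frozenset({"F1", "AUX"}), "F2"),
    (frozenset({"F2"}), "GOAL"),
]
```

For example, `solve({"P1", "P2"}, "GOAL", RULES, make_proposer(["AUX"]))` returns `["AUX"]`, while a proposer with no useful suggestions makes `solve` return `None`. Keeping deduction purely symbolic means every derived fact is justified by a rule, while the proposer only has to supply the creative step.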

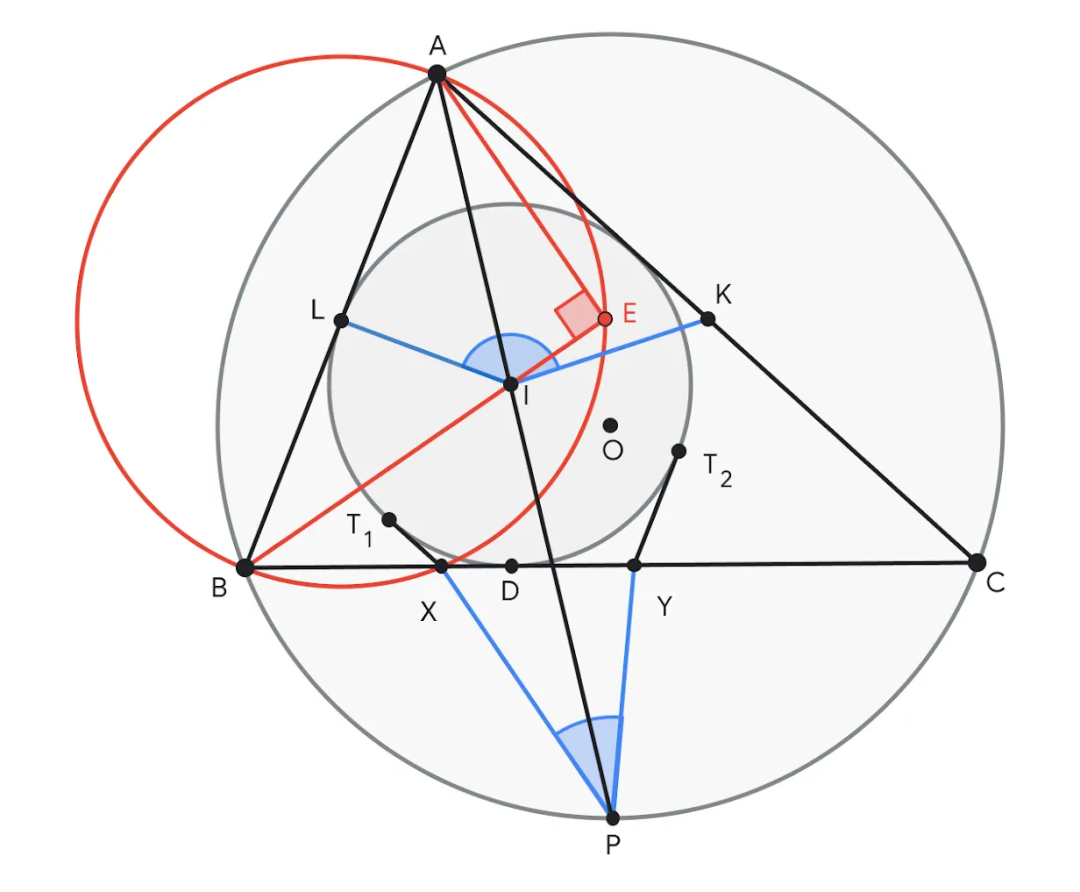

Prior to this year’s competition, AlphaGeometry 2 could solve 83% of all historical IMO geometry problems from the past 25 years, compared to its predecessor’s 53% solution rate. In IMO 2024, AlphaGeometry 2 solved Problem 4 within 19 seconds of receiving its formalization.

Problem 4 asks for a proof that ∠KIL + ∠XPY = 180°. AlphaGeometry 2 proposed constructing a point E on line BI such that ∠AEB = 90°. Point E gives purpose to the midpoint L of segment AB, creating many pairs of similar triangles, such as ABE ~ YBI and ALE ~ IPC, that are needed to prove the conclusion.

Google DeepMind also reports that, as part of its IMO work, researchers experimented with a natural-language reasoning system built on Gemini and DeepMind's latest research, aiming for advanced problem-solving capabilities. The system does not require problems to be translated into a formal language and can be combined with other AI systems. When tested on this year's IMO problems, it "showed great potential."
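Because only the goal of Problem 4 is stated here, it may help to see the claim checked numerically. The sketch rebuilds the configuration from the official IMO 2024 Problem 4 statement (summarized, not taken from this article). I is the incenter of triangle ABC with AB < AC < BC. X and Y are the points on line BC where the other tangents to the incircle parallel to AC and to AB, respectively, meet it. P is the second intersection of line AI with the circumcircle. K and L are the midpoints of AC and AB. The function then measures ∠KIL + ∠XPY for a concrete triangle. This is a floating-point sanity check on examples, not a proof, and it does not reproduce AlphaGeometry 2's construction of E.

```python
import math


def midpoint(p, q):
    return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)


def line_intersection(p1, d1, p2, d2):
    """Intersection of lines p1 + t*d1 and p2 + s*d2 (lines assumed non-parallel)."""
    det = d1[0] * d2[1] - d1[1] * d2[0]
    wx, wy = p2[0] - p1[0], p2[1] - p1[1]
    t = (wx * d2[1] - wy * d2[0]) / det
    return (p1[0] + t * d1[0], p1[1] + t * d1[1])


def circumcenter(A, B, C):
    """Closed-form circumcenter of a non-degenerate triangle."""
    d = 2 * (A[0] * (B[1] - C[1]) + B[0] * (C[1] - A[1]) + C[0] * (A[1] - B[1]))
    sa, sb, sc = A[0] ** 2 + A[1] ** 2, B[0] ** 2 + B[1] ** 2, C[0] ** 2 + C[1] ** 2
    ux = (sa * (B[1] - C[1]) + sb * (C[1] - A[1]) + sc * (A[1] - B[1])) / d
    uy = (sa * (C[0] - B[0]) + sb * (A[0] - C[0]) + sc * (B[0] - A[0])) / d
    return (ux, uy)


def angle_at(v, a, b):
    """Undirected angle a-v-b in degrees, in [0, 180]."""
    ax, ay = a[0] - v[0], a[1] - v[1]
    bx, by = b[0] - v[0], b[1] - v[1]
    return math.degrees(math.atan2(abs(ax * by - ay * bx), ax * bx + ay * by))


def p4_angle_sum(A, B, C):
    """Return angle KIL + angle XPY for triangle ABC (expects AB < AC < BC)."""
    a, b, c = math.dist(B, C), math.dist(C, A), math.dist(A, B)
    s = a + b + c
    I = ((a * A[0] + b * B[0] + c * C[0]) / s, (a * A[1] + b * B[1] + c * C[1]) / s)
    # The other tangent to the incircle parallel to AC (or AB) is the reflection of
    # line AC (or AB) through I, so it passes through A' = 2I - A.
    A_ref = (2 * I[0] - A[0], 2 * I[1] - A[1])
    bc = (C[0] - B[0], C[1] - B[1])
    X = line_intersection(A_ref, (C[0] - A[0], C[1] - A[1]), B, bc)
    Y = line_intersection(A_ref, (B[0] - A[0], B[1] - A[1]), B, bc)
    # P: second intersection of line A + t*(I - A) with the circumcircle (t = 0 is A).
    O = circumcenter(A, B, C)
    u = (I[0] - A[0], I[1] - A[1])
    t = -2 * (u[0] * (A[0] - O[0]) + u[1] * (A[1] - O[1])) / (u[0] ** 2 + u[1] ** 2)
    P = (A[0] + t * u[0], A[1] + t * u[1])
    K, L = midpoint(A, C), midpoint(A, B)
    return angle_at(I, K, L) + angle_at(P, X, Y)


print(round(p4_angle_sum((0, 0), (3, 0), (0, 4)), 6))  # 180.0
```

A second triangle such as A = (0, 0), B = (4, 0), C = (1, 5) also gives 180° up to rounding.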

Google is continuing to explore AI methods to advance mathematical reasoning and plans to release more technical details about AlphaProof soon.

In Google DeepMind's words: "We're excited about a future where mathematicians work with AI tools to explore hypotheses, try bold new approaches to long-standing problems, and quickly complete time-consuming elements of proofs, and where AI systems like Gemini become more capable at mathematics and reasoning more broadly."

Research team

Google said the work was supported by the International Mathematical Olympiad organization. The team:

The development of AlphaProof was led by Thomas Hubert, Rishi Mehta and Laurent Sartran; main contributors include Hussain Masoom, Aja Huang, Miklós Z. Horváth, Tom Zahavy, Vivek Veeriah, Eric Wieser, Jessica Yung, Lei Yu, Yannick Schroecker, Julian Schrittwieser, Ottavia Bertolli, Borja Ibarz, Edward Lockhart, Edward Hughes, Mark Rowland and Grace Margand.

Aja Huang, Julian Schrittwieser, Yannick Schroecker and others were also core members of the AlphaGo paper eight years ago (2016). Eight years ago, their reinforcement-learning-based AlphaGo became famous; eight years later, reinforcement learning shines again with AlphaProof. As one person put it on WeChat Moments: RL is so back!

The AlphaGeometry 2 and natural-language reasoning work was led by Thang Luong. The development of AlphaGeometry 2 was led by Trieu Trinh and Yuri Chervonyi, with important contributions from Mirek Olšák, Xiaomeng Yang, Hoang Nguyen, Junehyuk Jung, Dawsen Hwang and Marcelo Menegali.

David Silver, Quoc Le, Demis Hassabis and Pushmeet Kohli coordinated and managed the overall project.

Reference:

https://deepmind.google/discover/blog/ai-solves-imo-problems-at-silver-medal-level/

The above is the detailed content of Google AI won the IMO Mathematical Olympiad silver medal, the mathematical reasoning model AlphaProof was launched, and reinforcement learning is so back. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

Breaking through the boundaries of traditional defect detection, 'Defect Spectrum' achieves ultra-high-precision and rich semantic industrial defect detection for the first time.

Jul 26, 2024 pm 05:38 PM

Breaking through the boundaries of traditional defect detection, 'Defect Spectrum' achieves ultra-high-precision and rich semantic industrial defect detection for the first time.

Jul 26, 2024 pm 05:38 PM

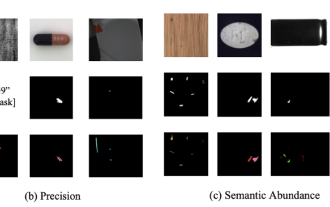

In modern manufacturing, accurate defect detection is not only the key to ensuring product quality, but also the core of improving production efficiency. However, existing defect detection datasets often lack the accuracy and semantic richness required for practical applications, resulting in models unable to identify specific defect categories or locations. In order to solve this problem, a top research team composed of Hong Kong University of Science and Technology Guangzhou and Simou Technology innovatively developed the "DefectSpectrum" data set, which provides detailed and semantically rich large-scale annotation of industrial defects. As shown in Table 1, compared with other industrial data sets, the "DefectSpectrum" data set provides the most defect annotations (5438 defect samples) and the most detailed defect classification (125 defect categories

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

The open LLM community is an era when a hundred flowers bloom and compete. You can see Llama-3-70B-Instruct, QWen2-72B-Instruct, Nemotron-4-340B-Instruct, Mixtral-8x22BInstruct-v0.1 and many other excellent performers. Model. However, compared with proprietary large models represented by GPT-4-Turbo, open models still have significant gaps in many fields. In addition to general models, some open models that specialize in key areas have been developed, such as DeepSeek-Coder-V2 for programming and mathematics, and InternVL for visual-language tasks.

Google AI won the IMO Mathematical Olympiad silver medal, the mathematical reasoning model AlphaProof was launched, and reinforcement learning is so back

Jul 26, 2024 pm 02:40 PM

Google AI won the IMO Mathematical Olympiad silver medal, the mathematical reasoning model AlphaProof was launched, and reinforcement learning is so back

Jul 26, 2024 pm 02:40 PM

For AI, Mathematical Olympiad is no longer a problem. On Thursday, Google DeepMind's artificial intelligence completed a feat: using AI to solve the real question of this year's International Mathematical Olympiad IMO, and it was just one step away from winning the gold medal. The IMO competition that just ended last week had six questions involving algebra, combinatorics, geometry and number theory. The hybrid AI system proposed by Google got four questions right and scored 28 points, reaching the silver medal level. Earlier this month, UCLA tenured professor Terence Tao had just promoted the AI Mathematical Olympiad (AIMO Progress Award) with a million-dollar prize. Unexpectedly, the level of AI problem solving had improved to this level before July. Do the questions simultaneously on IMO. The most difficult thing to do correctly is IMO, which has the longest history, the largest scale, and the most negative

Training with millions of crystal data to solve the crystallographic phase problem, the deep learning method PhAI is published in Science

Aug 08, 2024 pm 09:22 PM

Training with millions of crystal data to solve the crystallographic phase problem, the deep learning method PhAI is published in Science

Aug 08, 2024 pm 09:22 PM

Editor |KX To this day, the structural detail and precision determined by crystallography, from simple metals to large membrane proteins, are unmatched by any other method. However, the biggest challenge, the so-called phase problem, remains retrieving phase information from experimentally determined amplitudes. Researchers at the University of Copenhagen in Denmark have developed a deep learning method called PhAI to solve crystal phase problems. A deep learning neural network trained using millions of artificial crystal structures and their corresponding synthetic diffraction data can generate accurate electron density maps. The study shows that this deep learning-based ab initio structural solution method can solve the phase problem at a resolution of only 2 Angstroms, which is equivalent to only 10% to 20% of the data available at atomic resolution, while traditional ab initio Calculation

Nature's point of view: The testing of artificial intelligence in medicine is in chaos. What should be done?

Aug 22, 2024 pm 04:37 PM

Nature's point of view: The testing of artificial intelligence in medicine is in chaos. What should be done?

Aug 22, 2024 pm 04:37 PM

Editor | ScienceAI Based on limited clinical data, hundreds of medical algorithms have been approved. Scientists are debating who should test the tools and how best to do so. Devin Singh witnessed a pediatric patient in the emergency room suffer cardiac arrest while waiting for treatment for a long time, which prompted him to explore the application of AI to shorten wait times. Using triage data from SickKids emergency rooms, Singh and colleagues built a series of AI models that provide potential diagnoses and recommend tests. One study showed that these models can speed up doctor visits by 22.3%, speeding up the processing of results by nearly 3 hours per patient requiring a medical test. However, the success of artificial intelligence algorithms in research only verifies this

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

Editor |ScienceAI Question Answering (QA) data set plays a vital role in promoting natural language processing (NLP) research. High-quality QA data sets can not only be used to fine-tune models, but also effectively evaluate the capabilities of large language models (LLM), especially the ability to understand and reason about scientific knowledge. Although there are currently many scientific QA data sets covering medicine, chemistry, biology and other fields, these data sets still have some shortcomings. First, the data form is relatively simple, most of which are multiple-choice questions. They are easy to evaluate, but limit the model's answer selection range and cannot fully test the model's ability to answer scientific questions. In contrast, open-ended Q&A

PRO | Why are large models based on MoE more worthy of attention?

Aug 07, 2024 pm 07:08 PM

PRO | Why are large models based on MoE more worthy of attention?

Aug 07, 2024 pm 07:08 PM

In 2023, almost every field of AI is evolving at an unprecedented speed. At the same time, AI is constantly pushing the technological boundaries of key tracks such as embodied intelligence and autonomous driving. Under the multi-modal trend, will the situation of Transformer as the mainstream architecture of AI large models be shaken? Why has exploring large models based on MoE (Mixed of Experts) architecture become a new trend in the industry? Can Large Vision Models (LVM) become a new breakthrough in general vision? ...From the 2023 PRO member newsletter of this site released in the past six months, we have selected 10 special interpretations that provide in-depth analysis of technological trends and industrial changes in the above fields to help you achieve your goals in the new year. be prepared. This interpretation comes from Week50 2023

Automatically identify the best molecules and reduce synthesis costs. MIT develops a molecular design decision-making algorithm framework

Jun 22, 2024 am 06:43 AM

Automatically identify the best molecules and reduce synthesis costs. MIT develops a molecular design decision-making algorithm framework

Jun 22, 2024 am 06:43 AM

Editor | Ziluo AI’s use in streamlining drug discovery is exploding. Screen billions of candidate molecules for those that may have properties needed to develop new drugs. There are so many variables to consider, from material prices to the risk of error, that weighing the costs of synthesizing the best candidate molecules is no easy task, even if scientists use AI. Here, MIT researchers developed SPARROW, a quantitative decision-making algorithm framework, to automatically identify the best molecular candidates, thereby minimizing synthesis costs while maximizing the likelihood that the candidates have the desired properties. The algorithm also determined the materials and experimental steps needed to synthesize these molecules. SPARROW takes into account the cost of synthesizing a batch of molecules at once, since multiple candidate molecules are often available