Complete 1 year MD calculation in 2.5 days? DeepMind team's new calculation method based on Euclidean Transformer

Editor | Carrot Skin

In recent years, great progress has been made in the development of machine learning force fields (MLFF) based on ab initio reference calculations. Although low test errors are achieved, the reliability of MLFF in molecular dynamics (MD) simulations is facing increasing scrutiny due to concerns about instability over longer simulation time scales.

Research has shown a potential link between robustness to cumulative inaccuracies and the use of equivariant representations in MLFF, but the computational costs associated with these representations may limit this advantage in practice.

To solve this problem, researchers from Google DeepMind and TU Berlin proposed a transformer architecture called SO3krates. It combines a sparse equivariant representation (Euclidean variables) with a self-attention mechanism that separates invariant and equivariant information, which eliminates the need for expensive tensor products.

SO3krates achieves a unique combination of accuracy, stability and speed, enabling in-depth analysis of the quantum properties of matter over extended time and system-size scales.

The research was titled "A Euclidean transformer for fast and stable machine learned force fields" and was published in "Nature Communications" on August 6, 2024.

Background and Challenges

Molecular dynamics (MD) simulations can reveal how a system evolves from microscopic interactions to macroscopic properties over long simulation times. Their predictive accuracy depends on the accuracy of the interatomic forces that drive the simulation. Traditionally, these forces have been derived from approximate force fields (FF) or from computationally expensive ab initio electronic structure methods.

In recent years, machine learning (ML) potential energy models have provided more flexible prediction methods by exploiting the statistical dependence of molecular systems.

However, studies have shown that the test error of ML models on benchmark datasets is weakly correlated with performance in long-term MD simulations.

To improve extrapolation, more complex architectures such as message passing neural networks (MPNNs) have been developed. Equivariant MPNNs in particular capture directional information between atoms through tensor products, which improves transferability.

In SO(3)-equivariant architectures, convolutions over the rotation group SO(3) are expressed in a basis of spherical harmonics. Fixing the maximum degree $\ell_{\max}$ of the spherical harmonics in the architecture avoids an exponential growth of the associated function space.

The maximum degree has been shown to be closely linked to accuracy and data efficiency, as well as to the reliability of the model in MD simulations. However, SO(3) convolutions scale as $\mathcal{O}(\ell_{\max}^6)$, which can increase the prediction time per conformation by up to two orders of magnitude compared with invariant models.
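To make the cost argument concrete, the sketch below counts multiply-adds for a dense Clebsch-Gordan tensor product between two features with degrees 0..l_max. The triangle rule leaves on the order of l_max^3 coupling paths, each costing on the order of l_max^3 operations. For comparison it also counts the cost of one inner product per degree. This is a rough operation count under stated simplifications (CG sparsity ignored, outputs truncated at l_max), not a benchmark of any particular library; the function names are hypothetical.

```python
def tensor_product_cost(l_max):
    """Multiply-adds of a dense Clebsch-Gordan tensor product between two features
    with degrees 0..l_max, keeping output degrees up to l_max.
    Sparsity of the Clebsch-Gordan coefficients is ignored (rough count only)."""
    cost = 0
    for l1 in range(l_max + 1):
        for l2 in range(l_max + 1):
            # Output degrees allowed by the triangle rule |l1 - l2| <= l3 <= l1 + l2.
            for l3 in range(abs(l1 - l2), min(l1 + l2, l_max) + 1):
                # Each path contracts a (2l1+1) x (2l2+1) x (2l3+1) coefficient block.
                cost += (2 * l1 + 1) * (2 * l2 + 1) * (2 * l3 + 1)
    return cost


def inner_product_cost(l_max):
    """Multiply-adds of per-degree inner products: sum of (2l + 1) = (l_max + 1) ** 2."""
    return sum(2 * l + 1 for l in range(l_max + 1))


for l_max in (1, 2, 4, 8):
    print(l_max, tensor_product_cost(l_max), inner_product_cost(l_max))
# l_max = 1 gives 55 vs. 4; l_max = 2 gives 615 vs. 9
```

For large l_max, doubling the degree multiplies the tensor-product count by roughly 2^6. The per-degree inner-product count grows only as (l_max + 1)^2, which is linear in the number of feature components.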

This forces a trade-off between accuracy, stability and speed, which creates significant practical problems. These problems must be addressed before such models can be used for high-throughput or large-scale exploration tasks.

A new method with powerful performance

Motivated by this, the research team from Google DeepMind and the Technical University of Berlin proposed a Euclidean self-attention mechanism. It replaces SO(3) convolutions with filters on the relative orientations of atomic neighborhoods, so atomic interactions are represented without expensive tensor products. The method is called SO3krates.

Illustration: SO3krates architecture and building blocks. (Source: Paper)

This solution builds on recent advances in neural network architecture design and geometric deep learning. SO3krates uses sparse representations for molecular geometries and restricts the projection of all convolution responses to the most relevant invariant components of the equivariant basis functions.

Illustration: Learning invariants. (Source: Paper)

Because spherical harmonics are orthogonal, this projection corresponds to the trace of the product tensor. The trace can be computed as an inner product whose cost scales linearly with the number of components. As a result, the approach extends efficiently to higher-order equivariant representations without sacrificing computational speed or memory.
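The sketch below illustrates the principle with a hypothetical two-body definition of Euclidean variables (EVs): cutoff-weighted sums of real spherical harmonics, up to degree 2, of the directions from an atom to its neighbors. The function names, the cosine cutoff and the choice to contract the EVs of two atoms are assumptions made for illustration, and this is not the SO3krates layer itself. The point is that one inner product per degree yields a rotation-invariant scalar at linear cost, with no tensor product involved.

```python
import math


def real_sph_harm(l, u):
    """Real spherical harmonics of degree l (0, 1 or 2) for a unit vector u = (x, y, z)."""
    x, y, z = u
    if l == 0:
        return [0.5 * math.sqrt(1.0 / math.pi)]
    if l == 1:
        c = math.sqrt(3.0 / (4.0 * math.pi))
        return [c * y, c * z, c * x]
    if l == 2:
        c = 0.5 * math.sqrt(15.0 / math.pi)
        return [c * x * y, c * y * z,
                0.25 * math.sqrt(5.0 / math.pi) * (3.0 * z * z - 1.0),
                c * x * z, 0.5 * c * (x * x - y * y)]
    raise ValueError("this sketch only implements l <= 2")


def euclidean_variables(positions, i, l_max=2, cutoff=5.0):
    """Hypothetical EVs of atom i: cutoff-weighted sums of spherical harmonics of
    the directions to its neighbors, stored per degree l (2l + 1 entries each)."""
    evs = [[0.0] * (2 * l + 1) for l in range(l_max + 1)]
    for j, p in enumerate(positions):
        if j == i:
            continue
        d = [p[k] - positions[i][k] for k in range(3)]
        r = math.sqrt(sum(c * c for c in d))
        if r >= cutoff:
            continue
        w = 0.5 * (math.cos(math.pi * r / cutoff) + 1.0)  # smooth cosine cutoff
        u = [c / r for c in d]
        for l in range(l_max + 1):
            for m, y in enumerate(real_sph_harm(l, u)):
                evs[l][m] += w * y
    return evs


def per_degree_invariants(a, b):
    """One rotation-invariant scalar per degree: the inner product <a_l, b_l>.
    Cost is the sum over l of (2l + 1), i.e. linear in the number of EV entries."""
    return [sum(x * y for x, y in zip(al, bl)) for al, bl in zip(a, b)]


def rotate(p, a, b):
    """Rotate point p about the z axis by angle a, then about the x axis by angle b."""
    x, y, z = p
    x, y = x * math.cos(a) - y * math.sin(a), x * math.sin(a) + y * math.cos(a)
    y, z = y * math.cos(b) - z * math.sin(b), y * math.sin(b) + z * math.cos(b)
    return (x, y, z)


positions = [(0.0, 0.0, 0.0), (1.0, 0.2, -0.3), (-0.4, 1.1, 0.5), (0.3, -0.6, 1.2)]
before = per_degree_invariants(euclidean_variables(positions, 0),
                               euclidean_variables(positions, 1))
rotated = [rotate(p, 0.7, -1.3) for p in positions]
after = per_degree_invariants(euclidean_variables(rotated, 0),
                              euclidean_variables(rotated, 1))
print(before)
print(after)  # same values as `before`, up to floating-point rounding
```

Under rotation, the real spherical harmonics of a fixed degree transform by an orthogonal matrix. The per-degree inner products are therefore unchanged when the whole structure is rotated, and the last two lines check this numerically.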

Forces are obtained as the gradient of the resulting invariant energy model, which represents a piecewise linearization of a naturally equivariant quantity. Throughout, the self-attention mechanism keeps the invariant and equivariant building blocks of the model separate.
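Here is a minimal sketch of the energy-gradient route. Any energy that depends only on interatomic distances is rotation invariant, and its negative gradient is automatically rotation equivariant. A Lennard-Jones pair potential stands in for the learned SO3krates energy (an assumption for illustration only). Central finite differences replace the automatic differentiation that an ML framework would use.

```python
import math


def pair_energy(r, eps=1.0, sigma=1.0):
    """Lennard-Jones pair energy: a hypothetical stand-in for a learned invariant energy."""
    sr6 = (sigma / r) ** 6
    return 4.0 * eps * (sr6 * sr6 - sr6)


def total_energy(positions):
    """Invariant energy: depends only on interatomic distances."""
    e = 0.0
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            e += pair_energy(math.dist(positions[i], positions[j]))
    return e


def forces(positions, h=1e-6):
    """F = -dE/dR by central finite differences (ML frameworks would use autodiff)."""
    result = []
    for i in range(len(positions)):
        f_i = []
        for k in range(3):
            plus = [list(p) for p in positions]
            minus = [list(p) for p in positions]
            plus[i][k] += h
            minus[i][k] -= h
            f_i.append(-(total_energy(plus) - total_energy(minus)) / (2.0 * h))
        result.append(f_i)
    return result


def rotate_z(p, angle):
    """Rotate a 3-vector about the z axis."""
    x, y, z = p
    return (x * math.cos(angle) - y * math.sin(angle),
            x * math.sin(angle) + y * math.cos(angle), z)


positions = [(0.0, 0.0, 0.0), (1.1, 0.0, 0.0), (0.3, 1.05, 0.2)]
f = forces(positions)
f_rot = forces([rotate_z(p, 0.9) for p in positions])
# Equivariance: forces of the rotated structure equal the rotated original forces.
print(f_rot)
print([rotate_z(fi, 0.9) for fi in f])
```

Because the energy is translation invariant, the forces also sum to zero. This is a cheap sanity check for any gradient-based force model.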

The team compared the stability and speed of the SO3krates model with current state-of-the-art ML models and found that the solution overcomes the limitations of current equivariant MLFFs without compromising their advantages.

The researchers' formulation yields an efficient equivariant architecture that enables reliable and stable MD simulations. It runs about 30 times faster than equivariant MPNNs of comparable stability and accuracy.

To demonstrate this, the researchers ran accurate nanosecond-scale MD simulations of supramolecular structures in just a few hours. This allowed them to compute converged Fourier transforms of the velocity autocorrelation function for structures ranging from a small peptide with 42 atoms to a nanostructure with 370 atoms.
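The Fourier transform of the velocity autocorrelation function (VACF) gives the vibrational power spectrum. "Converged" means the trajectory is long enough that this spectrum no longer changes appreciably. The sketch below computes a VACF averaged over time origins and takes a naive discrete Fourier transform in plain Python. The synthetic one-atom trajectory and the function names are hypothetical, and real analyses would use an FFT library on actual MD velocities.

```python
import cmath
import math


def vacf(velocities, max_lag):
    """C(tau) = < sum over atoms of v(t) . v(t + tau) >, averaged over time origins t.

    velocities: list of frames, each a list of per-atom 3-vectors.
    """
    n_frames = len(velocities)
    c = []
    for lag in range(max_lag):
        n_origins = n_frames - lag
        total = 0.0
        for t in range(n_origins):
            for v0, v1 in zip(velocities[t], velocities[t + lag]):
                total += v0[0] * v1[0] + v0[1] * v1[1] + v0[2] * v1[2]
        c.append(total / n_origins)
    return c


def spectrum(c):
    """|DFT| of the VACF (naive O(n^2) transform to stay dependency-free)."""
    n = len(c)
    return [abs(sum(c[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n)]


# Synthetic trajectory (hypothetical): one atom oscillating along x with
# 16 periods in 256 frames, i.e. a frequency of 1/16 per frame.
n_frames, dt = 256, 1.0
traj = [[(math.cos(2.0 * math.pi * 16 * t / n_frames), 0.0, 0.0)] for t in range(n_frames)]
c = vacf(traj, max_lag=128)
s = spectrum(c)
peak = max(range(1, len(s) // 2), key=lambda k: s[k])
print(peak, peak / (len(c) * dt))  # → 8 0.0625 (peak bin, frequency in 1/frame)
```

The spectral peak recovers the oscillation frequency (1/16 per frame). For a real molecule, each vibrational mode contributes its own peak.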

Illustration: Overview of results. (Source: Paper)

The researchers further applied the model to explore the topology of the potential energy surface (PES) of docosahexaenoic acid (DHA) and Ac-Ala3-NHMe, locating 10k minima with the minima hopping algorithm.
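The following is a heavily simplified sketch of the minima hopping idea (Goedecker's method) on a hypothetical one-dimensional toy PES. In each hop:

- A short MD run with kinetic energy e_kin pushes the system out of its current basin.
- A local relaxation finds the next minimum.
- e_kin and an acceptance threshold e_diff are adapted depending on whether that minimum is new.

The toy potential, the parameter values and the function names are assumptions. In the study described above, the MLFF supplied the energies and forces on molecular PESs instead.

```python
import math
import random


def energy(x):
    """Toy 1D PES with many local minima (hypothetical stand-in for an MLFF)."""
    return math.sin(3.0 * x) + 0.1 * x * x


def force(x):
    return -(3.0 * math.cos(3.0 * x) + 0.2 * x)


def md_escape(x, e_kin, rng, dt=0.01, n_steps=200):
    """Short velocity-Verlet MD run (unit mass) started with kinetic energy e_kin."""
    v = rng.choice((-1.0, 1.0)) * math.sqrt(2.0 * e_kin)
    f = force(x)
    for _ in range(n_steps):
        x += v * dt + 0.5 * f * dt * dt
        f_new = force(x)
        v += 0.5 * (f + f_new) * dt
        f = f_new
    return x


def relax(x, step=0.01, tol=1e-8, max_iter=100_000):
    """Steepest-descent local minimization."""
    for _ in range(max_iter):
        f = force(x)
        if abs(f) < tol:
            break
        x += step * f
    return x


def minima_hopping(x0, n_hops=50, e_kin=1.0, e_diff=0.5, beta=1.1, alpha=1.05, seed=0):
    """Simplified minima hopping: MD escape, relaxation, adaptive e_kin and e_diff."""
    rng = random.Random(seed)
    current = relax(x0)
    found = [current]
    for _ in range(n_hops):
        candidate = relax(md_escape(current, e_kin, rng))
        if abs(candidate - current) < 1e-6:
            e_kin *= beta  # fell back into the same basin: escape with more energy
            continue
        if any(abs(candidate - m) < 1e-6 for m in found):
            e_kin *= beta  # previously visited minimum
        else:
            e_kin /= beta  # new minimum
            found.append(candidate)
        if energy(candidate) - energy(current) < e_diff:
            current = candidate  # accept the hop
            e_diff /= alpha
        else:
            e_diff *= alpha  # reject, but be more permissive next time
    return sorted(found)


minima = minima_hopping(0.0)
print(len(minima), [round(m, 3) for m in minima])
```

Every accepted or rejected hop costs one MD run plus one relaxation, that is, many energy and force evaluations. This is why the number of force-field calls, rather than the number of minima, dominates the cost of such a search.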

Such a study requires approximately 30M force-field evaluations at temperatures ranging from a few hundred K up to 1200 K. With DFT methods, this analysis would take more than a year of computing time, and existing equivariant MLFFs of similar prediction accuracy would need more than a month.

In comparison, the team was able to complete the simulation in just 2.5 days, making it possible to explore hundreds of thousands of PES minima on realistic time scales.
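A quick worked check of what these figures imply per evaluation uses only the numbers in the text. It assumes that the roughly 30M evaluations run back to back, that a year is 365 days and that a month is 30 days. The article does not state hardware or parallelism, so these are average wall-clock budgets rather than measured timings.

```python
SECONDS_PER_DAY = 24 * 60 * 60
N_EVALUATIONS = 30e6  # ~30M force-field evaluations, as stated in the text


def ms_per_evaluation(days, n_evaluations=N_EVALUATIONS):
    """Average wall-clock budget per evaluation, assuming evaluations run back to back."""
    return days * SECONDS_PER_DAY / n_evaluations * 1e3


# Assumptions: 1 year = 365 days, 1 month = 30 days.
for label, days in [("DFT, > 1 year", 365.0),
                    ("existing equivariant MLFF, > 1 month", 30.0),
                    ("SO3krates, 2.5 days", 2.5)]:
    print(f"{label}: {ms_per_evaluation(days):.1f} ms per evaluation")
# → about 1051.2 ms, 86.4 ms and 7.2 ms, respectively
```

The first two figures are lower bounds because the text says "more than" a year and a month. Relative to 2.5 days, they correspond to factors of at least 146 and 12.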

In addition, SO3krates can identify physically valid minimum-energy conformations that are not included in the training data. The ability to extrapolate to unexplored parts of the PES is critical for scaling MLFFs to large structures, since the available ab initio reference data cover only subregions of conformationally rich structures.

The team also studied the effect of disabling equivariance in the network architecture. The aim was to better understand its influence on the model's properties and on its reliability in MD simulations.

The researchers found that equivariance is related to the stability of the resulting MD simulations and to extrapolation to higher temperatures. They also showed that, even when the average test errors are the same, equivariance reduces the spread of the error distribution.

Thus, using directional information via equivariant representation is similar in spirit to classical ML theory, where mapping to higher dimensions can yield richer feature spaces that are easier to parameterize.

Future Research

Several recent studies have proposed methods that reduce the computational complexity of SO(3) convolutions and can serve as replacements for full SO(3) convolutions. The method presented in this article, by contrast, avoids expensive SO(3) convolutions in the message-passing paradigm altogether.

These results all indicate that the optimization of equivariant interactions is an active research area that is not yet fully mature and may provide avenues for further improvements.

The team's work makes stable simulations over extended timescales possible within the modern MLFF modeling paradigm. Even so, further optimizations are still needed to bring the applicability of MLFFs closer to that of classical FFs.

Several promising avenues have emerged in this direction. In the current design, Euclidean variables (EVs) are defined solely in terms of two-body interactions. Accuracy could be further improved by incorporating the atomic cluster expansion into the message-passing (MP) step. This may also reduce the number of MP steps and thus the computational cost of the model.
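Here is a small numerical illustration of why products of two-body quantities carry higher body-order information. It assumes the simplest case of degree-1 Euclidean variables (EVs): cutoff-weighted sums of unit vectors from an atom to its neighbors, where the names and the cutoff are hypothetical. Each EV is built from two-body terms only. Its squared norm, however, expands into a double sum over neighbor pairs (j, k) weighted by cos(theta_jk), so it already contains three-body angular terms. The atomic cluster expansion systematizes this idea by forming products of such neighbor-density projections to reach higher body orders.

```python
import math


def cosine_cutoff(r, r_cut=5.0):
    """Smooth cutoff weight (hypothetical choice)."""
    return 0.5 * (math.cos(math.pi * r / r_cut) + 1.0) if r < r_cut else 0.0


def degree1_ev(center, neighbors):
    """Two-body degree-1 EV: x_i = sum_j w(r_ij) * unit vector from i to j."""
    x = [0.0, 0.0, 0.0]
    for p in neighbors:
        d = [p[k] - center[k] for k in range(3)]
        r = math.sqrt(sum(c * c for c in d))
        w = cosine_cutoff(r)
        for k in range(3):
            x[k] += w * d[k] / r
    return x


def three_body_sum(center, neighbors):
    """The same invariant written as an explicit sum over neighbor pairs (j, k)."""
    total = 0.0
    for pj in neighbors:
        for pk in neighbors:
            dj = [pj[a] - center[a] for a in range(3)]
            dk = [pk[a] - center[a] for a in range(3)]
            rj = math.sqrt(sum(c * c for c in dj))
            rk = math.sqrt(sum(c * c for c in dk))
            cos_jk = sum(a * b for a, b in zip(dj, dk)) / (rj * rk)
            total += cosine_cutoff(rj) * cosine_cutoff(rk) * cos_jk
    return total


center = (0.0, 0.0, 0.0)
neighbors = [(1.0, 0.1, 0.0), (-0.3, 1.2, 0.4), (0.2, -0.5, 1.1)]
x = degree1_ev(center, neighbors)
print(sum(c * c for c in x), three_body_sum(center, neighbors))  # equal up to rounding
```

The diagonal terms (j = k) of the double sum are purely two-body. The off-diagonal terms carry the angular, three-body information.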

Another open issue is the proper treatment of global effects. Candidate approaches include low-rank approximations, trainable Ewald summation, and long-range corrections learned in a physically inspired way. The latter type of approach is particularly important when extrapolation to larger systems is required.

While equivariant models can improve extrapolation of local interactions, this does not apply to interactions beyond the length scales present in the training data or beyond the effective cutoff of the model.

Since the above methods rely on local properties such as partial charges, electronegativities or Hirshfeld volumes, they can be integrated seamlessly into the team's method. This is done by learning the corresponding local descriptors in the invariant feature branch of the SO3krates architecture.
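The sketch below shows how a locally predicted property can feed a physically motivated long-range term. It takes hypothetical raw partial charges, standing in for outputs of an invariant feature branch, and shifts them uniformly so that they sum to the total molecular charge. It then evaluates a bare Coulomb sum. This is one common form of such a correction, not the SO3krates implementation. Practical models typically damp the short-range part and use Ewald summation for periodic systems.

```python
import math

COULOMB_EV_ANGSTROM = 14.399645  # e^2 / (4 pi eps0) in eV * Angstrom


def neutralize(charges, total_charge=0.0):
    """Shift predicted partial charges uniformly so they sum to the total charge."""
    shift = (sum(charges) - total_charge) / len(charges)
    return [q - shift for q in charges]


def coulomb_energy(positions, charges):
    """Bare pairwise Coulomb energy in eV (no damping, no periodic images)."""
    e = 0.0
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            r = math.dist(positions[i], positions[j])
            e += COULOMB_EV_ANGSTROM * charges[i] * charges[j] / r
    return e


# Hypothetical raw charges from an invariant feature branch; they do not sum to zero.
raw = [0.6, -0.4]
q = neutralize(raw)
print(q, coulomb_energy([(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)], q))
```

Because the correction depends only on distances and on invariant per-atom charges, it keeps the energy rotation invariant, so forces derived from it remain equivariant.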

Therefore, future work will focus on incorporating many-body expansions, global effects, and long-range interactions into the EV formalism, and aim to further improve computational efficiency and ultimately span MD timescales with high accuracy.

Paper link: https://www.nature.com/articles/s41467-024-50620-6

Related content: https://phys.org/news/2024-08-faster-coupling-ai-fundamental-physics.html

The above is the detailed content of Complete 1 year MD calculation in 2.5 days? DeepMind team's new calculation method based on Euclidean Transformer. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1664

1664

14

14

1423

1423

52

52

1317

1317

25

25

1268

1268

29

29

1243

1243

24

24

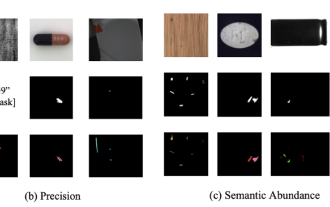

Breaking through the boundaries of traditional defect detection, 'Defect Spectrum' achieves ultra-high-precision and rich semantic industrial defect detection for the first time.

Jul 26, 2024 pm 05:38 PM

Breaking through the boundaries of traditional defect detection, 'Defect Spectrum' achieves ultra-high-precision and rich semantic industrial defect detection for the first time.

Jul 26, 2024 pm 05:38 PM

In modern manufacturing, accurate defect detection is not only the key to ensuring product quality, but also the core of improving production efficiency. However, existing defect detection datasets often lack the accuracy and semantic richness required for practical applications, resulting in models unable to identify specific defect categories or locations. In order to solve this problem, a top research team composed of Hong Kong University of Science and Technology Guangzhou and Simou Technology innovatively developed the "DefectSpectrum" data set, which provides detailed and semantically rich large-scale annotation of industrial defects. As shown in Table 1, compared with other industrial data sets, the "DefectSpectrum" data set provides the most defect annotations (5438 defect samples) and the most detailed defect classification (125 defect categories

Training with millions of crystal data to solve the crystallographic phase problem, the deep learning method PhAI is published in Science

Aug 08, 2024 pm 09:22 PM

Training with millions of crystal data to solve the crystallographic phase problem, the deep learning method PhAI is published in Science

Aug 08, 2024 pm 09:22 PM

Editor |KX To this day, the structural detail and precision determined by crystallography, from simple metals to large membrane proteins, are unmatched by any other method. However, the biggest challenge, the so-called phase problem, remains retrieving phase information from experimentally determined amplitudes. Researchers at the University of Copenhagen in Denmark have developed a deep learning method called PhAI to solve crystal phase problems. A deep learning neural network trained using millions of artificial crystal structures and their corresponding synthetic diffraction data can generate accurate electron density maps. The study shows that this deep learning-based ab initio structural solution method can solve the phase problem at a resolution of only 2 Angstroms, which is equivalent to only 10% to 20% of the data available at atomic resolution, while traditional ab initio Calculation

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

The open LLM community is an era when a hundred flowers bloom and compete. You can see Llama-3-70B-Instruct, QWen2-72B-Instruct, Nemotron-4-340B-Instruct, Mixtral-8x22BInstruct-v0.1 and many other excellent performers. Model. However, compared with proprietary large models represented by GPT-4-Turbo, open models still have significant gaps in many fields. In addition to general models, some open models that specialize in key areas have been developed, such as DeepSeek-Coder-V2 for programming and mathematics, and InternVL for visual-language tasks.

Google AI won the IMO Mathematical Olympiad silver medal, the mathematical reasoning model AlphaProof was launched, and reinforcement learning is so back

Jul 26, 2024 pm 02:40 PM

Google AI won the IMO Mathematical Olympiad silver medal, the mathematical reasoning model AlphaProof was launched, and reinforcement learning is so back

Jul 26, 2024 pm 02:40 PM

For AI, Mathematical Olympiad is no longer a problem. On Thursday, Google DeepMind's artificial intelligence completed a feat: using AI to solve the real question of this year's International Mathematical Olympiad IMO, and it was just one step away from winning the gold medal. The IMO competition that just ended last week had six questions involving algebra, combinatorics, geometry and number theory. The hybrid AI system proposed by Google got four questions right and scored 28 points, reaching the silver medal level. Earlier this month, UCLA tenured professor Terence Tao had just promoted the AI Mathematical Olympiad (AIMO Progress Award) with a million-dollar prize. Unexpectedly, the level of AI problem solving had improved to this level before July. Do the questions simultaneously on IMO. The most difficult thing to do correctly is IMO, which has the longest history, the largest scale, and the most negative

PRO | Why are large models based on MoE more worthy of attention?

Aug 07, 2024 pm 07:08 PM

PRO | Why are large models based on MoE more worthy of attention?

Aug 07, 2024 pm 07:08 PM

In 2023, almost every field of AI is evolving at an unprecedented speed. At the same time, AI is constantly pushing the technological boundaries of key tracks such as embodied intelligence and autonomous driving. Under the multi-modal trend, will the situation of Transformer as the mainstream architecture of AI large models be shaken? Why has exploring large models based on MoE (Mixed of Experts) architecture become a new trend in the industry? Can Large Vision Models (LVM) become a new breakthrough in general vision? ...From the 2023 PRO member newsletter of this site released in the past six months, we have selected 10 special interpretations that provide in-depth analysis of technological trends and industrial changes in the above fields to help you achieve your goals in the new year. be prepared. This interpretation comes from Week50 2023

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

Editor |ScienceAI Question Answering (QA) data set plays a vital role in promoting natural language processing (NLP) research. High-quality QA data sets can not only be used to fine-tune models, but also effectively evaluate the capabilities of large language models (LLM), especially the ability to understand and reason about scientific knowledge. Although there are currently many scientific QA data sets covering medicine, chemistry, biology and other fields, these data sets still have some shortcomings. First, the data form is relatively simple, most of which are multiple-choice questions. They are easy to evaluate, but limit the model's answer selection range and cannot fully test the model's ability to answer scientific questions. In contrast, open-ended Q&A

The accuracy rate reaches 60.8%. Zhejiang University's chemical retrosynthesis prediction model based on Transformer was published in the Nature sub-journal

Aug 06, 2024 pm 07:34 PM

The accuracy rate reaches 60.8%. Zhejiang University's chemical retrosynthesis prediction model based on Transformer was published in the Nature sub-journal

Aug 06, 2024 pm 07:34 PM

Editor | KX Retrosynthesis is a critical task in drug discovery and organic synthesis, and AI is increasingly used to speed up the process. Existing AI methods have unsatisfactory performance and limited diversity. In practice, chemical reactions often cause local molecular changes, with considerable overlap between reactants and products. Inspired by this, Hou Tingjun's team at Zhejiang University proposed to redefine single-step retrosynthetic prediction as a molecular string editing task, iteratively refining the target molecular string to generate precursor compounds. And an editing-based retrosynthetic model EditRetro is proposed, which can achieve high-quality and diverse predictions. Extensive experiments show that the model achieves excellent performance on the standard benchmark data set USPTO-50 K, with a top-1 accuracy of 60.8%.

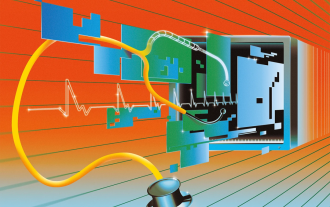

Nature's point of view: The testing of artificial intelligence in medicine is in chaos. What should be done?

Aug 22, 2024 pm 04:37 PM

Nature's point of view: The testing of artificial intelligence in medicine is in chaos. What should be done?

Aug 22, 2024 pm 04:37 PM

Editor | ScienceAI Based on limited clinical data, hundreds of medical algorithms have been approved. Scientists are debating who should test the tools and how best to do so. Devin Singh witnessed a pediatric patient in the emergency room suffer cardiac arrest while waiting for treatment for a long time, which prompted him to explore the application of AI to shorten wait times. Using triage data from SickKids emergency rooms, Singh and colleagues built a series of AI models that provide potential diagnoses and recommend tests. One study showed that these models can speed up doctor visits by 22.3%, speeding up the processing of results by nearly 3 hours per patient requiring a medical test. However, the success of artificial intelligence algorithms in research only verifies this